Ruijun Deng

InfoDecom: Decomposing Information for Defending against Privacy Leakage in Split Inference

Nov 17, 2025

Abstract:Split inference (SI) enables users to access deep learning (DL) services without directly transmitting raw data. However, recent studies reveal that data reconstruction attacks (DRAs) can recover the original inputs from the smashed data sent from the client to the server, leading to significant privacy leakage. While various defenses have been proposed, they often result in substantial utility degradation, particularly when the client-side model is shallow. We identify a key cause of this trade-off: existing defenses apply excessive perturbation to redundant information in the smashed data. To address this issue in computer vision tasks, we propose InfoDecom, a defense framework that first decomposes and removes redundant information and then injects noise calibrated to provide theoretically guaranteed privacy. Experiments demonstrate that InfoDecom achieves a superior utility-privacy trade-off compared to existing baselines. The code and the appendix are available at https://github.com/SASA-cloud/InfoDecom.

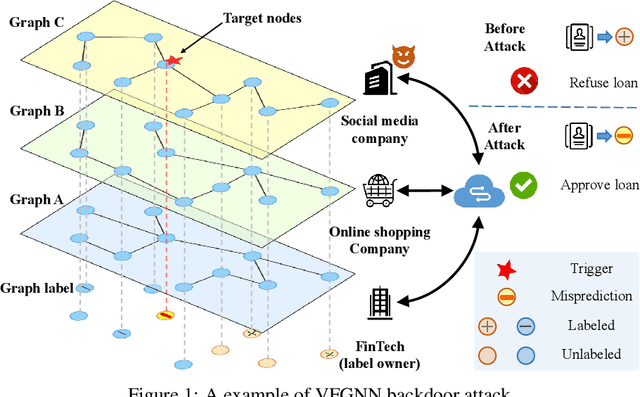

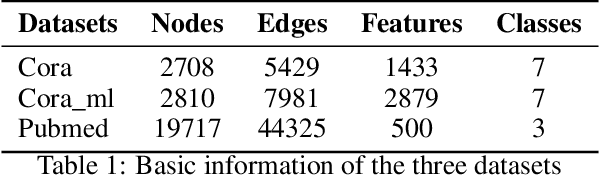

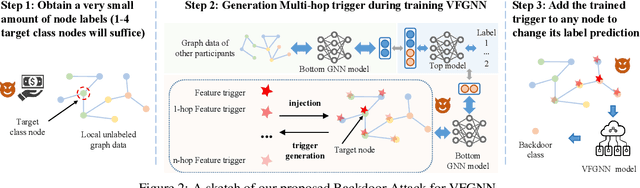

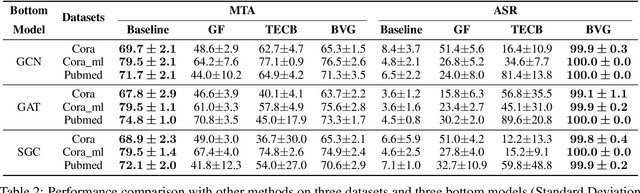

Backdoor Attack on Vertical Federated Graph Neural Network Learning

Oct 15, 2024

Abstract:Federated Graph Neural Network (FedGNN) is a privacy-preserving machine learning technology that combines federated learning (FL) and graph neural networks (GNNs). It offers a privacy-preserving solution for training GNNs using isolated graph data. Vertical Federated Graph Neural Network (VFGNN) is an important branch of FedGNN, where data features and labels are distributed among participants, and each participant has the same sample space. Due to the difficulty of accessing and modifying distributed data and labels, the vulnerability of VFGNN to backdoor attacks remains largely unexplored. In this context, we propose BVG, the first method for backdoor attacks in VFGNN. Without accessing or modifying labels, BVG uses multi-hop triggers and requires only four target class nodes for an effective backdoor attack. Experiments show that BVG achieves high attack success rates (ASR) across three datasets and three different GNN models, with minimal impact on main task accuracy (MTA). We also evaluate several defense methods, further validating the robustness and effectiveness of BVG. This finding also highlights the need for advanced defense mechanisms to counter sophisticated backdoor attacks in practical VFGNN applications.

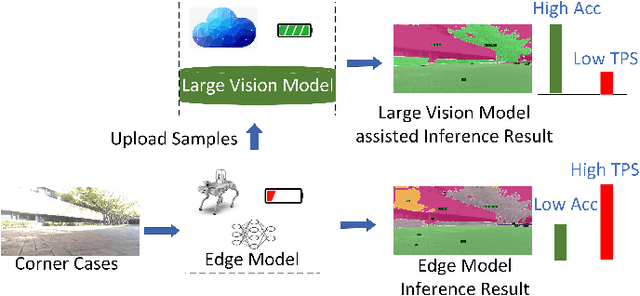

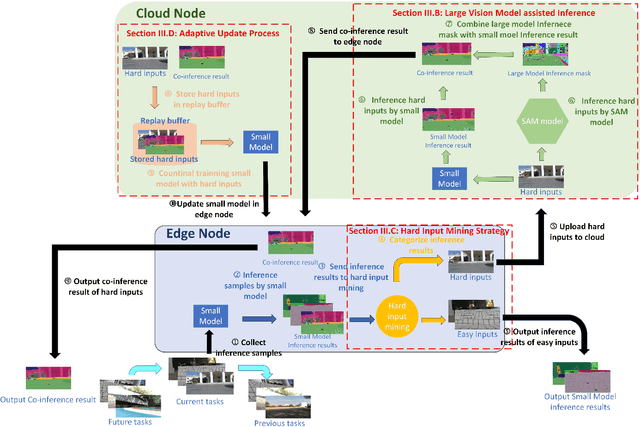

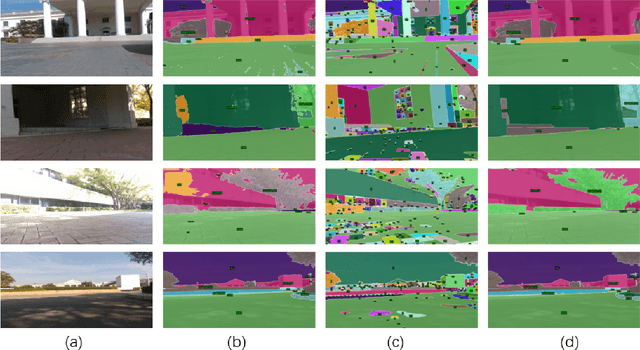

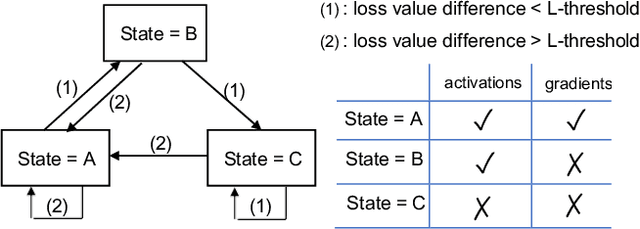

LAECIPS: Large Vision Model Assisted Adaptive Edge-Cloud Collaboration for IoT-based Perception System

Apr 16, 2024

Abstract:Recent large vision models (e.g., SAM) have great potential to facilitate intelligent perception with high accuracy. Yet resource constraints in IoT environments tend to prevent such large vision models from being deployed locally, incurring considerable inference latency and making it difficult to support real-time applications such as autonomous driving and robotics. Edge-cloud collaboration with large-small model co-inference offers a promising approach to achieving high inference accuracy and low latency. However, existing edge-cloud collaboration methods are tightly coupled with the model architecture and cannot adapt to the dynamic data drifts in heterogeneous IoT environments. To address these issues, we propose LAECIPS, a new edge-cloud collaboration framework. In LAECIPS, both the large vision model on the cloud and the lightweight model on the edge are plug-and-play. We design an edge-cloud collaboration strategy based on hard input mining, optimized for both high accuracy and low latency. We propose to update the edge model and its collaboration strategy with the cloud under the supervision of the large vision model, so as to adapt to the dynamic IoT data streams. Theoretical analysis of LAECIPS proves its feasibility. Experiments conducted in a robotic semantic segmentation system using real-world datasets show that LAECIPS outperforms its state-of-the-art competitors in accuracy, latency, and communication overhead while having better adaptability to dynamic environments.

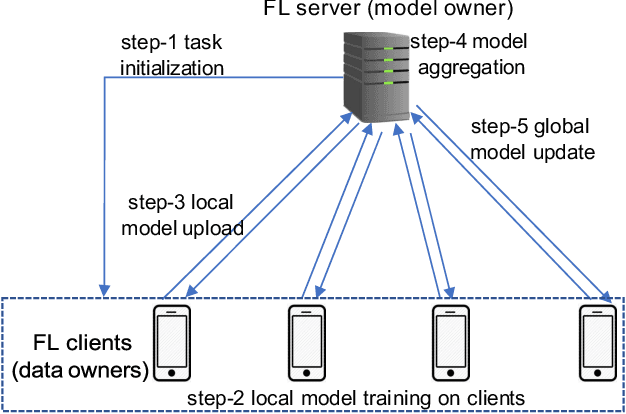

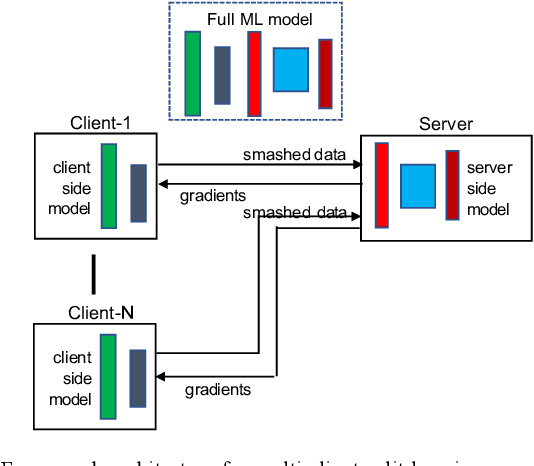

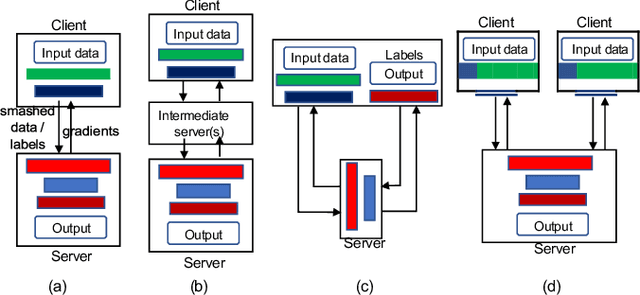

Combined Federated and Split Learning in Edge Computing for Ubiquitous Intelligence in Internet of Things: State of the Art and Future Directions

Jul 20, 2022

Abstract:Federated learning (FL) and split learning (SL) are two emerging collaborative learning methods that may greatly facilitate ubiquitous intelligence in the Internet of Things (IoT). Federated learning enables machine learning (ML) models locally trained using private data to be aggregated into a global model. Split learning allows different portions of an ML model to be collaboratively trained on different workers in a learning framework. Federated learning and split learning, each with unique advantages and respective limitations, may complement each other toward ubiquitous intelligence in IoT. Therefore, the combination of federated learning and split learning has recently become an active research area attracting extensive interest. In this article, we review the latest developments in federated learning and split learning and present a survey on the state-of-the-art technologies for combining these two learning methods in an edge computing-based IoT environment. We also identify some open problems and discuss possible directions for future research in this area, in the hope of further arousing the research community's interest in this emerging field.
