Backdoor Attack on Vertical Federated Graph Neural Network Learning


Oct 15, 2024

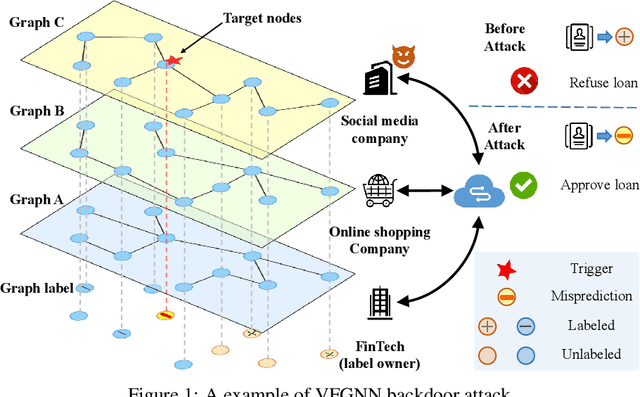

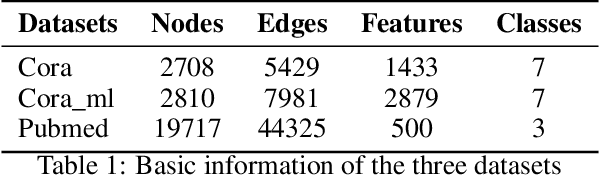

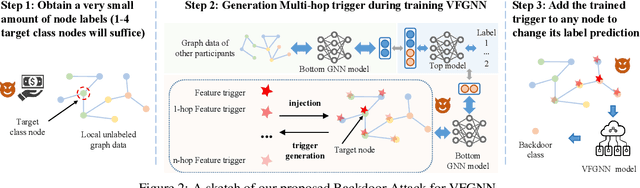

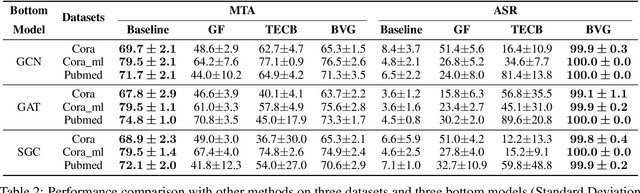

Federated Graph Neural Network (FedGNN) learning is a privacy-preserving machine learning technique that combines federated learning (FL) with graph neural networks (GNNs), allowing GNNs to be trained on isolated graph data. Vertical Federated Graph Neural Network (VFGNN) learning is an important branch of FedGNN in which features and labels are distributed among participants while all participants share the same sample space. Because distributed data and labels are difficult to access and modify, the vulnerability of VFGNN to backdoor attacks remains largely unexplored. In this context, we propose BVG, the first method for backdoor attacks in VFGNN. Without accessing or modifying labels, BVG uses multi-hop triggers and requires only four target-class nodes to mount an effective backdoor attack. Experiments show that BVG achieves high attack success rates (ASR) across three datasets and three different GNN models, with minimal impact on main task accuracy (MTA). We also evaluate several defense methods, further validating the robustness and effectiveness of BVG. These findings highlight the need for advanced defense mechanisms to counter sophisticated backdoor attacks in practical VFGNN applications.
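To make the two reported metrics concrete, the following is a minimal sketch of how ASR and MTA are commonly computed in backdoor evaluations. It is not taken from the paper: the function names, the toy predictions, and the choice to exclude nodes whose true label already equals the target class from the ASR denominator are assumptions made for illustration.

```python
from typing import Sequence


def main_task_accuracy(clean_preds: Sequence[int], labels: Sequence[int]) -> float:
    """MTA: fraction of clean (trigger-free) test nodes classified correctly."""
    if len(clean_preds) != len(labels):
        raise ValueError("predictions and labels must have the same length")
    if len(labels) == 0:
        raise ValueError("at least one test node is required")
    correct = sum(p == y for p, y in zip(clean_preds, labels))
    return correct / len(labels)


def attack_success_rate(
    triggered_preds: Sequence[int], labels: Sequence[int], target_class: int
) -> float:
    """ASR: fraction of triggered nodes predicted as the target class.

    Assumption: nodes whose true label already equals the target class are
    excluded, since predicting them as the target is not evidence of a backdoor.
    """
    if len(triggered_preds) != len(labels):
        raise ValueError("predictions and labels must have the same length")
    # Keep only nodes that do not genuinely belong to the target class.
    pairs = [(p, y) for p, y in zip(triggered_preds, labels) if y != target_class]
    if not pairs:
        raise ValueError("no non-target nodes to evaluate")
    hits = sum(p == target_class for p, _ in pairs)
    return hits / len(pairs)


# Hypothetical toy data: six test nodes, three classes, target class 2.
labels = [0, 1, 2, 1, 0, 2]
clean_preds = [0, 1, 2, 1, 0, 1]      # model output on clean inputs
triggered_preds = [2, 2, 2, 0, 2, 2]  # model output after trigger injection

mta = main_task_accuracy(clean_preds, labels)
asr = attack_success_rate(triggered_preds, labels, target_class=2)
print(f"MTA = {mta:.3f}, ASR = {asr:.3f}")
```

Under this convention, a successful and stealthy backdoor shows a high ASR on triggered inputs while the MTA on clean inputs stays close to that of an unattacked model.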
