Shih-Chia Huang

Experience of Training a 1.7B-Parameter LLaMa Model From Scratch

Dec 17, 2024

Abstract: Pretraining large language models is a complex endeavor influenced by multiple factors, including model architecture, data quality, training continuity, and hardware constraints. In this paper, we share insights gained from the experience of training DMaS-LLaMa-Lite, a fully open source, 1.7-billion-parameter, LLaMa-based model, on approximately 20 billion tokens of carefully curated data. We chronicle the full training trajectory, documenting how evolving validation loss levels and downstream benchmarks reflect transitions from incoherent text to fluent, contextually grounded output. Beyond standard quantitative metrics, we highlight practical considerations such as the importance of restoring optimizer states when resuming from checkpoints, and the impact of hardware changes on training stability and throughput. While qualitative evaluation provides an intuitive understanding of model improvements, our analysis extends to various performance benchmarks, demonstrating how high-quality data and thoughtful scaling enable competitive results with significantly fewer training tokens. By detailing these experiences and offering training logs, checkpoints, and sample outputs, we aim to guide future researchers and practitioners in refining their pretraining strategies. The training script is available on GitHub at https://github.com/McGill-DMaS/DMaS-LLaMa-Lite-Training-Code. The model checkpoints are available on Hugging Face at https://huggingface.co/collections/McGill-DMaS/dmas-llama-lite-6761d97ba903f82341954ceb.
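The point about restoring optimizer states can be illustrated with a toy example. The sketch below is not taken from the paper's training script; it assumes plain SGD with momentum on a one-dimensional quadratic, where the momentum buffer stands in for the optimizer state. All names (`train`, `checkpoint`, `gap_restored`, `gap_reset`) are hypothetical.

```python
# Toy illustration: SGD with momentum on f(w) = 0.5 * w**2 (gradient is w).
# The "optimizer state" here is just the momentum buffer; real optimizers
# such as AdamW carry analogous per-parameter moment estimates.

def train(w, velocity, steps, lr=0.1, beta=0.9):
    """Run `steps` momentum-SGD updates and return (w, velocity)."""
    for _ in range(steps):
        grad = w                          # d/dw of 0.5 * w**2
        velocity = beta * velocity + grad
        w = w - lr * velocity
    return w, velocity

# Reference run: 20 uninterrupted steps.
w_full, _ = train(w=5.0, velocity=0.0, steps=20)

# Checkpoint after 10 steps, saving both the weight and the optimizer state.
w_ckpt, v_ckpt = train(w=5.0, velocity=0.0, steps=10)
checkpoint = {"w": w_ckpt, "velocity": v_ckpt}

# Resume A: restore the weight and the optimizer state.
w_restored, _ = train(checkpoint["w"], checkpoint["velocity"], steps=10)

# Resume B: restore the weight only; the momentum buffer restarts at zero.
w_reset, _ = train(checkpoint["w"], 0.0, steps=10)

gap_restored = abs(w_restored - w_full)   # distance from the reference run
gap_reset = abs(w_reset - w_full)
```

In this toy setting, resuming with the saved momentum buffer reproduces the uninterrupted run, while resuming from the weight alone ends at a different point. How large the effect is in real LLM pretraining depends on the optimizer and schedule, which this sketch does not model.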

On the Effectiveness of Incremental Training of Large Language Models

Nov 27, 2024

Abstract: Training large language models is a computationally intensive process that often requires substantial resources to achieve state-of-the-art results. Incremental layer-wise training has been proposed as a potential strategy to optimize the training process by progressively introducing layers, with the expectation that this approach would lead to faster convergence and more efficient use of computational resources. In this paper, we investigate the effectiveness of incremental training for LLMs, dividing the training process into multiple stages where layers are added progressively. Our experimental results indicate that while the incremental approach initially demonstrates some computational efficiency, it ultimately incurs a greater overall computational cost to reach performance comparable to traditional full-scale training. Although the incremental training process can eventually close the performance gap with the baseline, it does so only after significantly extended continual training. These findings suggest that incremental layer-wise training may not be a viable alternative for training large language models, highlighting its limitations and providing valuable insights into the inefficiencies of this approach.

LAECIPS: Large Vision Model Assisted Adaptive Edge-Cloud Collaboration for IoT-based Perception System

Apr 16, 2024

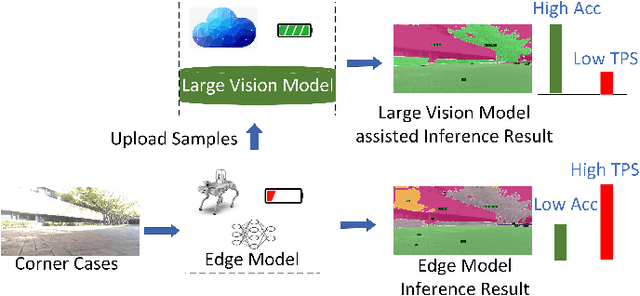

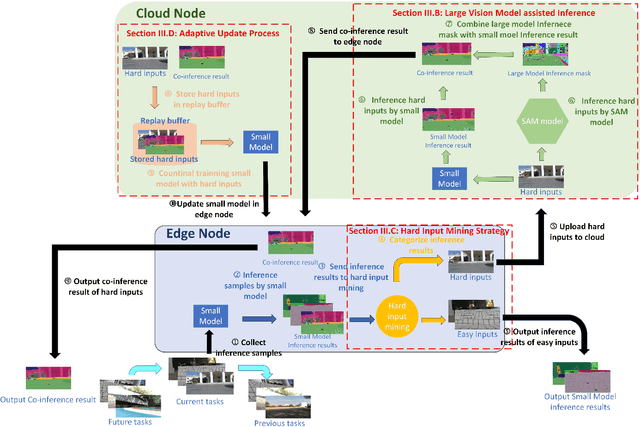

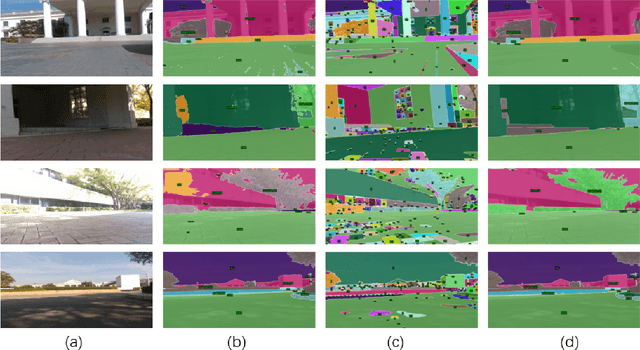

Abstract: Recent large vision models (e.g., SAM) have great potential to facilitate intelligent perception with high accuracy. Yet resource constraints in IoT environments tend to prevent such large vision models from being deployed locally, incurring considerable inference latency and making it difficult to support real-time applications such as autonomous driving and robotics. Edge-cloud collaboration with large-small model co-inference offers a promising approach to achieving high inference accuracy and low latency. However, existing edge-cloud collaboration methods are tightly coupled with the model architecture and cannot adapt to the dynamic data drifts in heterogeneous IoT environments. To address these issues, we propose LAECIPS, a new edge-cloud collaboration framework. In LAECIPS, both the large vision model on the cloud and the lightweight model on the edge are plug-and-play. We design an edge-cloud collaboration strategy based on hard input mining, optimized for both high accuracy and low latency. We propose to update the edge model and its collaboration strategy with the cloud under the supervision of the large vision model, so as to adapt to the dynamic IoT data streams. Theoretical analysis of LAECIPS proves its feasibility. Experiments conducted in a robotic semantic segmentation system using real-world datasets show that LAECIPS outperforms its state-of-the-art competitors in accuracy, latency, and communication overhead while having better adaptability to dynamic environments.
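The idea of routing only hard inputs to the cloud can be sketched as follows. The abstract does not state how LAECIPS decides that an input is hard, so this example assumes a simple confidence threshold on the edge model's output; `co_infer`, `edge_stub`, `cloud_stub`, and the threshold value are hypothetical stand-ins, not the paper's method.

```python
from typing import Callable, List, Tuple

Prediction = Tuple[str, float]        # (label, confidence in [0, 1])
Model = Callable[[str], Prediction]

def co_infer(inputs: List[str], edge_model: Model, cloud_model: Model,
             threshold: float = 0.8) -> List[Tuple[str, str]]:
    """Return (label, source) per input, escalating low-confidence inputs."""
    results = []
    for x in inputs:
        label, confidence = edge_model(x)
        if confidence >= threshold:
            # Easy input: keep the edge result and skip the network round trip.
            results.append((label, "edge"))
        else:
            # Hard input (assumed criterion): defer to the larger cloud model.
            cloud_label, _ = cloud_model(x)
            results.append((cloud_label, "cloud"))
    return results

# Stub models standing in for a lightweight edge model and a large cloud model.
def edge_stub(x: str) -> Prediction:
    return ("road", 0.95) if x.startswith("clear") else ("road", 0.40)

def cloud_stub(x: str) -> Prediction:
    return ("pedestrian", 0.99) if x.startswith("foggy") else ("road", 0.99)

routed = co_infer(["clear_01", "foggy_02"], edge_stub, cloud_stub)
```

Because the two models are passed in as plain callables, either one can be swapped without touching the routing logic, which loosely mirrors the plug-and-play property described in the abstract.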

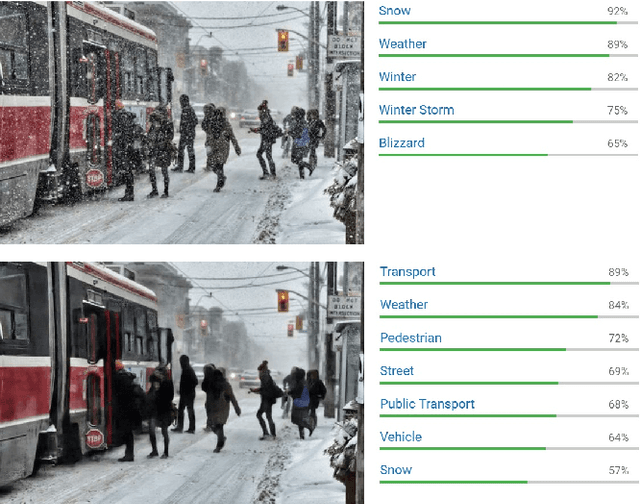

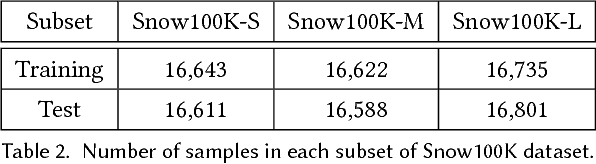

DesnowNet: Context-Aware Deep Network for Snow Removal

Aug 15, 2017

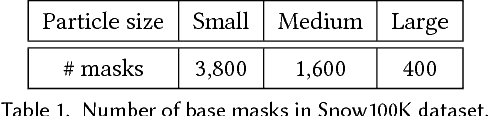

Abstract: Existing learning-based atmospheric particle-removal approaches such as those used for rainy and hazy images are designed with strong assumptions regarding spatial frequency, trajectory, and translucency. However, the removal of snow particles is more complicated because snow possesses the additional attributes of particle size and shape, and these attributes may vary within a single image. Currently, hand-crafted features are still the mainstream for snow removal, making significant generalization difficult to achieve. In response, we have designed a multistage network codenamed DesnowNet to deal in turn with the removal of translucent and opaque snow particles. We also differentiate snow into attributes of translucency and chromatic aberration for accurate estimation. Moreover, our approach individually estimates residual complements of the snow-free images to recover details obscured by opaque snow. Additionally, a multi-scale design is utilized throughout the entire network to model the diversity of snow. As demonstrated in experimental results, our approach outperforms state-of-the-art learning-based atmospheric phenomena removal methods and one semantic segmentation baseline on the proposed Snow100K dataset in both qualitative and quantitative comparisons. The results indicate our network would benefit applications involving computer vision and graphics.

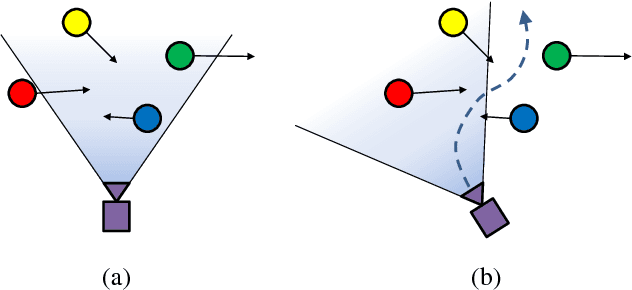

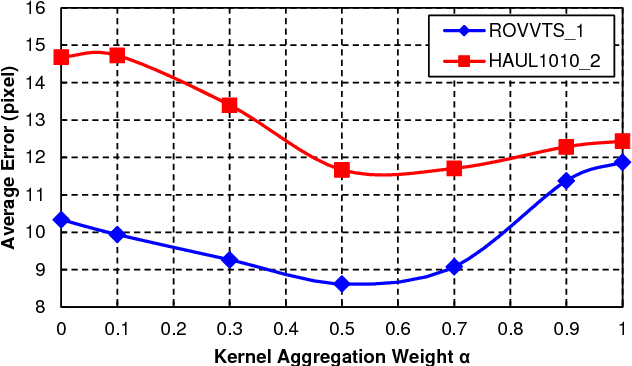

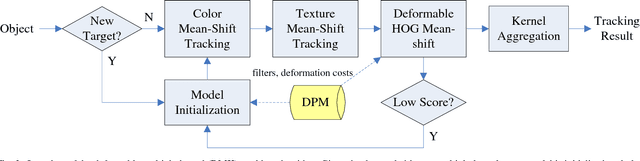

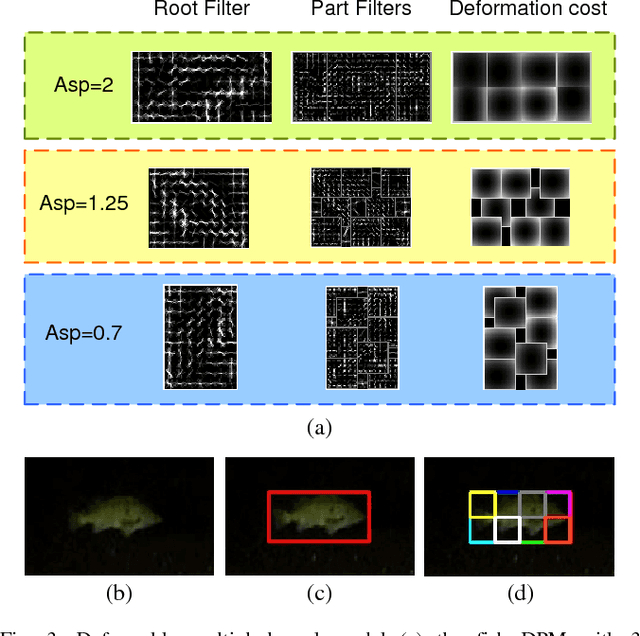

Underwater Fish Tracking for Moving Cameras based on Deformable Multiple Kernels

Mar 05, 2016

Abstract: Fishery surveys that call for the use of single or multiple underwater cameras have been an emerging technology as a non-extractive means to estimate the abundance of fish stocks. Tracking live fish in an open aquatic environment poses challenges that are different from general pedestrian or vehicle tracking in surveillance applications. In many rough habitats fish are monitored by cameras installed on moving platforms, where tracking is even more challenging due to the inapplicability of background models. In this paper, a novel tracking algorithm based on the deformable multiple kernels (DMK) is proposed to address these challenges. Inspired by the deformable part model (DPM) technique, a set of kernels is defined to represent the holistic object and several parts that are arranged in a deformable configuration. Color histogram, texture histogram and the histogram of oriented gradients (HOG) are extracted and serve as object features. Kernel motion is efficiently estimated by the mean-shift algorithm on color and texture features to realize tracking. Furthermore, the HOG-feature deformation costs are adopted as soft constraints on kernel positions to maintain the part configuration. Experimental results on a practical video set from underwater moving cameras show the reliable performance of the proposed method with much less computational cost compared with state-of-the-art techniques.
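The mean-shift step mentioned in the abstract can be illustrated in one dimension. This is a generic mean-shift sketch with a flat kernel, not the DMK tracker itself: in a tracker the weights would come from color and texture histogram similarity, whereas here they are supplied directly. The function name and parameters are hypothetical.

```python
def mean_shift_1d(points, weights, start, bandwidth=2.0, tol=1e-6, max_iter=100):
    """Move `start` to a local weighted mode of `points` (flat kernel)."""
    center = start
    for _ in range(max_iter):
        # Keep only the samples that fall inside the current kernel window.
        inside = [(p, w) for p, w in zip(points, weights)
                  if abs(p - center) <= bandwidth]
        total = sum(w for _, w in inside)
        if total == 0:
            break                         # empty window: nothing to shift toward
        new_center = sum(p * w for p, w in inside) / total
        shift = abs(new_center - center)
        center = new_center
        if shift < tol:
            break                         # converged
    return center

# Two clusters; starting near the right-hand one converges to its mean.
samples = [1.0, 2.0, 3.0, 10.0, 11.0, 12.0]
uniform = [1.0] * len(samples)
mode = mean_shift_1d(samples, uniform, start=9.0)
```

Each iteration replaces the kernel center with the weighted mean of the samples inside the window, so the center climbs toward a nearby density peak without an exhaustive search, which is what makes the approach inexpensive for frame-to-frame tracking.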
