LAECIPS: Large Vision Model Assisted Adaptive Edge-Cloud Collaboration for IoT-based Perception System

Paper and Code

Apr 16, 2024

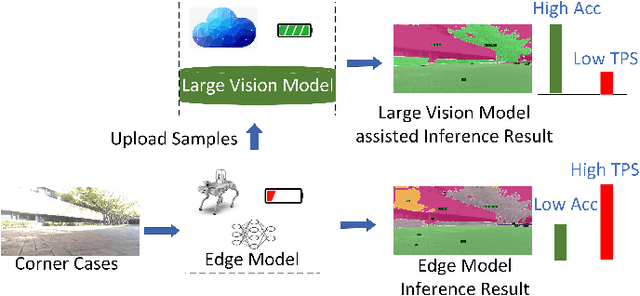

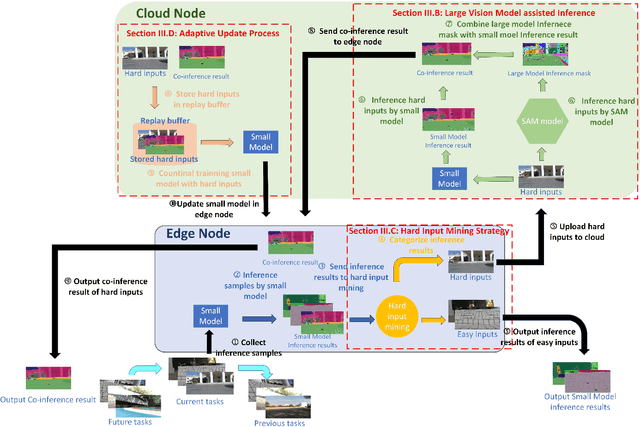

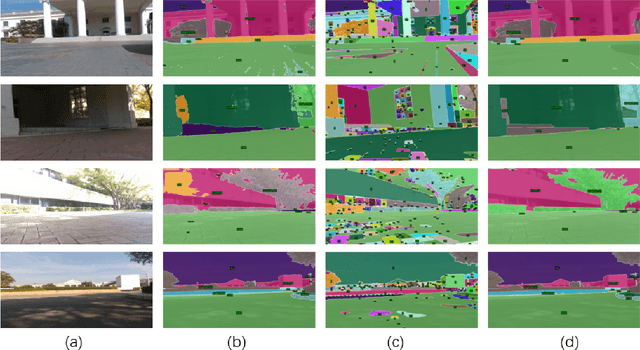

Recent large vision models (e.g., SAM) enjoy great potential to facilitate intelligent perception with high accuracy. Yet, the resource constraints in the IoT environment tend to limit such large vision models to be locally deployed, incurring considerable inference latency thereby making it difficult to support real-time applications, such as autonomous driving and robotics. Edge-cloud collaboration with large-small model co-inference offers a promising approach to achieving high inference accuracy and low latency. However, existing edge-cloud collaboration methods are tightly coupled with the model architecture and cannot adapt to the dynamic data drifts in heterogeneous IoT environments. To address the issues, we propose LAECIPS, a new edge-cloud collaboration framework. In LAECIPS, both the large vision model on the cloud and the lightweight model on the edge are plug-and-play. We design an edge-cloud collaboration strategy based on hard input mining, optimized for both high accuracy and low latency. We propose to update the edge model and its collaboration strategy with the cloud under the supervision of the large vision model, so as to adapt to the dynamic IoT data streams. Theoretical analysis of LAECIPS proves its feasibility. Experiments conducted in a robotic semantic segmentation system using real-world datasets show that LAECIPS outperforms its state-of-the-art competitors in accuracy, latency, and communication overhead while having better adaptability to dynamic environments.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge