Rongchan Zhu

Riemannian Neural Geodesic Interpolant

Apr 22, 2025

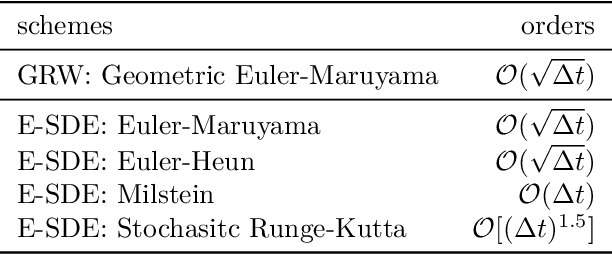

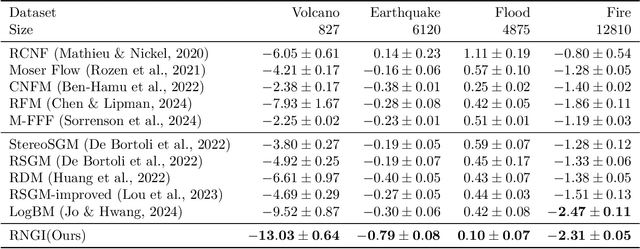

Abstract: Stochastic interpolants are efficient generative models that bridge two arbitrary probability density functions in finite time, enabling flexible generation from the source to the target distribution or vice versa. These models have primarily been developed in Euclidean space, which limits their application to the many real-world distribution learning problems defined on Riemannian manifolds. In this work, we introduce the Riemannian Neural Geodesic Interpolant (RNGI) model, which interpolates between two probability densities on a Riemannian manifold along stochastic geodesics, and then samples from one endpoint as the final state using the continuous flow originating from the other endpoint. We prove that the temporal marginal density of RNGI solves a transport equation on the Riemannian manifold. After training the model's neural velocity and score fields, we propose the Embedding Stochastic Differential Equation (E-SDE) algorithm for stochastic sampling of RNGI. E-SDE significantly improves the sampling quality by reducing the accumulated error caused by the excessive intrinsic discretization of Riemannian Brownian motion in the classical Geodesic Random Walk (GRW) algorithm. We also provide theoretical bounds on the generative bias measured in terms of KL-divergence. Finally, we demonstrate the effectiveness of the proposed RNGI and E-SDE through experiments conducted on both collected and synthetic distributions on S² and SO(3).
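As a rough illustration of interpolating along a geodesic on S², the sketch below computes a point on the great-circle arc between two unit vectors. It is a deterministic simplification, not the RNGI model: the abstract describes stochastic geodesics and learned velocity and score fields, none of which appear here. The function name `sphere_geodesic` and its arguments are hypothetical.

```python
import math

def sphere_geodesic(x0, x1, t):
    """Point at time t in [0, 1] on the minimizing geodesic from x0 to x1.

    x0 and x1 are assumed to be unit vectors in R^3 (points on S^2).
    This is the standard spherical linear interpolation formula.
    """
    # Clamp the inner product so rounding error cannot push acos out of range.
    dot = max(-1.0, min(1.0, sum(a * b for a, b in zip(x0, x1))))
    theta = math.acos(dot)  # geodesic distance between the endpoints
    if theta < 1e-12:
        return tuple(x0)  # endpoints coincide, nothing to interpolate
    if math.pi - theta < 1e-12:
        raise ValueError("antipodal points: the minimizing geodesic is not unique")
    s = math.sin(theta)
    w0 = math.sin((1.0 - t) * theta) / s
    w1 = math.sin(t * theta) / s
    return tuple(w0 * a + w1 * b for a, b in zip(x0, x1))

# Example: halfway between the north pole and a point on the equator.
north = (0.0, 0.0, 1.0)
east = (1.0, 0.0, 0.0)
midpoint = sphere_geodesic(north, east, 0.5)
```

The interpolated point stays on the sphere for every t, which is the property a straight-line Euclidean interpolation between the same endpoints would lose.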

Monte Carlo Neural Operator for Learning PDEs via Probabilistic Representation

Feb 10, 2023

Abstract: Neural operators, which use deep neural networks to approximate the solution mappings of partial differential equation (PDE) systems, are emerging as a new paradigm for PDE simulation. Neural operators can be trained in supervised or unsupervised ways, i.e., by using generated data or the PDE information. The unsupervised training approach is essential when data generation is costly or the data is of poor quality (e.g., insufficient or noisy). However, its performance and efficiency have plenty of room for improvement. To this end, we design a new loss function based on the Feynman-Kac formula and call the resulting neural operator the Monte-Carlo Neural Operator (MCNO), which allows larger temporal steps and efficiently handles fractional diffusion operators. Our analyses show that MCNO has advantages in handling complex spatial conditions and larger temporal steps compared with other unsupervised methods. Furthermore, MCNO is more robust to the perturbations introduced by the numerical scheme and operator approximation. Numerical experiments on the diffusion equation and Navier-Stokes equation show significant accuracy improvement compared with other unsupervised baselines, especially for the vibrated initial condition and long-time simulation settings.
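To make the probabilistic representation concrete, here is a minimal sketch of the Feynman-Kac formula for the one-dimensional diffusion equation ∂u/∂t = ν ∂²u/∂x², whose solution can be written as u(t, x) = E[u₀(x + √(2νt) Z)] with Z a standard normal variable. This is not the MCNO loss itself, only the Monte Carlo estimate such a loss could build on; the function name `heat_solution_mc` and its parameters are hypothetical.

```python
import math
import random

def heat_solution_mc(u0, x, t, nu, n_samples=100_000, seed=0):
    """Monte Carlo estimate of u(t, x) for du/dt = nu * d^2u/dx^2.

    Uses the Feynman-Kac representation u(t, x) = E[u0(x + sqrt(2*nu*t) * Z)],
    where Z is standard normal. No spatial mesh or derivatives are needed.
    """
    rng = random.Random(seed)  # fixed seed so the estimate is reproducible
    scale = math.sqrt(2.0 * nu * t)
    total = 0.0
    for _ in range(n_samples):
        total += u0(x + scale * rng.gauss(0.0, 1.0))
    return total / n_samples

# Example: for u0 = sin, the exact solution is exp(-nu * t) * sin(x).
nu, t, x = 0.1, 1.0, 1.0
estimate = heat_solution_mc(math.sin, x, t, nu)
exact = math.exp(-nu * t) * math.sin(x)
```

Because the estimate only evaluates the initial condition at random points, it places no smoothness requirement on u₀, and the time t enters in a single step rather than through many small increments.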

Deep Random Vortex Method for Simulation and Inference of Navier-Stokes Equations

Jun 20, 2022

Abstract: Navier-Stokes equations are significant partial differential equations that describe the motion of fluids such as liquids and air. Due to the importance of Navier-Stokes equations, the development of efficient numerical schemes is important for both science and engineering. Recently, with the development of AI techniques, several approaches have been designed to integrate deep neural networks in simulating and inferring the fluid dynamics governed by incompressible Navier-Stokes equations, which can accelerate the simulation or inference process in a mesh-free and differentiable way. In this paper, we point out that existing deep Navier-Stokes informed methods are limited in their ability to handle non-smooth or fractional equations, which are two critical situations in reality. To this end, we propose the Deep Random Vortex Method (DRVM), which combines the neural network with a random vortex dynamics system equivalent to the Navier-Stokes equation. Specifically, the random vortex dynamics motivates a Monte Carlo based loss function for training the neural network, which avoids the calculation of derivatives through auto-differentiation. Therefore, DRVM not only can efficiently solve Navier-Stokes equations involving rough paths, non-differentiable initial conditions, and fractional operators, but also inherits the mesh-free and differentiable benefits of deep-learning-based solvers. We conduct experiments on the Cauchy problem, parametric solver learning, and the inverse problem of both 2-d and 3-d incompressible Navier-Stokes equations. The proposed method achieves accurate results for simulation and inference of Navier-Stokes equations. In particular, for cases that include singular initial conditions, DRVM significantly outperforms the existing PINN method.

Neural Operator with Regularity Structure for Modeling Dynamics Driven by SPDEs

Apr 14, 2022

Abstract: Stochastic partial differential equations (SPDEs) are significant tools for modeling dynamics in many areas including atmospheric sciences and physics. Neural operators, generalizations of neural networks capable of learning maps between infinite-dimensional spaces, are strong tools for solving parametric PDEs. However, they lack the ability to model SPDEs, which usually have poor regularity due to the driving noise. As the theory of regularity structures has achieved great success in analyzing SPDEs and provides the concept of model feature vectors that approximate SPDEs' solutions well, we propose the Neural Operator with Regularity Structure (NORS), which incorporates the feature vectors for modeling dynamics driven by SPDEs. We conduct experiments on a variety of SPDEs including the dynamic Φ^4_1 model and the 2d stochastic Navier-Stokes equation, and the results demonstrate that NORS is resolution-invariant, efficient, and achieves one order of magnitude lower error with a modest amount of data.
