Robert Wille

Feature Identification for Hierarchical Contrastive Learning

Oct 01, 2025

Abstract: Hierarchical classification is a crucial task in many applications, where objects are organized into multiple levels of categories. However, conventional classification approaches often neglect inherent inter-class relationships at different hierarchy levels, thus missing important supervisory signals. We therefore propose two novel hierarchical contrastive learning (HMLC) methods. The first leverages a Gaussian Mixture Model (G-HMLC), and the second uses an attention mechanism to capture hierarchy-specific features (A-HMLC), imitating human processing. Our approach explicitly models inter-class relationships and imbalanced class distribution at higher hierarchy levels, enabling fine-grained clustering across all hierarchy levels. On the competitive CIFAR100 and ModelNet40 datasets, our method achieves state-of-the-art performance in linear evaluation, outperforming existing hierarchical contrastive learning methods by 2 percentage points in terms of accuracy. The effectiveness of our approach is backed by both quantitative and qualitative results, highlighting its potential for applications in computer vision and beyond.

GENIE-ASI: Generative Instruction and Executable Code for Analog Subcircuit Identification

Aug 26, 2025

Abstract: Analog subcircuit identification is a core task in analog design, essential for simulation, sizing, and layout. Traditional methods often require extensive human expertise, rule-based encoding, or large labeled datasets. To address these challenges, we propose GENIE-ASI, the first training-free, large language model (LLM)-based methodology for analog subcircuit identification. GENIE-ASI operates in two phases: it first uses in-context learning to derive natural language instructions from a few demonstration examples, then translates these into executable Python code to identify subcircuits in unseen SPICE netlists. In addition, to evaluate LLM-based approaches systematically, we introduce a new benchmark composed of operational amplifier (op-amp) netlists that cover a wide range of subcircuit variants. Experimental results on the proposed benchmark show that GENIE-ASI matches rule-based performance on simple structures (F1-score = 1.0), remains competitive on moderate abstractions (F1-score = 0.81), and shows potential even on complex subcircuits (F1-score = 0.31). These findings demonstrate that LLMs can serve as adaptable, general-purpose tools in analog design automation, opening new research directions for foundation model applications in this field.
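To give a feel for what "executable Python code to identify subcircuits" in a SPICE netlist can look like, here is a minimal hand-written sketch. It is not code produced by GENIE-ASI and not the paper's rule set: the function names, the toy netlist, and the simplified differential-pair rule (two MOSFETs of the same model sharing a non-rail source net, with distinct gates and drains) are all assumptions made for illustration.

```python
from itertools import combinations

# Net names treated as supply rails (an assumption for this toy example).
RAILS = {"0", "gnd", "vdd", "vss"}


def parse_mosfets(netlist: str):
    """Collect MOSFET lines of the form: Mname drain gate source bulk model [params]."""
    devices = []
    for line in netlist.splitlines():
        tokens = line.split()
        if len(tokens) >= 6 and tokens[0][0].upper() == "M":
            name, drain, gate, source, _bulk, model = tokens[:6]
            devices.append({"name": name, "d": drain, "g": gate, "s": source, "model": model})
    return devices


def find_differential_pairs(devices):
    """Return name pairs that satisfy the simplified differential-pair rule."""
    pairs = []
    for a, b in combinations(devices, 2):
        if (
            a["model"] == b["model"]            # same device type
            and a["s"] == b["s"]                # shared source (tail) net
            and a["s"].lower() not in RAILS     # tail must not be a supply rail
            and a["g"] != b["g"]                # two distinct inputs
            and a["d"] != b["d"]                # two distinct outputs
        ):
            pairs.append((a["name"], b["name"]))
    return pairs


# Hypothetical five-transistor OTA used only to exercise the rule.
NETLIST = """\
* toy five-transistor OTA
M1 n1 inp tail 0 nmos W=2u L=0.18u
M2 out inn tail 0 nmos W=2u L=0.18u
M3 n1 n1 vdd vdd pmos W=4u L=0.18u
M4 out n1 vdd vdd pmos W=4u L=0.18u
M5 tail bias 0 0 nmos W=4u L=0.18u
"""

if __name__ == "__main__":
    print(find_differential_pairs(parse_mosfets(NETLIST)))
```

On the toy netlist, only M1 and M2 satisfy the rule; the current-mirror devices M3 and M4 are rejected because they share a gate and their common source is a supply rail.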

TRIDE: A Text-assisted Radar-Image weather-aware fusion network for Depth Estimation

Aug 11, 2025

Abstract: Depth estimation, essential for autonomous driving, seeks to interpret the 3D environment surrounding vehicles. The development of radar sensors, known for their cost-efficiency and robustness, has spurred interest in radar-camera fusion-based solutions. However, existing algorithms fuse features from these modalities without accounting for weather conditions, despite radars being known to be more robust than cameras under adverse weather. Additionally, while Vision-Language models have seen rapid advancement, utilizing language descriptions alongside other modalities for depth estimation remains an open challenge. This paper first introduces a text-generation strategy along with feature extraction and fusion techniques that can assist monocular depth estimation pipelines, leading to improved accuracy across different algorithms on the KITTI dataset. Building on this, we propose TRIDE, a radar-camera fusion algorithm that enhances text feature extraction by incorporating radar point information. To address the impact of weather on sensor performance, we introduce a weather-aware fusion block that adaptively adjusts radar weighting based on current weather conditions. Our method, benchmarked on the nuScenes dataset, demonstrates performance gains over the state-of-the-art, achieving a 12.87% improvement in MAE and a 9.08% improvement in RMSE.

Code: https://github.com/harborsarah/TRIDE
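Several abstracts on this page report gains in MAE and RMSE. The sketch below shows how these two depth-error metrics are commonly computed over pixels with valid ground truth, and how a percentage gain could be derived. It assumes that "improvement" means the relative reduction in error against a baseline, which the abstract does not state explicitly. The function names and sample values are hypothetical.

```python
import math


def depth_errors(pred, gt):
    """Return (MAE, RMSE) over pixels whose ground-truth depth is valid (> 0)."""
    valid = [(p, g) for p, g in zip(pred, gt) if g > 0]
    mae = sum(abs(p - g) for p, g in valid) / len(valid)
    rmse = math.sqrt(sum((p - g) ** 2 for p, g in valid) / len(valid))
    return mae, rmse


def relative_improvement(baseline_error, new_error):
    """Percentage reduction in error relative to the baseline (assumed definition)."""
    return 100.0 * (baseline_error - new_error) / baseline_error


if __name__ == "__main__":
    # Hypothetical depths; a ground-truth value of 0.0 marks a pixel without a measurement.
    predicted = [10.0, 22.0, 5.0, 31.0]
    ground_truth = [12.0, 20.0, 0.0, 30.0]
    mae, rmse = depth_errors(predicted, ground_truth)
    print(f"MAE={mae:.3f} RMSE={rmse:.3f}")
    print(f"gain={relative_improvement(2.0, 1.5):.1f}%")
```

Because RMSE squares each error before averaging, it penalizes large outliers more heavily than MAE, which is one reason the two metrics are usually reported together.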

MoRAL: Motion-aware Multi-Frame 4D Radar and LiDAR Fusion for Robust 3D Object Detection

May 14, 2025

Abstract: Reliable autonomous driving systems require accurate detection of traffic participants. To this end, multi-modal fusion has emerged as an effective strategy. In particular, 4D radar and LiDAR fusion methods based on multi-frame radar point clouds have proven effective in bridging the point density gap. However, they often neglect radar point clouds' inter-frame misalignment caused by object movement during accumulation and do not fully exploit the object dynamic information from 4D radar. In this paper, we propose MoRAL, a motion-aware multi-frame 4D radar and LiDAR fusion framework for robust 3D object detection. First, a Motion-aware Radar Encoder (MRE) is designed to compensate for inter-frame radar misalignment from moving objects. Then, a Motion Attention Gated Fusion (MAGF) module integrates radar motion features to guide LiDAR features to focus on dynamic foreground objects. Extensive evaluations on the View-of-Delft (VoD) dataset demonstrate that MoRAL outperforms existing methods, achieving the highest mAP of 73.30% in the entire area and 88.68% in the driving corridor. Notably, our method also achieves the best AP of 69.67% for pedestrians in the entire area and 96.25% for cyclists in the driving corridor.

CaRaFFusion: Improving 2D Semantic Segmentation with Camera-Radar Point Cloud Fusion and Zero-Shot Image Inpainting

May 06, 2025

Abstract: Segmenting objects in an environment is a crucial task for autonomous driving and robotics, as it enables a better understanding of the surroundings of each agent. Although camera sensors provide rich visual details, they are vulnerable to adverse weather conditions. In contrast, radar sensors remain robust under such conditions, but often produce sparse and noisy data. Therefore, a promising approach is to fuse information from both sensors. In this work, we propose a novel framework to enhance camera-only baselines by integrating a diffusion model into a camera-radar fusion architecture. We leverage radar point features to create pseudo-masks using the Segment-Anything model, treating the projected radar points as point prompts. Additionally, we propose a noise reduction unit to denoise these pseudo-masks, which are further used to generate inpainted images that complete the missing information in the original images. Our method improves the camera-only segmentation baseline by 2.63% in mIoU and enhances our camera-radar fusion architecture by 1.48% in mIoU on the Waterscenes dataset. This demonstrates the effectiveness of our approach for semantic segmentation using camera-radar fusion under adverse weather conditions.

4D mmWave Radar in Adverse Environments for Autonomous Driving: A Survey

Mar 31, 2025

Abstract: Autonomous driving systems require accurate and reliable perception. However, adverse environments, such as rain, snow, and fog, can significantly degrade the performance of LiDAR and cameras. In contrast, 4D millimeter-wave (mmWave) radar not only provides 3D sensing and additional velocity measurements but also maintains robustness in challenging conditions, making it increasingly valuable for autonomous driving. Recently, research on 4D mmWave radar under adverse environments has been growing, but a comprehensive survey is still lacking. To bridge this gap, this survey comprehensively reviews the current research on 4D mmWave radar under adverse environments. First, we present an overview of existing 4D mmWave radar datasets encompassing diverse weather and lighting scenarios. Next, we analyze methods and models according to different adverse conditions. Finally, the challenges faced in current studies and potential future directions are discussed for advancing 4D mmWave radar applications in harsh environments. To the best of our knowledge, this is the first survey specifically focusing on 4D mmWave radar in adverse environments for autonomous driving.

MutualForce: Mutual-Aware Enhancement for 4D Radar-LiDAR 3D Object Detection

Jan 17, 2025

Abstract: Radar and LiDAR have been widely used in autonomous driving, as LiDAR provides rich structure information and radar demonstrates high robustness under adverse weather. Recent studies highlight the effectiveness of fusing radar and LiDAR point clouds. However, challenges remain due to modality misalignment and information loss during feature extraction. To address these issues, we propose a 4D radar-LiDAR framework to mutually enhance their representations. Initially, the indicative features from radar are utilized to guide both radar and LiDAR geometric feature learning. Subsequently, to mitigate their sparsity gap, the shape information from LiDAR is used to enrich radar BEV features. Extensive experiments on the View-of-Delft (VoD) dataset demonstrate our approach's superiority over existing methods, achieving the highest mAP of 71.76% across the entire area and 86.36% within the driving corridor. For cars in particular, we improve the AP by 4.17% and 4.20% due to the strong indicative features and symmetric shapes.

LiRCDepth: Lightweight Radar-Camera Depth Estimation via Knowledge Distillation and Uncertainty Guidance

Dec 20, 2024

Abstract: Recently, radar-camera fusion algorithms have gained significant attention, as radar sensors provide geometric information that compensates for the limitations of cameras. However, most existing radar-camera depth estimation algorithms focus solely on improving performance, often neglecting computational efficiency. To address this gap, we propose LiRCDepth, a lightweight radar-camera depth estimation model. We incorporate knowledge distillation to enhance the training process, transferring critical information from a complex teacher model to our lightweight student model in three key domains. First, low-level and high-level features are transferred by incorporating pixel-wise and pair-wise distillation. Additionally, we introduce an uncertainty-aware inter-depth distillation loss to refine intermediate depth maps during decoding. Leveraging our proposed knowledge distillation scheme, the lightweight model achieves a 6.6% improvement in MAE on the nuScenes dataset compared to the model trained without distillation.
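As a rough illustration of teacher-to-student distillation on dense outputs, the sketch below computes a pixel-wise feature loss and an uncertainty-weighted depth loss over flattened maps. It is not LiRCDepth's actual formulation: the abstract does not give the loss definitions, so the squared-error form, the `exp(-u)` weighting, and all function names are assumptions.

```python
import math


def pixelwise_distillation(student_feat, teacher_feat):
    """Mean squared difference between flattened student and teacher feature maps."""
    total = sum((s - t) ** 2 for s, t in zip(student_feat, teacher_feat))
    return total / len(student_feat)


def uncertainty_weighted_l1(student_depth, teacher_depth, uncertainty):
    """L1 depth gap where pixels with high teacher uncertainty count less.

    The exp(-u) weighting is a hypothetical choice made for this sketch.
    """
    weights = [math.exp(-u) for u in uncertainty]
    weighted = sum(
        w * abs(s - t) for w, s, t in zip(weights, student_depth, teacher_depth)
    )
    return weighted / sum(weights)


if __name__ == "__main__":
    print(pixelwise_distillation([1.0, 2.0], [1.0, 4.0]))
    print(uncertainty_weighted_l1([1.0, 2.0], [2.0, 2.0], [0.0, 0.0]))
```

With zero uncertainty everywhere, the weighted loss reduces to a plain mean absolute difference; as the uncertainty at a pixel grows, that pixel's contribution shrinks toward zero.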

GET-UP: GEomeTric-aware Depth Estimation with Radar Points UPsampling

Sep 02, 2024

Abstract: Depth estimation plays a pivotal role in autonomous driving, facilitating a comprehensive understanding of the vehicle's 3D surroundings. Radar, with its robustness to adverse weather conditions and capability to measure distances, has drawn significant interest for radar-camera depth estimation. However, existing algorithms process the inherently noisy and sparse radar data by projecting 3D points onto the image plane for pixel-level feature extraction, overlooking the valuable geometric information contained within the radar point cloud. To address this gap, we propose GET-UP, leveraging attention-enhanced Graph Neural Networks (GNNs) to exchange and aggregate both 2D and 3D information from radar data. Compared to traditional methods that rely only on 2D feature extraction, this approach enriches the feature representation by incorporating spatial relationships. Furthermore, we incorporate a point cloud upsampling task to densify the radar point cloud, rectify point positions, and derive additional 3D features under the guidance of LiDAR data. Finally, we fuse radar and camera features during the decoding phase for depth estimation. We benchmark our proposed GET-UP on the nuScenes dataset, achieving state-of-the-art performance with a 15.3% and 14.7% improvement in MAE and RMSE over the previously best-performing model.

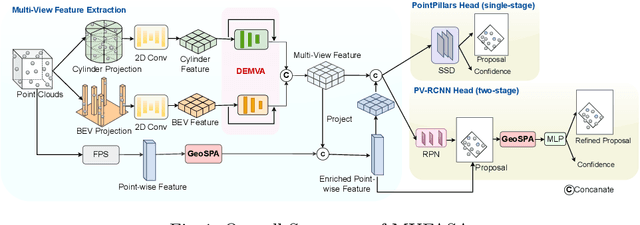

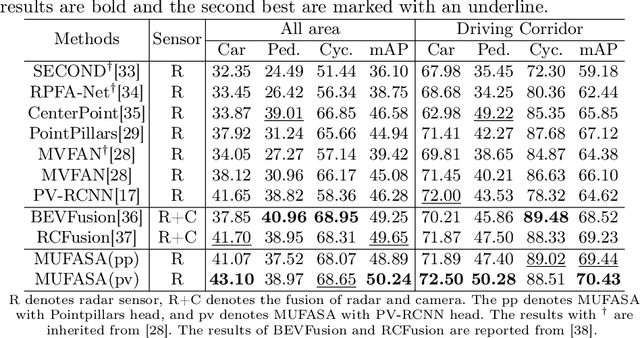

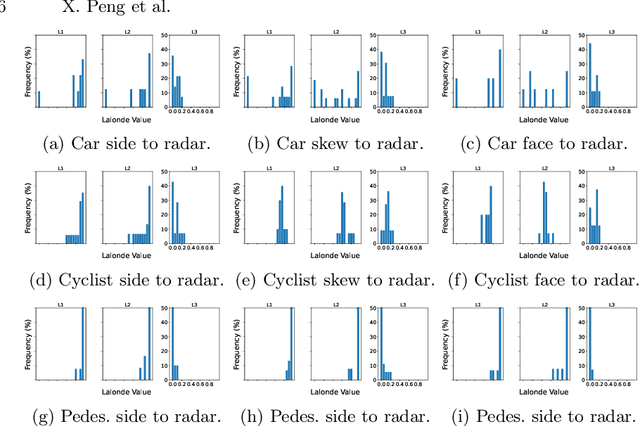

MUFASA: Multi-View Fusion and Adaptation Network with Spatial Awareness for Radar Object Detection

Aug 01, 2024

Abstract: In recent years, approaches based on radar object detection have made significant progress in autonomous driving systems due to their robustness under adverse weather compared to LiDAR. However, the sparsity of radar point clouds poses challenges in achieving precise object detection, highlighting the importance of effective and comprehensive feature extraction technologies. To address this challenge, this paper introduces a comprehensive feature extraction method for radar point clouds. This study first enhances the capability of detection networks by using a plug-and-play module, GeoSPA, which leverages the Lalonde features to explore local geometric patterns. Additionally, a distributed multi-view attention mechanism, DEMVA, is designed to integrate the shared information across the entire dataset with the global information of each individual frame. By employing the two modules, we present our method, MUFASA, which enhances object detection performance through improved feature extraction. The approach is evaluated on the VoD and TJ4DRaDSet datasets to demonstrate its effectiveness. In particular, we achieve state-of-the-art results among radar-based methods on the VoD dataset with an mAP of 50.24%.
