Robert Amelard

Enhancing Food Intake Tracking in Long-Term Care with Automated Food Imaging and Nutrient Intake Tracking Technology

Dec 08, 2021

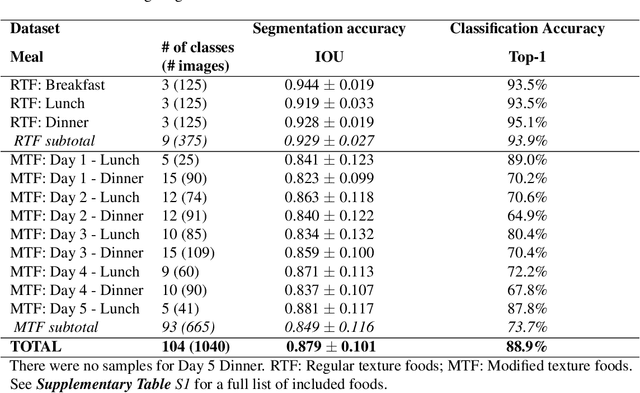

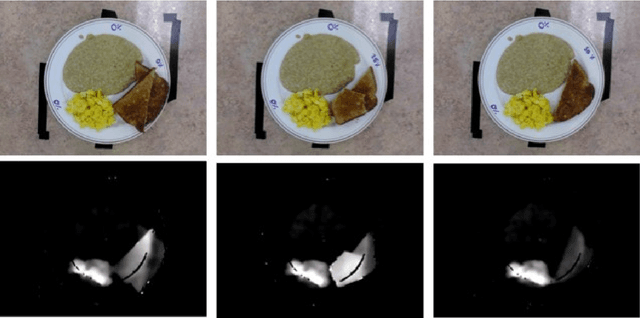

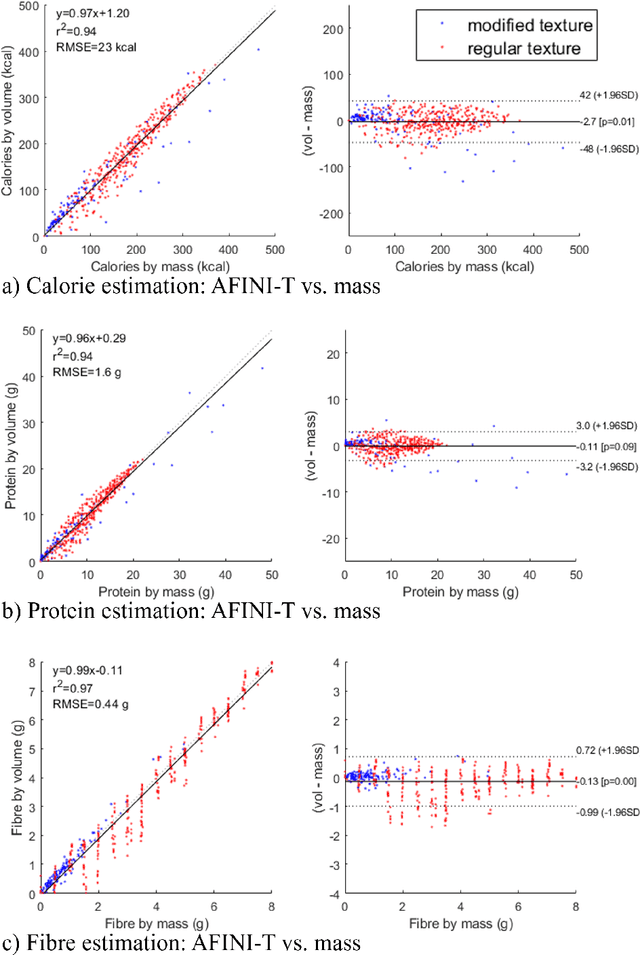

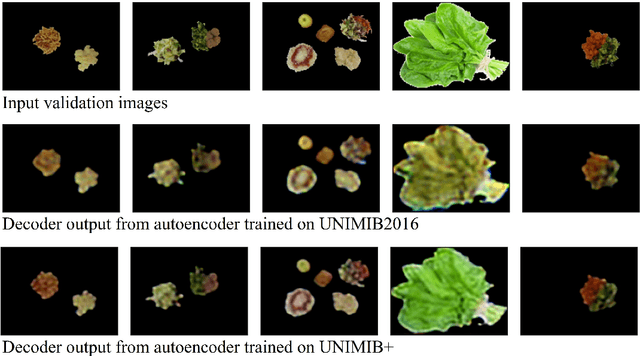

Abstract: Half of long-term care (LTC) residents are malnourished, which increases hospitalization, mortality, and morbidity and lowers quality of life. Current tracking methods are subjective and time-consuming. This paper presents the automated food imaging and nutrient intake tracking (AFINI-T) technology designed for LTC. We propose a novel convolutional autoencoder for food classification, trained on an augmented UNIMIB2016 dataset and tested on our simulated LTC food intake dataset (12 meal scenarios; up to 15 classes each; top-1 classification accuracy: 88.9%; mean intake error: -0.4 mL $\pm$ 36.7 mL). Nutrient intake estimation by volume was strongly linearly correlated with nutrient estimates from mass ($r^2$ 0.92 to 0.99) with good agreement between methods ($\sigma$ = -2.7 to -0.01; zero within each of the limits of agreement). The AFINI-T approach is a deep-learning powered computational nutrient sensing system that may provide a novel means for more accurately and objectively tracking LTC resident food intake to support malnutrition tracking and prevention strategies.
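
The agreement analysis mentioned in the abstract above (limits of agreement, with zero falling inside them) can be sketched as a Bland-Altman style calculation. This is a minimal illustration, not the authors' analysis code: the function name `bland_altman`, the 1.96 multiplier for the limits, and the paired values are all assumptions chosen for the example.

```python
from statistics import mean, stdev

def bland_altman(a, b):
    """Return (bias, lower_limit, upper_limit) for paired measurements.

    bias is the mean of the paired differences a - b; the limits of
    agreement are bias +/- 1.96 sample standard deviations (assumed here).
    """
    if len(a) != len(b) or len(a) < 2:
        raise ValueError("need two equally sized samples with at least 2 pairs")
    diffs = [x - y for x, y in zip(a, b)]
    bias = mean(diffs)
    spread = 1.96 * stdev(diffs)
    return bias, bias - spread, bias + spread

# Hypothetical paired nutrient estimates (arbitrary units), not study data.
volume_based = [10.2, 15.1, 7.9, 12.4, 9.8]
mass_based = [10.0, 15.6, 8.3, 12.0, 10.1]

bias, lower, upper = bland_altman(volume_based, mass_based)
zero_within_limits = lower <= 0.0 <= upper
print(f"bias={bias:.3f}, limits=({lower:.3f}, {upper:.3f}), zero inside: {zero_within_limits}")
```

When zero lies between the two limits, the data show no systematic offset between the two estimation methods at that confidence level.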

Temporal prediction of oxygen uptake dynamics from wearable sensors during low-, moderate-, and heavy-intensity exercise

May 20, 2021

Abstract:Oxygen consumption (VO$_2$) provides established clinical and physiological indicators of cardiorespiratory function and exercise capacity. However, VO$_2$ monitoring is largely limited to specialized laboratory settings, making its widespread monitoring elusive. Here, we investigate temporal prediction of VO$_2$ from wearable sensors during cycle ergometer exercise using a temporal convolutional network (TCN). Cardiorespiratory signals were acquired from a smart shirt with integrated textile sensors alongside ground-truth VO$_2$ from a metabolic system on twenty-two young healthy adults. Participants performed one ramp-incremental and three pseudorandom binary sequence exercise protocols to assess a range of VO$_2$ dynamics. A TCN model was developed using causal convolutions across an effective history length to model the time-dependent nature of VO$_2$. Optimal history length was determined through minimum validation loss across hyperparameter values. The best performing model encoded 218 s history length (TCN-VO$_2$ A), with 187 s, 97 s, and 76 s yielding less than 3% deviation from the optimal validation loss. TCN-VO$_2$ A showed strong prediction accuracy (mean, 95% CI) across all exercise intensities (-22 ml.min$^{-1}$, [-262, 218]), spanning transitions from low-moderate (-23 ml.min$^{-1}$, [-250, 204]), low-heavy (14 ml.min$^{-1}$, [-252, 280]), ventilatory threshold-heavy (-49 ml.min$^{-1}$, [-274, 176]), and maximal (-32 ml.min$^{-1}$, [-261, 197]) exercise. Second-by-second classification of physical activity across 16090 s of predicted VO$_2$ was able to discern between vigorous, moderate, and light activity with high accuracy (94.1%). This system enables quantitative aerobic activity monitoring in non-laboratory settings across a range of exercise intensities using wearable sensors for monitoring exercise prescription adherence and personal fitness.
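
The abstract above relies on causal convolutions that look back over an effective history length. The sketch below shows the general idea only and is not the TCN-VO$_2$ architecture: `causal_conv1d` and `receptive_field` are hypothetical helper names, and the kernel size, dilation schedule, and one-convolution-per-layer assumption are illustrative choices.

```python
def causal_conv1d(signal, kernel, dilation=1):
    """Causal dilated convolution: y[t] = sum_k kernel[k] * signal[t - k * dilation].

    Samples before the start of the signal are treated as zero, so the
    output at time t never depends on samples later than t.
    """
    out = []
    for t in range(len(signal)):
        acc = 0.0
        for k, w in enumerate(kernel):
            idx = t - k * dilation
            if idx >= 0:
                acc += w * signal[idx]
        out.append(acc)
    return out

def receptive_field(kernel_size, dilations):
    """History length in samples, assuming one dilated convolution per layer."""
    return 1 + (kernel_size - 1) * sum(dilations)

# Illustrative values only: kernel size 3 with doubling dilations.
history = receptive_field(3, [1, 2, 4, 8])   # 1 + 2 * 15 = 31 samples
smoothed = causal_conv1d([1, 2, 3, 4, 5], [0.5, 0.5], dilation=2)
print(history, smoothed)
```

At a hypothetical 1 Hz sampling rate, 31 samples would correspond to 31 s of history; longer histories come from deeper stacks or larger dilations.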

Fully-Automatic Semantic Segmentation for Food Intake Tracking in Long-Term Care Homes

Oct 24, 2019

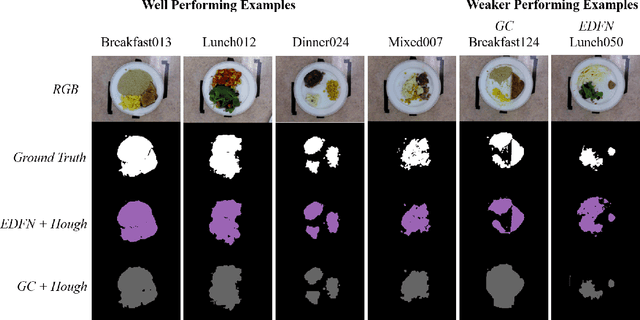

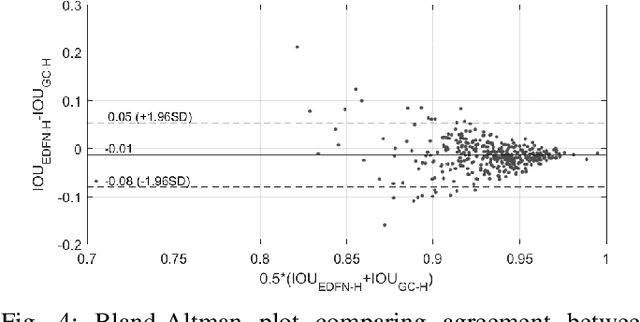

Abstract: Malnutrition impacts quality of life and places an annually recurring burden on the health care system. Half of older adults are at risk for malnutrition in long-term care (LTC). Monitoring and measuring nutritional intake is paramount yet involves time-consuming and subjective visual assessment, limiting current methods' reliability. The opportunity for automatic image-based estimation exists. Some progress outside LTC has been made (e.g., calories consumed, food classification); however, these methods have not been implemented in LTC, potentially due to a lack of ability to independently evaluate automatic segmentation methods within the intake estimation pipeline. Here, we propose and evaluate a novel fully-automatic semantic segmentation method for pixel-level classification of food on a plate using a deep convolutional neural network (DCNN). The macroarchitecture of the DCNN is a multi-scale encoder-decoder food network (EDFN) architecture comprising a residual encoder microarchitecture, a pyramid scene parsing decoder microarchitecture, and a specialized per-pixel food/no-food classification layer. The network was trained and validated on the pre-labelled UNIMIB 2016 food dataset (1027 tray images, 73 categories), and tested on our novel LTC plate dataset (390 plate images, 9 categories). Our fully-automatic segmentation method attained similar intersection over union to the semi-automatic graph cuts (91.2% vs. 93.7%). Advantages of our proposed system include testing on a novel dataset, decoupled error analysis, and no user-initiated annotations, with similar segmentation accuracy and enhanced reliability in terms of types of segmentation errors. This may address several shortcomings currently limiting the utility of automated food intake tracking in time-constrained LTC and hospital settings.
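
Intersection over union, the segmentation metric reported in the abstract above, can be computed for a binary food/no-food mask as follows. This is a minimal sketch: the function name `intersection_over_union`, the convention of returning 1.0 for two empty masks, and the tiny 3x3 masks are assumptions made for illustration.

```python
def intersection_over_union(pred, truth):
    """IoU of two binary masks given as equally sized nested lists of 0/1."""
    inter = 0
    union = 0
    for pred_row, truth_row in zip(pred, truth):
        for p, t in zip(pred_row, truth_row):
            if p and t:
                inter += 1
            if p or t:
                union += 1
    # Two empty masks agree perfectly; this convention is an assumption.
    return inter / union if union else 1.0

# Hypothetical 3x3 masks (1 = food pixel, 0 = background).
predicted = [[0, 1, 1],
             [0, 1, 1],
             [0, 0, 0]]
labelled = [[0, 1, 1],
            [0, 1, 0],
            [0, 1, 0]]

iou = intersection_over_union(predicted, labelled)
print(f"IoU = {iou:.2f}")
```

Here three pixels are shared and five are covered by either mask, so the score is 3/5.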

Assessing postural instability during cerebral hypoperfusion using sub-millimeter monocular 3D sway tracking

Jul 11, 2019

Abstract:Postural instability is prevalent in aging and neurodegenerative disease, decreasing quality of life and independence. Quantitatively monitoring balance control is important for assessing treatment efficacy and rehabilitation progress. However, existing technologies for assessing postural sway are complex and expensive, limiting their widespread utility. Here, we propose a monocular imaging system capable of assessing sub-millimeter 3D sway dynamics. By physically embedding anatomical targets with known \textit{a priori} geometric models, 3D central and upper body kinematic motion was automatically assessed through geometric feature tracking and 3D kinematic motion inverse estimation from a set of 2D frames. Sway was tracked in 3D and compared between control and hypoperfusion conditions. The proposed system demonstrated high agreement with a commercial motion capture system (error $4.4 \times 10^{-16} \pm 0.30$~mm, $r^2=0.9773$). Significant differences in sway dynamics were observed in early stance central anterior-posterior sway (control: $147.1 \pm 7.43$~mm, hypoperfusion: $177.8 \pm 15.3$~mm; $p=0.039$) and mid stance upper body coronal sway (control: $106.3 \pm 5.80$~mm, hypoperfusion: $128.1 \pm 18.4$~mm; $p=0.040$) commensurate with cerebral blood flow (CBF) perfusion deficit, followed by recovered sway dynamics during late stance governed by CBF recovery. This inexpensive single-camera system enables quantitative 3D sway monitoring for assessing neuromuscular balance control in weakly constrained environments.

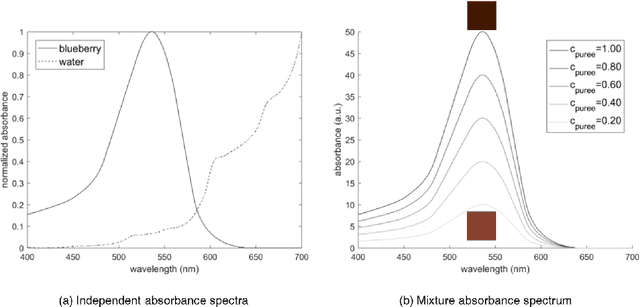

Towards computer vision powered color-nutrient assessment of pureed food

May 01, 2019

Abstract:With one in four individuals afflicted with malnutrition, computer vision may provide a way of introducing a new level of automation in the nutrition field to reliably monitor food and nutrient intake. In this study, we present a novel approach to modeling the link between color and vitamin A content using transmittance imaging of a pureed foods dilution series in a computer vision powered nutrient sensing system via a fine-tuned deep autoencoder network, which in this case was trained to predict the relative concentration of sweet potato purees. Experimental results show the deep autoencoder network can achieve an accuracy of 80% across beginner (6 month) and intermediate (8 month) commercially prepared pureed sweet potato samples. Prediction errors may be explained by fundamental differences in optical properties which are further discussed.

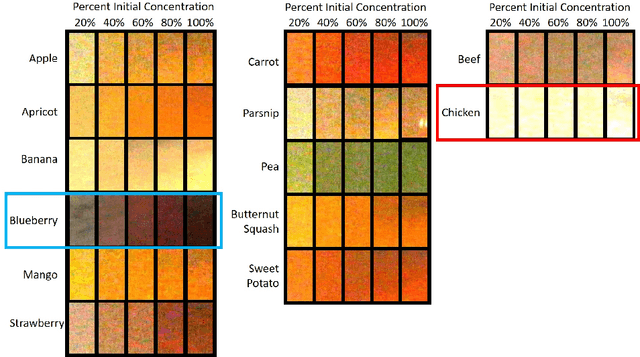

A new take on measuring relative nutritional density: The feasibility of using a deep neural network to assess commercially-prepared pureed food concentrations

Nov 03, 2017

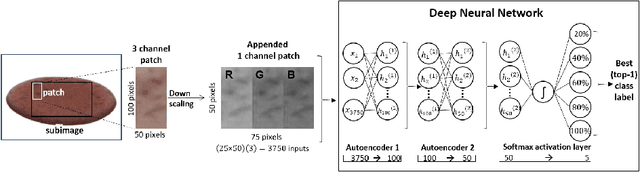

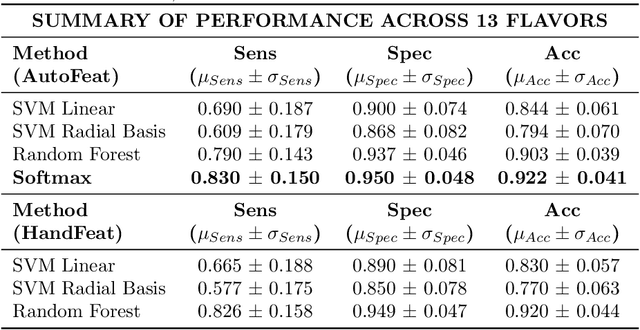

Abstract: Dysphagia affects 590 million people worldwide and increases risk for malnutrition. Pureed food may reduce choking; however, preparation differences impact nutrient density, making quality assurance necessary. This paper is the first study to investigate the feasibility of computational pureed food nutritional density analysis using an imaging system. Motivated by a theoretical optical dilution model, a novel deep neural network (DNN) was evaluated using 390 samples from thirteen types of commercially prepared purees at five dilutions. The DNN predicted relative concentration of the puree sample (20%, 40%, 60%, 80%, 100% initial concentration). Data were captured using same-side reflectance of multispectral imaging data at different polarizations at three exposures. Experimental results yielded an average top-1 prediction accuracy of 92.2 $\pm$ 0.41% with sensitivity and specificity of 83.0 $\pm$ 15.0% and 95.0 $\pm$ 4.8%, respectively. This DNN imaging system for nutrient density analysis of pureed food shows promise as a novel tool for nutrient quality assurance.
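
The three metrics quoted in the abstract above (top-1 accuracy, sensitivity, specificity) can be illustrated for a multi-class concentration classifier using a one-vs-rest breakdown. This is a sketch under assumptions: the helper names, the one-vs-rest convention, and the label lists are hypothetical and are not taken from the study.

```python
def top1_accuracy(y_true, y_pred):
    """Fraction of samples whose predicted class equals the true class."""
    correct = sum(1 for t, p in zip(y_true, y_pred) if t == p)
    return correct / len(y_true)

def sensitivity_specificity(y_true, y_pred, positive):
    """One-vs-rest sensitivity and specificity for the class `positive`."""
    tp = fp = tn = fn = 0
    for t, p in zip(y_true, y_pred):
        if t == positive and p == positive:
            tp += 1
        elif t == positive:
            fn += 1
        elif p == positive:
            fp += 1
        else:
            tn += 1
    sens = tp / (tp + fn) if (tp + fn) else float("nan")
    spec = tn / (tn + fp) if (tn + fp) else float("nan")
    return sens, spec

# Hypothetical relative-concentration labels (percent), not study data.
true_labels = [20, 20, 40, 40, 60, 60]
predictions = [20, 40, 40, 40, 60, 20]

accuracy = top1_accuracy(true_labels, predictions)
sens_20, spec_20 = sensitivity_specificity(true_labels, predictions, positive=20)
print(accuracy, sens_20, spec_20)
```

Averaging the per-class values over all dilution levels gives summary figures of the kind reported as a mean with a spread.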

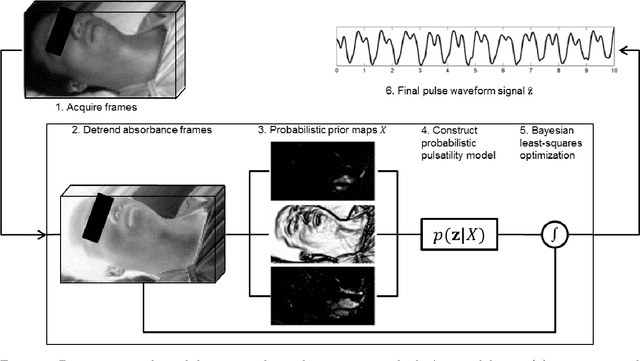

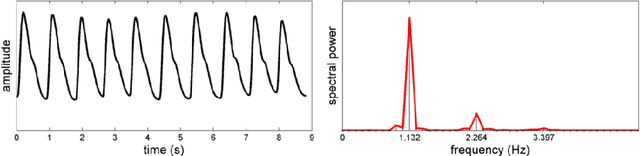

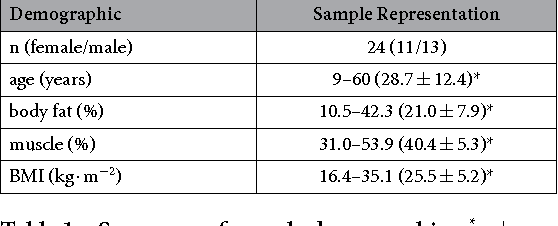

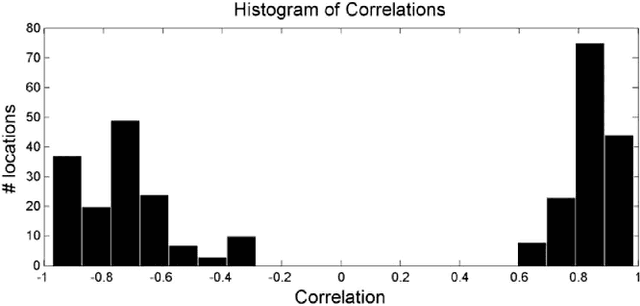

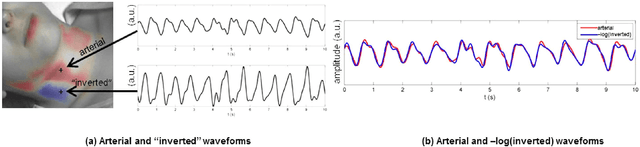

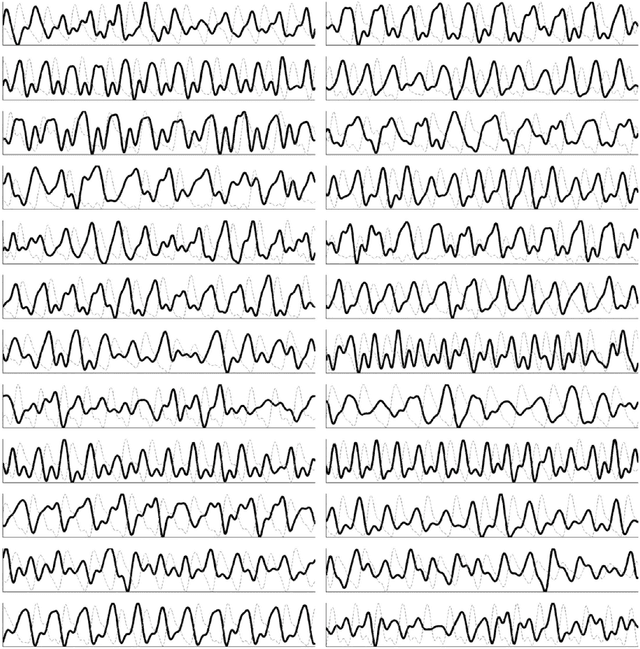

Spatial probabilistic pulsatility model for enhancing photoplethysmographic imaging systems

Jul 27, 2016

Abstract: Photoplethysmographic imaging (PPGI) is a widefield non-contact biophotonic technology able to remotely monitor cardiovascular function over anatomical areas. Though spatial context can provide increased physiological insight, existing PPGI systems rely on coarse spatial averaging with no anatomical priors for assessing arterial pulsatility. Here, we developed a continuous probabilistic pulsatility model for importance-weighted blood pulse waveform extraction. Using a data-driven approach, the model was constructed using a 23 participant sample with large demographic variation (11/12 female/male, age 11-60 years, BMI 16.4-35.1 kg$\cdot$m$^{-2}$). Using time-synchronized ground-truth waveforms, spatial correlation priors were computed and projected into a co-aligned importance-weighted Cartesian space. A modified Parzen-Rosenblatt kernel density estimation method was used to compute the continuous resolution-agnostic probabilistic pulsatility model. The model identified locations that consistently exhibited pulsatility across the sample. Blood pulse waveform signals extracted with the model exhibited significantly stronger temporal correlation ($W=35,p<0.01$) and spectral SNR ($W=31,p<0.01$) compared to uniform spatial averaging. Heart rate estimation was in strong agreement with true heart rate ($r^2=0.9619$, error $(\mu,\sigma)=(0.52,1.69)$ bpm).
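
An importance-weighted Parzen-Rosenblatt (kernel density) estimate of the kind named in the abstract above can be sketched in two dimensions as shown here. The abstract does not specify the modification the authors made, so this is a plain weighted estimator with an isotropic Gaussian kernel; the function name `weighted_kde`, the bandwidth, and the sample points are assumptions for illustration.

```python
import math

def weighted_kde(points, weights, bandwidth):
    """Build a continuous 2D density from weighted sample locations.

    Each point contributes an isotropic Gaussian scaled by its weight;
    the result is normalised so the density integrates to one.
    """
    total = sum(weights)
    if total <= 0 or bandwidth <= 0:
        raise ValueError("weights must sum to a positive value and bandwidth must be positive")
    norm = 1.0 / (2.0 * math.pi * bandwidth ** 2 * total)

    def density(x, y):
        acc = 0.0
        for (px, py), w in zip(points, weights):
            dist_sq = (x - px) ** 2 + (y - py) ** 2
            acc += w * math.exp(-dist_sq / (2.0 * bandwidth ** 2))
        return norm * acc

    return density

# Hypothetical pixel locations with correlation-derived importance weights.
locations = [(0.0, 0.0), (5.0, 5.0)]
importance = [0.9, 0.1]
pulsatility = weighted_kde(locations, importance, bandwidth=1.0)
print(pulsatility(0.0, 0.0), pulsatility(5.0, 5.0))
```

Because the estimate is a continuous function of position, it can be evaluated at any pixel grid, which is one way to read the "resolution-agnostic" property described in the abstract.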

A spectral-spatial fusion model for robust blood pulse waveform extraction in photoplethysmographic imaging

Jun 29, 2016

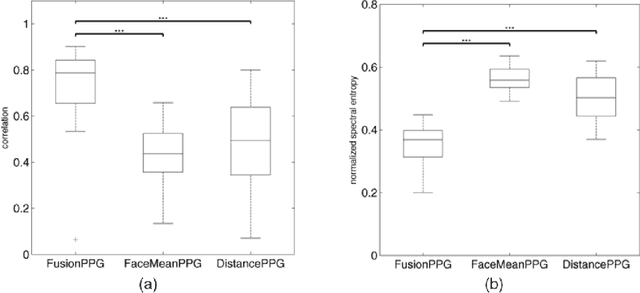

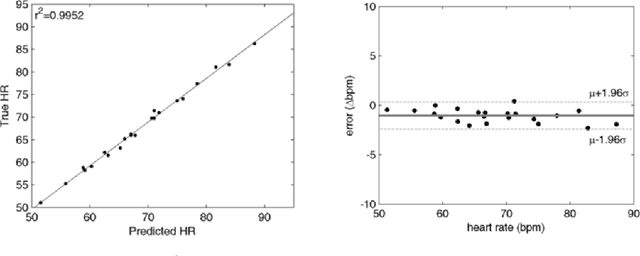

Abstract: Photoplethysmographic imaging is a camera-based solution for non-contact cardiovascular monitoring from a distance. This technology enables monitoring in situations where contact-based devices may be problematic or infeasible, such as ambulatory, sleep, and multi-individual monitoring. However, extracting the blood pulse waveform signal is challenging due to the unknown mixture of relevant (pulsatile) and irrelevant pixels in the scene. Here, we design and implement a signal fusion framework, FusionPPG, for extracting a blood pulse waveform signal with strong temporal fidelity from a scene without requiring anatomical priors (e.g., facial tracking). The extraction problem is posed as a Bayesian least squares fusion problem, and solved using a novel probabilistic pulsatility model that incorporates both physiologically derived spectral and spatial waveform priors to identify pulsatility characteristics in the scene. Experimental results show statistically significant improvements compared to the FaceMeanPPG ($p<0.001$) and DistancePPG ($p<0.001$) methods. Heart rates predicted using FusionPPG correlated strongly with ground truth measurements ($r^2=0.9952$). FusionPPG was the only method able to assess cardiac arrhythmia via temporal analysis.

Non-contact hemodynamic imaging reveals the jugular venous pulse waveform

Apr 21, 2016

Abstract:Cardiovascular monitoring is important to prevent diseases from progressing. The jugular venous pulse (JVP) waveform offers important clinical information about cardiac health, but is not routinely examined due to its invasive catheterisation procedure. Here, we demonstrate for the first time that the JVP can be consistently observed in a non-contact manner using a novel light-based photoplethysmographic imaging system, coded hemodynamic imaging (CHI). While traditional monitoring methods measure the JVP at a single location, CHI's wide-field imaging capabilities were able to observe the jugular venous pulse's spatial flow profile for the first time. The important inflection points in the JVP were observed, meaning that cardiac abnormalities can be assessed through JVP distortions. CHI provides a new way to assess cardiac health through non-contact light-based JVP monitoring, and can be used in non-surgical environments for cardiac assessment.

Non-contact transmittance photoplethysmographic imaging (PPGI) for long-distance cardiovascular monitoring

Mar 23, 2015

Abstract: Photoplethysmography (PPG) devices are widely used for monitoring cardiovascular function. However, these devices require skin contact, which restricts their use to at-rest short-term monitoring using single-point measurements. Photoplethysmographic imaging (PPGI) has been recently proposed as a non-contact monitoring alternative by measuring blood pulse signals across a spatial region of interest. Existing systems operate in reflectance mode, of which many are limited to short-distance monitoring and are prone to temporal changes in ambient illumination. This paper is the first study to investigate the feasibility of long-distance non-contact cardiovascular monitoring at the supermeter level using transmittance PPGI. For this purpose, a novel PPGI system was designed at the hardware and software level using ambient correction via temporally coded illumination (TCI) and signal processing for PPGI signal extraction. Experimental results show that the processing steps yield a substantially more pulsatile PPGI signal than the raw acquired signal, resulting in statistically significant increases in correlation to ground-truth PPG in both short- ($p \in [<0.0001, 0.040]$) and long-distance ($p \in [<0.0001, 0.056]$) monitoring. The results support the hypothesis that long-distance heart rate monitoring is feasible using transmittance PPGI, allowing for new possibilities of monitoring cardiovascular function in a non-contact manner.
