A spectral-spatial fusion model for robust blood pulse waveform extraction in photoplethysmographic imaging


Jun 29, 2016

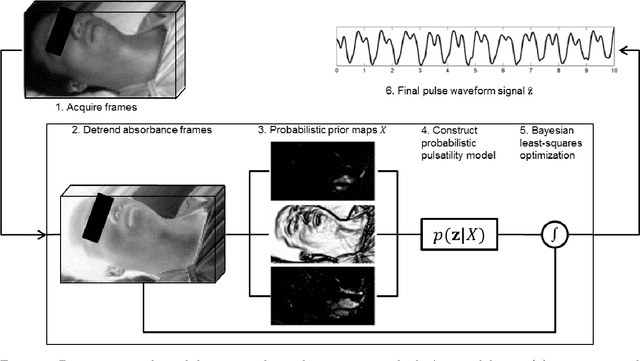

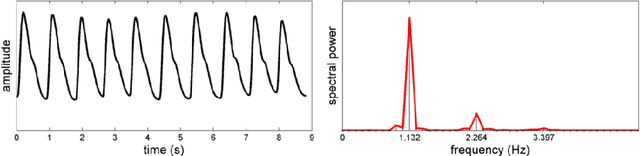

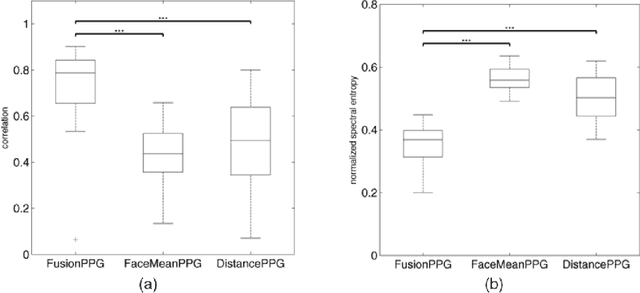

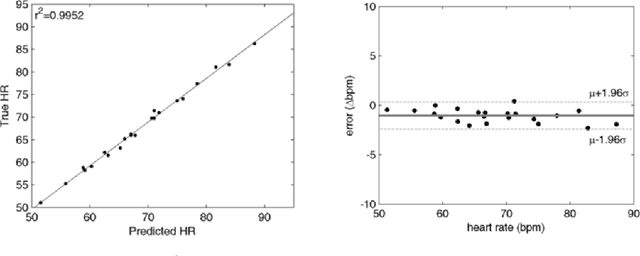

Photoplethysmographic imaging is a camera-based solution for non-contact cardiovascular monitoring from a distance. This technology enables monitoring in situations where contact-based devices may be problematic or infeasible, such as ambulatory, sleep, and multi-individual monitoring. However, extracting the blood pulse waveform signal is challenging because the scene contains an unknown mixture of relevant (pulsatile) and irrelevant pixels. Here, we design and implement a signal fusion framework, FusionPPG, for extracting a blood pulse waveform signal with strong temporal fidelity from a scene without requiring anatomical priors (e.g., facial tracking). The extraction problem is posed as a Bayesian least squares fusion problem and solved using a novel probabilistic pulsatility model that incorporates both physiologically derived spectral and spatial waveform priors to identify pulsatility characteristics in the scene. Experimental results show statistically significant improvements over the FaceMeanPPG ($p<0.001$) and DistancePPG ($p<0.001$) methods. Heart rates predicted using FusionPPG correlated strongly with ground truth measurements ($r^2=0.9952$). FusionPPG was the only method able to assess cardiac arrhythmia via temporal analysis.
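To make the fusion idea concrete, the following is a minimal sketch and not the FusionPPG model itself. A Bayesian least squares estimate is a posterior mean, so the sketch stands in a normalized weighted average of per-region signals for it. The weights are an assumed, much simpler substitute for the probabilistic pulsatility model: the fraction of each region's spectral power inside an assumed heart-rate band of 0.7 to 3.0 Hz. No spatial prior is modeled. The function names (`spectral_weights`, `fuse`, `dominant_rate_bpm`), the band limits, and the synthetic data are all hypothetical choices made for illustration.

```python
import numpy as np

def spectral_weights(signals, fs, band=(0.7, 3.0)):
    """Weight each region by the fraction of its power in an assumed heart-rate band.

    This is an illustrative stand-in, not the paper's pulsatility model.
    signals: array of shape (n_regions, n_samples); fs: sampling rate in Hz.
    """
    centered = signals - signals.mean(axis=1, keepdims=True)
    power = np.abs(np.fft.rfft(centered, axis=1)) ** 2
    freqs = np.fft.rfftfreq(signals.shape[1], d=1.0 / fs)
    in_band = (freqs >= band[0]) & (freqs <= band[1])
    ratio = power[:, in_band].sum(axis=1) / np.maximum(power.sum(axis=1), 1e-12)
    return ratio / ratio.sum()  # normalize so the weights sum to 1

def fuse(signals, weights):
    """Weighted average of the mean-removed region signals."""
    centered = signals - signals.mean(axis=1, keepdims=True)
    return weights @ centered

def dominant_rate_bpm(signal, fs, band=(0.7, 3.0)):
    """Frequency of the strongest in-band spectral peak, in beats per minute."""
    power = np.abs(np.fft.rfft(signal)) ** 2
    freqs = np.fft.rfftfreq(signal.size, d=1.0 / fs)
    in_band = (freqs >= band[0]) & (freqs <= band[1])
    return 60.0 * freqs[in_band][np.argmax(power[in_band])]

# Synthetic scene (assumed values): 3 pulsatile regions and 5 noise-only regions.
fs = 30.0                                  # camera frame rate in Hz
t = np.arange(600) / fs                    # 20 seconds of frames
pulse = np.sin(2 * np.pi * 1.2 * t)        # 1.2 Hz pulse, i.e. 72 beats per minute
rng = np.random.default_rng(0)
pulsatile = pulse + 0.5 * rng.standard_normal((3, t.size))
background = rng.standard_normal((5, t.size))
signals = np.vstack([pulsatile, background])

weights = spectral_weights(signals, fs)
fused = fuse(signals, weights)
print("weights:", np.round(weights, 3))
print("estimated heart rate (bpm):", dominant_rate_bpm(fused, fs))
```

In this toy setting the pulsatile regions receive larger weights than the noise-only regions, so the fused waveform is dominated by the pulse without any face detection or tracking. A band-power weight alone cannot separate a true pulse from other periodic in-band motion, which is one reason a fuller model would also need spatial and waveform-shape priors.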
