Braeden Syrnyk

Dual Attention Network for Heart Rate and Respiratory Rate Estimation

Oct 31, 2021

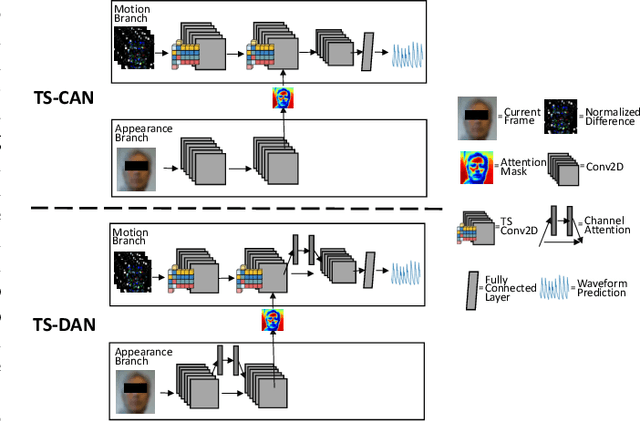

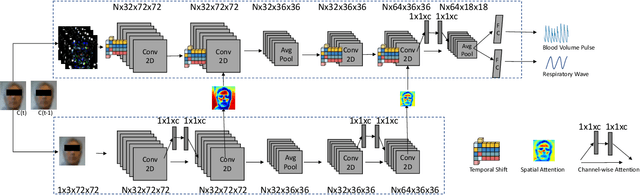

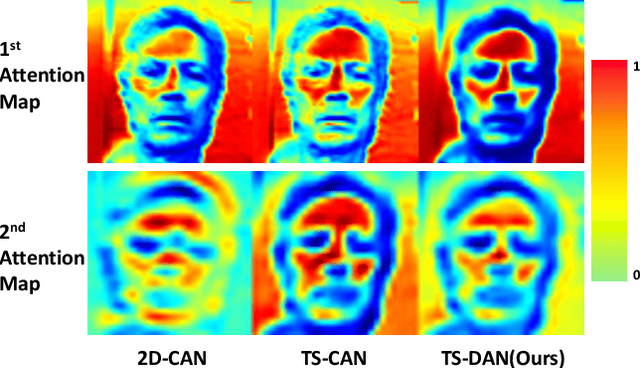

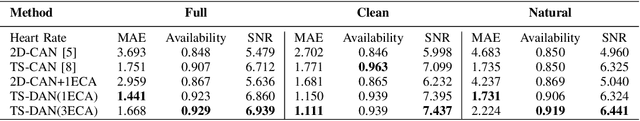

Abstract: Heart rate and respiratory rate measurement is a vital step in diagnosing many diseases. Non-contact, camera-based physiological measurement is more accessible and convenient for telehealth than contact instruments such as fingertip oximeters, and it reduces the risk of infection. However, remote physiological signal measurement is challenging because of variations in environmental illumination, head motion, facial expression, and similar factors. It is also desirable to have a unified network that estimates both heart rate and respiratory rate, which reduces system complexity and latency. We propose a convolutional neural network that leverages spatial attention and channel attention, which we call the dual attention network (DAN), to jointly estimate heart rate and respiratory rate with camera video as input. Extensive experiments demonstrate that our proposed system significantly improves heart rate and respiratory rate measurement accuracy.
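The abstract does not say how the two attention modules are built, so the following is only a rough sketch of the general idea of combining channel attention and spatial attention, not the DAN architecture itself. It assumes parameter-free gates (a sigmoid of a plain average), whereas real attention modules typically use learned weights. The function names and the channel-then-spatial ordering are also assumptions made for illustration.

```python
import math

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def channel_attention(features):
    """Scale each channel by a gate computed from its global average.

    `features` is a nested list shaped [channels][height][width].
    Assumption: the gate is sigmoid(mean), with no learned weights.
    """
    out = []
    for channel in features:
        values = [v for row in channel for v in row]
        gate = sigmoid(sum(values) / len(values))
        out.append([[v * gate for v in row] for row in channel])
    return out

def spatial_attention(features):
    """Scale each pixel by a gate computed from the mean across channels."""
    n_channels = len(features)
    height, width = len(features[0]), len(features[0][0])
    gates = [
        [sigmoid(sum(features[c][i][j] for c in range(n_channels)) / n_channels)
         for j in range(width)]
        for i in range(height)
    ]
    return [
        [[features[c][i][j] * gates[i][j] for j in range(width)]
         for i in range(height)]
        for c in range(n_channels)
    ]

# Toy feature map: 2 channels, 2x2 pixels (hypothetical values).
features = [
    [[1.0, 2.0], [3.0, 4.0]],
    [[0.0, 0.0], [0.0, 0.0]],
]

# Assumed ordering: channel attention first, then spatial attention.
attended = spatial_attention(channel_attention(features))
```

Because both gates lie strictly between 0 and 1, the sketch only reweights the feature map: the shape is preserved, and an all-zero channel stays zero.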

Fully-Automatic Semantic Segmentation for Food Intake Tracking in Long-Term Care Homes

Oct 24, 2019

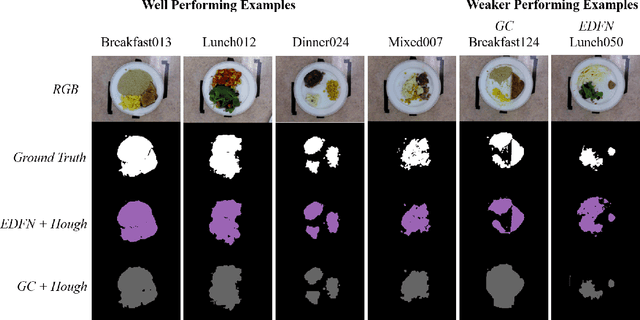

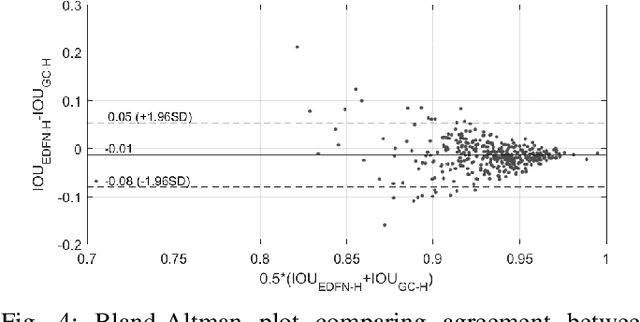

Abstract: Malnutrition impacts quality of life and places an annually recurring burden on the health care system. Half of older adults in long-term care (LTC) are at risk for malnutrition. Monitoring and measuring nutritional intake is paramount, yet it involves time-consuming and subjective visual assessment, which limits the reliability of current methods. The opportunity for automatic image-based estimation exists. Some progress has been made outside LTC (e.g., calories consumed, food classification). However, these methods have not been implemented in LTC, potentially due to a lack of ability to independently evaluate automatic segmentation methods within the intake estimation pipeline. Here, we propose and evaluate a novel fully-automatic semantic segmentation method for pixel-level classification of food on a plate using a deep convolutional neural network (DCNN). The macroarchitecture of the DCNN is a multi-scale encoder-decoder food network (EDFN) architecture comprising a residual encoder microarchitecture, a pyramid scene parsing decoder microarchitecture, and a specialized per-pixel food/no-food classification layer. The network was trained and validated on the pre-labelled UNIMIB 2016 food dataset (1027 tray images, 73 categories), and tested on our novel LTC plate dataset (390 plate images, 9 categories). Our fully-automatic segmentation method attained an intersection over union similar to that of semi-automatic graph cuts (91.2% vs. 93.7%). Advantages of our proposed system include testing on a novel dataset, decoupled error analysis, and no user-initiated annotations, with similar segmentation accuracy and enhanced reliability in terms of types of segmentation errors. This may address several shortcomings currently limiting the utility of automated food intake tracking in time-constrained LTC and hospital settings.
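For readers unfamiliar with the metric, intersection over union (IoU) for a food/no-food mask can be sketched as below. This is a minimal illustration of the standard definition, not the authors' evaluation code. The function name, the toy masks, and the convention for two empty masks are assumptions.

```python
def intersection_over_union(pred, truth):
    """IoU between two binary masks given as equal-sized lists of rows.

    A value of 1 marks a food pixel and 0 marks a no-food pixel.
    """
    intersection = 0
    union = 0
    for pred_row, truth_row in zip(pred, truth):
        for p, t in zip(pred_row, truth_row):
            if p and t:
                intersection += 1
            if p or t:
                union += 1
    # Assumed convention: two empty masks count as perfect agreement.
    return intersection / union if union else 1.0

# Hypothetical 3x4 masks for illustration only.
predicted = [
    [0, 1, 1, 0],
    [0, 1, 1, 0],
    [0, 0, 1, 0],
]
ground_truth = [
    [0, 1, 1, 0],
    [0, 1, 1, 1],
    [0, 0, 0, 0],
]

iou = intersection_over_union(predicted, ground_truth)
print(f"IoU = {iou:.3f}")
```

In this toy case, 4 pixels are labelled food in both masks and 6 are labelled food in at least one, so the IoU is 4/6.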

Towards computer vision powered color-nutrient assessment of pureed food

May 01, 2019

Abstract: With one in four individuals afflicted with malnutrition, computer vision may provide a way of introducing a new level of automation in the nutrition field to reliably monitor food and nutrient intake. In this study, we present a novel approach to modeling the link between color and vitamin A content using transmittance imaging of a pureed food dilution series in a computer vision powered nutrient sensing system. The approach uses a fine-tuned deep autoencoder network, which in this case was trained to predict the relative concentration of sweet potato purees. Experimental results show the deep autoencoder network can achieve an accuracy of 80% across beginner (6 month) and intermediate (8 month) commercially prepared pureed sweet potato samples. Prediction errors may be explained by fundamental differences in optical properties, which are further discussed.
