Kaylen J. Pfisterer

Enhancing Food Intake Tracking in Long-Term Care with Automated Food Imaging and Nutrient Intake Tracking Technology

Dec 08, 2021

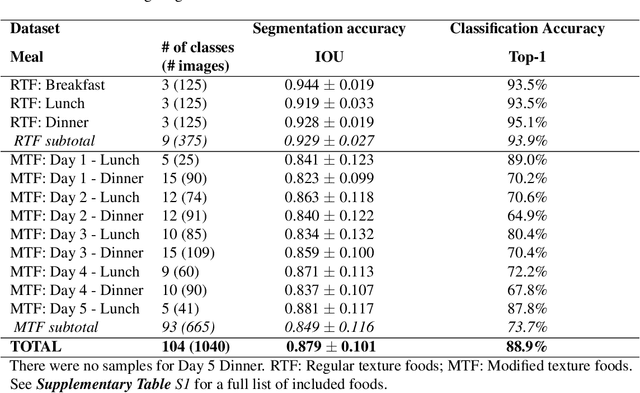

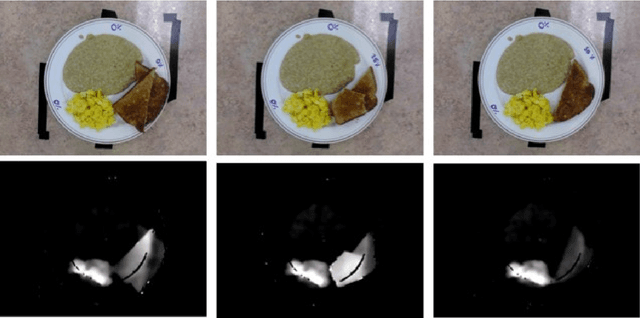

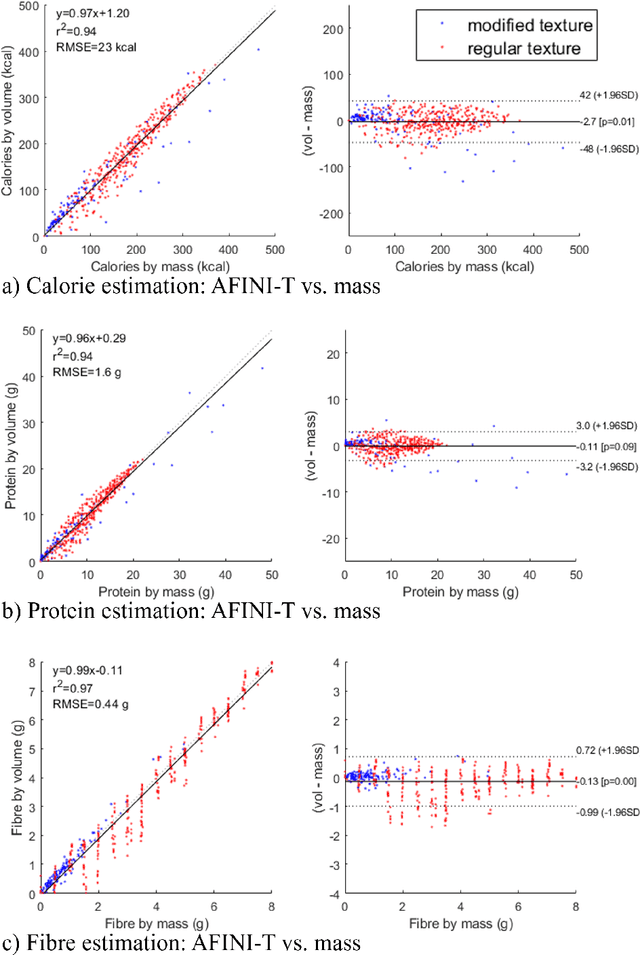

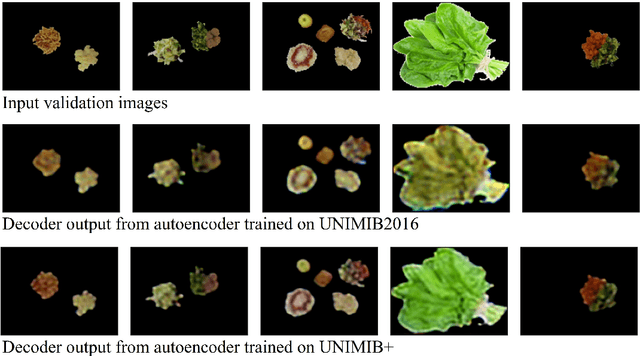

Abstract: Half of long-term care (LTC) residents are malnourished, which increases hospitalization, mortality, and morbidity and lowers quality of life. Current tracking methods are subjective and time-consuming. This paper presents the automated food imaging and nutrient intake tracking (AFINI-T) technology designed for LTC. We propose a novel convolutional autoencoder for food classification, trained on an augmented UNIMIB2016 dataset and tested on our simulated LTC food intake dataset (12 meal scenarios; up to 15 classes each; top-1 classification accuracy: 88.9%; mean intake error: -0.4 mL $\pm$ 36.7 mL). Nutrient intake estimation by volume was strongly linearly correlated with nutrient estimates from mass ($r^2$ 0.92 to 0.99), with good agreement between methods ($\sigma$ = -2.7 to -0.01; zero within each of the limits of agreement). The AFINI-T approach is a deep-learning powered computational nutrient sensing system that may provide a novel means for more accurately and objectively tracking LTC resident food intake to support malnutrition tracking and prevention strategies.
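The agreement check mentioned in this abstract (zero lying within the limits of agreement) reads like a Bland-Altman style comparison of two measurement methods. The sketch below assumes that interpretation and the conventional 1.96 standard deviation limits; the function name and the sample values are hypothetical and are not taken from the paper.

```python
from statistics import mean, stdev

def bland_altman(method_a, method_b):
    """Return (bias, lower_limit, upper_limit) for paired measurements.

    bias is the mean of the pairwise differences (a - b); the limits of
    agreement are bias +/- 1.96 sample standard deviations of the differences.
    """
    if len(method_a) != len(method_b) or len(method_a) < 2:
        raise ValueError("need two equal-length sequences with at least 2 pairs")
    diffs = [a - b for a, b in zip(method_a, method_b)]
    bias = mean(diffs)
    spread = 1.96 * stdev(diffs)
    return bias, bias - spread, bias + spread

# Hypothetical illustrative values, NOT data from the paper.
volume_based = [10.2, 15.1, 7.9, 12.4, 9.8]
mass_based = [10.0, 15.5, 8.3, 12.0, 10.1]

bias, lower, upper = bland_altman(volume_based, mass_based)
# Agreement in the abstract's sense: zero falls between the two limits.
zero_within_limits = lower <= 0.0 <= upper
print(f"bias={bias:.3f}, limits=({lower:.3f}, {upper:.3f}), ok={zero_within_limits}")
```

When zero falls between the limits, the two methods show no systematic offset larger than their pairwise scatter, which is the sense in which the abstract describes the volume-based and mass-based estimates as agreeing.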

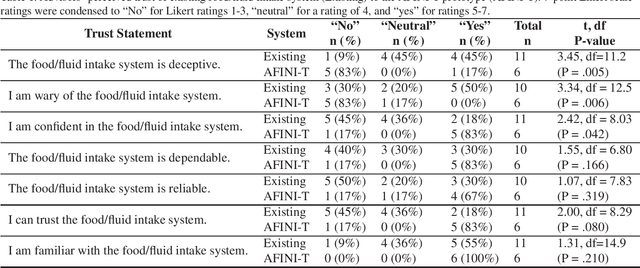

Food for thought: Ethical considerations of user trust in computer vision

May 29, 2019

Abstract: In computer vision research, especially when novel applications of tools are developed, ethical implications around user perceptions of trust in the underlying technology should be considered and supported. Here, we describe an example of the incorporation of such considerations within the long-term care sector for tracking resident food and fluid intake. We highlight our recent user study, conducted to develop a Goldilocks quality horizontal prototype designed to support trust cues, in which perceived trust in our horizontal prototype was higher than in the existing system. We discuss the importance of and need for user engagement as part of ongoing computer vision-driven technology development and describe several important factors related to trust that are relevant to developing decision-making tools.

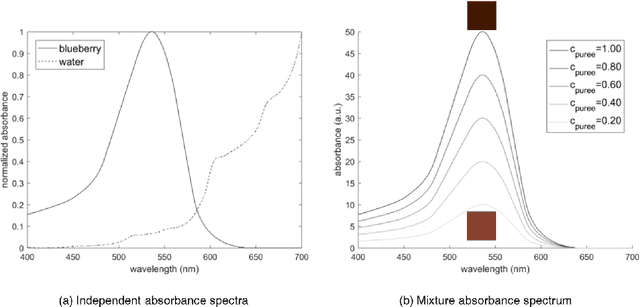

Towards computer vision powered color-nutrient assessment of pureed food

May 01, 2019

Abstract: With one in four individuals afflicted with malnutrition, computer vision may provide a way of introducing a new level of automation in the nutrition field to reliably monitor food and nutrient intake. In this study, we present a novel approach to modeling the link between color and vitamin A content using transmittance imaging of a pureed food dilution series in a computer vision powered nutrient sensing system via a fine-tuned deep autoencoder network, which in this case was trained to predict the relative concentration of sweet potato purees. Experimental results show the deep autoencoder network can achieve an accuracy of 80% across beginner (6-month) and intermediate (8-month) commercially prepared pureed sweet potato samples. Prediction errors may be explained by fundamental differences in optical properties, which are discussed further.

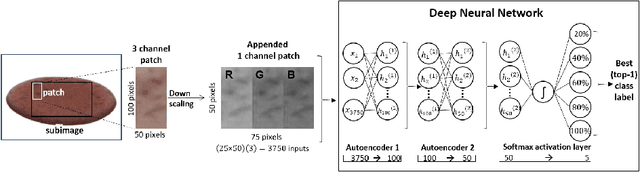

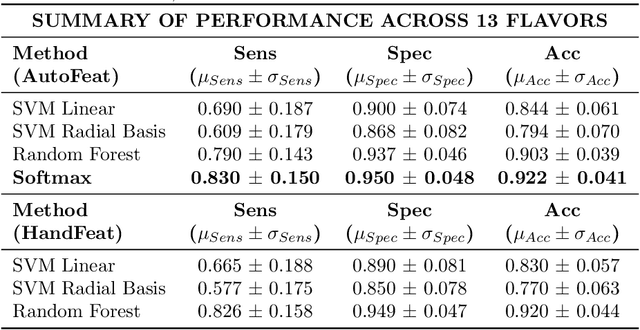

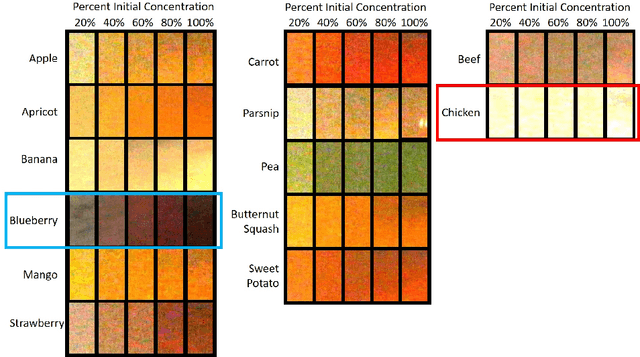

A new take on measuring relative nutritional density: The feasibility of using a deep neural network to assess commercially-prepared pureed food concentrations

Nov 03, 2017

Abstract: Dysphagia affects 590 million people worldwide and increases risk for malnutrition. Pureed food may reduce choking; however, preparation differences affect nutrient density, making quality assurance necessary. This paper is the first study to investigate the feasibility of computational pureed food nutritional density analysis using an imaging system. Motivated by a theoretical optical dilution model, a novel deep neural network (DNN) was evaluated using 390 samples from thirteen types of commercially prepared purees at five dilutions. The DNN predicted the relative concentration of the puree sample (20%, 40%, 60%, 80%, 100% initial concentration). Data were captured using same-side reflectance of multispectral imaging data at different polarizations at three exposures. Experimental results yielded an average top-1 prediction accuracy of 92.2 ± 0.41% with sensitivity and specificity of 83.0 ± 15.0% and 95.0 ± 4.8%, respectively. This DNN imaging system for nutrient density analysis of pureed food shows promise as a novel tool for nutrient quality assurance.
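For readers unfamiliar with the reported metrics, the following is a minimal sketch of how top-1 accuracy and one-vs-rest sensitivity and specificity can be computed for a multi-class concentration classifier. The labels and predictions are hypothetical, and how the paper averaged these quantities across classes and trials is not stated in the abstract.

```python
def top1_accuracy(y_true, y_pred):
    """Fraction of samples whose predicted class equals the true class."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)

def one_vs_rest(y_true, y_pred, positive):
    """Return (sensitivity, specificity), treating `positive` as the target class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)
    fn = sum(t == positive and p != positive for t, p in pairs)
    fp = sum(t != positive and p == positive for t, p in pairs)
    tn = sum(t != positive and p != positive for t, p in pairs)
    if tp + fn == 0 or tn + fp == 0:
        raise ValueError("need at least one positive and one negative sample")
    return tp / (tp + fn), tn / (tn + fp)

# Hypothetical relative-concentration labels (percent), NOT data from the paper.
y_true = [20, 20, 40, 40, 60, 60]
y_pred = [20, 40, 40, 40, 60, 20]

accuracy = top1_accuracy(y_true, y_pred)
sensitivity_20, specificity_20 = one_vs_rest(y_true, y_pred, positive=20)
print(accuracy, sensitivity_20, specificity_20)
```

Sensitivity can trail specificity in a five-class problem like this one because each class has far more negatives than positives, which is consistent with the gap between the two figures in the abstract.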

Non-contact transmittance photoplethysmographic imaging (PPGI) for long-distance cardiovascular monitoring

Mar 23, 2015

Abstract: Photoplethysmography (PPG) devices are widely used for monitoring cardiovascular function. However, these devices require skin contact, which restricts their use to at-rest short-term monitoring using single-point measurements. Photoplethysmographic imaging (PPGI) has recently been proposed as a non-contact monitoring alternative that measures blood pulse signals across a spatial region of interest. Existing systems operate in reflectance mode; many are limited to short-distance monitoring and are prone to temporal changes in ambient illumination. This paper is the first study to investigate the feasibility of long-distance non-contact cardiovascular monitoring at the supermeter level using transmittance PPGI. For this purpose, a novel PPGI system was designed at the hardware and software level using ambient correction via temporally coded illumination (TCI) and signal processing for PPGI signal extraction. Experimental results show that the processing steps yield a substantially more pulsatile PPGI signal than the raw acquired signal, resulting in statistically significant increases in correlation to ground-truth PPG in both short- ($p \in [<0.0001, 0.040]$) and long-distance ($p \in [<0.0001, 0.056]$) monitoring. The results support the hypothesis that long-distance heart rate monitoring is feasible using transmittance PPGI, allowing for new possibilities of monitoring cardiovascular function in a non-contact manner.
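One simple way to picture ambient correction with coded illumination is to alternate frames with the active light source on and off, then subtract each ambient-only frame from its lit neighbor. The sketch below assumes that on/off pairing; the abstract does not specify the actual coding scheme, and the function names and pixel values are hypothetical.

```python
def ambient_corrected(frame_pairs):
    """Subtract the ambient-only frame from the lit frame in each pair.

    frame_pairs: list of (lit, ambient) tuples, each a flat list of pixel
    intensities over the same region of interest. Negative differences are
    clamped to zero.
    """
    corrected = []
    for lit, ambient in frame_pairs:
        if len(lit) != len(ambient):
            raise ValueError("lit and ambient frames must have the same size")
        corrected.append([max(l - a, 0) for l, a in zip(lit, ambient)])
    return corrected

def roi_mean_signal(frames):
    """Collapse each frame to its mean intensity, giving one sample per frame."""
    return [sum(frame) / len(frame) for frame in frames]

# Hypothetical pixel values: ambient light rises by 20 between the two pairs.
pairs = [
    ([120, 130], [20, 30]),
    ([140, 150], [40, 50]),
]
signal = roi_mean_signal(ambient_corrected(pairs))
print(signal)  # the ambient drift no longer appears in the signal
```

In this toy input the raw lit frames differ only because the ambient level changed, so the corrected signal is flat; in a real recording, what remains after subtraction would be the variation due to the active illumination passing through tissue.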
