Riccardo Scattolini

Nonlinear MPC design for incrementally ISS systems with application to GRU networks

Sep 28, 2023

Abstract: This brief addresses the design of a Nonlinear Model Predictive Control (NMPC) strategy for exponentially incremental Input-to-State Stable (ISS) systems. In particular, a novel formulation is devised, which does not necessitate the onerous computation of terminal ingredients, but rather relies on the explicit definition of a minimum prediction horizon ensuring closed-loop stability. The designed methodology is particularly suited for the control of systems learned by Recurrent Neural Networks (RNNs), which are known for their enhanced modeling capabilities and for which the incremental ISS properties can be studied thanks to simple algebraic conditions. The approach is applied to Gated Recurrent Unit (GRU) networks, providing also a method for the design of a tailored state observer with convergence guarantees. The resulting control architecture is tested on a benchmark system, demonstrating its good control performances and efficient applicability.

Deep Long-Short Term Memory networks: Stability properties and Experimental validation

Apr 06, 2023

Abstract: The aim of this work is to investigate the use of Incrementally Input-to-State Stable ($\delta$ISS) deep Long Short Term Memory networks (LSTMs) for the identification of nonlinear dynamical systems. We show that suitable sufficient conditions on the weights of the network can be leveraged to set up a training procedure able to learn provably $\delta$ISS LSTM models from data. The proposed approach is tested on a real brake-by-wire apparatus to identify a model of the system from experimentally collected input-output data. Results show satisfactory modeling performances.

Towards lifelong learning of Recurrent Neural Networks for control design

Aug 08, 2022

Abstract: This paper proposes a method for lifelong learning of Recurrent Neural Networks, such as NNARX, ESN, LSTM, and GRU, to be used as plant models in control system synthesis. The problem is significant because in many practical applications it is required to adapt the model when new information is available and/or the system undergoes changes, without the need to store an increasing amount of data as time proceeds. Indeed, in this context, many problems arise, such as the well-known Catastrophic Forgetting and Capacity Saturation ones. We propose an adaptation algorithm inspired by Moving Horizon Estimators, deriving conditions for its convergence. The described method is applied to a simulated chemical plant, already adopted as a challenging benchmark in the existing literature. The main results achieved are discussed.

* Copyright 2022 EUCA. This article appears in the Proceedings of the 2022 European Control Conference (ECC'22), July 12-15, 2022, London, pp. 2018-2023

An Offset-Free Nonlinear MPC scheme for systems learned by Neural NARX models

Mar 30, 2022

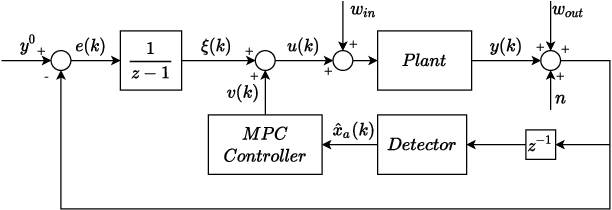

Abstract: This paper deals with the design of nonlinear MPC controllers that provide offset-free setpoint tracking for models described by Neural Nonlinear AutoRegressive eXogenous (NNARX) networks. The NNARX model is identified from input-output data collected from the plant, and can be given a state-space representation with known measurable states made by past input and output variables, so that a state observer is not required. In the training phase, the Incremental Input-to-State Stability ($\delta$ISS) property can be forced when consistent with the behavior of the plant. The $\delta$ISS property is then leveraged to augment the model with an explicit integral action on the output tracking error, which endows the designed control scheme with offset-free tracking capabilities. The proposed control architecture is numerically tested on a water heating system and the achieved results are compared to those scored by another popular offset-free MPC method, showing that the proposed scheme attains remarkable performances even in the presence of disturbances acting on the plant.

On Recurrent Neural Networks for learning-based control: recent results and ideas for future developments

Nov 26, 2021

Abstract: This paper aims to discuss and analyze the potentialities of Recurrent Neural Networks (RNN) in control design applications. The main families of RNN are considered, namely Neural Nonlinear AutoRegressive eXogenous (NNARX), Echo State Networks (ESN), Long Short Term Memory (LSTM), and Gated Recurrent Units (GRU). The goal is twofold. Firstly, to survey recent results concerning the training of RNN that enjoy Input-to-State Stability (ISS) and Incremental Input-to-State Stability ($\delta$ISS) guarantees. Secondly, to discuss the issues that still hinder the widespread use of RNN for control, namely their robustness, verifiability, and interpretability. The former properties are related to the so-called generalization capabilities of the networks, i.e. their consistency with the underlying real plants, even in the presence of unseen or perturbed input trajectories. The latter is instead related to the possibility of providing a clear formal connection between the RNN model and the plant. In this context, we illustrate how ISS and $\delta$ISS represent a significant step towards the robustness and verifiability of the RNN models, while the requirement of interpretability paves the way to the use of physics-based networks. The design of model predictive controllers with RNN as the plant model is also briefly discussed. Lastly, some of the main topics of the paper are illustrated on a simulated chemical system.

Recurrent neural network-based Internal Model Control of unknown nonlinear stable systems

Aug 10, 2021

Abstract: Owing to their superior modeling capabilities, gated Recurrent Neural Networks (RNNs), such as Gated Recurrent Units (GRUs) and Long Short-Term Memory networks (LSTMs), have become popular tools for learning dynamical systems. This paper aims to discuss how these networks can be adopted for the synthesis of Internal Model Control (IMC) architectures. To this end, a first gated RNN is used to learn a model of the unknown input-output stable plant. Then, another gated RNN approximating the model inverse is trained. The proposed scheme is able to cope with the saturation of the control variables, and it can be deployed on low-power embedded controllers since it does not require any online computation. The approach is then tested on the Quadruple Tank benchmark system, resulting in satisfactory closed-loop performances.

Nonlinear MPC for Offset-Free Tracking of systems learned by GRU Neural Networks

Mar 03, 2021

Abstract: The use of Recurrent Neural Networks (RNNs) for system identification has recently gathered increasing attention, thanks to their black-box modeling capabilities. Although RNNs have been fruitfully adopted in many applications, only a few works are devoted to providing rigorous theoretical foundations that justify their use for control purposes. The aim of this paper is to describe how stable Gated Recurrent Units (GRUs), a particular RNN architecture, can be trained and employed in a Nonlinear MPC framework to perform offset-free tracking of constant references with guaranteed closed-loop stability. The proposed approach is tested on a pH neutralization process benchmark, showing remarkable performances.

On the stability properties of Gated Recurrent Units neural networks

Nov 17, 2020

Abstract: The goal of this paper is to provide sufficient conditions for guaranteeing the Input-to-State Stability (ISS) and the Incremental Input-to-State Stability ($\delta$ISS) of Gated Recurrent Unit (GRU) neural networks. These conditions, devised for both single-layer and multi-layer architectures, consist of nonlinear inequalities on the network's weights. They can be employed to check the stability of trained networks, or can be enforced as constraints during the training procedure of a GRU. The resulting training procedure is tested on a Quadruple Tank nonlinear benchmark system, showing satisfactory modeling performances.

Tustin neural networks: a class of recurrent nets for adaptive MPC of mechanical systems

Nov 04, 2019

Abstract: The use of recurrent neural networks to represent the dynamics of unstable systems is difficult due to the need to properly initialize their internal states, which in most cases do not have any physical meaning, consequent to the non-smoothness of the optimization problem. For this reason, in this paper focus is placed on mechanical systems characterized by a number of degrees of freedom, each one represented by two states, namely position and velocity. For these systems, a new recurrent neural network is proposed: Tustin-Net. Inspired by second-order dynamics, the network hidden states can be straightforwardly estimated, as their differential relationships with the measured states are hardcoded in the forward pass. The proposed structure is used to model a double inverted pendulum and for model-based Reinforcement Learning, where an adaptive Model Predictive Controller scheme using the Unscented Kalman Filter is proposed to deal with parameter changes in the system.
