Caio Fabio Oliveira da Silva

Integrating Reinforcement Learning and Model Predictive Control with Applications to Microgrids

Sep 17, 2024

Abstract: This work proposes an approach that integrates reinforcement learning and model predictive control (MPC) to efficiently solve finite-horizon optimal control problems in mixed-logical dynamical systems. Optimization-based control of such systems with discrete and continuous decision variables entails the online solution of mixed-integer quadratic or linear programs, which suffer from the curse of dimensionality. Our approach aims at mitigating this issue by effectively decoupling the decision on the discrete variables and the decision on the continuous variables. Moreover, to mitigate the combinatorial growth in the number of possible actions due to the prediction horizon, we conceive the definition of decoupled Q-functions to make the learning problem more tractable. The use of reinforcement learning reduces the online optimization problem of the MPC controller from a mixed-integer linear (quadratic) program to a linear (quadratic) program, greatly reducing the computational time. Simulation experiments for a microgrid, based on real-world data, demonstrate that the proposed method significantly reduces the online computation time of the MPC approach and that it generates policies with small optimality gaps and high feasibility rates.
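The combinatorial growth mentioned in the abstract above can be illustrated with a rough sketch. It assumes one Q-function per prediction step, each scoring only that step's discrete action; the abstract does not spell out the exact construction, so this is an illustrative reading and not the authors' implementation. The function names and the Q-values are hypothetical placeholders.

```python
from itertools import product


def joint_action_count(n_binary: int, horizon: int) -> int:
    """Discrete action sequences when the whole horizon is chosen at once."""
    return (2 ** n_binary) ** horizon


def decoupled_output_count(n_binary: int, horizon: int) -> int:
    """Total Q-values when each prediction step has its own Q-function
    (assumed structure, see lead-in)."""
    return horizon * (2 ** n_binary)


def greedy_decoupled(q_tables):
    """For each step k, pick the per-step action with the highest Q-value."""
    return [max(q_k, key=q_k.get) for q_k in q_tables]


n_binary, horizon = 2, 4
per_step_actions = list(product((0, 1), repeat=n_binary))

# Hypothetical Q-values standing in for a trained model's outputs.
q_tables = [
    {a: -abs(sum(a) - (k % 3)) for a in per_step_actions}
    for k in range(horizon)
]

# One discrete action per prediction step, chosen step by step.
sequence = greedy_decoupled(q_tables)

print(joint_action_count(n_binary, horizon))      # 256
print(decoupled_output_count(n_binary, horizon))  # 16
print(sequence)
```

Once such a sequence of discrete decisions is fixed, only the continuous variables remain in the MPC problem, which is how the abstract describes the reduction from a mixed-integer program to a linear or quadratic program.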

Nonlinear MPC for Offset-Free Tracking of systems learned by GRU Neural Networks

Mar 03, 2021

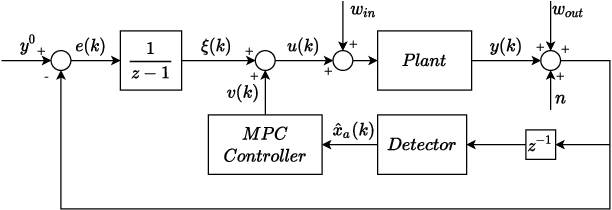

Abstract: The use of Recurrent Neural Networks (RNNs) for system identification has recently gathered increasing attention, thanks to their black-box modeling capabilities. Although RNNs have been fruitfully adopted in many applications, only a few works are devoted to providing rigorous theoretical foundations that justify their use for control purposes. The aim of this paper is to describe how stable Gated Recurrent Units (GRUs), a particular RNN architecture, can be trained and employed in a Nonlinear MPC framework to perform offset-free tracking of constant references with guaranteed closed-loop stability. The proposed approach is tested on a pH neutralization process benchmark, showing remarkable performance.
