Ricardo Borsoi

Department of EE, Federal University of Santa Catarina

Riemannian Change Point Detection on Manifolds with Robust Centroid Estimation

Aug 25, 2025

Abstract: Non-parametric change-point detection in streaming time series data is a long-standing challenge in signal processing. Recent advances in statistics and machine learning have increasingly addressed this problem for data residing on Riemannian manifolds. One prominent strategy involves monitoring abrupt changes in the center of mass of the time series. When implemented in a streaming fashion, however, this strategy requires careful tuning of the step size used to update the center of mass. In this paper, we propose to leverage robust centroids on manifolds from M-estimation theory to address this issue. Our proposal consists of comparing two centroid estimates: the classical Karcher mean (sensitive to change) versus one defined from Huber's function (robust to change). This comparison leads to the definition of a test statistic whose performance is less sensitive to the underlying estimation method. We propose a stochastic Riemannian optimization algorithm to estimate both centroids efficiently. Experiments conducted on both simulated and real-world data across two representative manifolds demonstrate the superior performance of our proposed method.
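
The comparison between a change-sensitive and a change-robust centroid can be illustrated with a minimal sketch. It assumes the simplest Riemannian manifold, the unit circle S^1 (angles in radians), where the logarithm and exponential maps reduce to wrapped differences and sums. The fixed step size, the Huber threshold `c`, the class name `StreamingCentroids` and the synthetic stream are hypothetical choices, not the estimator or the manifolds used in the paper. Each sample triggers one stochastic Riemannian gradient step per centroid, and the geodesic distance between the two estimates serves as the test statistic.

```python
import math
import random


def wrap(angle: float) -> float:
    """Map an angle (radians) to the interval (-pi, pi]."""
    a = math.fmod(angle + math.pi, 2.0 * math.pi)
    if a <= 0.0:
        a += 2.0 * math.pi
    return a - math.pi


def log_map(mu: float, x: float) -> float:
    """Riemannian logarithm on S^1: signed shortest arc from mu to x."""
    return wrap(x - mu)


def exp_map(mu: float, v: float) -> float:
    """Riemannian exponential on S^1: move from mu along the tangent vector v."""
    return wrap(mu + v)


def huber_weight(d: float, c: float) -> float:
    """psi(d)/d for Huber's function: 1 inside the threshold c, c/d outside."""
    return 1.0 if d <= c else c / d


class StreamingCentroids:
    """Tracks a Karcher-mean and a Huber-centroid estimate (hypothetical helper)
    with one stochastic Riemannian gradient step per incoming sample."""

    def __init__(self, init: float, step: float, c: float):
        self.karcher = init
        self.huber = init
        self.step = step  # hypothetical fixed step size
        self.c = c        # hypothetical Huber threshold (radians)

    def update(self, x: float) -> float:
        # Karcher mean: the negative gradient of d^2/2 w.r.t. mu is log_mu(x).
        v_k = log_map(self.karcher, x)
        self.karcher = exp_map(self.karcher, self.step * v_k)
        # Huber centroid: same direction, but far samples get a clipped step.
        v_h = log_map(self.huber, x)
        self.huber = exp_map(self.huber, self.step * huber_weight(abs(v_h), self.c) * v_h)
        # Test statistic: geodesic distance between the two centroids.
        return abs(log_map(self.karcher, self.huber))


# Toy stream: the mean angle jumps from 0 to 1.5 rad at t = 200.
rng = random.Random(0)
stream = [rng.gauss(0.0, 0.1) for _ in range(200)] + [rng.gauss(1.5, 0.1) for _ in range(200)]
tracker = StreamingCentroids(init=0.0, step=0.05, c=0.2)
stats = [tracker.update(x) for x in stream]
print(f"peak statistic before change: {max(stats[50:200]):.3f}")
print(f"peak statistic after change:  {max(stats[200:]):.3f}")
```

Right after the jump, the Karcher estimate moves quickly while the Huber estimate advances by at most `step * c` per sample, so the distance between them peaks; once both have converged to the new mean, the statistic falls back toward zero.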

Robust Recursive Fusion of Multiresolution Multispectral Images with Location-Aware Neural Networks

Jun 16, 2025

Abstract: Multiresolution image fusion is a key problem for real-time satellite imaging and plays a central role in detecting and monitoring natural phenomena such as floods. It aims to resolve the trade-off between temporal and spatial resolution in remote sensing instruments. Although several algorithms have been proposed for this problem, the presence of outliers such as clouds degrades their performance. Moreover, strategies that integrate robustness, recursive operation and learned models are missing. In this paper, a robust recursive image fusion framework leveraging location-aware neural networks (NN) to model the image dynamics is proposed. Outliers are modeled through the probability that a given pixel and band is contaminated. An NN model trained on a small dataset provides accurate predictions of the stochastic image time evolution, which improves both the accuracy and robustness of the method. A recursive solution is proposed to estimate the high-resolution images using a Bayesian variational inference framework. Experiments fusing images from the Landsat 8 and MODIS instruments show that the proposed approach is significantly more robust against cloud cover, without losing performance when no clouds are present.
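
The idea of modeling the probability that a pixel and band is contaminated can be sketched for a single pixel as a two-component measurement model: a Gaussian "clean" component and a uniform "outlier" component over [0, 1] reflectance. This is a simplified stand-in, not the paper's variational inference scheme or its neural dynamics model; the contamination prior `eps`, the uniform outlier density, the noise-inflation rule `r / w` and the function names are all assumptions made for illustration.

```python
import math


def gaussian_pdf(z: float, mean: float, var: float) -> float:
    return math.exp(-0.5 * (z - mean) ** 2 / var) / math.sqrt(2.0 * math.pi * var)


def robust_pixel_update(m: float, v: float, z: float, r: float, eps: float,
                        outlier_density: float = 1.0):
    """One measurement update for one pixel/band (hypothetical helper).

    m, v: predicted mean and variance of the high-resolution reflectance.
    z, r: observed reflectance and its nominal noise variance.
    eps: assumed prior probability that the observation is contaminated (e.g. cloud).
    outlier_density: density of contaminated values; uniform on [0, 1] assumed.
    Returns (updated mean, updated variance, posterior probability of a clean pixel).
    """
    p_clean = (1.0 - eps) * gaussian_pdf(z, m, v + r)
    p_outlier = eps * outlier_density
    w = p_clean / (p_clean + p_outlier)
    # Down-weight a suspect measurement by inflating its noise variance by 1/w.
    r_eff = r / max(w, 1e-12)
    k = v / (v + r_eff)  # scalar Kalman gain with the inflated noise
    return m + k * (z - m), (1.0 - k) * v, w


clear = robust_pixel_update(m=0.2, v=0.01, z=0.22, r=0.0004, eps=0.1)
cloudy = robust_pixel_update(m=0.2, v=0.01, z=0.9, r=0.0004, eps=0.1)
print("clear: ", clear)
print("cloudy:", cloudy)
```

The clear-sky measurement receives a clean-pixel probability close to one and pulls the estimate toward 0.22, while the bright, cloud-like value receives a probability near zero and leaves the estimate essentially unchanged.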

KODA: A Data-Driven Recursive Model for Time Series Forecasting and Data Assimilation using Koopman Operators

Sep 29, 2024

Abstract: Approaches based on Koopman operators have shown great promise in forecasting time series data generated by complex nonlinear dynamical systems (NLDS). Although such approaches are able to capture the latent state representation of an NLDS, they still face difficulty in long-term forecasting when applied to real-world data. Specifically, many real-world NLDS exhibit time-varying behavior, leading to nonstationarity that is hard to capture with such models. Furthermore, they lack a systematic data-driven approach to perform data assimilation, that is, exploiting noisy measurements on the fly in the forecasting task. To alleviate these issues, we propose a Koopman operator-based approach (named KODA - Koopman Operator with Data Assimilation) that integrates forecasting and data assimilation in NLDS. In particular, we use a Fourier domain filter to disentangle the data into a physical component, whose dynamics can be accurately represented by a Koopman operator, and residual dynamics, which represent the local or time-varying behavior and are captured by a flexible and learnable recursive model. We carefully design an architecture and training criterion that ensure this decomposition leads to stable and long-term forecasts. Moreover, we introduce a course correction strategy to perform data assimilation with new measurements at inference time. The proposed approach is completely data-driven and can be learned end-to-end. Through extensive experimental comparisons, we show that KODA outperforms existing state-of-the-art methods on multiple time series benchmarks such as electricity, temperature, weather, Lorenz 63 and the Duffing oscillator, demonstrating its superior performance and efficacy across three tasks: a) forecasting, b) data assimilation and c) state prediction.
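
A Fourier-domain split into a slowly varying component and a residual can be illustrated with a plain discrete Fourier transform. The sketch below assumes the filter simply keeps the `num_freqs` lowest frequency bins (and their mirrored bins, so the output stays real); the actual filter, the Koopman model and the recursive residual model in KODA are not reproduced here, and `fourier_split` is a hypothetical name.

```python
import cmath
import math


def dft(x):
    """Naive O(n^2) discrete Fourier transform of a real sequence."""
    n = len(x)
    return [sum(x[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
            for k in range(n)]


def idft(X):
    """Inverse DFT, returning the real part of the reconstructed signal."""
    n = len(X)
    return [sum(X[k] * cmath.exp(2j * math.pi * k * t / n) for k in range(n)).real / n
            for t in range(n)]


def fourier_split(x, num_freqs):
    """Low-pass 'physical' component plus the complementary residual (assumed filter)."""
    n = len(x)
    X = dft(x)
    # Keep bin k if its frequency index min(k, n - k) is small; this keeps the
    # DC term and each retained bin together with its conjugate mirror.
    kept = [X[k] if min(k, n - k) <= num_freqs else 0 for k in range(n)]
    physical = idft(kept)
    residual = [a - b for a, b in zip(x, physical)]
    return physical, residual


n = 64
slow = [math.sin(2 * math.pi * 2 * t / n) for t in range(n)]         # 2 cycles
fast = [0.3 * math.sin(2 * math.pi * 20 * t / n) for t in range(n)]  # 20 cycles
x = [a + b for a, b in zip(slow, fast)]
physical, residual = fourier_split(x, num_freqs=4)
print(max(abs(p - s) for p, s in zip(physical, slow)))
```

Because the two components are complementary by construction, `physical + residual` always reproduces the input; in this toy signal the low-frequency sinusoid lands in `physical` and the fast one in `residual`. The naive DFT keeps the example dependency-free; an FFT computes the same transform in O(n log n).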

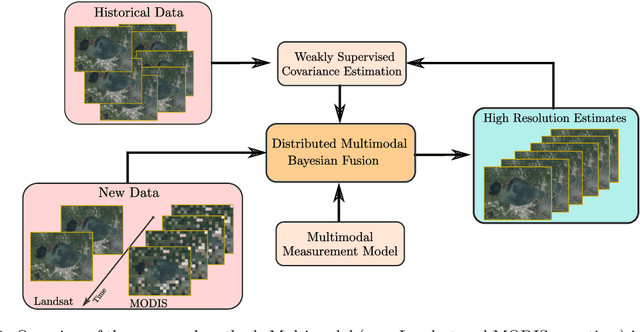

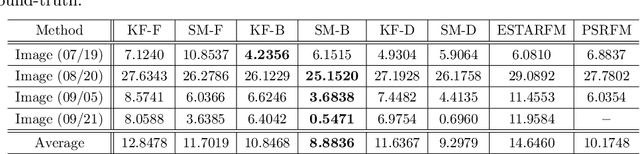

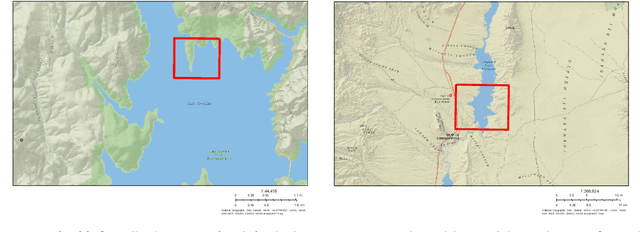

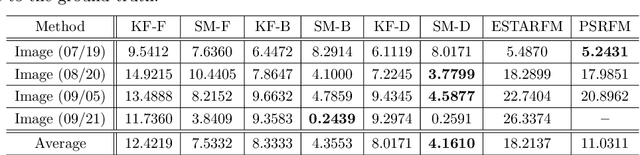

Online Fusion of Multi-resolution Multispectral Images with Weakly Supervised Temporal Dynamics

Jan 06, 2023

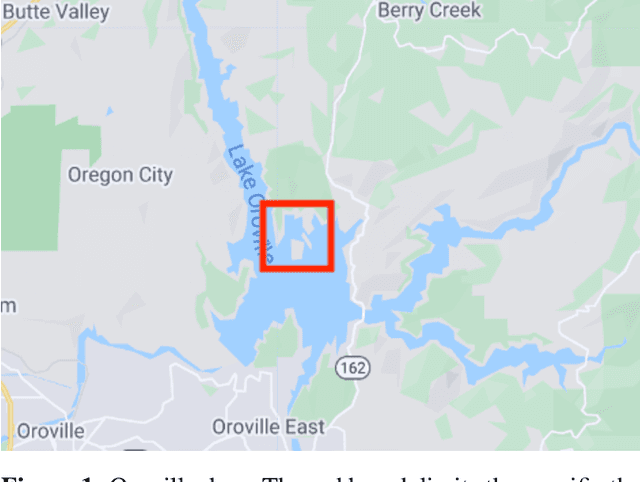

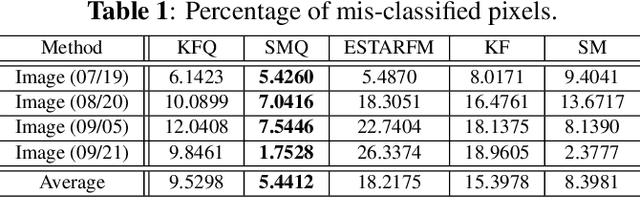

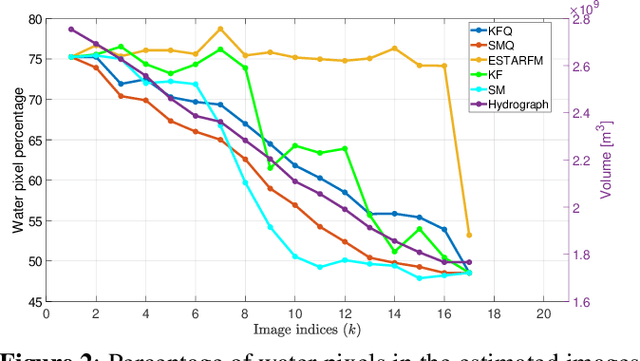

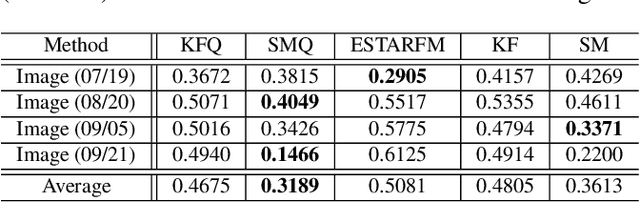

Abstract: Real-time satellite imaging plays a central role in monitoring, detecting and estimating the intensity of key natural phenomena such as floods and earthquakes. One important constraint of satellite imaging is the trade-off between spatial/spectral resolution and revisit time, a consequence of design and physical constraints imposed by the satellite orbit, among other technical limitations. In this paper, we focus on fusing multi-temporal, multi-spectral images acquired by different instruments with different spatial resolutions. We leverage the spatial relationship between images at multiple modalities to generate high-resolution image sequences at higher revisit rates. To achieve this goal, we formulate the fusion method as a recursive state estimation problem and study its performance in filtering and smoothing contexts. Furthermore, a calibration strategy is proposed to estimate the time-varying temporal dynamics of the image sequence using only a small amount of historical image data. Unlike the training of traditional machine learning algorithms, which usually requires large datasets and long computation times, the parameters of the temporal dynamical model are calibrated from an analytical expression that uses only two of the images in the historical dataset. Distributed versions of the Bayesian filtering and smoothing strategies are also proposed to reduce their computational complexity. To evaluate the proposed methodology, we consider a water mapping task in which real data acquired by the Landsat and MODIS instruments are fused to generate high spatial-temporal resolution image estimates. Our experiments show that the proposed methodology outperforms competing methods in both estimation accuracy and water mapping performance.

Recursive classification of satellite imaging time-series: An application to water and land cover mapping

Jan 04, 2023

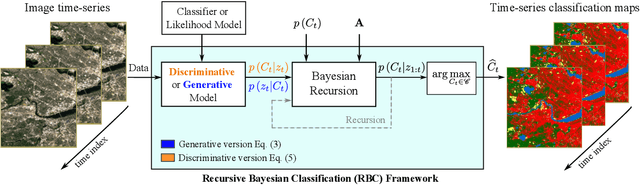

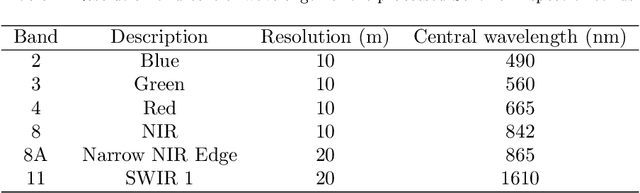

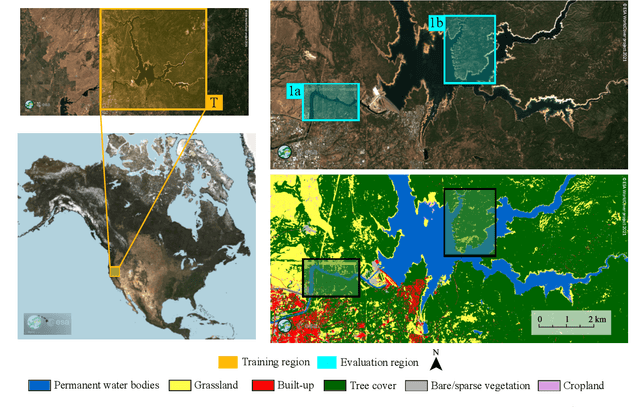

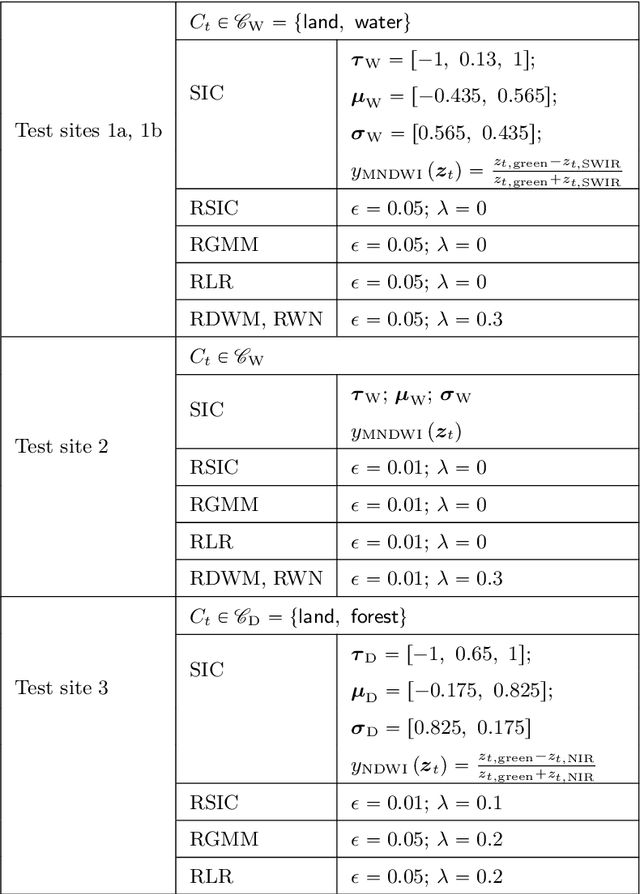

Abstract: A wide variety of applications of fundamental importance for security, environmental protection and urban development need access to accurate land cover monitoring and water mapping, for which the analysis of optical remote sensing imagery is key. Classification of time-series images, particularly with recursive methods, is of increasing interest in the current literature. Nevertheless, existing recursive approaches typically require large amounts of training data. This paper introduces a recursive classification framework that provides high accuracy while requiring low computational cost and minimal supervision. The proposed approach transforms a static classifier into a recursive one using a probabilistic framework that is robust to non-informative image variations. Water mapping and land cover experiments are conducted on Sentinel-2 satellite data covering two areas in the United States. The performance of three static classification algorithms and their recursive versions is compared: a Gaussian Mixture Model (GMM), Logistic Regression (LR) and Spectral Index Classifiers (SICs). SICs are a new approach that we introduce to convert the Modified Normalized Difference Water Index (MNDWI) and the Normalized Difference Vegetation Index (NDVI) into probabilistic classification results. Two state-of-the-art deep learning-based classifiers are also used as benchmark models. Results show that the proposed method significantly increases the robustness of existing static classifiers in multitemporal settings. Our method also improves the performance of deep learning-based classifiers without the need for additional training data.
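
Converting a spectral index into class probabilities and then updating them recursively can be sketched for a single pixel. MNDWI and NDVI use their standard definitions, (Green - SWIR)/(Green + SWIR) and (NIR - Red)/(NIR + Red); the logistic mapping with its threshold and slope, the two-class Markov transition `stay_prob`, and the reflectance values are hypothetical. The sketch therefore illustrates the general static-to-recursive idea rather than the SICs or the framework proposed in the paper.

```python
import math


def mndwi(green: float, swir: float) -> float:
    """Modified Normalized Difference Water Index."""
    return (green - swir) / (green + swir)


def ndvi(nir: float, red: float) -> float:
    """Normalized Difference Vegetation Index (shown for completeness)."""
    return (nir - red) / (nir + red)


def index_to_probability(index: float, threshold: float = 0.0, slope: float = 10.0) -> float:
    """Hypothetical logistic mapping from an index value to P(water)."""
    return 1.0 / (1.0 + math.exp(-slope * (index - threshold)))


def recursive_posteriors(static_probs, stay_prob=0.95, prior=0.5):
    """Forward filter over a two-class (water / land) Markov chain.

    static_probs: per-date P(water | image) from a static classifier.
    stay_prob: assumed probability that a pixel keeps its class between dates.
    With a uniform class prior, the static posterior is proportional to the
    likelihood, so it can be used directly in the update.
    """
    p_water = prior
    out = []
    for s in static_probs:
        # Prediction: propagate the previous belief through the transition model.
        pred = stay_prob * p_water + (1.0 - stay_prob) * (1.0 - p_water)
        # Update: combine the prediction with the static classifier output.
        num = pred * s
        p_water = num / (num + (1.0 - pred) * (1.0 - s))
        out.append(p_water)
    return out


# (green, swir) reflectances of one water pixel over four dates; the fourth
# date is a hypothetical degraded observation.
obs = [(0.08, 0.02), (0.08, 0.02), (0.08, 0.02), (0.05, 0.06)]
static = [index_to_probability(mndwi(g, s)) for g, s in obs]
recursive = recursive_posteriors(static)
print([round(p, 3) for p in static])
print([round(p, 3) for p in recursive])
```

On the fourth date the static classifier alone would label the pixel as land (probability of water below 0.5), whereas the recursive posterior, carrying evidence from the previous clear observations, still favors water.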

Online multi-resolution fusion of space-borne multispectral images

Apr 26, 2022

Abstract: Satellite imaging has a central role in monitoring, detecting and estimating the intensity of key natural phenomena. One important feature of satellite images is the trade-off between spatial/spectral resolution and revisit time, a consequence of design and physical constraints imposed by the satellite orbit, among other technical limitations. In this paper, we focus on fusing multi-temporal, multi-spectral images acquired by different instruments with different spatial resolutions. We leverage the spatial relationship between images at multiple modalities to generate high-resolution image sequences at higher revisit rates. To achieve this goal, we formulate the fusion method as a recursive state estimation problem and study its performance in filtering and smoothing contexts. The proposed strategy clearly outperforms competing methodologies, as shown in the paper on real data acquired by the Landsat and MODIS instruments.
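
Formulating fusion as recursive state estimation can be illustrated with a small Kalman filter. The sketch assumes a one-dimensional scene of four fine-resolution pixels with random-walk dynamics, a coarse instrument that observes their average at every step, and a fine instrument that observes each pixel only every eighth step. These operators, noise levels and revisit intervals are hypothetical and only stand in for the Landsat/MODIS models described in the paper. Each measurement is processed as a scalar update, so no matrix inversion is needed.

```python
import random


def predict(P, q):
    """Random-walk dynamics: the mean is unchanged, each variance grows by q."""
    for i in range(len(P)):
        P[i][i] += q


def update(x, P, h, z, r):
    """Scalar Kalman update (in place) for a measurement z = h . x + noise(var r)."""
    n = len(x)
    Ph = [sum(P[i][j] * h[j] for j in range(n)) for i in range(n)]
    s = sum(h[i] * Ph[i] for i in range(n)) + r  # innovation variance
    gain = [Ph[i] / s for i in range(n)]
    innovation = z - sum(h[i] * x[i] for i in range(n))
    for i in range(n):
        x[i] += gain[i] * innovation
    for i in range(n):
        for j in range(n):
            P[i][j] -= gain[i] * Ph[j]  # P <- P - K (P h)^T, P symmetric


truth = [0.1, 0.2, 0.6, 0.9]  # hypothetical fine-scale reflectances (constant)
n = len(truth)
x = [0.5] * n                  # prior mean
P = [[1.0 if i == j else 0.0 for j in range(n)] for i in range(n)]
coarse_h = [1.0 / n] * n       # coarse pixel = average of the fine pixels
rng = random.Random(1)

for t in range(64):
    predict(P, q=1e-5)
    # Coarse (MODIS-like) observation available at every step.
    update(x, P, coarse_h, sum(truth) / n + rng.gauss(0.0, 0.01), r=1e-4)
    # Fine (Landsat-like) observations only every 8th step.
    if t % 8 == 0:
        for i in range(n):
            h = [1.0 if j == i else 0.0 for j in range(n)]
            update(x, P, h, truth[i] + rng.gauss(0.0, 0.01), r=1e-4)

print([round(v, 3) for v in x])
```

Between fine acquisitions, the coarse measurements only constrain the average of the pixels, while the cross-covariances in `P` carry that information back to the individual pixel estimates.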

Robust parameter design for Wiener-based binaural noise reduction methods in hearing aids

Apr 19, 2021

Abstract: This work presents a method for designing the weighting parameter required by Wiener-based binaural noise reduction methods. This parameter establishes the desired trade-off between noise reduction and binaural cue preservation in hearing aid applications. The proposed strategy was specifically derived for the preservation of the interaural level difference, interaural time difference and interaural coherence binaural cues. The weighting parameter is defined as a function of the average input noise power at the microphones, providing robustness against joint changes in noise and speech power (Lombard effect), as well as against signal-to-noise ratio (SNR) variations. A theoretical framework, based on the mathematical definition of the homogeneity degree, is presented and applied to a generic augmented Wiener-based cost function. The theoretical insights obtained are supported by computational simulations and psychoacoustic experiments using the multichannel Wiener filter with interaural transfer function preservation technique (MWF-ITF) as a case study. Statistical analysis indicates that the proposed dynamic structure for the weighting parameter and the design method for its fixed part provide significant robustness against changes in the original binaural cues of both speech and residual noise, at the cost of a small decrease in noise reduction performance compared to a purely fixed weighting parameter.
