Rhianna Lee

Long-Term, in-the-Wild Study of Feedback about Speech Intelligibility for K-12 Students Attending Class via a Telepresence Robot

Aug 24, 2021

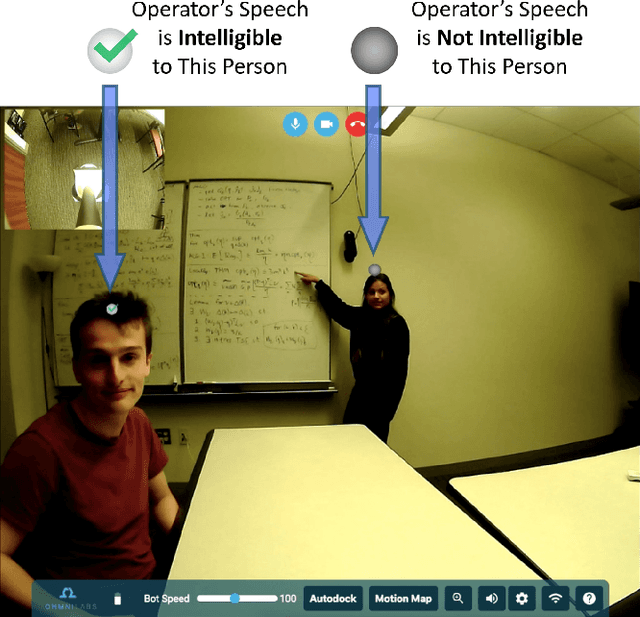

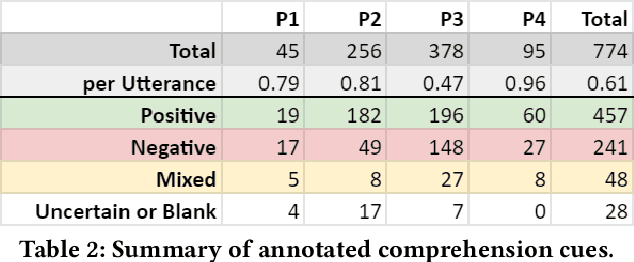

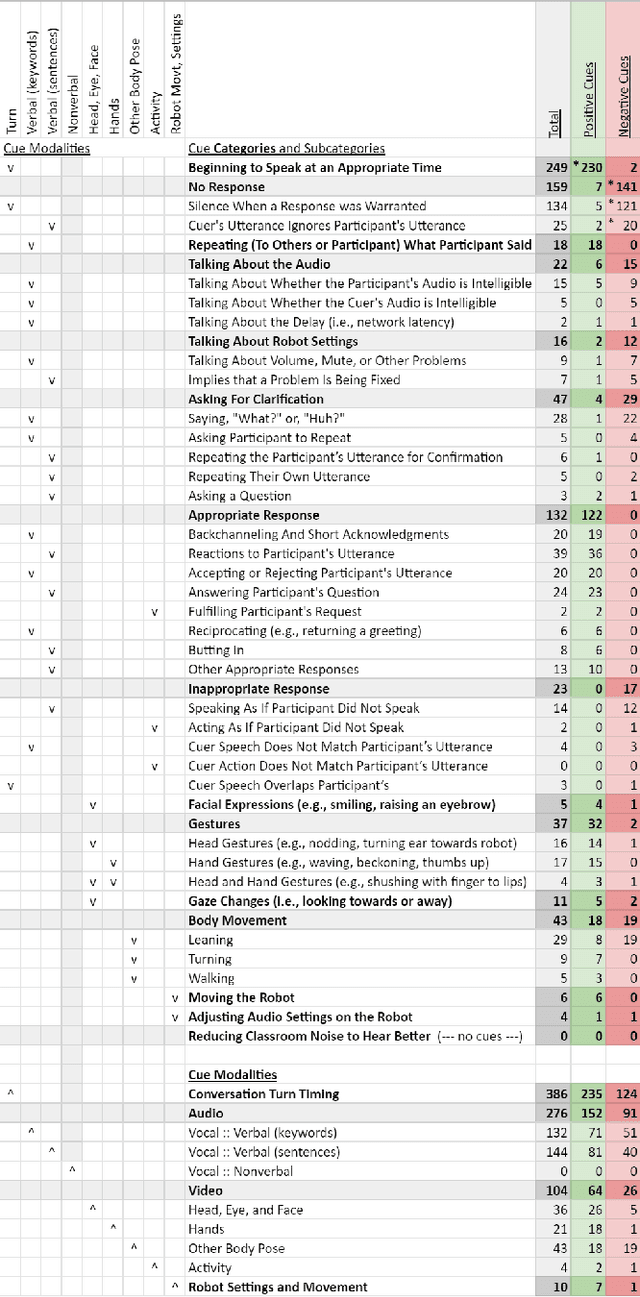

Abstract: Telepresence robots offer presence, embodiment, and mobility to remote users, making them promising options for homebound K-12 students. It is difficult, however, for robot operators to know how well they are being heard in remote and noisy classroom environments. One solution is to estimate the operator's speech intelligibility to their listeners in order to provide feedback about it to the operator. This work contributes the first evaluation of a speech intelligibility feedback system for homebound K-12 students attending class remotely. In our four long-term, in-the-wild deployments, we found that students speak at different volumes instead of adjusting the robot's volume, and that detailed audio calibration and network latency feedback are needed. We also contribute the first findings about the types and frequencies of multimodal comprehension cues given to homebound students by listeners in the classroom. By annotating and categorizing over 700 cues, we found that the most common cue modalities were conversation turn timing and verbal content. Conversation turn timing cues occurred more frequently overall, whereas verbal content cues contained more information and might be the most frequent modality for negative cues. Our work provides recommendations for telepresence systems that could intervene to ensure that remote users are being heard.

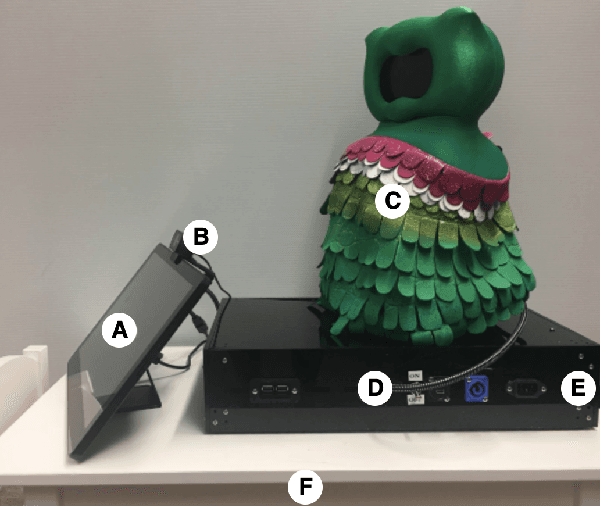

Designing a Socially Assistive Robot for Long-Term In-Home Use for Children with Autism Spectrum Disorders

Jan 22, 2020

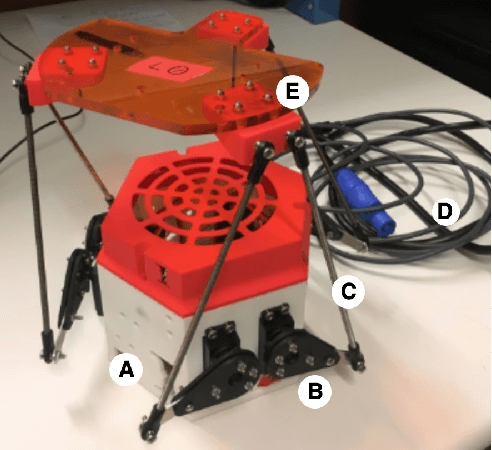

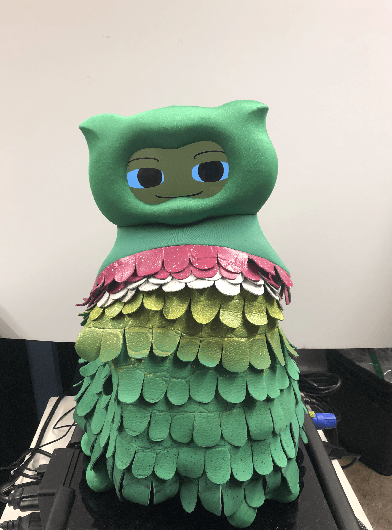

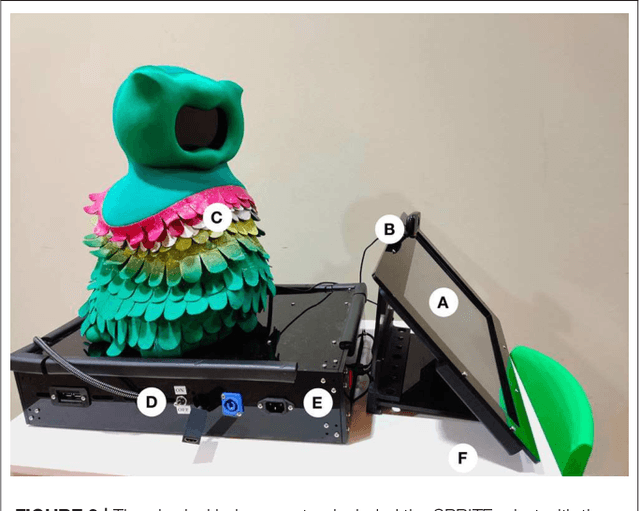

Abstract: Socially assistive robotics (SAR) research has shown great potential for supplementing and augmenting therapy for children with autism spectrum disorders (ASD). However, the vast majority of SAR research has been limited to short-term studies in highly controlled environments. The design and development of a SAR system capable of interacting autonomously *in situ* for long periods of time involves many engineering and computing challenges. This paper presents the design of a fully autonomous SAR system for long-term, in-home use with children with ASD. We address design decisions based on robustness and adaptability needs, discuss the development of the robot's character and interactions, and provide insights from the month-long, in-home data collections with children with ASD. This work contributes to a larger research program that is exploring how SAR can be used for enhancing the social and cognitive development of children with ASD.

Long-Term Personalization of an In-Home Socially Assistive Robot for Children With Autism Spectrum Disorders

Nov 18, 2019

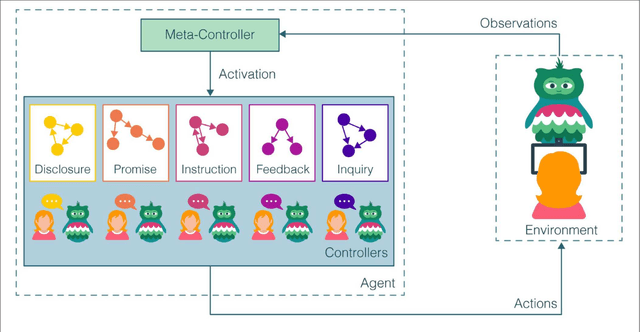

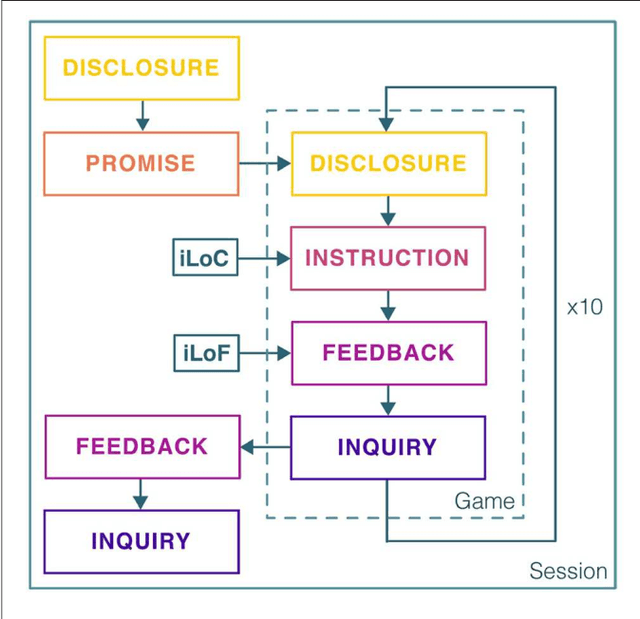

Abstract: Socially assistive robots (SAR) have shown great potential to augment the social and educational development of children with autism spectrum disorders (ASD). As SAR continues to substantiate itself as an effective enhancement to human intervention, researchers have sought to study its longitudinal impacts in real-world environments, including the home. Computational personalization stands out as a central computational challenge as it is necessary to enable SAR systems to adapt to each child's unique and changing needs. Toward that end, we formalized personalization as a hierarchical human-robot learning framework (hHRL) consisting of five controllers (disclosure, promise, instruction, feedback, and inquiry) mediated by a meta-controller that utilized reinforcement learning to personalize instruction challenge levels and robot feedback based on each user's unique learning patterns. We instantiated and evaluated the approach in a study with 17 children with ASD, aged 3 to 7 years old, over month-long interventions in their homes. Our findings demonstrate that the fully autonomous SAR system was able to personalize its instruction and feedback over time to each child's proficiency. As a result, every child participant showed improvements in targeted skills and long-term retention of intervention content. Moreover, all child users were engaged for a majority of the intervention, and their families reported the SAR system to be useful and adaptable. In summary, our results show that autonomous, personalized SAR interventions are both feasible and effective in providing long-term in-home developmental support for children with diverse learning needs.

* 30 pages, 10 figures, Frontiers in Robotics and AI journal
