Renlang Huang

A Consistency-Aware Spot-Guided Transformer for Versatile and Hierarchical Point Cloud Registration

Oct 14, 2024

Abstract: Deep learning-based feature matching has shown great superiority for point cloud registration in the absence of pose priors. Although coarse-to-fine matching approaches are prevalent, the coarse matching of existing methods is typically sparse and loose and does not consider geometric consistency, so the subsequent fine matching has to rely on ineffective optimal transport and hypothesis-and-selection methods to enforce consistency. As a result, these methods are neither efficient nor scalable for real-time applications such as odometry in robotics. To address these issues, we design a consistency-aware spot-guided Transformer (CAST), which incorporates a spot-guided cross-attention module to avoid interfering with irrelevant areas, and a consistency-aware self-attention module to enhance matching capabilities with geometrically consistent correspondences. Furthermore, a lightweight fine matching module for both sparse keypoints and dense features can estimate the transformation accurately. Extensive experiments on both outdoor LiDAR point cloud datasets and indoor RGBD point cloud datasets demonstrate that our method achieves state-of-the-art accuracy, efficiency, and robustness.
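To illustrate what "geometrically consistent correspondences" can mean in practice, the sketch below uses the fact that a rigid transformation preserves pairwise distances: two correspondences are compatible when the distance between their source points matches the distance between their target points. This is a generic check, not the CAST attention module; the function names, the threshold `tau`, and the toy data are assumptions made for illustration.

```python
import math
from itertools import combinations

def compatible(c1, c2, tau=0.1):
    """Return True if two correspondences preserve pairwise distance within tau.

    Each correspondence is a (source_point, target_point) pair of 3D tuples.
    tau is a hypothetical tolerance in the same units as the points.
    """
    (p1, q1), (p2, q2) = c1, c2
    return abs(math.dist(p1, p2) - math.dist(q1, q2)) <= tau

def consistency_scores(corrs, tau=0.1):
    """Count, for each correspondence, how many others it is compatible with."""
    scores = [0] * len(corrs)
    for i, j in combinations(range(len(corrs)), 2):
        if compatible(corrs[i], corrs[j], tau):
            scores[i] += 1
            scores[j] += 1
    return scores

# Toy data (assumed): the target is the source translated by (1, 0, 0),
# plus one deliberately wrong correspondence appended at the end.
source = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0)]
corrs = [(p, (p[0] + 1.0, p[1], p[2])) for p in source]
corrs.append(((2.0, 2.0, 2.0), (9.0, 9.0, 9.0)))

scores = consistency_scores(corrs)
print(scores)
```

In this toy case the four correct correspondences support one another while the appended outlier receives no support, so a low score is a simple signal for rejecting a match.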

KDD-LOAM: Jointly Learned Keypoint Detector and Descriptors Assisted LiDAR Odometry and Mapping

Sep 27, 2023

Abstract: Sparse keypoint matching based on distinct 3D feature representations can improve the efficiency and robustness of point cloud registration. Existing learning-based 3D descriptors and keypoint detectors are either independent or loosely coupled, so they cannot fully adapt to each other. In this work, we propose a tightly coupled keypoint detector and descriptor (TCKDD) based on a multi-task fully convolutional network with a probabilistic detection loss. In particular, this self-supervised detection loss fully adapts the keypoint detector to any jointly learned descriptors and benefits the self-supervised learning of descriptors. Extensive experiments on both indoor and outdoor datasets show that our TCKDD achieves state-of-the-art performance in point cloud registration. Furthermore, we design a keypoint detector and descriptors-assisted LiDAR odometry and mapping framework (KDD-LOAM), whose real-time odometry relies on RANSAC over keypoint descriptor matches. The sparse keypoints are further used for efficient scan-to-map registration and mapping. Experiments on the KITTI dataset demonstrate that KDD-LOAM significantly surpasses LOAM and shows competitive performance in odometry.

Efficient Object Manipulation to an Arbitrary Goal Pose: Learning-based Anytime Prioritized Planning

Sep 22, 2021

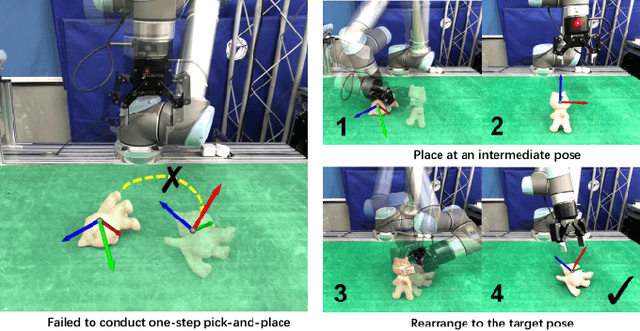

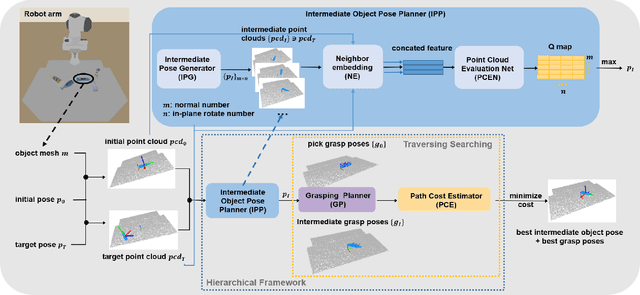

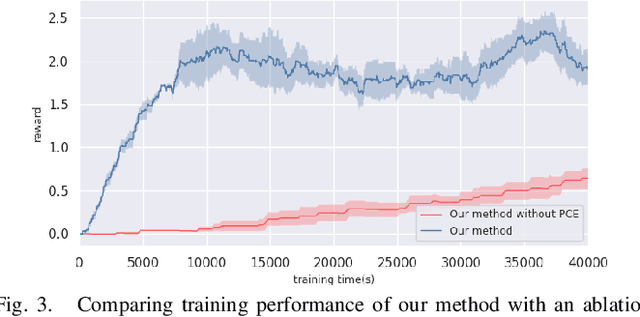

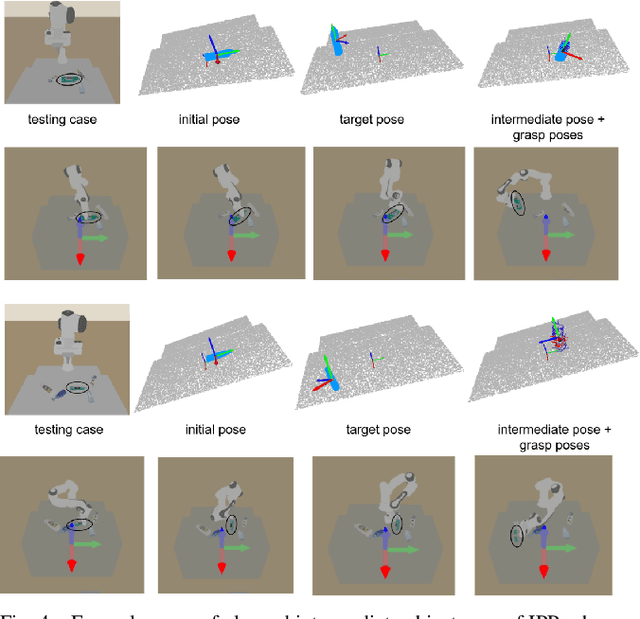

Abstract: We focus on the task of object manipulation to an arbitrary goal pose, in which a robot must pick an assigned object and place it at the goal position with a specific pose. However, because the execution space of a manipulator with a gripper is limited, a single pick, move, and release may fail, in which case an intermediate object pose is required as a transition. In this paper, we propose a learning-driven anytime prioritized search-based solver to find a feasible solution with low path cost in a short time. The problem is formulated as a hierarchical learning problem, with the high level aiming to find an intermediate object pose and the low level planning the manipulator path between adjacent grasps. We train a path cost estimator offline to predict approximate path planning costs, which serve as pseudo rewards that allow the high-level planner to be pre-trained without interacting with the simulator. To deal with the distribution mismatch between the cost net and the actual execution cost space, a refinement training stage is conducted with simulation interaction. A series of experiments carried out in simulation and in the real world indicates that our system achieves better performance in the object manipulation task with less time and lower cost.
