René Wagner

Linear Transformer Topological Masking with Graph Random Features

Oct 04, 2024

Abstract: When training transformers on graph-structured data, incorporating information about the underlying topology is crucial for good performance. Topological masking, a type of relative position encoding, achieves this by upweighting or downweighting attention depending on the relationship between the query and keys in a graph. In this paper, we propose to parameterise topological masks as a learnable function of a weighted adjacency matrix -- a novel, flexible approach which incorporates a strong structural inductive bias. By approximating this mask with graph random features (for which we prove the first known concentration bounds), we show how this can be made fully compatible with linear attention, preserving $\mathcal{O}(N)$ time and space complexity with respect to the number of input tokens. The fastest previous alternative was $\mathcal{O}(N \log N)$ and only suitable for specific graphs. Our efficient masking algorithms provide strong performance gains for tasks on image and point cloud data, including with $>30$k nodes.
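The compatibility between a factorised mask and linear attention can be illustrated with a minimal sketch. It is not the paper's algorithm: it assumes the mask is given as a product `phi @ phi.T` of a hypothetical dense feature matrix `phi`, that `q` and `k` are queries and keys already passed through a linear-attention feature map, and it skips the attention normalisation. How graph random features produce `phi` is not covered here. All function names are illustrative.

```python
def matmul(a, b):
    """Plain-Python matrix product of two lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def transpose(a):
    return [list(col) for col in zip(*a)]


def row_kron(a, b):
    """Row-wise Kronecker product: row i is the flattened outer product of a[i] and b[i]."""
    return [[x * y for x in ra for y in rb] for ra, rb in zip(a, b)]


def masked_attention_quadratic(q, k, v, phi):
    """Reference version: builds the N x N mask and score matrices explicitly."""
    scores = matmul(q, transpose(k))
    mask = matmul(phi, transpose(phi))
    weighted = [[s * m for s, m in zip(rs, rm)] for rs, rm in zip(scores, mask)]
    return matmul(weighted, v)


def masked_attention_linear(q, k, v, phi):
    """Same output without any N x N matrix.

    Uses (phi phi^T) * (q k^T) = row_kron(phi, q) row_kron(phi, k)^T elementwise,
    so the keys and values can be summarised first.
    """
    q_aug = row_kron(phi, q)
    k_aug = row_kron(phi, k)
    kv = matmul(transpose(k_aug), v)  # size independent of N
    return matmul(q_aug, kv)


# Toy inputs (assumed values, N = 3 tokens).
q = [[1.0, 2.0], [0.5, 1.0], [2.0, 0.0]]
k = [[0.5, 1.5], [1.0, 1.0], [0.0, 2.0]]
v = [[1.0], [2.0], [3.0]]
phi = [[1.0, 0.0], [0.5, 0.5], [0.0, 1.0]]

slow = masked_attention_quadratic(q, k, v, phi)
fast = masked_attention_linear(q, k, v, phi)
```

In this dense sketch the linear version costs a factor equal to the width of `phi` more than unmasked linear attention, which is why the sparsity of the features matters in practice.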

Integrating Generic Sensor Fusion Algorithms with Sound State Representations through Encapsulation of Manifolds

Jul 06, 2011

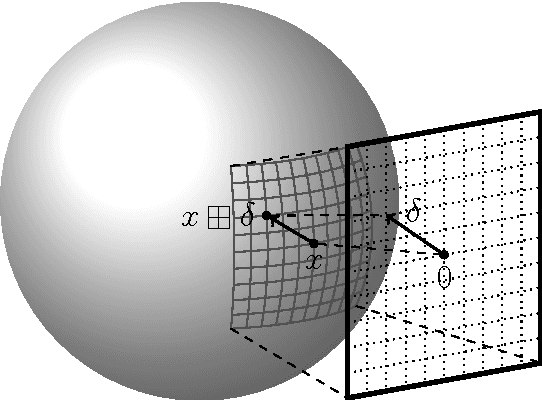

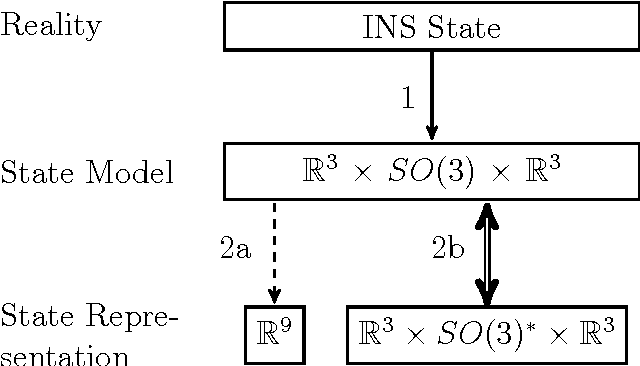

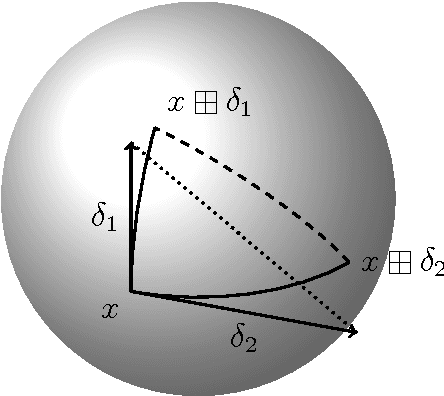

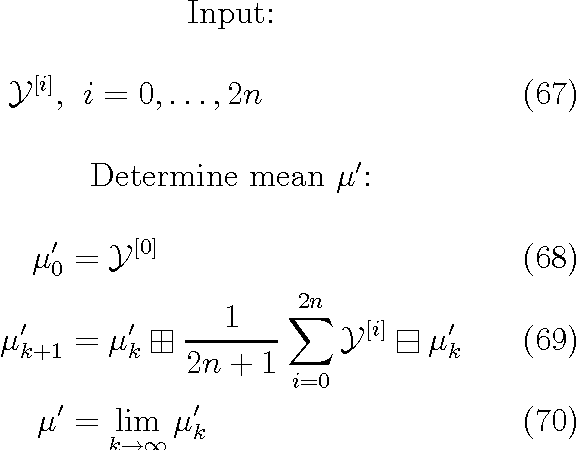

Abstract: Common estimation algorithms, such as least squares estimation or the Kalman filter, operate on a state in a state space S that is represented as a real-valued vector. However, for many quantities, most notably orientations in 3D, S is not a vector space, but a so-called manifold, i.e. it behaves like a vector space locally but has a more complex global topological structure. For integrating these quantities, several ad-hoc approaches have been proposed. Here, we present a principled solution to this problem where the structure of the manifold S is encapsulated by two operators, state displacement [+]: S x R^n --> S and its inverse [-]: S x S --> R^n. These operators provide a local vector-space view δ --> x [+] δ around a given state x. Generic estimation algorithms can then work on the manifold S mainly by replacing +/- with [+]/[-] where appropriate. We analyze these operators axiomatically, and demonstrate their use in least-squares estimation and the Unscented Kalman Filter. Moreover, we exploit the idea of encapsulation from a software engineering perspective in the Manifold Toolkit, where the [+]/[-] operators mediate between a "flat-vector" view for the generic algorithm and a "named-members" view for the problem specific functions.
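As a rough illustration of the [+]/[-] idea, the sketch below uses the simplest non-vector state space, a single angle on the circle, rather than 3D orientations. The names `boxplus`, `boxminus`, `wrap`, and `mean_on_circle` are assumptions made for this example and are not taken from the Manifold Toolkit. The averaging routine is a generic algorithm in which + and - have simply been replaced by the two operators.

```python
import math


def wrap(angle):
    """Map an angle to the interval [-pi, pi)."""
    return (angle + math.pi) % (2.0 * math.pi) - math.pi


def boxplus(x, delta):
    """x [+] delta: displace the state x by a small vector delta, staying on the circle."""
    return wrap(x + delta)


def boxminus(y, x):
    """y [-] x: the displacement delta such that x [+] delta equals y."""
    return wrap(y - x)


def mean_on_circle(angles, iterations=10):
    """Iterative mean: average the local displacements, then apply them with [+]."""
    mean = angles[0]
    for _ in range(iterations):
        step = sum(boxminus(a, mean) for a in angles) / len(angles)
        mean = boxplus(mean, step)
    return mean


# Two angles on either side of the +/- pi wrap-around.
angles = [3.1, -3.1]
naive = sum(angles) / len(angles)   # plain vector mean, points the wrong way
manifold = mean_on_circle(angles)   # lies at the wrap-around, i.e. at +/- pi
```

The plain average lands at 0, on the opposite side of the circle from both inputs, while the operator-based version stays near the wrap-around point where the inputs actually are.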
