Rasmus Nyholm Jørgensen

Ground vehicle mapping of fields using LiDAR to enable prediction of crop biomass

May 03, 2018

Abstract: Mapping field environments into point clouds using a 3D LiDAR could become a new approach for online estimation of crop biomass in the field. Crop biomass in agriculture is expected to correlate closely with canopy height. The work presented in this paper contributes to the mapping and textural analysis of agricultural fields. Crop and environmental state information can be used to tailor treatments to the specific site. This paper presents the current results from our ground vehicle LiDAR mapping systems for broad acre crop fields. The proposed vehicle system and method facilitate LiDAR recordings in an experimental winter wheat field. LiDAR data are combined with data from Global Navigation Satellite System (GNSS) and Inertial Measurement Unit (IMU) sensors to map the environment into point clouds. The sensor data from the vehicle are recorded, mapped, and analyzed using the functionalities of the Robot Operating System (ROS) and the Point Cloud Library (PCL). In this experiment, winter wheat (Triticum aestivum L.) in field plots was mapped as 3D point clouds with centimeter-level point density. The purpose of the experiment was to create 3D LiDAR point clouds of the field plots, enabling canopy volume and textural analysis to discriminate between crop treatments. Estimated crop volumes ranging from 3500 to 6200 m³ per hectare are correlated with manually collected samples of cut biomass from the experimental field.
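The abstract reports canopy volume in m³ per hectare but does not give the computation. One simple way to get such a figure from a georeferenced point cloud is to grid the points, take each cell's highest return as the canopy height, and scale the mean height to one hectare (a mean height of 0.5 m corresponds to 5000 m³/ha). The sketch below assumes a flat ground plane at `ground_z` and a hypothetical `cell_size`; it is an illustration, not the authors' PCL-based pipeline.

```python
import math
from typing import Dict, Iterable, Tuple

def canopy_volume_per_hectare(points: Iterable[Tuple[float, float, float]],
                              cell_size: float = 0.05,
                              ground_z: float = 0.0) -> float:
    """Estimate canopy volume (m^3/ha) from (x, y, z) points given in metres.

    Assumptions (hypothetical, for illustration): the ground is a flat plane
    at ground_z, and a cell's canopy height is its highest point above it.
    """
    heights: Dict[Tuple[int, int], float] = {}
    for x, y, z in points:
        # Assign each point to a square grid cell
        key = (math.floor(x / cell_size), math.floor(y / cell_size))
        h = max(z - ground_z, 0.0)  # clip points below the assumed ground
        heights[key] = max(heights.get(key, 0.0), h)
    if not heights:
        return 0.0
    cell_area = cell_size * cell_size
    volume = sum(heights.values()) * cell_area      # m^3 over occupied cells
    covered_area = len(heights) * cell_area          # m^2 of occupied cells
    return volume / covered_area * 10_000.0          # scale to one hectare
```

Normalising by the occupied area keeps the estimate independent of plot size; in practice a fitted ground model would replace the constant `ground_z`.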

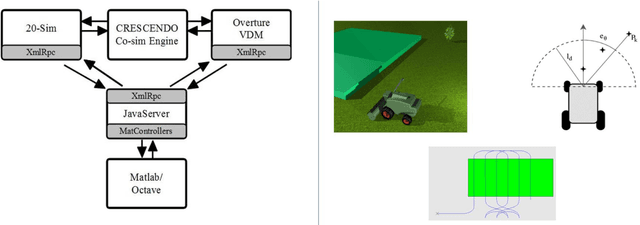

Robotic design choice overview using co-simulation

Feb 17, 2018

Abstract: Rapid robotic system development creates a demand for multidisciplinary methods and tools to explore and compare design alternatives. In this paper, we present collaborative modeling that combines discrete-event models of controller software with continuous-time models of physical robot components. The presented co-modeling method uses VDM for discrete-event modeling and 20-sim for continuous-time modeling. The collaborative modeling method is illustrated with a concrete example: the collaborative model development of a mobile robot animal feeding system. Simulations are used to evaluate the robot model's output response in relation to operational demands. The simulation results give the developers an overview of the impact of each solution instance in the chosen design space. Based on this overview, the developers can select candidates that are deemed viable to be deployed and tested on an actual physical robot.
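The core idea of co-simulation, a discrete-event controller model stepped at sampling instants while a continuous-time plant model is integrated in small steps, can be sketched in a few lines. This is not VDM or 20-sim; the first-order speed plant, its parameters, and the proportional controller are hypothetical stand-ins chosen only to show how sweeping a design parameter yields an overview of the design space.

```python
from dataclasses import dataclass

@dataclass
class Plant:
    """Continuous-time model (hypothetical): tau * dv/dt = -v + gain * u."""
    tau: float = 0.5
    gain: float = 2.0
    v: float = 0.0

    def step(self, u: float, dt: float) -> None:
        # Forward-Euler integration over one small continuous-time step
        self.v += dt * (-self.v + self.gain * u) / self.tau

@dataclass
class Controller:
    """Discrete-event model (hypothetical): proportional speed controller."""
    kp: float
    setpoint: float
    u: float = 0.0

    def on_sample(self, measured: float) -> float:
        self.u = self.kp * (self.setpoint - measured)
        return self.u

def cosimulate(kp: float, setpoint: float = 1.0, t_end: float = 5.0,
               dt: float = 0.001, period: float = 0.05) -> float:
    """Run one co-simulation and return the final plant speed."""
    plant = Plant()
    ctrl = Controller(kp=kp, setpoint=setpoint)
    steps_per_sample = round(period / dt)
    u = 0.0
    for k in range(round(t_end / dt)):
        if k % steps_per_sample == 0:
            # Discrete event: controller samples the plant and updates its output
            u = ctrl.on_sample(plant.v)
        # Plant advances with the held controller output (zero-order hold)
        plant.step(u, dt)
    return plant.v
```

A design sweep such as `for kp in (0.5, 1.0, 2.0, 4.0): print(kp, 1.0 - cosimulate(kp))` shows the steady-state error shrinking as the gain grows, which is the kind of per-candidate overview the abstract describes.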

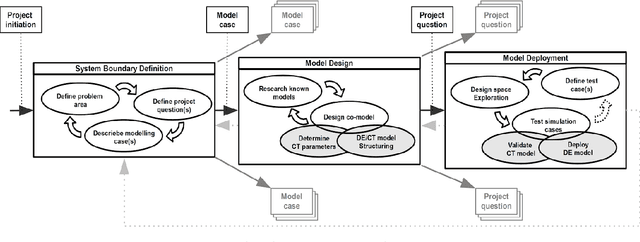

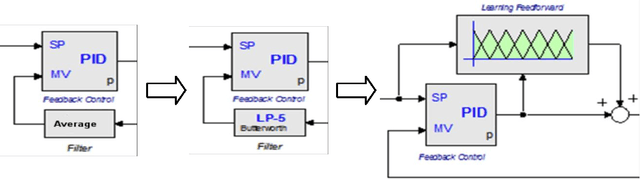

Collaborative model based design of automated and robotic agricultural vehicles in the Crescendo Tool

Feb 17, 2018

Abstract: This paper describes a collaborative modelling approach to the design of automated and robotic agricultural vehicles. The Crescendo technology allows engineers from different disciplines to collaborate and produce system models. The combined models are called co-models, and their execution is called co-simulation. To support future development efforts, a template library of different vehicle and controller types is provided. This paper describes a methodology for developing co-models from initial problem definition to deployment of the actual system. We illustrate the development methodology with two development cases from the agricultural domain. The first case relates to a speed controller problem encountered on a differentially driven vehicle, where we iterate through different candidate solutions and arrive at an adaptive controller based on a combination of classical control and learning feedforward. The second case combines a human control interface with co-simulation of an agricultural robotic operation to illustrate collaborative development.
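To illustrate what "classical control plus learning feedforward" means, the sketch below combines a proportional feedback term with a feedforward value learned per reference-speed bin, updated from the feedback effort so that feedback is gradually driven toward zero. The lookup-table learning rule, the plant with an unknown input offset, and all parameter values are assumptions for illustration; the paper's actual controller may use a different function approximator.

```python
from collections import defaultdict

class LearningFeedforwardController:
    """P feedback plus a learned feedforward table (hypothetical sketch)."""

    def __init__(self, kp: float, learning_rate: float, bin_width: float = 0.1):
        self.kp = kp
        self.lr = learning_rate
        self.bin_width = bin_width
        self.table = defaultdict(float)  # feedforward value per reference bin

    def command(self, reference: float, measured: float) -> float:
        u_fb = self.kp * (reference - measured)   # classical feedback part
        key = round(reference / self.bin_width)
        u_ff = self.table[key]                    # learned feedforward part
        # Learning rule: move feedforward in the direction of the feedback effort
        self.table[key] += self.lr * u_fb
        return u_ff + u_fb

def simulate(controller, reference: float = 1.0, steps: int = 2000,
             a: float = 0.9, gain: float = 2.0, offset: float = 0.3) -> float:
    """Discrete first-order speed plant with an unknown input offset
    (e.g. friction); all parameters are hypothetical."""
    v = 0.0
    for _ in range(steps):
        u = controller.command(reference, v)
        v = a * v + (1 - a) * gain * (u - offset)
    return v
```

With `learning_rate=0.0` the loop is pure proportional control and settles well below the reference; with a small positive rate the feedforward absorbs the offset and the speed converges to the reference.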

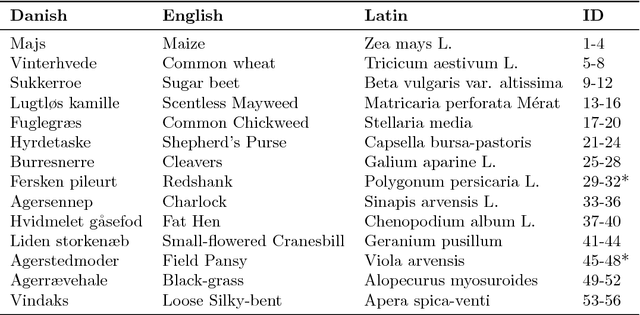

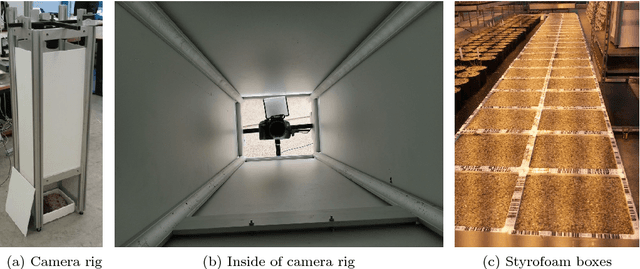

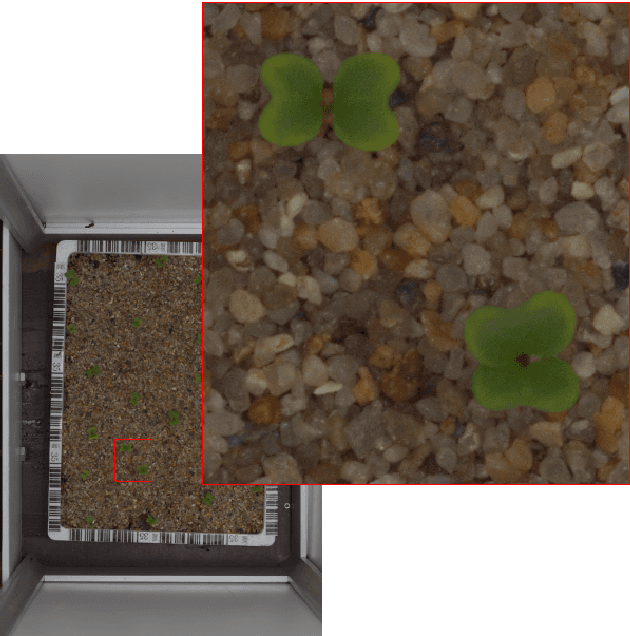

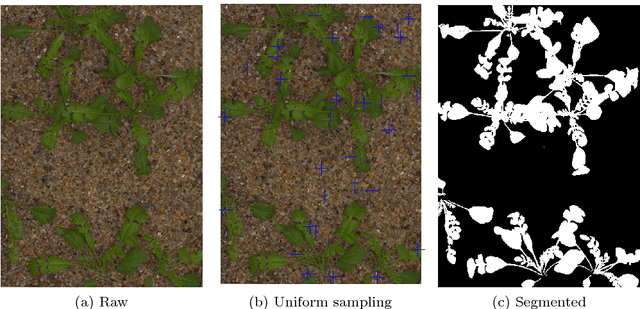

A Public Image Database for Benchmark of Plant Seedling Classification Algorithms

Nov 15, 2017

Abstract: A database of images of approximately 960 unique plants belonging to 12 species at several growth stages is made publicly available. It comprises annotated RGB images with a physical resolution of roughly 10 pixels per mm. To standardise the evaluation of classification results obtained with the database, a benchmark based on $f_{1}$ scores is proposed. The dataset is available at https://vision.eng.au.dk/plant-seedlings-dataset
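For reference, the $f_{1}$ score of a class is $2TP/(2TP + FP + FN)$, the harmonic mean of precision and recall. The sketch below computes it per class and averages the classes with equal weight (macro averaging); whether the proposed benchmark uses macro, micro, or another averaging is an assumption here, and the labels in the usage note are hypothetical.

```python
from collections import Counter
from typing import Dict, Sequence

def per_class_f1(y_true: Sequence[str], y_pred: Sequence[str]) -> Dict[str, float]:
    """F1 score for every label seen in y_true or y_pred."""
    labels = sorted(set(y_true) | set(y_pred))
    tp, fp, fn = Counter(), Counter(), Counter()
    for t, p in zip(y_true, y_pred):
        if t == p:
            tp[t] += 1
        else:
            fp[p] += 1  # predicted p, but it was wrong
            fn[t] += 1  # true class t was missed
    scores = {}
    for c in labels:
        denom = 2 * tp[c] + fp[c] + fn[c]
        scores[c] = 2 * tp[c] / denom if denom else 0.0
    return scores

def macro_f1(y_true: Sequence[str], y_pred: Sequence[str]) -> float:
    """Unweighted mean of per-class F1 (assumed averaging scheme)."""
    scores = per_class_f1(y_true, y_pred)
    return sum(scores.values()) / len(scores) if scores else 0.0
```

For example, `per_class_f1(["A", "A", "B", "B"], ["A", "B", "B", "B"])` gives 2/3 for class A and 0.8 for class B.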

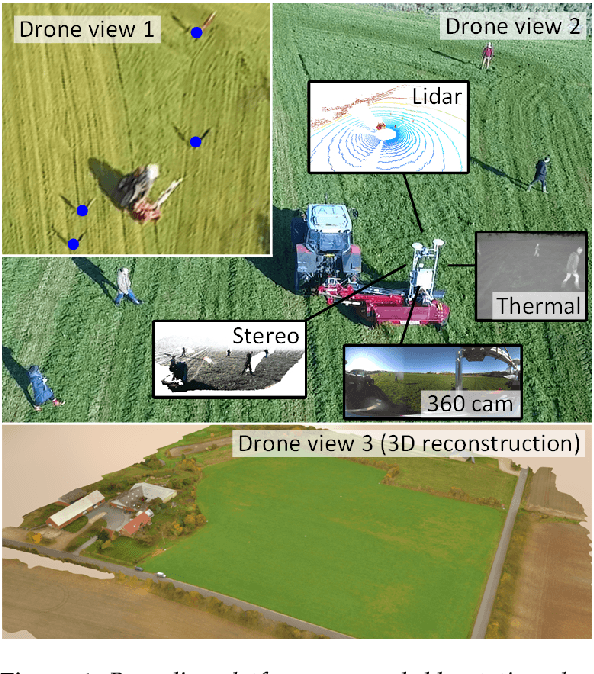

FieldSAFE: Dataset for Obstacle Detection in Agriculture

Sep 11, 2017

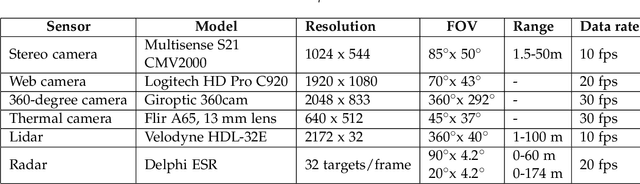

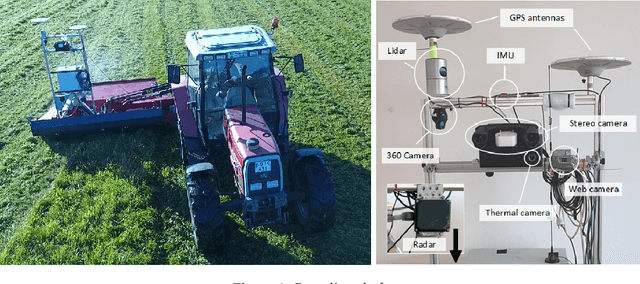

Abstract: In this paper, we present a novel multi-modal dataset for obstacle detection in agriculture. The dataset comprises approximately 2 hours of raw sensor data from a tractor-mounted sensor system in a grass mowing scenario in Denmark, October 2016. Sensing modalities include stereo camera, thermal camera, web camera, 360-degree camera, lidar, and radar, while precise localization is available from fused IMU and GNSS. Both static and moving obstacles are present, including humans, mannequin dolls, rocks, barrels, buildings, vehicles, and vegetation. All obstacles have ground truth object labels and geographic coordinates.

* Submitted to special issue of MDPI Sensors: Sensors in Agriculture
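Because obstacles carry geographic coordinates and the tractor has a GNSS/IMU position, a common first step when using such ground truth is to express each obstacle as a local east/north offset and range from the vehicle. The sketch below uses a spherical-Earth equirectangular approximation, which is adequate over field-sized distances; the function names and the example coordinates are hypothetical and not part of the dataset's tooling.

```python
import math
from typing import Tuple

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius (spherical approximation)

def local_east_north(lat_deg: float, lon_deg: float,
                     ref_lat_deg: float, ref_lon_deg: float) -> Tuple[float, float]:
    """Approximate east/north offset in metres of a point from a reference."""
    d_lat = math.radians(lat_deg - ref_lat_deg)
    d_lon = math.radians(lon_deg - ref_lon_deg)
    mean_lat = math.radians((lat_deg + ref_lat_deg) / 2.0)
    east = EARTH_RADIUS_M * d_lon * math.cos(mean_lat)  # meridians converge with latitude
    north = EARTH_RADIUS_M * d_lat
    return east, north

def obstacle_range(obstacle: Tuple[float, float], vehicle: Tuple[float, float]) -> float:
    """Horizontal distance in metres from vehicle (lat, lon) to obstacle (lat, lon)."""
    east, north = local_east_north(obstacle[0], obstacle[1], vehicle[0], vehicle[1])
    return math.hypot(east, north)
```

For instance, an obstacle 0.001° of latitude north of the vehicle lies about 111 m away. For higher accuracy, a proper geodetic (e.g. UTM or ENU on the WGS84 ellipsoid) conversion would replace the spherical approximation.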
