Rasmus Berg Palm

Severe Damage Recovery in Evolving Soft Robots through Differentiable Programming

Jun 14, 2022

Abstract:Biological systems are very robust to morphological damage, but artificial systems (robots) are currently not. In this paper we present a system based on neural cellular automata, in which locomoting robots are evolved and then given the ability to regenerate their morphology from damage through gradient-based training. Our approach thus combines the benefits of evolution to discover a wide range of different robot morphologies, with the efficiency of supervised training for robustness through differentiable update rules. The resulting neural cellular automata are able to grow virtual robots capable of regaining more than 80% of their functionality, even after severe types of morphological damage.

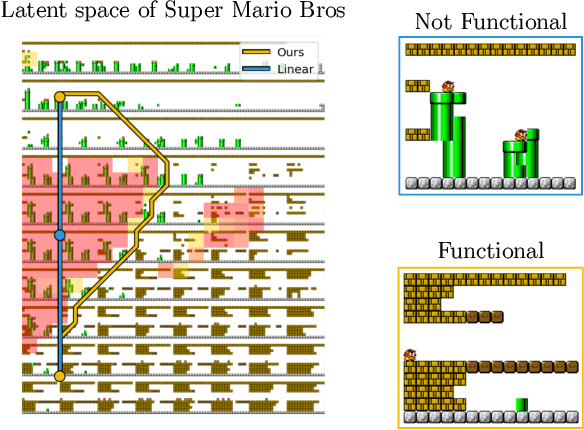

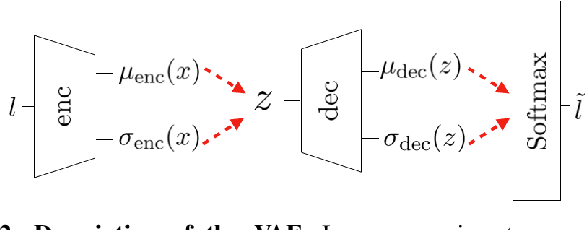

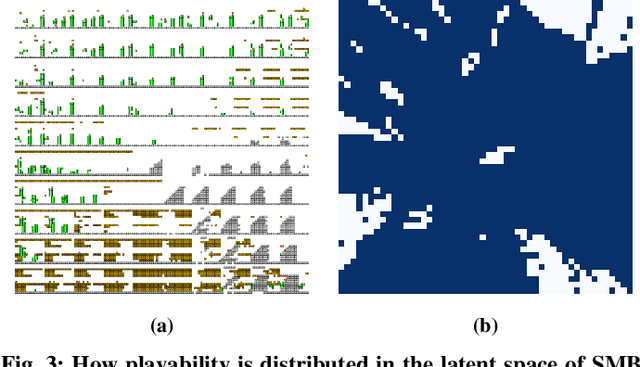

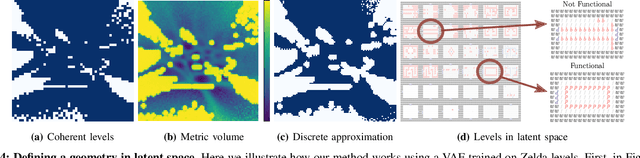

Mario Plays on a Manifold: Generating Functional Content in Latent Space through Differential Geometry

May 31, 2022

Abstract:Deep generative models can automatically create content of diverse types. However, there are no guarantees that such content will satisfy the criteria necessary to present it to end-users and be functional, e.g. the generated levels could be unsolvable or incoherent. In this paper we study this problem from a geometric perspective, and provide a method for reliable interpolation and random walks in the latent spaces of Categorical VAEs based on Riemannian geometry. We test our method with "Super Mario Bros" and "The Legend of Zelda" levels, and against simpler baselines inspired by current practice. Results show that the geometry we propose is better able to interpolate and sample, reliably staying closer to parts of the latent space that decode to playable content.

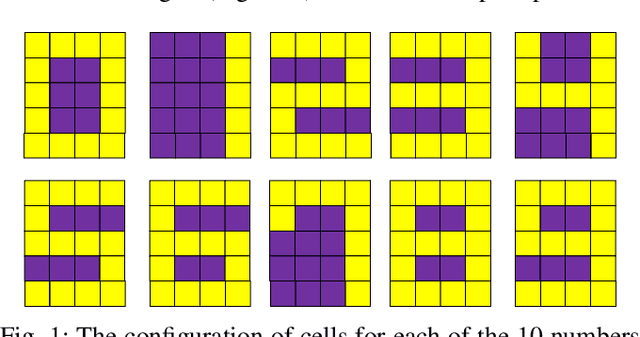

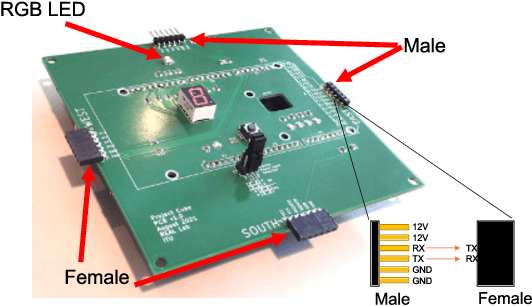

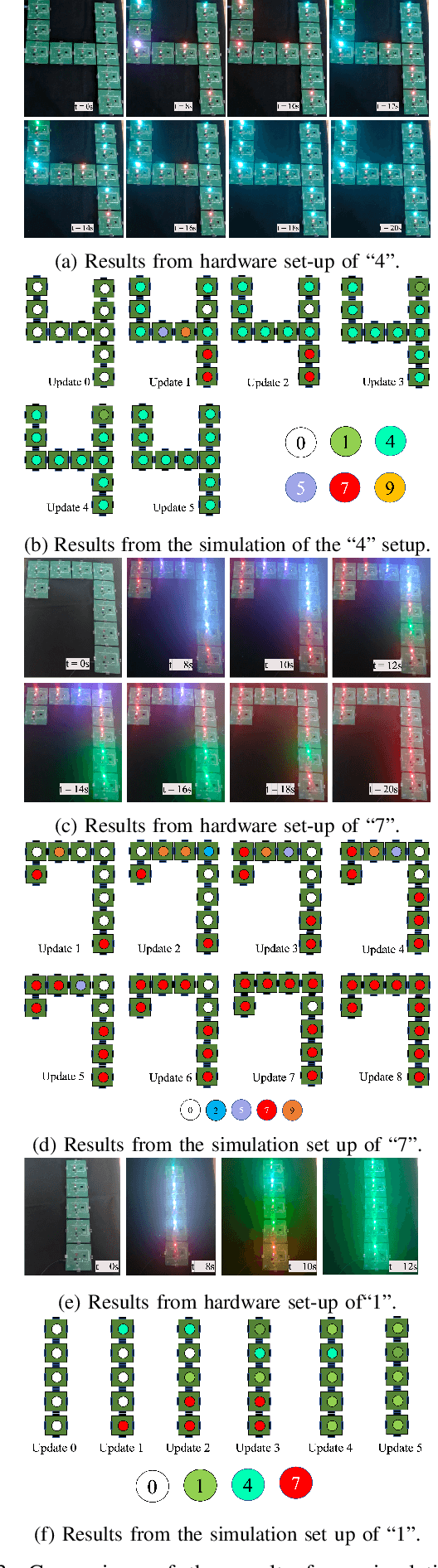

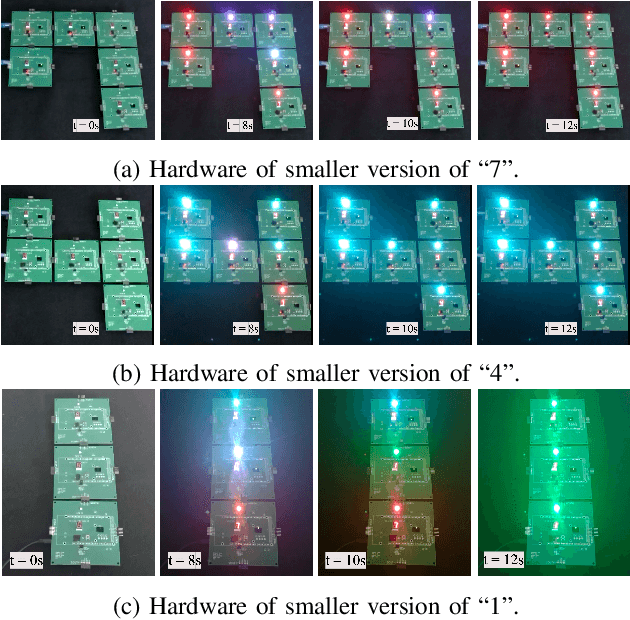

Physical Neural Cellular Automata for 2D Shape Classification

Mar 14, 2022

Abstract:Materials with the ability to self-classify their own shape have the potential to advance a wide range of engineering applications and industries. Biological systems possess the ability not only to self-reconfigure but also to self-classify themselves to determine a general shape and function. Previous work on modular robotic systems has only enabled self-recognition and self-reconfiguration into a specific target shape, missing the inherent robustness present in nature to self-classify. In this paper we therefore take advantage of recent advances in deep learning and neural cellular automata, and present a simple modular 2D robotic system that can infer its own class of shape through the local communication of its components. Furthermore, we show that our system can be successfully transferred to hardware, which opens opportunities for future self-classifying machines.

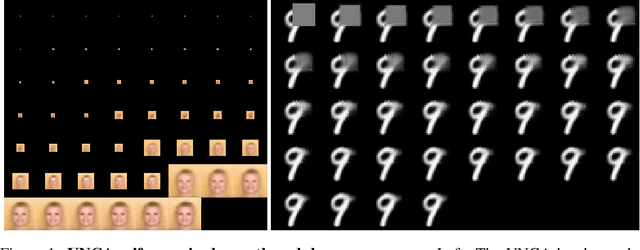

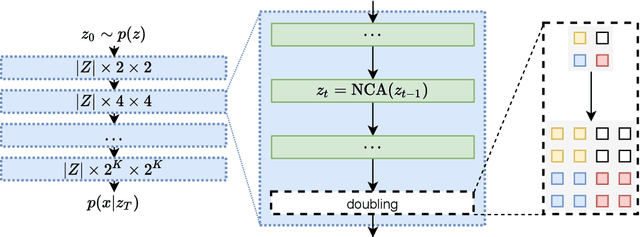

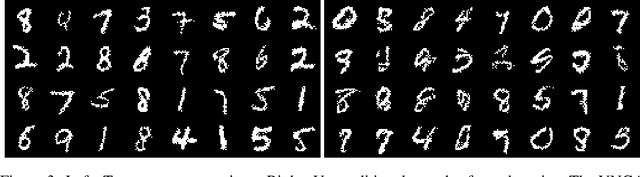

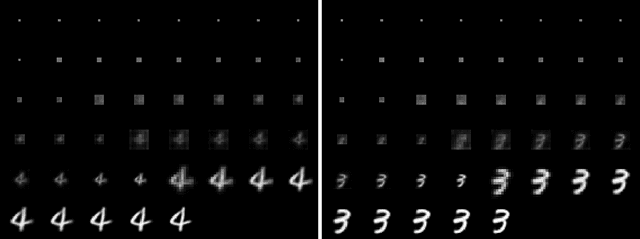

Variational Neural Cellular Automata

Feb 02, 2022

Abstract:In nature, the process of cellular growth and differentiation has led to an amazing diversity of organisms -- algae, starfish, giant sequoia, tardigrades, and orcas are all created by the same generative process. Inspired by the incredible diversity of this biological generative process, we propose a generative model, the Variational Neural Cellular Automata (VNCA), which is loosely inspired by the biological processes of cellular growth and differentiation. Unlike previous related works, the VNCA is a proper probabilistic generative model, and we evaluate it according to best practices. We find that the VNCA learns to reconstruct samples well and that despite its relatively few parameters and simple local-only communication, the VNCA can learn to generate a large variety of output from information encoded in a common vector format. While there is a significant gap to the current state-of-the-art in terms of generative modeling performance, we show that the VNCA can learn a purely self-organizing generative process of data. Additionally, we show that the VNCA can learn a distribution of stable attractors that can recover from significant damage.

Fast Game Content Adaptation Through Bayesian-based Player Modelling

May 18, 2021

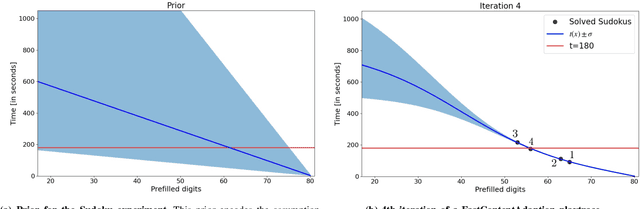

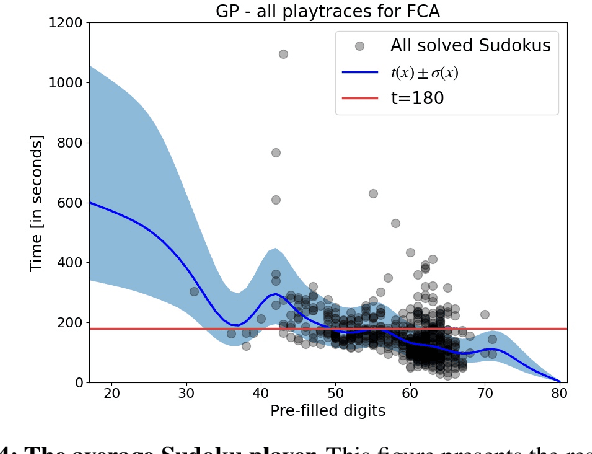

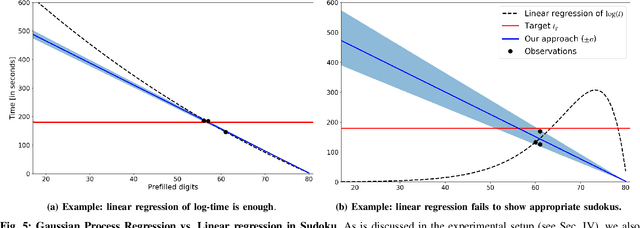

Abstract:In games (as well as many user-facing systems), adapting content to users' preferences and experience is an important challenge. This paper explores a novel method to realize this goal in the context of dynamic difficulty adjustment (DDA). Here the aim is to constantly adapt the content of a game to the skill level of the player, keeping them engaged by avoiding states that are either too difficult or too easy. Current systems for DDA rely on expensive data mining, or on hand-crafted rules designed for particular domains, and usually adapt to keep players in the flow, leaving no room for the designer to present content that is purposefully easy or difficult. This paper presents a Bayesian Optimization-based system for DDA that is agnostic to the domain and that can target particular difficulties. We deploy this framework in two different domains: the puzzle game Sudoku, and a simple Roguelike game. By modifying the acquisition function's optimization, we are reliably able to present a puzzle with a bespoke difficulty for players with different skill levels in less than five iterations (for Sudoku) and fifteen iterations (for the simple Roguelike), significantly outperforming simpler heuristics for difficulty adjustment in said domains, with the added benefit of maintaining a model of the user. These results point towards a promising alternative for content adaptation in a variety of different domains.

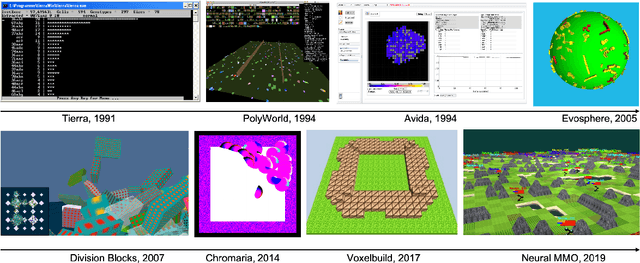

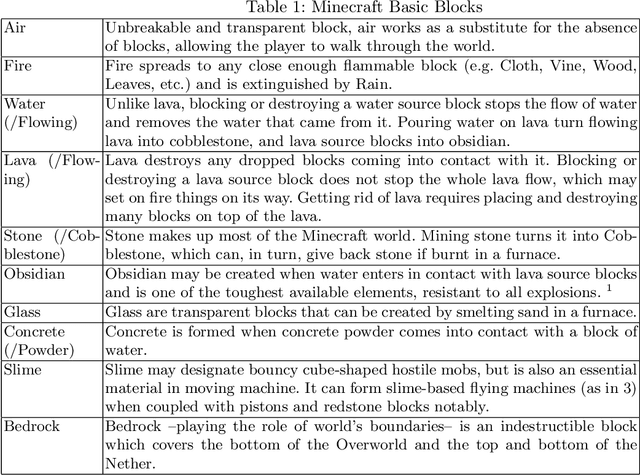

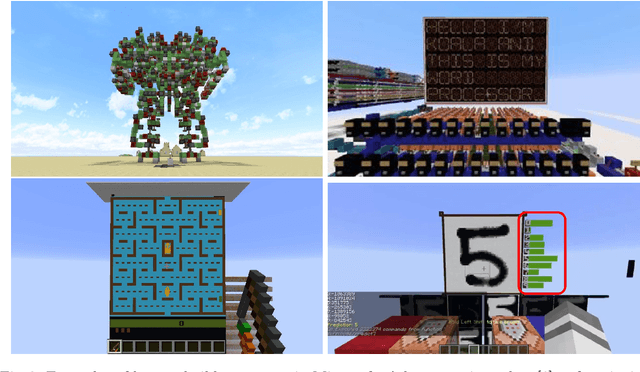

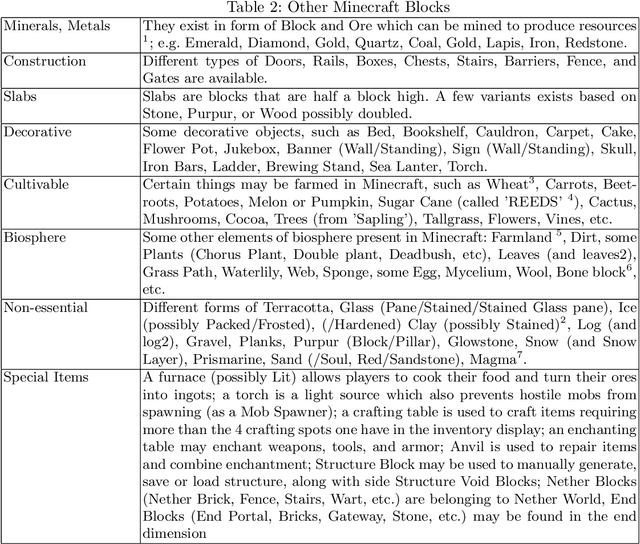

EvoCraft: A New Challenge for Open-Endedness

Dec 08, 2020

Abstract:This paper introduces EvoCraft, a framework for Minecraft designed to study open-ended algorithms. We introduce an API that provides an open-source Python interface for communicating with Minecraft to place and track blocks. In contrast to previous work in Minecraft that focused on learning to play the game, the grand challenge we pose here is to automatically search for increasingly complex artifacts in an open-ended fashion. Compared to other environments used to study open-endedness, Minecraft allows the construction of almost any kind of structure, including actuated machines with circuits and mechanical components. We present initial baseline results in evolving simple Minecraft creations through both interactive and automated evolution. While evolution succeeds when tasked to grow a structure towards a specific target, it is unable to find a solution when rewarded for creating a simple machine that moves. Thus, EvoCraft offers a challenging new environment for automated search methods (such as evolution) to find complex artifacts that we hope will spur the development of more open-ended algorithms. A Python implementation of the EvoCraft framework is available at: https://github.com/real-itu/Evocraft-py.

Evolutionary Planning in Latent Space

Nov 23, 2020

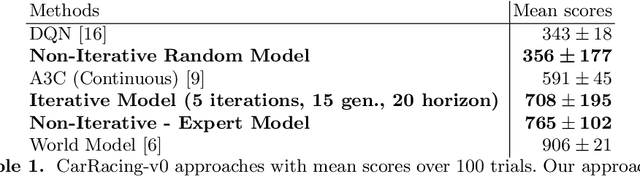

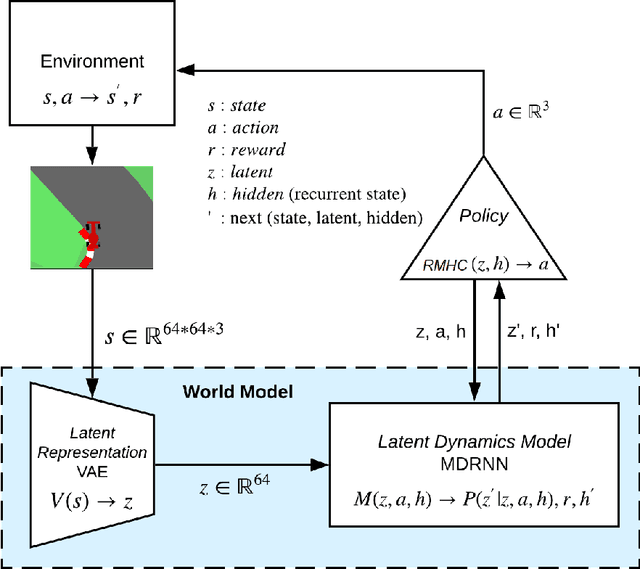

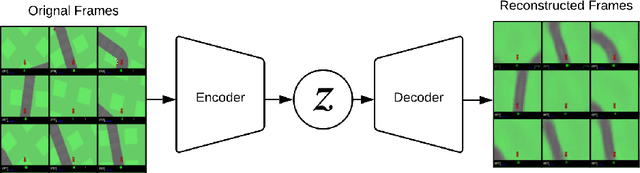

Abstract:Planning is a powerful approach to reinforcement learning with several desirable properties. However, it requires a model of the world, which is not readily available in many real-life problems. In this paper, we propose to learn a world model that enables Evolutionary Planning in Latent Space (EPLS). We use a Variational Auto Encoder (VAE) to learn a compressed latent representation of individual observations and extend a Mixture Density Recurrent Neural Network (MDRNN) to learn a stochastic, multi-modal forward model of the world that can be used for planning. We use Random Mutation Hill Climbing (RMHC) to find a sequence of actions that maximizes expected reward in this learned model of the world. We demonstrate how to build a model of the world by bootstrapping it with rollouts from a random policy and iteratively refining it with rollouts from an increasingly accurate planning policy using the learned world model. After a few iterations of this refinement, our planning agents are better than standard model-free reinforcement learning approaches, demonstrating the viability of our approach.
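As a rough illustration of the planning step named in this abstract, the sketch below runs Random Mutation Hill Climbing over a fixed-length sequence of discrete actions. It is not the authors' implementation: `rmhc_plan`, `toy_return`, and all parameters are hypothetical names, and `toy_return` merely stands in for a rollout through a learned world model.

```python
import random
from typing import Callable, List, Sequence


def rmhc_plan(
    evaluate: Callable[[Sequence[int]], float],
    horizon: int,
    n_actions: int,
    iterations: int = 200,
    seed: int = 0,
) -> List[int]:
    """Random Mutation Hill Climbing over an action sequence (illustrative)."""
    rng = random.Random(seed)
    # Start from a random action sequence.
    best = [rng.randrange(n_actions) for _ in range(horizon)]
    best_score = evaluate(best)
    for _ in range(iterations):
        candidate = list(best)
        # Mutate one randomly chosen time step to a random action.
        candidate[rng.randrange(horizon)] = rng.randrange(n_actions)
        score = evaluate(candidate)
        # Keep the mutant if it is at least as good as the incumbent.
        if score >= best_score:
            best, best_score = candidate, score
    return best


def toy_return(actions: Sequence[int]) -> float:
    # Assumption: a stand-in for the expected reward of a model rollout.
    # Here, each step that picks action 1 earns a reward of 1.
    return float(sum(1 for a in actions if a == 1))


plan = rmhc_plan(toy_return, horizon=8, n_actions=3, iterations=500)
```

In a setting like the one the abstract describes, `evaluate` would roll the candidate actions through the learned forward model and return the predicted reward; the hill-climbing loop itself would stay the same.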

Testing the Genomic Bottleneck Hypothesis in Hebbian Meta-Learning

Nov 13, 2020

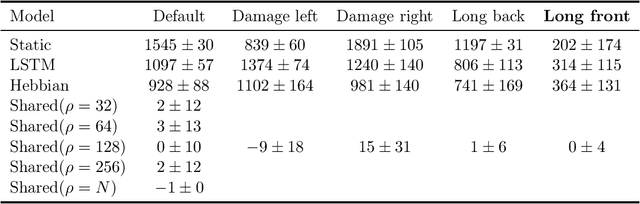

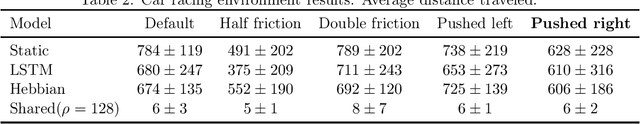

Abstract:Recent work has shown promising results using Hebbian meta-learning to solve hard reinforcement learning problems and adapt, to a limited degree, to changes in the environment. In previous works each synapse has its own learning rule. This allows each synapse to learn very specific learning rules, and we hypothesize that this limits the ability to discover generally useful Hebbian learning rules. We hypothesize that limiting the number of Hebbian learning rules through a "genomic bottleneck" can act as a regularizer leading to better generalization across changes to the environment. We test this hypothesis by decoupling the number of Hebbian learning rules from the number of synapses and systematically varying the number of Hebbian learning rules. We thoroughly explore how well these Hebbian meta-learning networks adapt to changes in their environment.
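The decoupling of rules from synapses can be pictured with a small sketch in which every synapse holds an index into a shared table of learning rules. The abstract does not specify the rule's functional form; the five-coefficient update below (learning rate plus correlation, presynaptic, postsynaptic, and bias terms) is an assumption, and `hebbian_step`, `rules`, and `rule_index` are hypothetical names.

```python
from typing import List, Tuple

# Assumed rule parameterization: (eta, A, B, C, D).
Rule = Tuple[float, float, float, float, float]


def hebbian_step(
    weights: List[List[float]],
    rule_index: List[List[int]],
    rules: List[Rule],
    pre: List[float],
    post: List[float],
) -> List[List[float]]:
    """Apply one Hebbian update; synapse (i, j) uses rules[rule_index[i][j]]."""
    updated = []
    for i, row in enumerate(weights):
        new_row = []
        for j, w in enumerate(row):
            eta, a, b, c, d = rules[rule_index[i][j]]
            delta = eta * (a * pre[j] * post[i] + b * pre[j] + c * post[i] + d)
            new_row.append(w + delta)
        updated.append(new_row)
    return updated


# Six synapses (2 postsynaptic x 3 presynaptic units) share only two rules.
rules = [(0.1, 1.0, 0.0, 0.0, 0.0), (0.0, 0.0, 0.0, 0.0, 0.0)]
rule_index = [[0, 0, 1], [1, 0, 0]]
weights = [[0.0, 0.0, 0.0], [0.0, 0.0, 0.0]]
new_weights = hebbian_step(
    weights, rule_index, rules, pre=[1.0, 2.0, 3.0], post=[1.0, -1.0]
)
```

Setting the number of rules equal to the number of synapses recovers the one-rule-per-synapse case; shrinking the table narrows the bottleneck.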

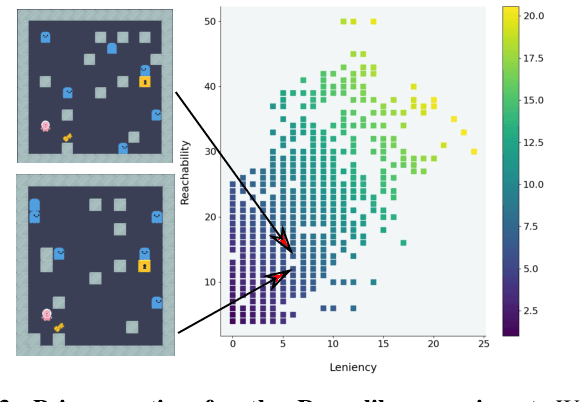

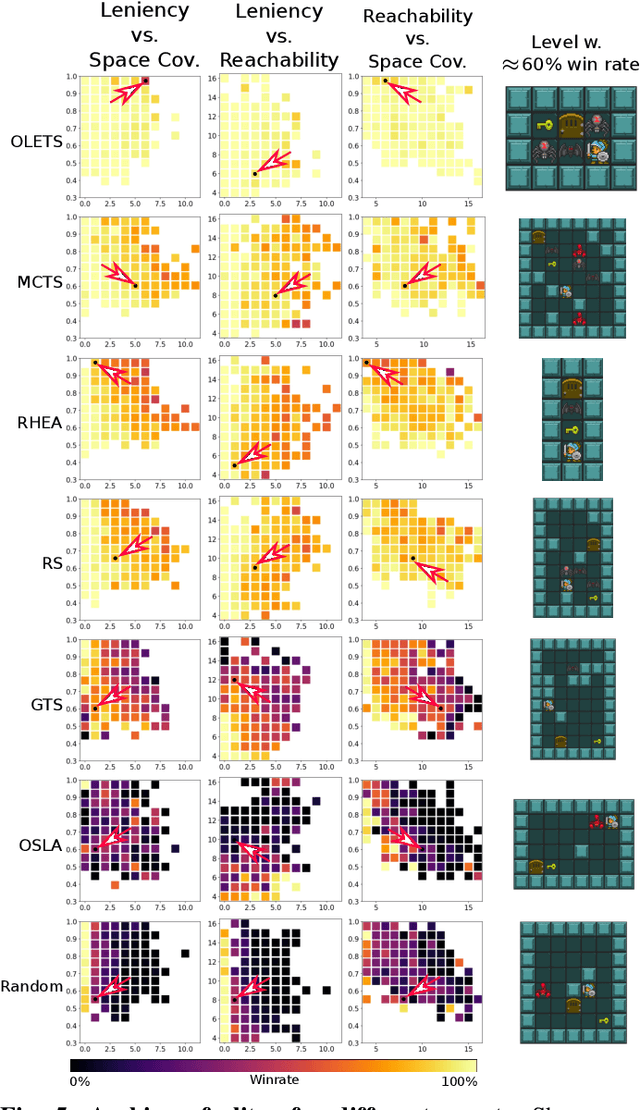

Finding Game Levels with the Right Difficulty in a Few Trials through Intelligent Trial-and-Error

May 15, 2020

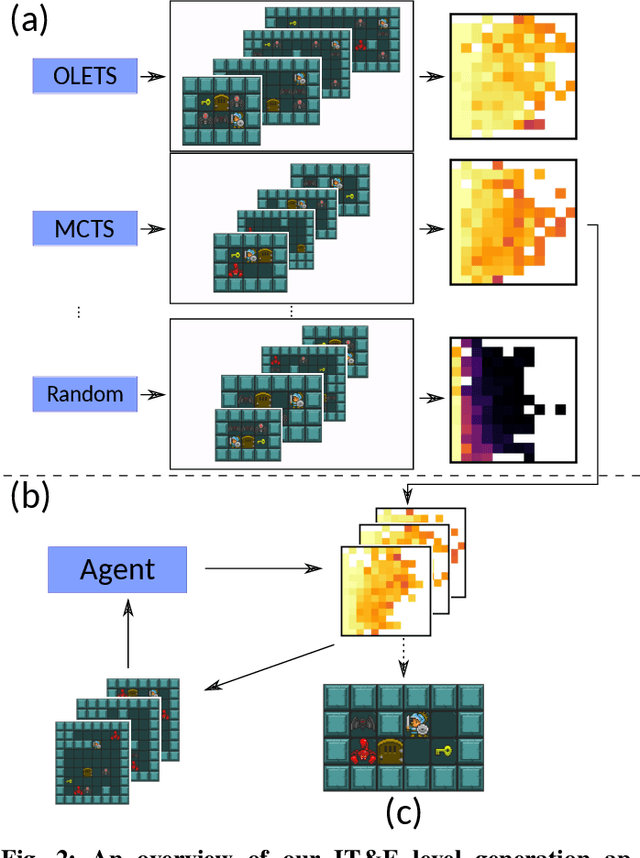

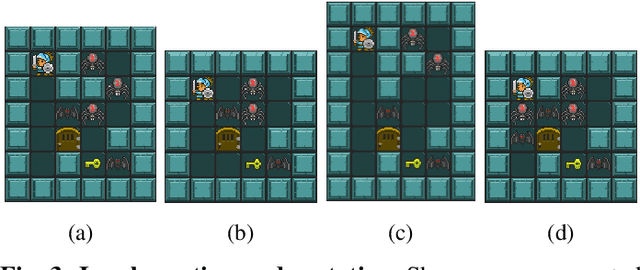

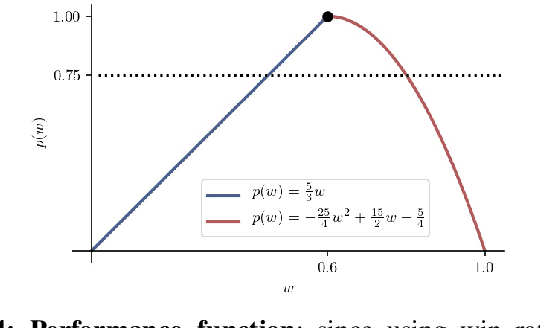

Abstract:Methods for dynamic difficulty adjustment allow games to be tailored to particular players to maximize their engagement. However, current methods often only modify a limited set of game features such as the difficulty of the opponents, or the availability of resources. Other approaches, such as experience-driven Procedural Content Generation (PCG), can generate complete levels with desired properties such as levels that are neither too hard nor too easy, but require many iterations. This paper presents a method that can generate and search for complete levels with a specific target difficulty in only a few trials. This advance is enabled by an Intelligent Trial-and-Error algorithm, originally developed to allow robots to adapt quickly. Our algorithm first creates a large variety of different levels that vary across predefined dimensions such as leniency or map coverage. The performance of an AI playing agent on these maps gives a proxy for how difficult the level would be for another AI agent (e.g. one that employs Monte Carlo Tree Search instead of Greedy Tree Search); using this information, a Bayesian Optimization procedure is deployed, updating the difficulty of the prior map to reflect the ability of the agent. The approach can reliably find levels with a specific target difficulty for a variety of planning agents in only a few trials, while maintaining an understanding of their skill landscape.

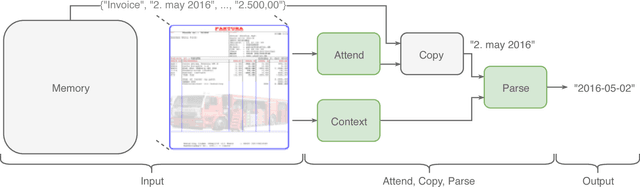

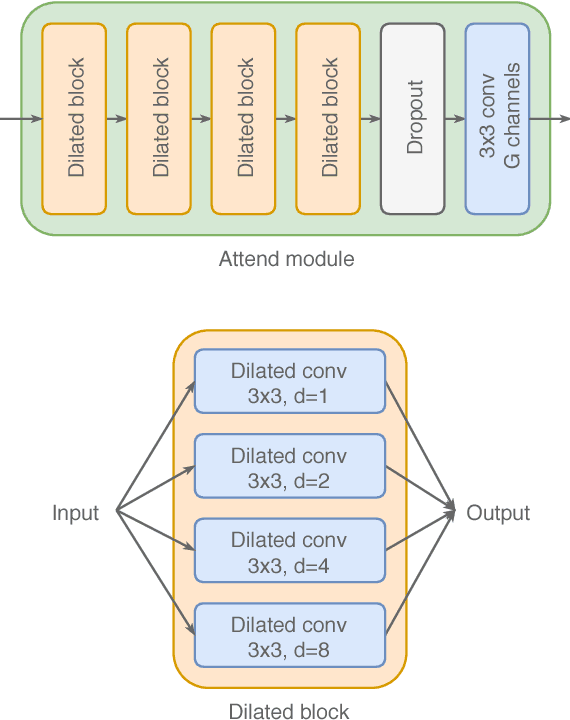

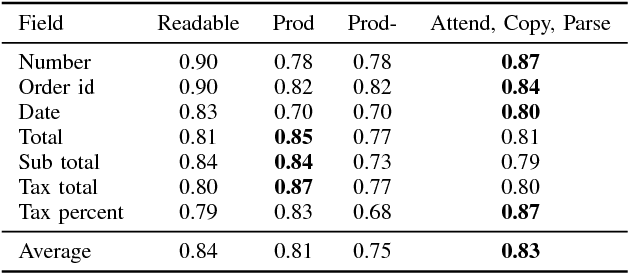

Attend, Copy, Parse - End-to-end information extraction from documents

Dec 18, 2018

Abstract:Document information extraction tasks performed by humans create data consisting of a PDF or document image input, and extracted string outputs. This end-to-end data is naturally consumed and produced when performing the task because it is valuable in and of itself. It is naturally available, at no additional cost. Unfortunately, state-of-the-art word classification methods for information extraction cannot use this data, instead requiring word-level labels which are expensive to create and consequently not available for many real life tasks. In this paper we propose the Attend, Copy, Parse architecture, a deep neural network model that can be trained directly on end-to-end data, bypassing the need for word-level labels. We evaluate the proposed architecture on a large diverse set of invoices, and outperform a state-of-the-art production system based on word classification. We believe our proposed architecture can be used on many real life information extraction tasks where word classification cannot be used due to a lack of the required word-level labels.
