Rajkumar Kubendran

University of California San Diego, University of Pittsburgh

EveryDayVLA: A Vision-Language-Action Model for Affordable Robotic Manipulation

Nov 07, 2025

Abstract: While Vision-Language-Action (VLA) models map visual inputs and language instructions directly to robot actions, they often rely on costly hardware and struggle in novel or cluttered scenes. We introduce EverydayVLA, a 6-DOF manipulator that can be assembled for under $300, capable of modest payloads and workspace. A single unified model jointly outputs discrete and continuous actions, and our adaptive-horizon ensemble monitors motion uncertainty to trigger on-the-fly re-planning for safe, reliable operation. On LIBERO, EverydayVLA matches state-of-the-art success rates, and in real-world tests it outperforms prior methods by 49% in-distribution and 34.9% out-of-distribution. By combining a state-of-the-art VLA with cost-effective hardware, EverydayVLA democratizes access to a robotic foundation model and paves the way for economical use in homes and research labs alike. Experiment videos and details: https://everydayvla.github.io/

Efficient and Low-Footprint Object Classification using Spatial Contrast

Nov 06, 2023

Abstract: Event-based vision sensors traditionally compute temporal contrast that offers potential for low-power and low-latency sensing and computing. In this research, an alternative paradigm for event-based sensors using localized spatial contrast (SC) under two different thresholding techniques, relative and absolute, is investigated. Given the slow maturity of spatial contrast in comparison to temporal-based sensors, a theoretical simulated output of such a hardware sensor is explored. Furthermore, we evaluate traffic sign classification using the German Traffic Sign dataset (GTSRB) with well-known Deep Neural Networks (DNNs). This study shows that spatial contrast can effectively capture salient image features needed for classification using a Binarized DNN with significant reduction in input data usage (at least 12X) and memory resources (17.5X), compared to high precision RGB images and DNN, with only a small loss (~2%) in macro F1-score. Binarized MicronNet achieves an F1-score of 94.4% using spatial contrast, compared to only 56.3% when using RGB input images. Thus, SC offers great promise for deployment in power and resource constrained edge computing environments.
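The abstract above names absolute and relative thresholding of localized spatial contrast without giving formulas. The following is a minimal illustrative sketch of one plausible reading, not the paper's sensor model: contrast is taken here as the difference between a pixel and the mean of its 4-connected neighbors, and the function names, neighborhood choice, and `eps` guard are all assumptions introduced for illustration.

```python
from typing import List

Image = List[List[float]]


def neighbor_mean(img: Image, r: int, c: int) -> float:
    """Mean intensity of the in-bounds 4-connected neighbors of pixel (r, c)."""
    rows, cols = len(img), len(img[0])
    candidates = ((r - 1, c), (r + 1, c), (r, c - 1), (r, c + 1))
    vals = [img[rr][cc] for rr, cc in candidates
            if 0 <= rr < rows and 0 <= cc < cols]
    # A 1x1 image has no neighbors; fall back to the pixel itself (zero contrast).
    return sum(vals) / len(vals) if vals else img[r][c]


def spatial_contrast_events(img: Image, threshold: float,
                            mode: str = "absolute",
                            eps: float = 1e-6) -> List[List[int]]:
    """Binary event map: 1 where local spatial contrast exceeds the threshold.

    mode="absolute": |pixel - neighbor_mean| > threshold
    mode="relative": |pixel - neighbor_mean| / (neighbor_mean + eps) > threshold
    (Both definitions are assumptions made for this sketch.)
    """
    if mode not in ("absolute", "relative"):
        raise ValueError("mode must be 'absolute' or 'relative'")
    events = []
    for r in range(len(img)):
        row = []
        for c in range(len(img[0])):
            mean = neighbor_mean(img, r, c)
            contrast = abs(img[r][c] - mean)
            if mode == "relative":
                contrast /= (mean + eps)
            row.append(1 if contrast > threshold else 0)
        events.append(row)
    return events


if __name__ == "__main__":
    # A single bright pixel on a uniform background.
    image = [[10, 10, 10],
             [10, 50, 10],
             [10, 10, 10]]
    print(spatial_contrast_events(image, threshold=20, mode="absolute"))
    print(spatial_contrast_events(image, threshold=0.5, mode="relative"))
```

Under these assumptions the output is a one-bit-per-pixel map, which is the kind of input a binarized network could consume in place of multi-bit RGB values.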

Custom DNN using Reward Modulated Inverted STDP Learning for Temporal Pattern Recognition

Jul 15, 2023

Abstract: Temporal spike recognition plays a crucial role in various domains, including anomaly detection, keyword spotting and neuroscience. This paper presents a novel algorithm for efficient temporal spike pattern recognition on sparse event series data. The algorithm leverages a combination of reward-modulatory behavior, Hebbian and anti-Hebbian based learning methods to identify patterns in dynamic datasets with short intervals of training. The algorithm begins with a preprocessing step, where the input data is rationalized and translated to a feature-rich yet sparse spike time series data. Next, a linear feed-forward spiking neural network processes this data to identify a trained pattern. Finally, the next layer performs a weighted check to ensure the correct pattern has been detected. To evaluate the performance of the proposed algorithm, it was trained on a complex dataset containing spoken digits with spike information, and its output was compared to the state of the art.

Corticomorphic Hybrid CNN-SNN Architecture for EEG-based Low-footprint Low-latency Auditory Attention Detection

Jul 13, 2023

Abstract: In a multi-speaker "cocktail party" scenario, a listener can selectively attend to a speaker of interest. Studies into the human auditory attention network demonstrate cortical entrainment to speech envelopes resulting in highly correlated Electroencephalography (EEG) measurements. Current trends in EEG-based auditory attention detection (AAD) using artificial neural networks (ANN) are not practical for edge-computing platforms due to longer decision windows using several EEG channels, with higher power consumption and larger memory footprint requirements. Nor are ANNs capable of accurately modeling the brain's top-down attention network since the cortical organization is complex and layered. In this paper, we propose a hybrid convolutional neural network-spiking neural network (CNN-SNN) corticomorphic architecture, inspired by the auditory cortex, which uses EEG data along with multi-speaker speech envelopes to successfully decode auditory attention with low latency down to 1 second, using only 8 EEG electrodes strategically placed close to the auditory cortex, at a significantly higher accuracy of 91.03%, compared to the state-of-the-art. Simultaneously, when compared to a traditional CNN reference model, our model uses ~15% fewer parameters at a lower bit precision resulting in ~57% memory footprint reduction. The results show great promise for edge-computing in brain-embedded devices, like smart hearing aids.

NeuroBench: Advancing Neuromorphic Computing through Collaborative, Fair and Representative Benchmarking

Apr 15, 2023

Abstract: The field of neuromorphic computing holds great promise in terms of advancing computing efficiency and capabilities by following brain-inspired principles. However, the rich diversity of techniques employed in neuromorphic research has resulted in a lack of clear standards for benchmarking, hindering effective evaluation of the advantages and strengths of neuromorphic methods compared to traditional deep-learning-based methods. This paper presents a collaborative effort, bringing together members from academia and the industry, to define benchmarks for neuromorphic computing: NeuroBench. The goals of NeuroBench are to be a collaborative, fair, and representative benchmark suite developed by the community, for the community. In this paper, we discuss the challenges associated with benchmarking neuromorphic solutions, and outline the key features of NeuroBench. We believe that NeuroBench will be a significant step towards defining standards that can unify the goals of neuromorphic computing and drive its technological progress. Please visit neurobench.ai for the latest updates on the benchmark tasks and metrics.

Edge AI without Compromise: Efficient, Versatile and Accurate Neurocomputing in Resistive Random-Access Memory

Aug 17, 2021

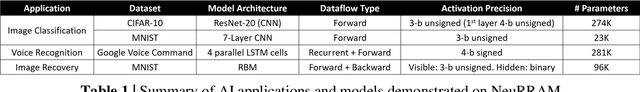

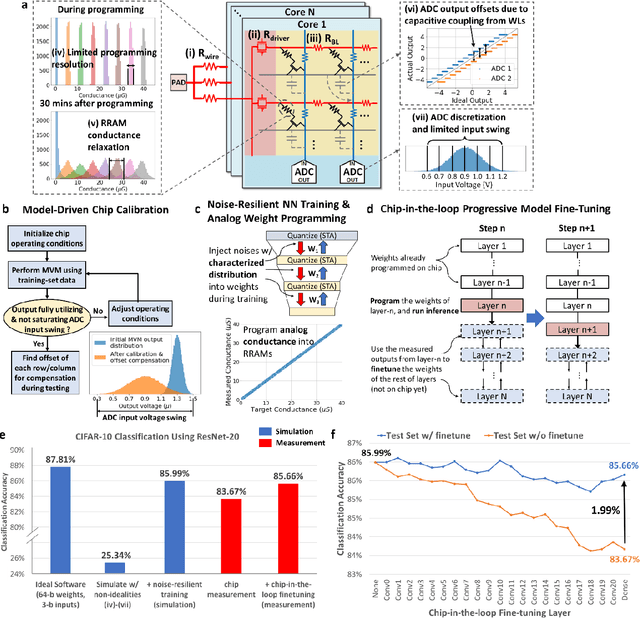

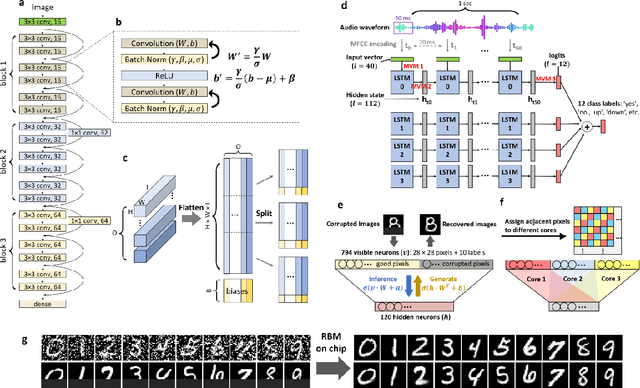

Abstract: Realizing today's cloud-level artificial intelligence functionalities directly on devices distributed at the edge of the internet calls for edge hardware capable of processing multiple modalities of sensory data (e.g. video, audio) at unprecedented energy-efficiency. AI hardware architectures today cannot meet the demand due to a fundamental "memory wall": data movement between separate compute and memory units consumes large energy and incurs long latency. Resistive random-access memory (RRAM) based compute-in-memory (CIM) architectures promise to bring orders of magnitude energy-efficiency improvement by performing computation directly within memory. However, conventional approaches to CIM hardware design limit its functional flexibility necessary for processing diverse AI workloads, and must overcome hardware imperfections that degrade inference accuracy. Such trade-offs between efficiency, versatility and accuracy cannot be addressed by isolated improvements on any single level of the design. By co-optimizing across all hierarchies of the design from algorithms and architecture to circuits and devices, we present NeuRRAM - the first multimodal edge AI chip using RRAM CIM to simultaneously deliver a high degree of versatility for diverse model architectures, record energy-efficiency $5\times$ - $8\times$ better than prior art across various computational bit-precisions, and inference accuracy comparable to software models with 4-bit weights on all measured standard AI benchmarks including accuracy of 99.0% on MNIST and 85.7% on CIFAR-10 image classification, 84.7% accuracy on Google speech command recognition, and a 70% reduction in image reconstruction error on a Bayesian image recovery task. This work paves a way towards building highly efficient and reconfigurable edge AI hardware platforms for the more demanding and heterogeneous AI applications of the future.
