Raheem Beyah

NeuroSep-CP-LCB: A Deep Learning-based Contextual Multi-armed Bandit Algorithm with Uncertainty Quantification for Early Sepsis Prediction

Mar 20, 2025

Abstract: In critical care settings, timely and accurate predictions can significantly impact patient outcomes, especially for conditions like sepsis, where early intervention is crucial. We aim to model patient-specific reward functions in a contextual multi-armed bandit setting. The goal is to leverage patient-specific clinical features to optimize decision-making under uncertainty. This paper proposes NeuroSep-CP-LCB, a novel integration of neural networks with contextual bandits and conformal prediction tailored for early sepsis detection. Unlike the algorithm pool selection problem in the previous paper, where the primary focus was identifying the most suitable pre-trained model for prediction tasks, this work directly models the reward function using a neural network, allowing for personalized and adaptive decision-making. Combining the representational power of neural networks with the robustness of conformal prediction intervals, this framework explicitly accounts for uncertainty in offline data distributions and provides actionable confidence bounds on predictions.

Transfer Attacks Revisited: A Large-Scale Empirical Study in Real Computer Vision Settings

Apr 07, 2022

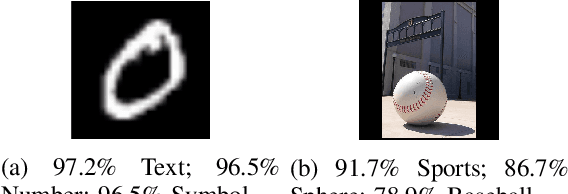

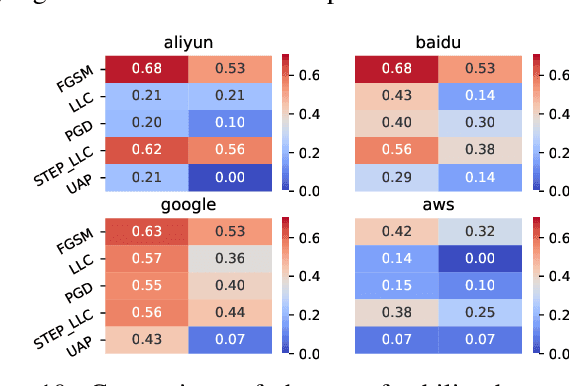

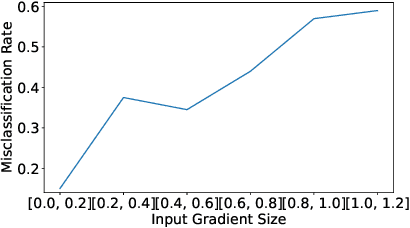

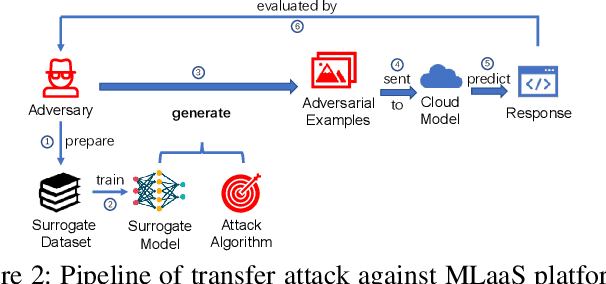

Abstract: One intriguing property of adversarial attacks is their "transferability": an adversarial example crafted with respect to one deep neural network (DNN) model is often found effective against other DNNs as well. Intensive research has been conducted on this phenomenon under simplistic controlled conditions. Yet, thus far, there is still a lack of comprehensive understanding of transferability-based attacks ("transfer attacks") in real-world environments. To bridge this critical gap, we conduct the first large-scale systematic empirical study of transfer attacks against major cloud-based MLaaS platforms, taking the components of a real transfer attack into account. The study leads to a number of interesting findings that are inconsistent with existing ones, including: (1) Simple surrogates do not necessarily improve real transfer attacks. (2) No dominant surrogate architecture is found in real transfer attacks. (3) It is the gap between posteriors (outputs of the softmax layer) rather than the gap between logits (the so-called $\kappa$ value) that increases transferability. Moreover, by comparing with prior works, we demonstrate that transfer attacks possess many previously unknown properties in real-world environments, such as (1) Model similarity is not a well-defined concept. (2) The $L_2$ norm of the perturbation can generate high transferability without using gradients and is a more powerful source than the $L_\infty$ norm. We believe this work sheds light on the vulnerabilities of popular MLaaS platforms and points to a few promising research directions.
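
To make the distinction in finding (3) concrete, here is a minimal sketch of the two quantities. The function names, the example logits, and the choice to measure each gap as "target class minus the best other class" are assumptions made for illustration; the paper's exact definitions may differ.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of logits."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def logit_gap(logits, target):
    """Gap between the target logit and the largest other logit
    (assumed here to correspond to the kappa-style margin)."""
    others = [z for i, z in enumerate(logits) if i != target]
    return logits[target] - max(others)


def posterior_gap(logits, target):
    """Gap between the target softmax probability and the largest
    other probability."""
    probs = softmax(logits)
    others = [p for i, p in enumerate(probs) if i != target]
    return probs[target] - max(others)


# Hypothetical logits: both have the same logit gap for class 0,
# but the remaining classes are distributed differently.
flat = [5.0, 4.0, 4.0, 4.0]
peaked = [5.0, 4.0, -10.0, -10.0]

for name, logits in [("flat", flat), ("peaked", peaked)]:
    print(name, logit_gap(logits, 0), round(posterior_gap(logits, 0), 4))
```

In this toy case the two inputs share the same logit gap while their posterior gaps differ, which shows the two measures are not interchangeable.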

On Evaluating the Effectiveness of the HoneyBot: A Case Study

May 28, 2019

Abstract: In recent years, cyber-physical system (CPS) security as applied to robotic systems has become a popular research area, mainly because robotic systems have traditionally emphasized the completion of a specific objective and lack security-oriented design. Our previous work, HoneyBot \cite{celine}, presented the concept and prototype of the first software hybrid interaction honeypot specifically designed for networked robotic systems. The intuition behind HoneyBot was that it would be a remotely accessible robotic system that could simulate unsafe actions and physically perform safe actions to fool attackers. Unsuspecting attackers would think they were connected to an ordinary robotic system, believing their exploits were being successfully executed. All the while, the HoneyBot logs all communications and exploits sent, for use in attacker attribution and threat model creation. In this paper, we present findings from a user study performed to evaluate the effectiveness of the HoneyBot framework and architecture as it applies to real robotic systems. The user study consisted of 40 participants, was conducted over the course of several weeks, and drew from a wide range of participants aged 18 to 60 with varying levels of technical expertise. From the study we found that research subjects could not tell the difference between the simulated sensor values and the real sensor values coming from the HoneyBot, meaning the HoneyBot convincingly spoofed communications.
