Raffaello D'Andrea

Flow Gym

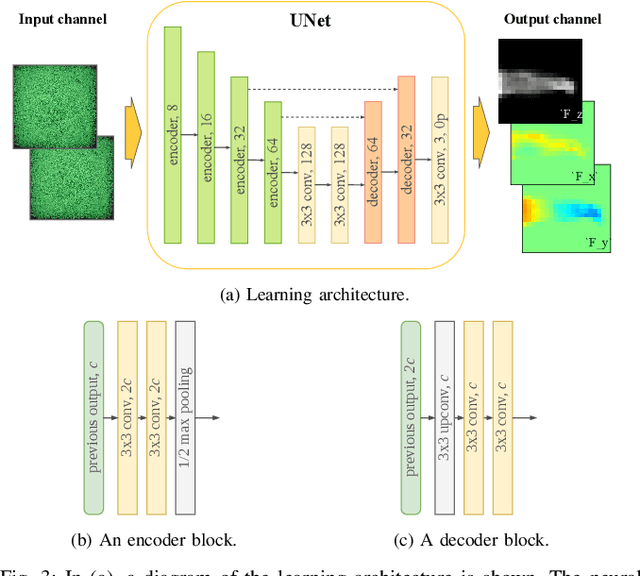

Dec 12, 2025

Abstract:Flow Gym is a toolkit for research and deployment of flow-field quantification methods inspired by OpenAI Gym and Stable-Baselines3. It uses SynthPix as its synthetic image generation engine and provides a unified interface for the testing, deployment and training of (learning-based) algorithms for flow-field quantification from a number of consecutive images of tracer particles. It also contains a growing number of integrations of existing algorithms and stable (re-)implementations in JAX.

SynthPix: A lightspeed PIV images generator

Dec 10, 2025

Abstract:We describe SynthPix, a synthetic image generator for Particle Image Velocimetry (PIV) with a focus on performance and parallelism on accelerators, implemented in JAX. SynthPix supports the same configuration parameters as existing tools but achieves a throughput several orders of magnitude higher in image-pair generation per second. SynthPix was developed to enable the training of data-hungry reinforcement learning methods for flow estimation and to reduce iteration times during the development of fast flow estimation methods used in recent active fluids control studies with real-time PIV feedback. We believe SynthPix to be useful for the fluid dynamics community, and in this paper we describe the main ideas behind this software package.
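To make the notion of a PIV image pair concrete, here is a minimal sketch in plain Python. It is not SynthPix's implementation or API: the function names (`render_particles`, `make_image_pair`, `uniform_flow`, `peak`), the Gaussian particle model, and all parameters are assumptions chosen for illustration, and the naive loops ignore the accelerator parallelism that the abstract emphasizes.

```python
import math
import random


def render_particles(particles, height, width, sigma=1.0):
    """Render each particle (x, y) as a Gaussian blob on a height x width image."""
    image = [[0.0] * width for _ in range(height)]
    for px, py in particles:
        for row in range(height):
            for col in range(width):
                dist_sq = (col - px) ** 2 + (row - py) ** 2
                image[row][col] += math.exp(-dist_sq / (2.0 * sigma ** 2))
    return image


def make_image_pair(flow, particles=None, num_particles=20,
                    height=16, width=16, dt=1.0, seed=0):
    """Return two images: particles before and after advection by `flow`.

    `flow(x, y)` is a hypothetical callable returning the velocity (u, v)
    in pixels per unit time at position (x, y).
    """
    if particles is None:
        rng = random.Random(seed)
        particles = [(rng.uniform(0, width - 1), rng.uniform(0, height - 1))
                     for _ in range(num_particles)]
    moved = []
    for px, py in particles:
        u, v = flow(px, py)
        # Forward-Euler displacement of each tracer particle.
        moved.append((px + u * dt, py + v * dt))
    first = render_particles(particles, height, width)
    second = render_particles(moved, height, width)
    return first, second


def uniform_flow(x, y):
    """Constant flow of 2 pixels per unit time in the +x direction."""
    return (2.0, 0.0)


def peak(image):
    """Return (row, col) of the brightest pixel."""
    best = max((value, row, col)
               for row, line in enumerate(image)
               for col, value in enumerate(line))
    return best[1], best[2]
```

Calling `make_image_pair(uniform_flow, particles=[(5.0, 8.0)])` produces two images whose brightest pixel moves two columns to the right. Recovering that displacement from the image pair is the task a flow estimator has to solve.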

Using reinforcement learning to probe the role of feedback in skill acquisition

Dec 09, 2025

Abstract:Many high-performance human activities are executed with little or no external feedback: think of a figure skater landing a triple jump, a pitcher throwing a curveball for a strike, or a barista pouring latte art. To study the process of skill acquisition under fully controlled conditions, we bypass human subjects. Instead, we directly interface a generalist reinforcement learning agent with a spinning cylinder in a tabletop circulating water channel to maximize or minimize drag. This setup has several desirable properties. First, it is a physical system, with the rich interactions and complex dynamics that only the physical world has: the flow is highly chaotic and extremely difficult, if not impossible, to model or simulate accurately. Second, the objective -- drag minimization or maximization -- is easy to state and can be captured directly in the reward, yet good strategies are not obvious beforehand. Third, decades-old experimental studies provide recipes for simple, high-performance open-loop policies. Finally, the setup is inexpensive and far easier to reproduce than human studies. In our experiments we find that high-dimensional flow feedback lets the agent discover high-performance drag-control strategies with only minutes of real-world interaction. When we later replay the same action sequences without any feedback, we obtain almost identical performance. This shows that feedback, and in particular flow feedback, is not needed to execute the learned policy. Surprisingly, without flow feedback during training the agent fails to discover any well-performing policy in drag maximization, but still succeeds in drag minimization, albeit more slowly and less reliably. Our studies show that learning a high-performance skill can require richer information than executing it, and learning conditions can be kind or wicked depending solely on the goal, not on dynamics or policy complexity.

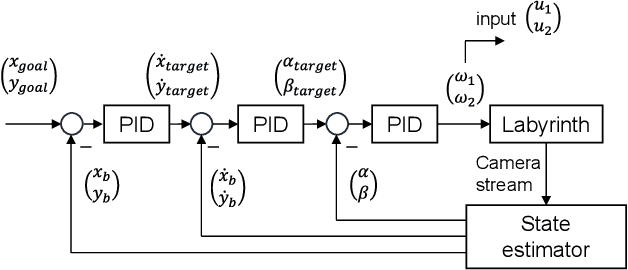

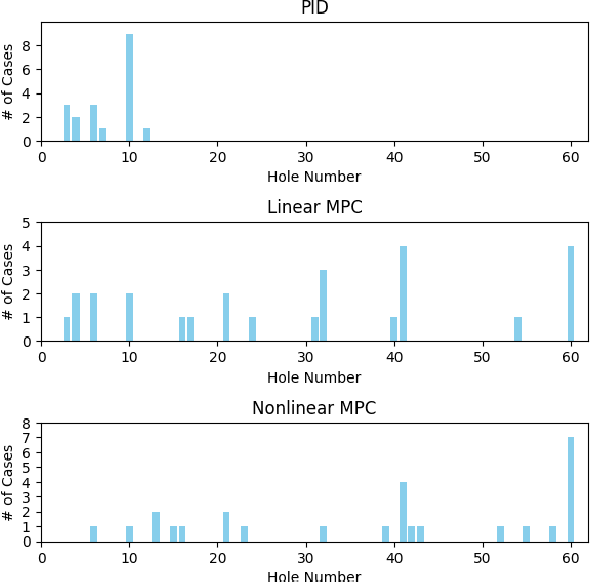

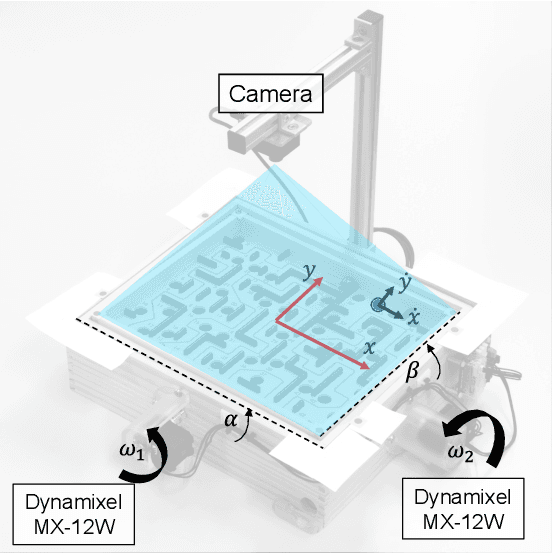

Adaptive Nonlinear Model Predictive Control for a Real-World Labyrinth Game

Jun 12, 2024

Abstract:We present a nonlinear non-convex model predictive control approach to solving a real-world labyrinth game. We introduce adaptive nonlinear constraints, representing the non-convex obstacles within the labyrinth. Our method splits the computation-heavy optimization problem into two layers: first, a high-level model predictive controller that incorporates the full problem formulation and finds pseudo-global optimal trajectories at a low frequency; second, a low-level model predictive controller that receives a reduced, computationally optimized version of the optimization problem to follow the given high-level path in real time. Further, a map of the labyrinth surface irregularities is learned. Our controller is able to handle the major disturbances and model inaccuracies encountered on the labyrinth and outperforms other classical control methods.

Optical Tactile Sensing for Aerial Multi-Contact Interaction: Design, Integration, and Evaluation

Jan 30, 2024

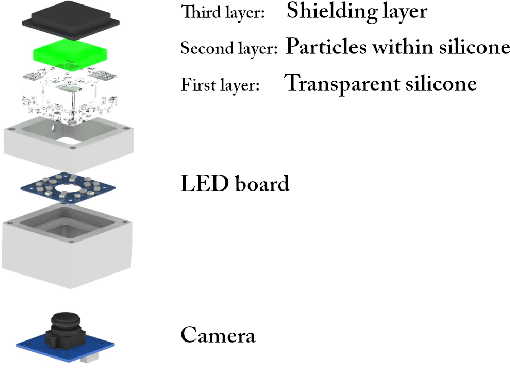

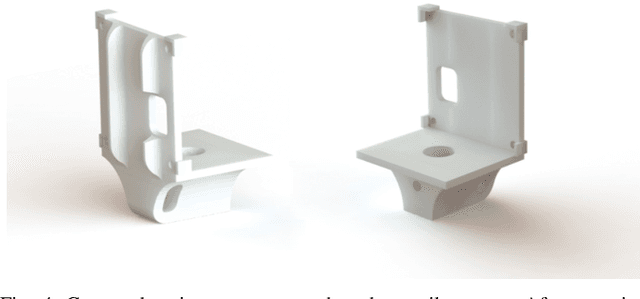

Abstract:Distributed tactile sensing for multi-force detection is crucial for various aerial robot interaction tasks. However, current contact sensing solutions on drones only exploit single end-effector sensors and cannot provide distributed multi-contact sensing. We propose an optical tactile sensor, designed to be easily mounted at the bottom of a drone, that features a large and curved soft sensing surface, a hollow structure and a new illumination system. Even when spaced only 2 cm apart, multiple contacts can be detected simultaneously using our software pipeline, which provides real-world quantities of 3D contact locations (mm) and 3D force vectors (N), with an accuracy of 1.5 mm and 0.17 N respectively. We demonstrate the sensor's applicability and reliability onboard and in real time with two demos related to i) the estimation of the compliance of different perches and subsequent re-alignment and landing on the stiffer one, and ii) the mapping of sparse obstacles. The implementation of our distributed tactile sensor represents a significant step towards attaining the full potential of drones as versatile robots capable of interacting with and navigating within complex environments.

Sample-Efficient Learning to Solve a Real-World Labyrinth Game Using Data-Augmented Model-Based Reinforcement Learning

Dec 15, 2023

Abstract:Motivated by the challenge of achieving rapid learning in physical environments, this paper presents the development and training of a robotic system designed to navigate and solve a labyrinth game using model-based reinforcement learning techniques. The method involves extracting low-dimensional observations from camera images, along with a cropped and rectified image patch centered on the current position within the labyrinth, providing valuable information about the labyrinth layout. The learning of a control policy is performed purely on the physical system using model-based reinforcement learning, where the progress along the labyrinth's path serves as a reward signal. Additionally, we exploit the system's inherent symmetries to augment the training data. Consequently, our approach learns to successfully solve a popular real-world labyrinth game in record time, with only 5 hours of real-world training data.
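The symmetry-based data augmentation mentioned in this abstract can be illustrated with a small sketch. The abstract does not say which symmetries are used or how observations are encoded, so everything below is an assumption: a hypothetical state `(x, y, vx, vy)` for a ball on a tilting plate, a hypothetical action `(tilt_x, tilt_y)`, and mirror symmetries about the two plate axes. The image patch described in the abstract is left out.

```python
def mirror_x(transition):
    """Mirror a transition about the y-axis (x -> -x).

    A transition is (state, action, next_state, reward), with
    state = (x, y, vx, vy) and action = (tilt_x, tilt_y). Both the
    layout and the symmetry are illustrative assumptions.
    """
    state, action, next_state, reward = transition

    def flip(s):
        return (-s[0], s[1], -s[2], s[3])

    return (flip(state), (-action[0], action[1]), flip(next_state), reward)


def mirror_y(transition):
    """Mirror a transition about the x-axis (y -> -y)."""
    state, action, next_state, reward = transition

    def flip(s):
        return (s[0], -s[1], s[2], -s[3])

    return (flip(state), (action[0], -action[1]), flip(next_state), reward)


def augment(transitions):
    """Return each transition together with its three mirrored copies."""
    augmented = []
    for t in transitions:
        augmented.extend([t, mirror_x(t), mirror_y(t), mirror_x(mirror_y(t))])
    return augmented
```

Under these assumptions every recorded transition yields four training samples, so a model can be fitted on four times the data for the same amount of time on the physical system. The reward is left unchanged because progress along the path is assumed to be invariant under the mirrored dynamics.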

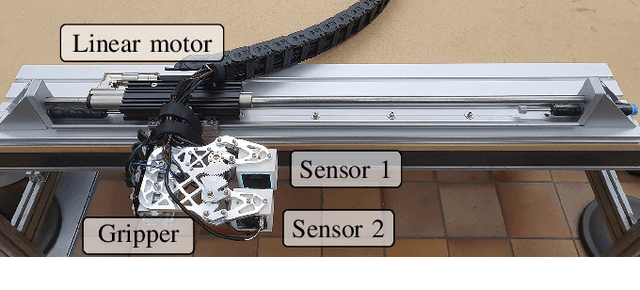

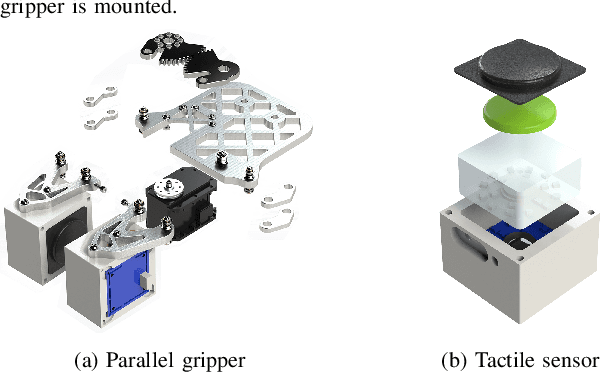

Leveraging distributed contact force measurements for slip detection: a physics-based approach enabled by a data-driven tactile sensor

Sep 23, 2021

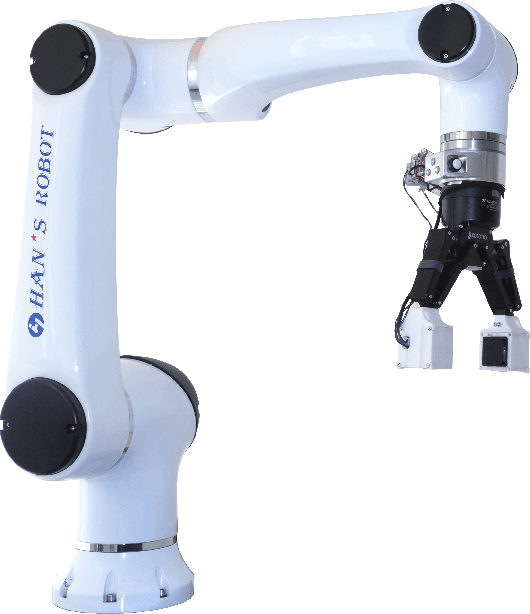

Abstract:Grasping objects whose physical properties are unknown is still a great challenge in robotics. Most solutions rely entirely on visual data to plan the best grasping strategy. However, to match human abilities and be able to reliably pick and hold unknown objects, the integration of an artificial sense of touch in robotic systems is pivotal. This paper describes a novel model-based slip detection pipeline that can predict possibly failing grasps in real-time and signal a necessary increase in grip force. As such, the slip detector does not rely on manually collected data, but exploits physics to generalize across different tasks. To evaluate the approach, a state-of-the-art vision-based tactile sensor that accurately estimates distributed forces was integrated into a grasping setup composed of a six degrees-of-freedom cobot and a two-finger gripper. Results show that the system can reliably predict slip while manipulating objects of different shapes, materials, and weights. The sensor can detect both translational and rotational slip in various scenarios, making it suitable to improve the stability of a grasp.

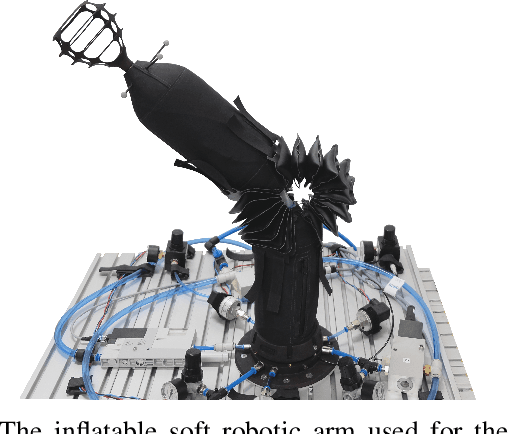

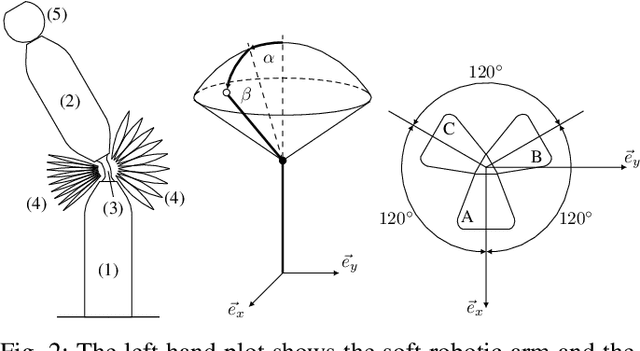

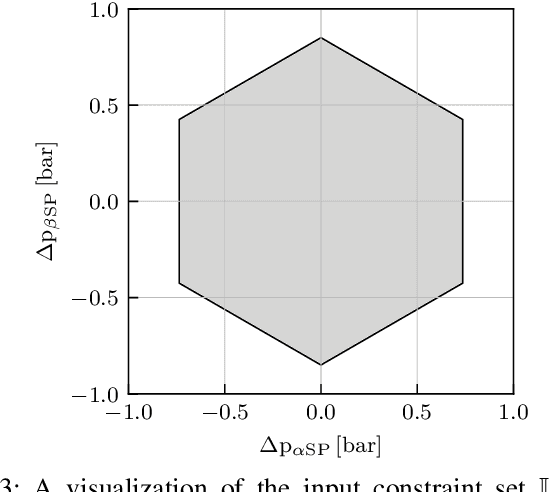

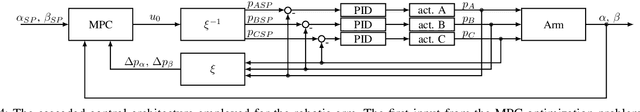

Offset-free Model Predictive Control: A Ball Catching Application with a Spherical Soft Robotic Arm

Mar 12, 2021

Abstract:This paper presents an offset-free model predictive controller for fast and accurate control of a spherical soft robotic arm. In this control scheme, a linear model is combined with an online disturbance estimation technique to systematically compensate for model deviations. Dynamic effects such as material relaxation resulting from the use of soft materials can be addressed to achieve offset-free tracking. The tracking error can be reduced by 35% when compared to a standard model predictive controller without a disturbance compensation scheme. The improved tracking performance enables the realization of a ball catching application, where the spherical soft robotic arm can catch a ball thrown by a human.
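The idea of pairing a linear model with an online disturbance estimate can be sketched on a scalar toy system. This is not the paper's controller. The plant `x[k+1] = a*x[k] + b*u[k] + d`, all numerical values, and the function name `simulate` are assumptions, and the "predictive controller" is reduced to a one-step horizon with no constraints or input cost, which is a degenerate stand-in for a full MPC.

```python
def simulate(compensate, steps=50, a=0.9, b=0.5, d_true=0.3,
             reference=1.0, gain=0.5):
    """Track `reference` on a scalar plant with an unknown constant disturbance.

    The controller knows a and b but not d_true. If `compensate` is True,
    it uses the running estimate d_hat in its one-step prediction;
    otherwise it assumes zero disturbance.
    Returns the final state and the final disturbance estimate.
    """
    x = 0.0
    d_hat = 0.0
    for _ in range(steps):
        d_used = d_hat if compensate else 0.0
        # One-step-horizon control: choose u so the model predicts x_next == reference.
        u = (reference - a * x - d_used) / b
        # True plant, including the disturbance the nominal model lacks.
        x_next = a * x + b * u + d_true
        # Disturbance estimator: correct d_hat by a fraction of the prediction error.
        predicted = a * x + b * u + d_hat
        d_hat += gain * (x_next - predicted)
        x = x_next
    return x, d_hat
```

With the defaults above, `simulate(compensate=False)` settles at a state of about 1.3, a steady offset equal to the disturbance. `simulate(compensate=True)` settles at the reference 1.0, because the estimate `d_hat` converges to 0.3 and is cancelled in the control law.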

Distributed Estimation, Control and Coordination of Quadcopter Swarm Robots

Feb 14, 2021

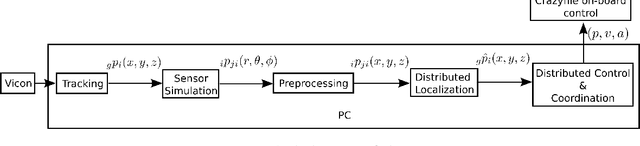

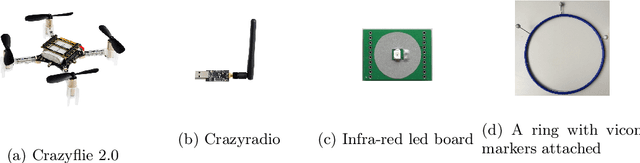

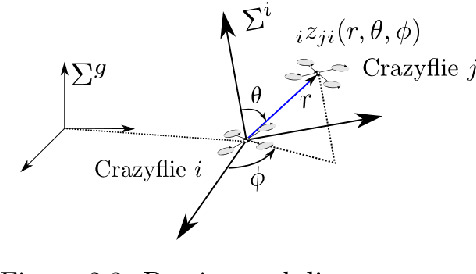

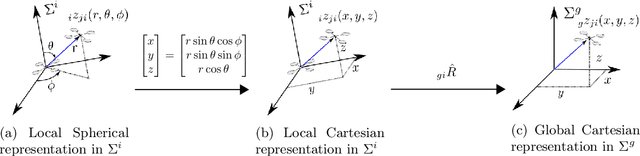

Abstract:In this thesis we are interested in applying distributed estimation, control and optimization techniques to enable a group of quadcopters to fly through openings. The quadcopters are assumed to be equipped with a simulated bearing and distance sensor for localization. Some quadcopters are designated as leaders who carry global position sensors. We assume quadcopters can communicate information with each other.

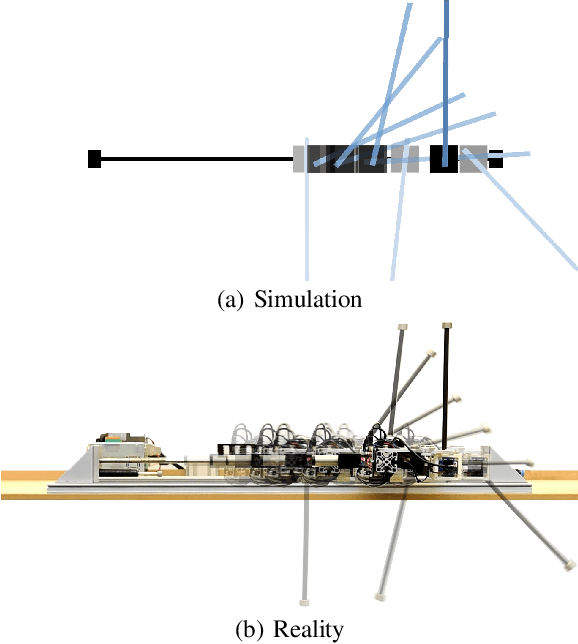

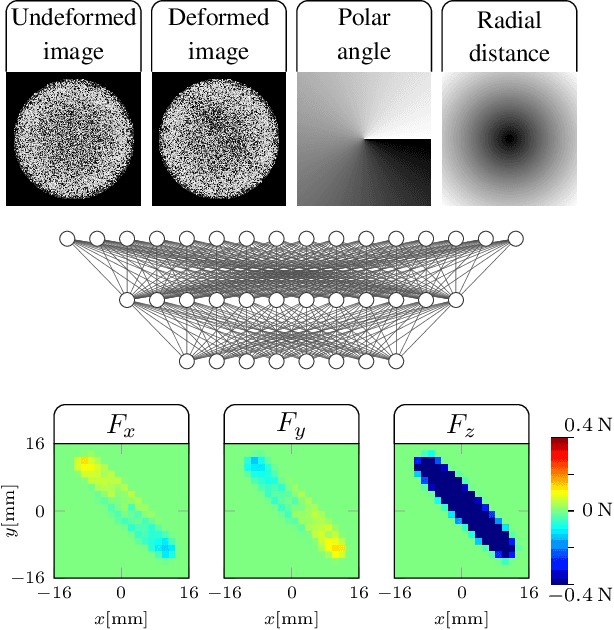

Zero-shot sim-to-real transfer of tactile control policies for aggressive swing-up manipulation

Jan 07, 2021

Abstract:This paper aims to show that robots equipped with a vision-based tactile sensor can perform dynamic manipulation tasks without prior knowledge of all the physical attributes of the objects to be manipulated. For this purpose, a robotic system is presented that is able to swing up poles of different masses, radii and lengths, to an angle of 180 degrees, while relying solely on the feedback provided by the tactile sensor. This is achieved by developing a novel simulator that accurately models the interaction of a pole with the soft sensor. A feedback policy that is conditioned on a sensory observation history, and which has no prior knowledge of the physical features of the pole, is then learned in the aforementioned simulation. When evaluated on the physical system, the policy is able to swing up a wide range of poles that differ significantly in their physical attributes without further adaptation. To the authors' knowledge, this is the first work where a feedback policy from high-dimensional tactile observations is used to control the swing-up manipulation of poles in closed-loop.
