Leveraging distributed contact force measurements for slip detection: a physics-based approach enabled by a data-driven tactile sensor


Sep 23, 2021

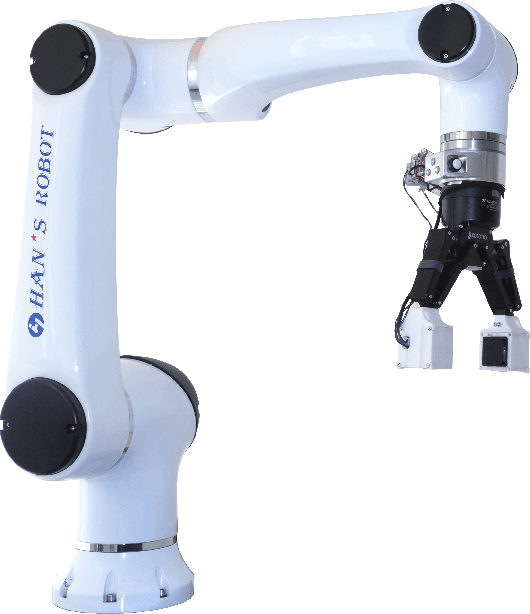

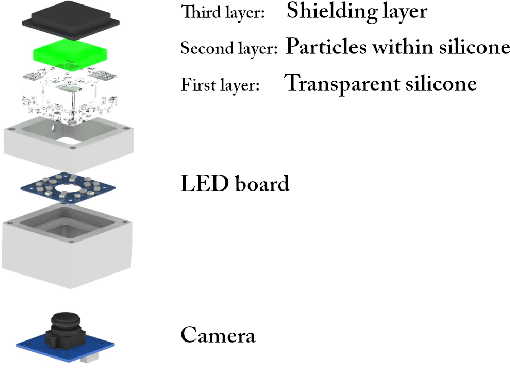

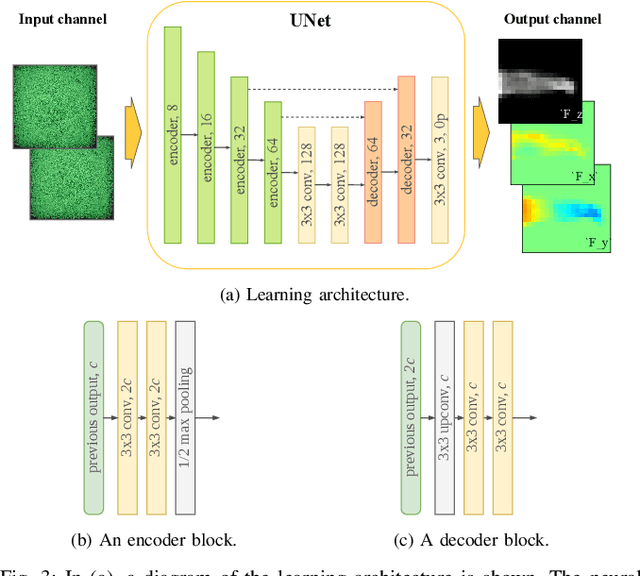

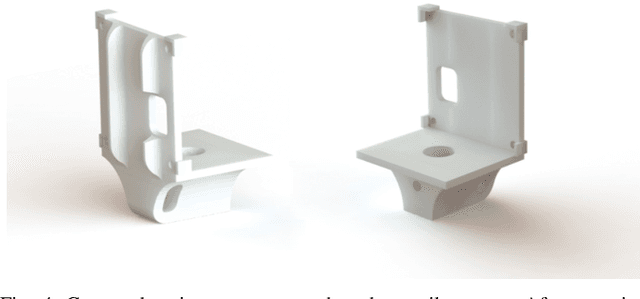

Grasping objects whose physical properties are unknown is still a great challenge in robotics. Most solutions rely entirely on visual data to plan the best grasping strategy. However, to match human abilities and be able to reliably pick and hold unknown objects, the integration of an artificial sense of touch in robotic systems is pivotal. This paper describes a novel model-based slip detection pipeline that can predict possibly failing grasps in real time and signal a necessary increase in grip force. Because it is model-based, the slip detector does not rely on manually collected data but exploits physics to generalize across different tasks. To evaluate the approach, a state-of-the-art vision-based tactile sensor that accurately estimates distributed forces was integrated into a grasping setup composed of a six degrees-of-freedom cobot and a two-finger gripper. Results show that the system can reliably predict slip while manipulating objects of different shapes, materials, and weights. The sensor can detect both translational and rotational slip in various scenarios, making it suitable to improve the stability of a grasp.
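The abstract does not state which physical criterion the pipeline uses. As a rough illustration only, the sketch below assumes a Coulomb friction model applied to each element of a distributed force map: an element is flagged when its tangential force exceeds the friction coefficient times its normal force. The function names, the `min_normal` contact threshold, and the friction coefficient `mu` are hypothetical and are not taken from the paper.

```python
import numpy as np


def slip_margin(normal, tangential, mu):
    """Per-element Coulomb margin: mu * F_n - |F_t|.

    Assumed model, not the paper's: a negative margin means the element's
    tangential force lies outside the friction cone.
    """
    normal = np.asarray(normal, dtype=float)
    tangential = np.asarray(tangential, dtype=float)
    # Negative normal forces are treated as no contact (clipped to zero).
    return mu * np.clip(normal, 0.0, None) - np.linalg.norm(tangential, axis=-1)


def slipping_fraction(normal, tangential, mu, min_normal=1e-3):
    """Fraction of in-contact elements whose Coulomb margin is negative."""
    normal = np.asarray(normal, dtype=float)
    in_contact = normal > min_normal  # hypothetical contact threshold
    if not in_contact.any():
        return 0.0
    margin = slip_margin(normal, tangential, mu)
    return float(np.mean(margin[in_contact] < 0.0))


# Three elements: two in contact, one unloaded.
normal = [1.0, 1.0, 0.0]
tangential = [[0.1, 0.0], [0.6, 0.0], [0.0, 0.0]]
fraction = slipping_fraction(normal, tangential, mu=0.5)
```

Under this assumed model, a controller could request a higher grip force once `fraction` exceeds some chosen limit. Raising the normal force widens the friction cone, which is consistent with the abstract's description of signalling a necessary increase in grip force.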
