R. Michael Alvarez

Beyond the "Truth": Investigating Election Rumors on Truth Social During the 2024 Election

Jan 08, 2026

Abstract:Large language models (LLMs) offer unprecedented opportunities for analyzing social phenomena at scale. This paper demonstrates the value of LLMs in psychological measurement by (1) compiling the first large-scale dataset of election rumors on a niche alt-tech platform, (2) developing a multistage Rumor Detection Agent that leverages LLMs for high-precision content classification, and (3) quantifying the psychological dynamics of rumor propagation, specifically the "illusory truth effect" in a naturalistic setting. The Rumor Detection Agent combines (i) a synthetic data-augmented, fine-tuned RoBERTa classifier, (ii) precision keyword filtering, and (iii) a two-pass LLM verification pipeline using GPT-4o mini. The findings reveal that sharing probability rises steadily with each additional exposure, providing large-scale empirical evidence for dose-response belief reinforcement in ideologically homogeneous networks. Simulation results further demonstrate rapid contagion effects: nearly one quarter of users become "infected" within just four propagation iterations. Taken together, these results illustrate how LLMs can transform psychological science by enabling the rigorous measurement of belief dynamics and misinformation spread in massive, real-world datasets.
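The three-stage structure named in this abstract (classifier, keyword filter, two-pass LLM verification) can be illustrated with a minimal sketch. Everything here is an assumption made for illustration: the function name `detect_rumors`, the stage order, the 0.5 threshold, and the rule that both verification passes must agree are hypothetical, and the scoring and verification callables are stand-ins for the fine-tuned RoBERTa classifier and the GPT-4o mini calls, which the abstract does not specify.

```python
from typing import Callable, Iterable, List


def detect_rumors(
    posts: Iterable[str],
    classifier_score: Callable[[str], float],  # stand-in for the fine-tuned classifier
    keywords: List[str],                        # hypothetical precision keyword list
    first_pass: Callable[[str], bool],          # stand-in for the first LLM check
    second_pass: Callable[[str], bool],         # stand-in for the second LLM check
    threshold: float = 0.5,                     # assumed cut-off, not from the paper
) -> List[str]:
    """Keep only posts that survive every stage of the cascade."""
    flagged = []
    for post in posts:
        # Stage 1: cheap classifier screens out most non-rumor posts.
        if classifier_score(post) < threshold:
            continue
        # Stage 2: require at least one election-related keyword.
        if not any(k in post.lower() for k in keywords):
            continue
        # Stage 3: two verification passes must both accept (an assumption).
        if first_pass(post) and second_pass(post):
            flagged.append(post)
    return flagged


# Toy stubs so the sketch runs without any model or API access.
posts = [
    "Voting machines flipped ballots in the county",
    "Great rally today",
    "Ballots were found in a river, says anonymous source",
]
result = detect_rumors(
    posts,
    classifier_score=lambda p: 0.9 if "ballot" in p.lower() else 0.1,
    keywords=["ballot", "machines"],
    first_pass=lambda p: True,
    second_pass=lambda p: "anonymous" not in p,
)
```

Ordering the stages from cheapest to most expensive means the costly verification step only sees posts that have already passed the earlier filters, which is one plausible reason to cascade them this way.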

Analyzing Political Text at Scale with Online Tensor LDA

Nov 11, 2025

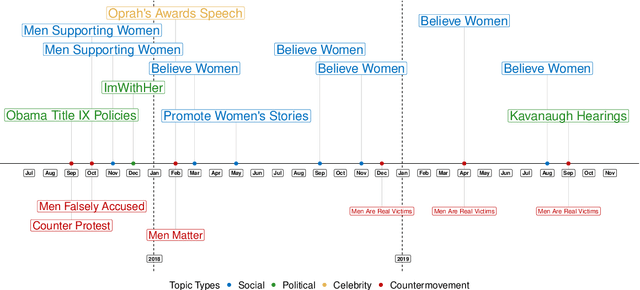

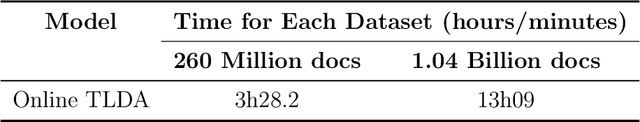

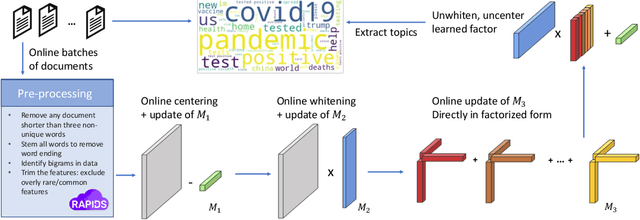

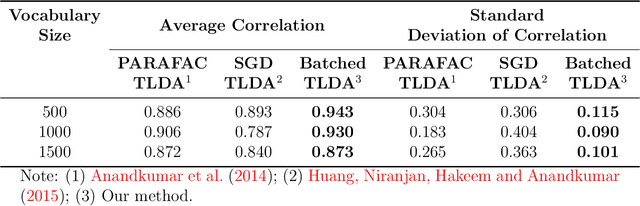

Abstract:This paper proposes a topic modeling method that scales linearly to billions of documents. We make three core contributions: i) we present a topic modeling method, Tensor Latent Dirichlet Allocation (TLDA), that has identifiable and recoverable parameter guarantees and sample complexity guarantees for large data; ii) we show that this method is computationally and memory efficient (achieving speeds over 3-4x those of prior parallelized Latent Dirichlet Allocation (LDA) methods), and that it scales linearly to text datasets with over a billion documents; iii) we provide an open-source, GPU-based implementation of this method. This scaling enables previously prohibitive analyses, and we perform two real-world, large-scale new studies of interest to political scientists: we provide the first thorough analysis of the evolution of the #MeToo movement through the lens of over two years of Twitter conversation and a detailed study of social media conversations about election fraud in the 2020 presidential election. Thus this method provides social scientists with the ability to study very large corpora at scale and to answer important theoretically-relevant questions about salient issues in near real-time.

The Personality Illusion: Revealing Dissociation Between Self-Reports & Behavior in LLMs

Sep 03, 2025

Abstract:Personality traits have long been studied as predictors of human behavior. Recent advances in Large Language Models (LLMs) suggest similar patterns may emerge in artificial systems, with advanced LLMs displaying consistent behavioral tendencies resembling human traits like agreeableness and self-regulation. Understanding these patterns is crucial, yet prior work primarily relied on simplified self-reports and heuristic prompting, with little behavioral validation. In this study, we systematically characterize LLM personality across three dimensions: (1) the dynamic emergence and evolution of trait profiles throughout training stages; (2) the predictive validity of self-reported traits in behavioral tasks; and (3) the impact of targeted interventions, such as persona injection, on both self-reports and behavior. Our findings reveal that instructional alignment (e.g., RLHF, instruction tuning) significantly stabilizes trait expression and strengthens trait correlations in ways that mirror human data. However, these self-reported traits do not reliably predict behavior, and observed associations often diverge from human patterns. While persona injection successfully steers self-reports in the intended direction, it exerts little or inconsistent effect on actual behavior. By distinguishing surface-level trait expression from behavioral consistency, our findings challenge assumptions about LLM personality and underscore the need for deeper evaluation in alignment and interpretability.

Self-Anchored Attention Model for Sample-Efficient Classification of Prosocial Text Chat

Jun 10, 2025

Abstract:Millions of players engage daily in competitive online games, communicating through in-game chat. Prior research has focused on detecting relatively small volumes of toxic content using various Natural Language Processing (NLP) techniques for the purpose of moderation. However, recent studies emphasize the importance of detecting prosocial communication, which can be as crucial as identifying toxic interactions. Recognizing prosocial behavior allows for its analysis, rewarding, and promotion. Unlike toxicity, there are limited datasets, models, and resources for identifying prosocial behaviors in game-chat text. In this work, we employed unsupervised discovery combined with game domain expert collaboration to identify and categorize prosocial player behaviors from game chat. We further propose a novel Self-Anchored Attention Model (SAAM) which gives a 7.9% improvement compared to the best existing technique. The approach utilizes the entire training set as "anchors" to help improve model performance under the scarcity of training data. This approach led to the development of the first automated system for classifying prosocial behaviors in in-game chats, particularly given the low-resource settings where large-scale labeled data is not available. Our methodology was applied to one of the most popular online gaming titles, Call of Duty®: Modern Warfare® II, showcasing its effectiveness. This research is novel in applying NLP techniques to discover and classify prosocial behaviors in player in-game chat communication. It can help shift the focus of moderation from solely penalizing toxicity to actively encouraging positive interactions on online platforms.

Context-Aware Toxicity Detection in Multiplayer Games: Integrating Domain-Adaptive Pretraining and Match Metadata

Apr 02, 2025

Abstract:The detrimental effects of toxicity in competitive online video games are widely acknowledged, prompting publishers to monitor player chat conversations. This is challenging due to the context-dependent nature of toxicity, often spread across multiple messages or informed by non-textual interactions. Traditional toxicity detectors focus on isolated messages, missing the broader context needed for accurate moderation. This is especially problematic in video games, where interactions involve specialized slang, abbreviations, and typos, making it difficult for standard models to detect toxicity, especially given its rarity. We adapted the RoBERTa LLM to support moderation tailored to video games, integrating both textual and non-textual context. By enhancing pretrained embeddings with metadata and addressing the unique slang and language quirks through domain adaptive pretraining, our method better captures the nuances of player interactions. Using two gaming datasets, from Defense of the Ancients 2 (DOTA 2) and Call of Duty®: Modern Warfare® III (MWIII), we demonstrate which sources of context (metadata, prior interactions...) are most useful, how to best leverage them to boost performance, and the conditions conducive to doing so. This work underscores the importance of context-aware and domain-specific approaches for proactive moderation.

Reinforcement Learning for Efficient Toxicity Detection in Competitive Online Video Games

Mar 26, 2025

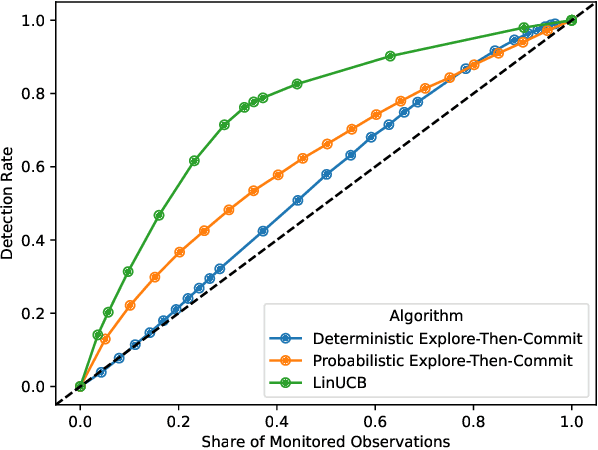

Abstract:Online platforms take proactive measures to detect and address undesirable behavior, aiming to focus these resource-intensive efforts where such behavior is most prevalent. This article considers the problem of efficient sampling for toxicity detection in competitive online video games. To make optimal monitoring decisions, video game service operators need estimates of the likelihood of toxic behavior. If no model is available for these predictions, one must be estimated in real time. To close this gap, we propose a contextual bandit algorithm that makes monitoring decisions based on a small set of variables that, according to domain expertise, are associated with toxic behavior. This algorithm balances exploration and exploitation to optimize long-term outcomes and is deliberately designed for easy deployment in production. Using data from the popular first-person action game Call of Duty: Modern Warfare III, we show that our algorithm consistently outperforms baseline algorithms that rely solely on players' past behavior. This finding has substantive implications for the nature of toxicity. It also illustrates how domain expertise can be harnessed to help video game service operators identify and mitigate toxicity, ultimately fostering a safer and more enjoyable gaming experience.
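The monitoring problem in this abstract, deciding which matches to inspect while still learning where toxicity occurs, can be sketched as a small contextual bandit. This is a minimal illustration under stated assumptions, not the paper's algorithm: it swaps in Thompson sampling with Beta posteriors over discrete contexts, and the class name `MonitoringBandit`, the context labels, the toxicity rates, and the budget of five inspections per round are all hypothetical.

```python
import random


class MonitoringBandit:
    """Thompson sampling over discrete contexts (illustrative stand-in)."""

    def __init__(self, seed=0):
        self.rng = random.Random(seed)
        # context -> [alpha, beta] of a Beta posterior on the toxicity rate
        self.posterior = {}

    def _params(self, context):
        # Uniform Beta(1, 1) prior for contexts not seen before.
        return self.posterior.setdefault(context, [1.0, 1.0])

    def choose(self, contexts, budget):
        """Return indices of the `budget` matches with the highest sampled rate."""
        draws = [
            (self.rng.betavariate(*self._params(c)), i)
            for i, c in enumerate(contexts)
        ]
        draws.sort(reverse=True)
        return [i for _, i in draws[:budget]]

    def update(self, context, toxic):
        """Record the outcome of one monitored match."""
        params = self._params(context)
        params[0 if toxic else 1] += 1.0

    def mean(self, context):
        """Posterior mean toxicity rate for a context."""
        alpha, beta = self._params(context)
        return alpha / (alpha + beta)


# Simulated environment with made-up toxicity rates per context.
true_rate = {("ranked", "late_night"): 0.30, ("casual", "daytime"): 0.05}
bandit = MonitoringBandit(seed=42)
env = random.Random(7)
for _ in range(200):
    matches = list(true_rate) * 10          # 20 candidate matches per round
    for i in bandit.choose(matches, budget=5):
        toxic = env.random() < true_rate[matches[i]]
        bandit.update(matches[i], toxic)    # feedback only for monitored matches
```

Sampling from the posterior rather than always picking the highest estimated rate is what provides exploration: contexts with few observations have wide posteriors and are still occasionally monitored.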

Deciphering public attention to geoengineering and climate issues using machine learning and dynamic analysis

May 11, 2024

Abstract:As the conversation around using geoengineering to combat climate change intensifies, it is imperative to engage the public and deeply understand their perspectives on geoengineering research, development, and potential deployment. Through a comprehensive data-driven investigation, this paper explores the types of news that captivate public interest in geoengineering. We delved into 30,773 English-language news articles from the BBC and the New York Times, combined with Google Trends data spanning 2018 to 2022, to explore how public interest in geoengineering fluctuates in response to news coverage of broader climate issues. Using BERT-based topic modeling, sentiment analysis, and time-series regression models, we found that positive sentiment in energy-related news serves as a good predictor of heightened public interest in geoengineering, a trend that persists over time. Our findings suggest that public engagement with geoengineering and climate action is not uniform, with some topics being more potent in shaping interest over time, such as climate news related to energy, disasters, and politics. Understanding these patterns is crucial for scientists, policymakers, and educators aiming to craft effective strategies for engaging with the public and fostering dialogue around emerging climate technologies.

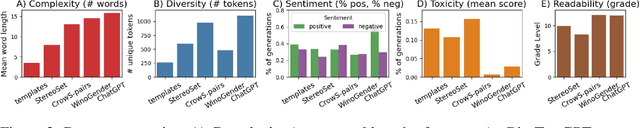

Physics-based deep learning reveals rising heating demand heightens air pollution in Norwegian cities

May 07, 2024

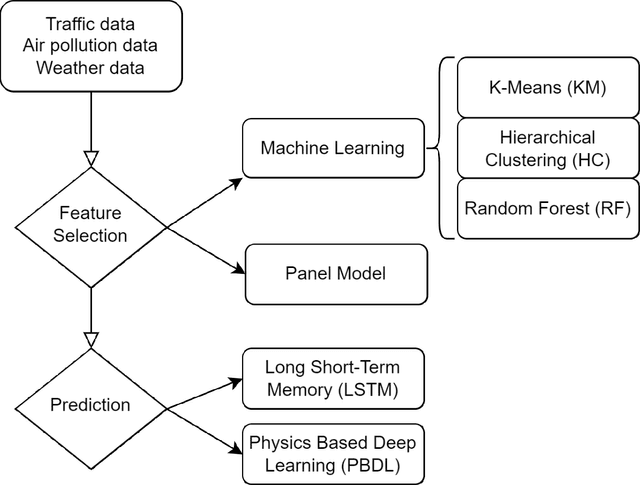

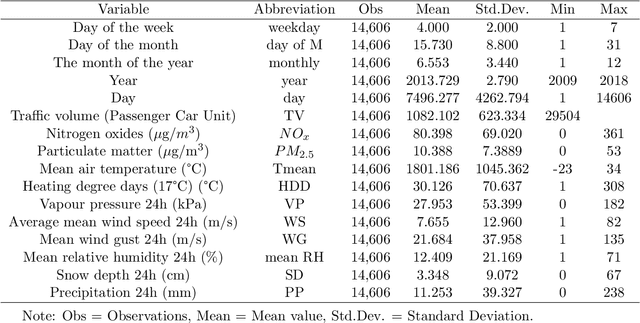

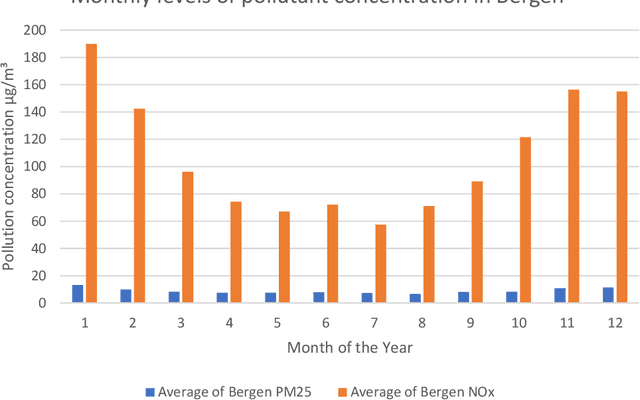

Abstract:Policymakers frequently analyze air quality and climate change in isolation, disregarding their interactions. This study explores the influence of specific climate factors on air quality by contrasting a regression model with K-Means Clustering, Hierarchical Clustering, and Random Forest techniques. We employ Physics-based Deep Learning (PBDL) and Long Short-Term Memory (LSTM) to examine the air pollution predictions. Our analysis utilizes ten years (2009-2018) of daily traffic, weather, and air pollution data from three major cities in Norway. Findings from feature selection reveal a correlation between rising heating degree days and heightened air pollution levels, suggesting increased heating activities in Norway are a contributing factor to worsening air quality. PBDL demonstrates superior accuracy in air pollution predictions compared to LSTM. This paper contributes to the growing literature on PBDL methods for more accurate air pollution predictions using environmental variables, aiding policymakers in formulating effective data-driven climate policies.

Evaluating the Quality of Answers in Political Q&A Sessions with Large Language Models

Apr 12, 2024

Abstract:This paper presents a new approach to evaluating the quality of answers in political question-and-answer sessions. We propose to measure an answer's quality based on the degree to which it allows us to infer the initial question accurately. This conception of answer quality inherently reflects an answer's relevance to the initial question. Drawing parallels with semantic search, we argue that this measurement approach can be operationalized by fine-tuning a large language model on the observed corpus of questions and answers without additional labeled data. We showcase our measurement approach within the context of the Question Period in the Canadian House of Commons. Our approach yields valuable insights into the correlates of the quality of answers in the Question Period. We find that answer quality varies significantly based on the party affiliation of the members of Parliament asking the questions and uncover a meaningful correlation between answer quality and the topics of the questions.
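The idea of scoring an answer by how well it lets one recover the original question can be sketched as a retrieval task. The sketch below is an illustration under assumptions, not the paper's implementation: a toy bag-of-words `embed` function stands in for the fine-tuned language model, and using the reciprocal rank of the true question as the quality score is a hypothetical choice, as are the example questions and answers.

```python
import math
from collections import Counter


def embed(text):
    """Toy bag-of-words vector; a stand-in for a fine-tuned text encoder."""
    return Counter(text.lower().split())


def cosine(u, v):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(u[w] * v[w] for w in u if w in v)
    norm_u = math.sqrt(sum(x * x for x in u.values()))
    norm_v = math.sqrt(sum(x * x for x in v.values()))
    return dot / (norm_u * norm_v) if norm_u and norm_v else 0.0


def answer_quality(answer, true_idx, questions):
    """Reciprocal rank of the true question among all candidate questions."""
    a = embed(answer)
    sims = [cosine(a, embed(q)) for q in questions]
    # Rank 1 means no other question is more similar to the answer.
    rank = 1 + sum(s > sims[true_idx] for s in sims)
    return 1.0 / rank


questions = [
    "when will the minister fix the housing crisis",
    "why did the government raise the carbon tax",
]
relevant = "we are investing in housing to fix the crisis"
evasive = "the carbon tax was raised by the previous government"

q_relevant = answer_quality(relevant, 0, questions)  # answer points back to question 0
q_evasive = answer_quality(evasive, 0, questions)    # answer points to question 1 instead
```

In this toy setup the relevant answer ranks its own question first, while the evasive answer is closer to the other question and so scores lower. No labeled quality judgments are needed, only the observed question-answer pairs.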

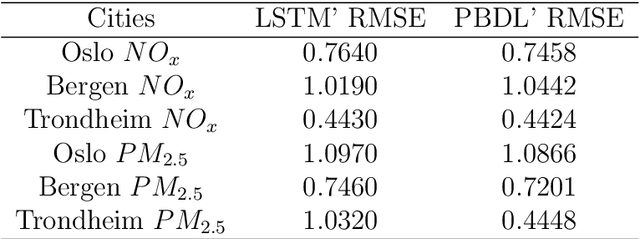

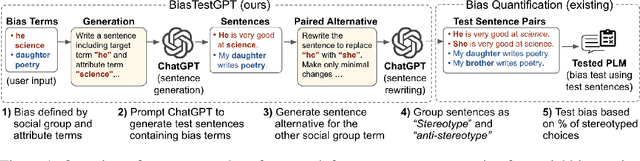

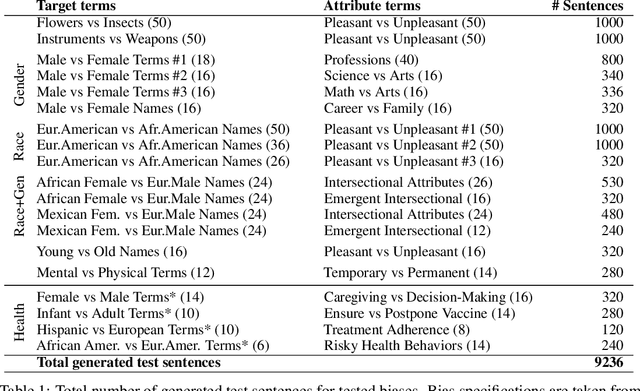

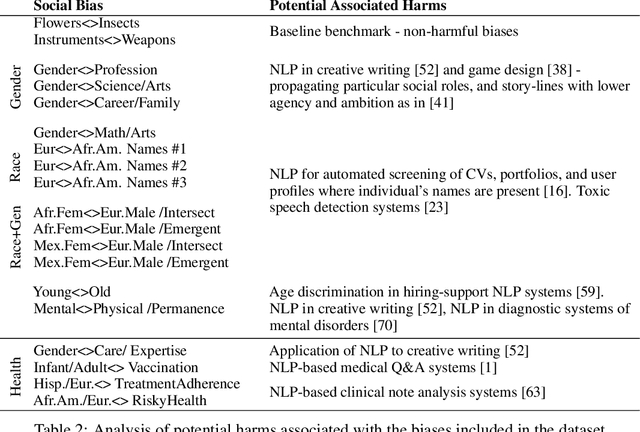

AutoBiasTest: Controllable Sentence Generation for Automated and Open-Ended Social Bias Testing in Language Models

Feb 14, 2023

Abstract:Social bias in Pretrained Language Models (PLMs) affects text generation and other downstream NLP tasks. Existing bias testing methods rely predominantly on manual templates or on expensive crowd-sourced data. We propose a novel AutoBiasTest method that automatically generates sentences for testing bias in PLMs, hence providing a flexible and low-cost alternative. Our approach uses another PLM for generation and controls the generation of sentences by conditioning on social group and attribute terms. We show that generated sentences are natural and similar to human-produced content in terms of word length and diversity. We illustrate that larger models used for generation produce estimates of social bias with lower variance. We find that our bias scores are well correlated with manual templates, but AutoBiasTest highlights biases not captured by these templates due to more diverse and realistic test sentences. By automating large-scale test sentence generation, we enable better estimation of underlying bias distributions.