Quanquan Shao

Object-Agnostic Suction Grasp Affordance Detection in Dense Cluster Using Self-Supervised Learning.docx

Jun 07, 2019

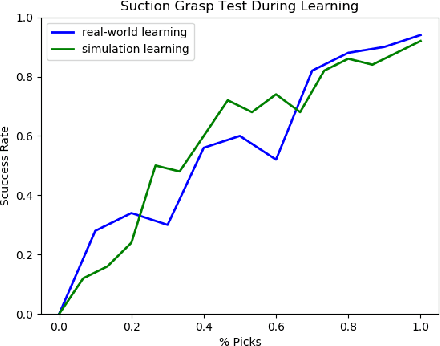

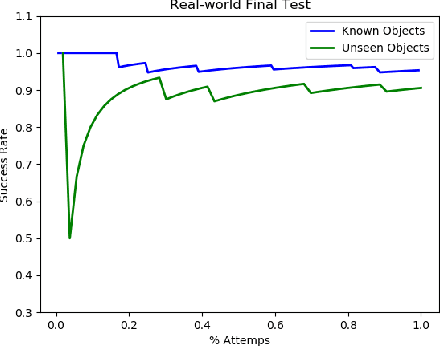

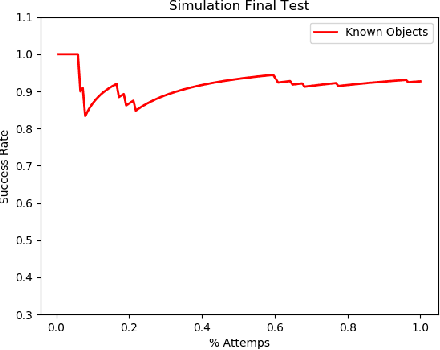

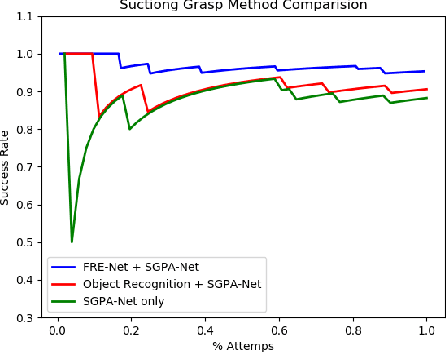

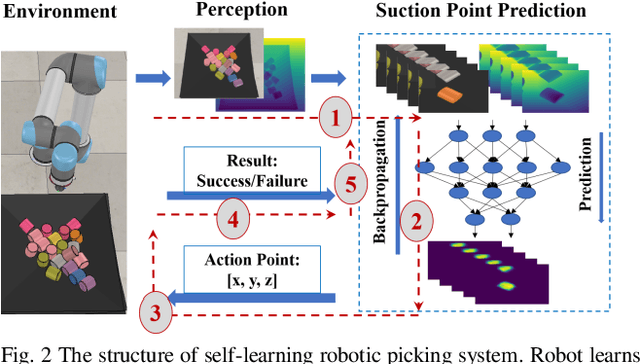

Abstract: In this paper we study the grasping problem in dense cluster, a challenging task in warehouse logistics scenarios. We introduce a robust two-step suction affordance detection method and use a vacuum suction pad to clear a box filled with seen and unseen objects. Two CNN-based networks are proposed: a Fast Region Estimation Network (FRE-Net) predicts which region contains pickable objects, and a Suction Grasp Point Affordance network (SGPA-Net) determines which point in that region is pickable. To train these two networks, we design a self-supervised learning pipeline that accumulates data, then trains and tests our method. In both virtual and real environments, within 1500 picks (~5 hours), we reach a picking accuracy of 95% for known objects and 90% for unseen objects with similar geometric features.
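The two-step idea in this abstract (choose a region first, then a point inside it) can be illustrated with a minimal sketch. This is not the authors' implementation: the function name `select_suction_point`, the grid-of-cells layout, and the use of precomputed score arrays in place of real FRE-Net and SGPA-Net outputs are all assumptions made for illustration.

```python
import numpy as np

def select_suction_point(region_scores, point_affordance, cell):
    """Two-step selection: best region first, then best pixel inside it.

    region_scores: (R, C) grid of per-region pickability scores
        (hypothetical stand-in for a region-estimation network output).
    point_affordance: (R*cell, C*cell) per-pixel suction scores
        (hypothetical stand-in for a point-affordance network output).
    cell: side length, in pixels, of one square region (assumed layout).
    """
    # Step 1: pick the region with the highest score.
    r, c = np.unravel_index(np.argmax(region_scores), region_scores.shape)
    # Step 2: search for the best pixel only inside that region.
    patch = point_affordance[r * cell:(r + 1) * cell, c * cell:(c + 1) * cell]
    pr, pc = np.unravel_index(np.argmax(patch), patch.shape)
    region = (int(r), int(c))
    pixel = (int(r * cell + pr), int(c * cell + pc))
    return region, pixel
```

Restricting the pixel search to the selected region means a high-scoring pixel elsewhere in the image is ignored, which is the practical effect of a coarse-to-fine scheme like the one described.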

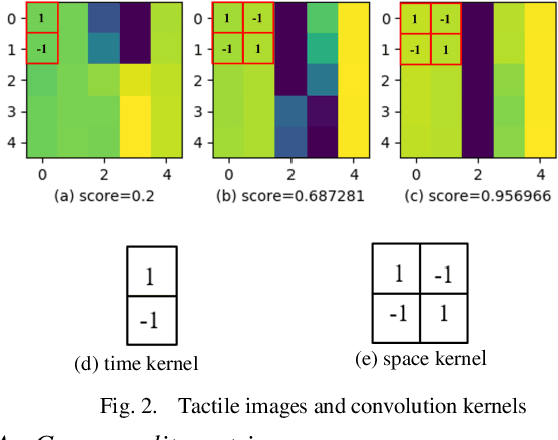

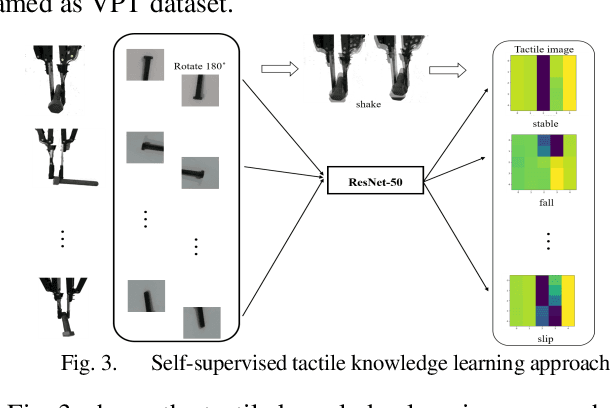

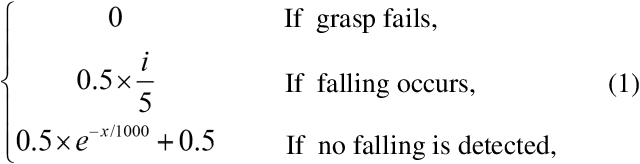

Bayesian Grasp: Robotic visual stable grasp based on prior tactile knowledge

May 30, 2019

Abstract: Robotic grasp detection is a fundamental capability for intelligent manipulation in unstructured environments. Previous work mainly fused visual and tactile sensing to achieve stable grasps, but the whole process depends heavily on regrasping, which spends much time on adjustment and evaluation. We propose a novel way to improve robotic grasping: by using learned tactile knowledge, a robot can achieve a stable grasp from an image. First, we construct a prior tactile knowledge learning framework with a novel grasp quality metric, determined by measuring the grasp's resistance to external perturbations. Second, we propose a multi-phase Bayesian Grasp architecture that generates stable grasp configurations from a single RGB image based on prior tactile knowledge. Results show that this framework can classify the outcome of grasps with an average accuracy of 86% on known objects and 79% on novel objects. The prior tactile knowledge improves the success rate by 55% over traditional vision-based strategies.
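As a rough illustration of how a prior can be combined with image evidence, the sketch below applies Bayes' rule to a binary stable/unstable outcome. The abstract does not specify the architecture at this level, so the function name `posterior_stable` and the interpretation of its three inputs (a tactile-derived prior and two visual likelihoods) are assumptions, not the paper's formulation.

```python
def posterior_stable(prior_stable, lik_stable, lik_unstable):
    """P(stable | visual evidence) via Bayes' rule for a binary outcome.

    prior_stable: assumed prior probability that the grasp is stable
        (e.g. learned from earlier tactile trials; hypothetical input).
    lik_stable: P(evidence | stable), hypothetical visual likelihood.
    lik_unstable: P(evidence | unstable), hypothetical visual likelihood.
    """
    numerator = prior_stable * lik_stable
    evidence = numerator + (1.0 - prior_stable) * lik_unstable
    if evidence == 0.0:
        raise ValueError("evidence has zero probability under both outcomes")
    return numerator / evidence


def best_candidate(candidates):
    """Return the index of the grasp candidate with the highest posterior.

    candidates: list of (prior_stable, lik_stable, lik_unstable) tuples.
    """
    scores = [posterior_stable(*c) for c in candidates]
    return max(range(len(scores)), key=scores.__getitem__)
```

With an uninformative prior of 0.5, the posterior is driven entirely by the likelihood ratio; a stronger prior shifts the ranking of otherwise similar candidates.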

Combining RGB and Points to Predict Grasping Region for Robotic Bin-Picking

Apr 24, 2019

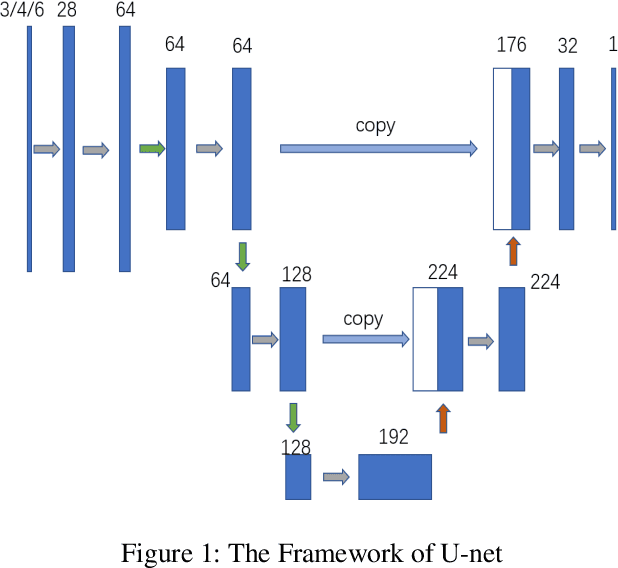

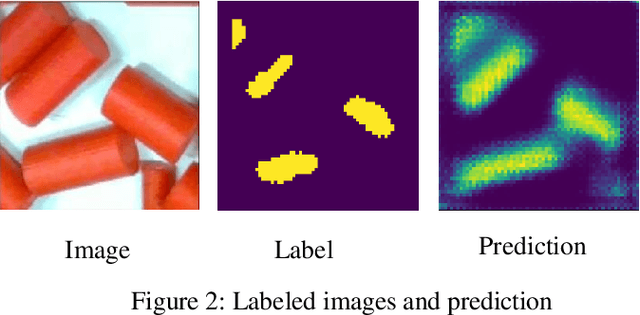

Abstract: This paper focuses on robotic picking tasks in cluttered scenarios. Because of the diversity of objects and the clutter created when they are placed, it is very difficult to recognize objects and estimate their poses before grasping. Here, we use U-Net, a type of convolutional neural network (CNN), to combine RGB images and depth information and predict the picking region without recognition or pose estimation. We compare the effectiveness of different visual inputs to the network, including RGB, RGB-D and RGB-Points, and find that the RGB-Points input achieves a precision of 95.74%.
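One plausible way to form an "RGB-Points" input is to stack the colour image with a per-pixel XYZ map along the channel axis, and precision can then be computed on binary region masks. The sketch below assumes exactly that; the abstract does not state the actual input encoding or how precision was measured, and the names `make_rgb_points_input` and `precision` are illustrative.

```python
import numpy as np

def make_rgb_points_input(rgb, points):
    """Stack an RGB image and a per-pixel XYZ map into one 6-channel array.

    rgb: (H, W, 3) uint8 image.
    points: (H, W, 3) array of XYZ coordinates per pixel (assumed layout).
    """
    if rgb.shape != points.shape or rgb.shape[-1] != 3:
        raise ValueError("expected two (H, W, 3) arrays of equal size")
    rgb_scaled = rgb.astype(np.float32) / 255.0  # scale colours to [0, 1]
    return np.concatenate([rgb_scaled, points.astype(np.float32)], axis=-1)

def precision(pred_mask, true_mask):
    """Fraction of predicted-positive pixels that are truly positive."""
    pred = np.asarray(pred_mask, dtype=bool)
    true = np.asarray(true_mask, dtype=bool)
    predicted = pred.sum()
    if predicted == 0:
        return 0.0  # convention chosen here for the empty-prediction case
    return float(np.logical_and(pred, true).sum()) / float(predicted)
```

An RGB-D variant would follow the same pattern with a single depth channel in place of the three XYZ channels.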

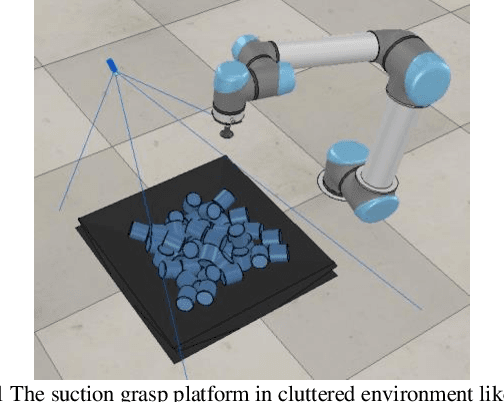

Suction Grasp Region Prediction using Self-supervised Learning for Object Picking in Dense Clutter

Apr 24, 2019

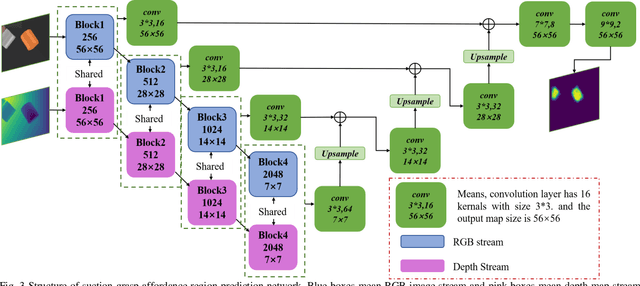

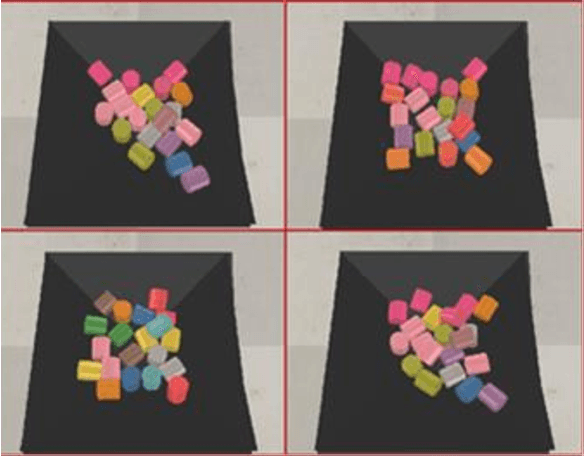

Abstract: This paper focuses on robotic picking tasks in cluttered scenarios. Because of the diversity of poses, stacking types and complicated backgrounds in bin-picking situations, it is very difficult to recognize objects and estimate their poses before grasping them. Here, we combine ResNet with the U-Net structure, a type of convolutional neural network (CNN) framework, to predict the picking region without recognition or pose estimation. This lets the robotic picking system learn picking skills from scratch. At the same time, we train the network end to end with online samples. At the end of the paper, several experiments demonstrate the performance of our method.
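Training with online samples from self-supervised picks typically means each attempt labels only the area the robot actually tried. The sketch below shows one common way to encode that as a sparse label plus a weight mask; it is an assumption about how such samples could be built, not the procedure from the paper, and `make_online_sample` and `radius` are hypothetical names.

```python
import numpy as np

def make_online_sample(image, pick_rc, success, radius=1):
    """Turn one pick attempt into (image, label, weight) for training.

    image: (H, W, C) array observed before the pick.
    pick_rc: (row, col) pixel where suction was attempted.
    success: True if the object was lifted, False otherwise.
    radius: half-width of the labelled square around the pick (assumed).
    """
    h, w = image.shape[:2]
    label = np.zeros((h, w), dtype=np.float32)
    weight = np.zeros((h, w), dtype=np.float32)
    r, c = pick_rc
    # Clip the labelled window to the image bounds.
    r0, r1 = max(r - radius, 0), min(r + radius + 1, h)
    c0, c1 = max(c - radius, 0), min(c + radius + 1, w)
    label[r0:r1, c0:c1] = 1.0 if success else 0.0
    # Only the attempted area contributes to the loss; the rest is unknown.
    weight[r0:r1, c0:c1] = 1.0
    return image, label, weight
```

Multiplying a per-pixel loss by `weight` keeps unattempted pixels from being treated as negatives, since their true pickability was never observed.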
