Bayesian Grasp: Robotic visual stable grasp based on prior tactile knowledge

May 30, 2019

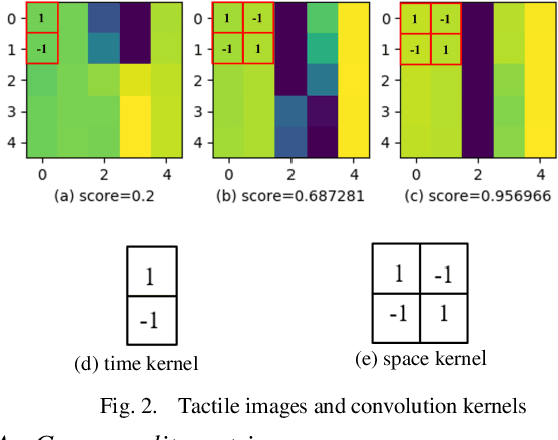

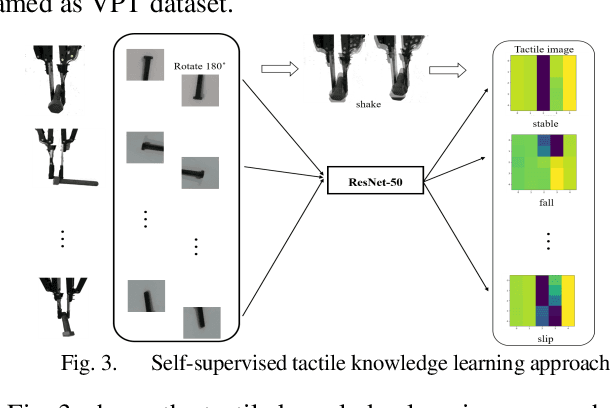

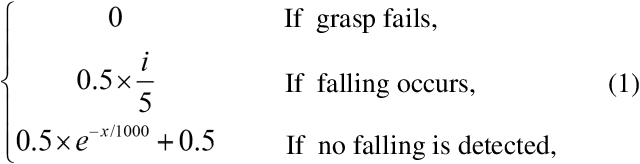

Robotic grasp detection is a fundamental capability for intelligent manipulation in unstructured environments. Previous work mainly fused visual and tactile sensing to achieve a stable grasp, but the whole process depends heavily on regrasping, which spends considerable time on adjustment and evaluation. We propose a novel way to improve robotic grasping: by using learned tactile knowledge, a robot can achieve a stable grasp from an image. First, we construct a prior tactile knowledge learning framework with a novel grasp quality metric, which is determined by measuring a grasp's resistance to external perturbations. Second, we propose a multi-phase Bayesian Grasp architecture that generates stable grasp configurations from a single RGB image based on prior tactile knowledge. Results show that this framework can classify the outcome of grasps with an average accuracy of 86% on known objects and 79% on novel objects. The prior tactile knowledge improves the success rate by 55% over traditional vision-based strategies.
