Qiyue Xia

Interpretability by Design for Efficient Multi-Objective Reinforcement Learning

Jun 04, 2025

Abstract:Multi-objective reinforcement learning (MORL) aims to optimise several, often conflicting, goals in order to improve the flexibility and reliability of RL in practical tasks. This can be achieved by finding diverse policies that are optimal for some objective preferences and non-dominated by optimal policies for other preferences, so that they form a Pareto front in the multi-objective performance space. The relation between the multi-objective performance space and the parameter space that represents the policies is generally non-unique. Using a training scheme based on a locally linear map between the parameter space and the performance space, we show that an approximate Pareto front can provide an interpretation of the current parameter vectors in terms of the objectives, which enables an effective search within contiguous solution domains. Experiments are conducted with and without retraining across different domains, and the comparison with previous methods demonstrates the efficiency of our approach.
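To make the notion of non-dominated policies concrete, here is a minimal sketch of a Pareto filter over per-policy returns. It is not the training scheme from the paper: the function name `pareto_mask` and the sample return vectors are hypothetical, and the sketch assumes every objective is to be maximised.

```python
import numpy as np

def pareto_mask(returns):
    """Boolean mask of non-dominated rows, assuming every objective is maximised.

    `returns` has one row per policy and one column per objective
    (hypothetical layout, chosen for illustration).
    """
    returns = np.asarray(returns, dtype=float)
    mask = np.ones(returns.shape[0], dtype=bool)
    for i in range(returns.shape[0]):
        # Row j dominates row i if it is >= in all objectives and > in at least one.
        at_least_as_good = (returns >= returns[i]).all(axis=1)
        strictly_better = (returns > returns[i]).any(axis=1)
        if (at_least_as_good & strictly_better).any():
            mask[i] = False
    return mask

# Hypothetical two-objective returns for five policies.
example_returns = np.array([[1.0, 5.0], [2.0, 4.0], [3.0, 3.0], [2.0, 2.0], [1.0, 1.0]])
front = example_returns[pareto_mask(example_returns)]
```

The pairwise comparison is quadratic in the number of policies, which is usually acceptable for the small candidate sets that arise when inspecting an approximate front.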

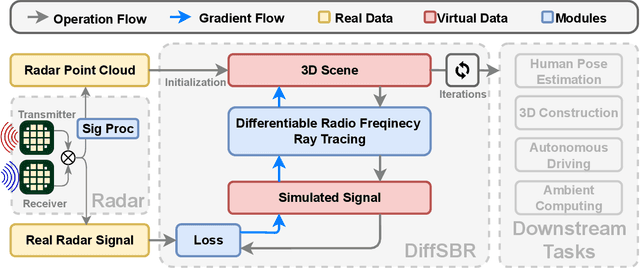

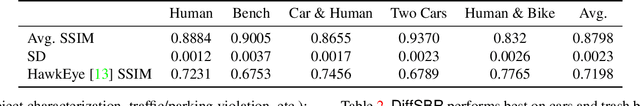

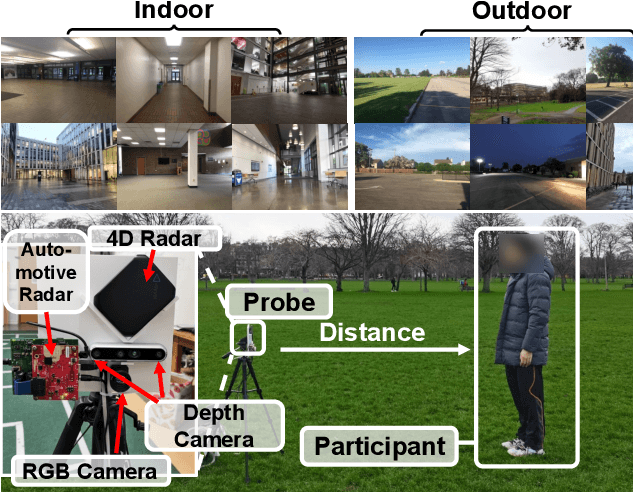

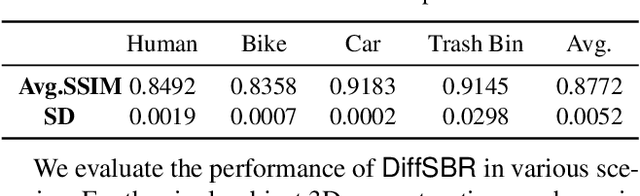

Differentiable Radio Frequency Ray Tracing for Millimeter-Wave Sensing

Nov 22, 2023

Abstract:Millimeter wave (mmWave) sensing is an emerging technology with applications in 3D object characterization and environment mapping. However, realizing precise 3D reconstruction from sparse mmWave signals remains challenging. Existing methods rely on data-driven learning, constrained by dataset availability and difficulty in generalization. We propose DiffSBR, a differentiable framework for mmWave-based 3D reconstruction. DiffSBR incorporates a differentiable ray tracing engine to simulate radar point clouds from virtual 3D models. A gradient-based optimizer refines the model parameters to minimize the discrepancy between simulated and real point clouds. Experiments using various radar hardware validate DiffSBR's capability for fine-grained 3D reconstruction, even for novel objects unseen by the radar previously. By integrating physics-based simulation with gradient optimization, DiffSBR transcends the limitations of data-driven approaches and pioneers a new paradigm for mmWave sensing.
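The optimisation loop described in the abstract can be illustrated with a toy example. This sketch does not reproduce DiffSBR or its ray tracing engine: a rigid translation of a template point cloud stands in for the simulator, the discrepancy is a one-sided nearest-neighbour squared distance, and all names (`nearest_real_points`, `fit_translation`) are hypothetical.

```python
import numpy as np

def nearest_real_points(sim, real):
    """For each simulated point, return its nearest point in the real cloud."""
    sq_dist = ((sim[:, None, :] - real[None, :, :]) ** 2).sum(axis=2)
    return real[sq_dist.argmin(axis=1)]

def fit_translation(template, real, steps=200, lr=0.1):
    """Gradient descent on a translation t that moves `template` onto `real`.

    Loss: mean over simulated points of the squared distance to the nearest
    real point. With the matches held fixed within a step, its gradient with
    respect to t is 2 * mean(sim - matched_real).
    """
    t = np.zeros(template.shape[1])
    for _ in range(steps):
        sim = template + t  # stand-in for the simulator output
        grad = 2.0 * (sim - nearest_real_points(sim, real)).mean(axis=0)
        t -= lr * grad
    return t

# Hypothetical data: a 3x3x3 grid of points and a shifted copy as the "measurement".
axes = np.arange(3.0)
template = np.stack(np.meshgrid(axes, axes, axes), axis=-1).reshape(-1, 3)
true_shift = np.array([0.2, -0.1, 0.15])
estimated_shift = fit_translation(template, template + true_shift)
```

In a real pipeline the translation would be replaced by the full set of model parameters, and the gradient would come from automatic differentiation through the simulator rather than a hand-derived formula.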

Multimodal Indoor Localization Using Crowdsourced Radio Maps

Nov 17, 2023

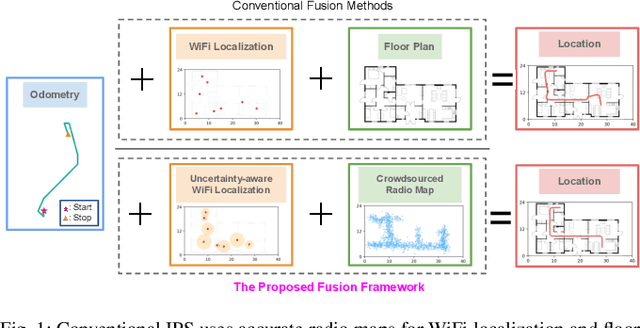

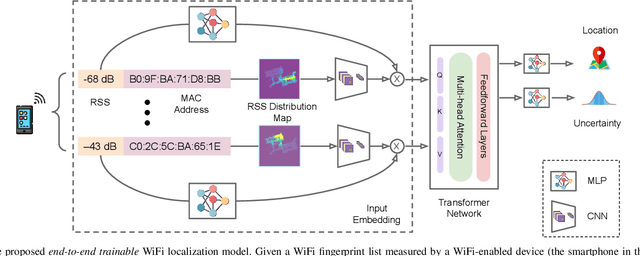

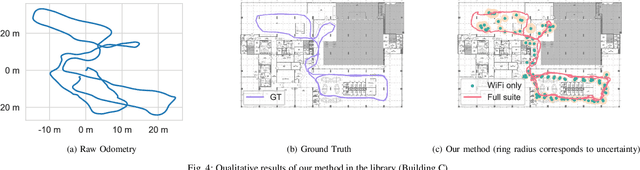

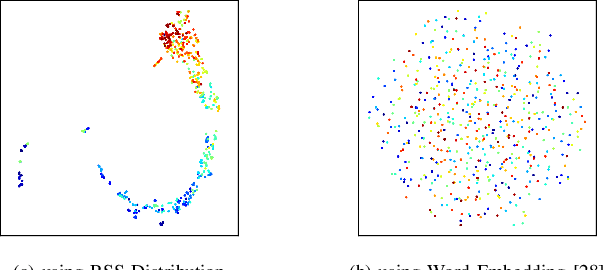

Abstract:Indoor Positioning Systems (IPS) traditionally rely on odometry and building infrastructure such as WiFi, often supplemented by building floor plans for increased accuracy. However, floor plans are limited in availability and are not always updated in a timely manner, which restricts their wide applicability. In contrast, the proliferation of smartphones and WiFi-enabled robots has made crowdsourced radio maps, databases pairing locations with their corresponding Received Signal Strengths (RSS), increasingly accessible. These radio maps not only provide WiFi fingerprint-location pairs but also encode movement regularities akin to the constraints imposed by floor plans. This work investigates the possibility of leveraging these radio maps as a substitute for floor plans in multimodal IPS. We introduce a new framework to address the challenges of radio map inaccuracies and sparse coverage. Our proposed system integrates an uncertainty-aware neural network model for WiFi localization and a bespoke Bayesian fusion technique for optimal fusion. Extensive evaluations on multiple real-world sites indicate a significant performance enhancement, with results showing ~25% improvement over the best baseline.
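As a rough illustration of how an uncertainty-aware estimate can be combined with another source, the sketch below fuses two independent Gaussian position estimates by inverse-variance weighting. This is the textbook product of Gaussians, not the bespoke fusion technique from the paper; the function name `fuse_gaussians`, the diagonal-covariance assumption, and the sample numbers are all hypothetical.

```python
import numpy as np

def fuse_gaussians(mu_a, var_a, mu_b, var_b):
    """Fuse two independent Gaussian estimates with diagonal covariance.

    Each estimate is weighted by its inverse variance, so the more certain
    source dominates the fused mean.
    """
    mu_a, var_a, mu_b, var_b = (
        np.asarray(x, dtype=float) for x in (mu_a, var_a, mu_b, var_b)
    )
    fused_var = 1.0 / (1.0 / var_a + 1.0 / var_b)
    fused_mu = fused_var * (mu_a / var_a + mu_b / var_b)
    return fused_mu, fused_var

# Hypothetical 2D positions in metres: an odometry prediction and a WiFi estimate.
odometry_mu, odometry_var = [0.0, 0.0], [1.0, 1.0]
wifi_mu, wifi_var = [2.0, 4.0], [1.0, 3.0]
fused_mu, fused_var = fuse_gaussians(odometry_mu, odometry_var, wifi_mu, wifi_var)
```

The fused variance is never larger than either input variance, which is why a network that reports its own uncertainty is useful here: unreliable WiFi estimates are down-weighted automatically.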

Robust Human Detection under Visual Degradation via Thermal and mmWave Radar Fusion

Jul 07, 2023

Abstract:The majority of human detection methods rely on sensors that use visible light (e.g., RGB cameras), but such sensors are limited in scenarios with degraded vision conditions. In this paper, we present a multimodal human detection system that combines portable thermal cameras and single-chip mmWave radars. To mitigate the noisy detection features caused by the low contrast of thermal cameras and the multi-path noise of radar point clouds, we propose a Bayesian feature extractor and a novel uncertainty-guided fusion method that surpasses a variety of competing methods, both single-modal and multi-modal. We evaluate the proposed method on real-world data and demonstrate that our approach outperforms state-of-the-art methods by a large margin.
