Qitan Lv

HIPPO: Accelerating Video Large Language Models Inference via Holistic-aware Parallel Speculative Decoding

Jan 13, 2026

Abstract:Speculative decoding (SD) has emerged as a promising approach to accelerate LLM inference without sacrificing output quality. Existing SD methods tailored for video-LLMs primarily focus on pruning redundant visual tokens to mitigate the computational burden of massive visual inputs. However, existing methods do not achieve inference acceleration comparable to text-only LLMs. We observe from extensive experiments that this phenomenon mainly stems from two limitations: (i) their pruning strategies inadequately preserve visual semantic tokens, degrading draft quality and acceptance rates; (ii) even with aggressive pruning (e.g., 90% visual tokens removed), the draft model's remaining inference cost limits overall speedup. To address these limitations, we propose HIPPO, a general holistic-aware parallel speculative decoding framework. Specifically, HIPPO proposes (i) a semantic-aware token preservation method, which fuses global attention scores with local visual semantics to retain semantic information at high pruning ratios; (ii) a video parallel SD algorithm that decouples and overlaps draft generation and target verification phases. Experiments on four video-LLMs across six benchmarks demonstrate HIPPO's effectiveness, yielding up to 3.51x speedup compared to vanilla auto-regressive decoding.

KALE: Enhancing Knowledge Manipulation in Large Language Models via Knowledge-aware Learning

Jan 12, 2026

Abstract:Despite the impressive performance of large language models (LLMs) pretrained on vast knowledge corpora, advancing their knowledge manipulation -- the ability to effectively recall, reason, and transfer relevant knowledge -- remains challenging. Existing methods mainly leverage Supervised Fine-Tuning (SFT) on labeled datasets to enhance LLMs' knowledge manipulation ability. However, we observe that SFT models still exhibit the known&incorrect phenomenon, where they explicitly possess relevant knowledge for a given question but fail to leverage it for correct answers. To address this challenge, we propose KALE (Knowledge-Aware LEarning), a post-training framework that leverages knowledge graphs (KGs) to generate high-quality rationales and enhance LLMs' knowledge manipulation ability. Specifically, KALE first introduces a Knowledge-Induced (KI) data synthesis method that efficiently extracts multi-hop reasoning paths from KGs to generate high-quality rationales for question-answer pairs. Then, KALE employs a Knowledge-Aware (KA) fine-tuning paradigm that enhances knowledge manipulation by internalizing rationale-guided reasoning through minimizing the KL divergence between predictions with and without rationales. Extensive experiments on eight popular benchmarks across six different LLMs demonstrate the effectiveness of KALE, achieving accuracy improvements of up to 11.72% and an average of 4.18%.
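
The KL-divergence objective mentioned in the KALE abstract can be illustrated with a small numeric sketch. This is not the authors' implementation: the logits, the 4-token vocabulary, and the direction of the divergence (rationale-conditioned distribution as the reference) are all assumptions made for illustration.

```python
import math

def softmax(logits):
    """Convert raw logits into a probability distribution."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def kl_divergence(p, q, eps=1e-12):
    """KL(p || q) for two discrete distributions of equal length."""
    return sum(pi * math.log((pi + eps) / (qi + eps)) for pi, qi in zip(p, q))

# Hypothetical logits over a 4-token vocabulary at one answer position.
logits_with_rationale = [2.0, 0.5, 0.1, -1.0]     # model sees question + rationale
logits_without_rationale = [1.0, 1.2, 0.1, -1.0]  # model sees question only

p_with = softmax(logits_with_rationale)
p_without = softmax(logits_without_rationale)

# Assumed direction: pull the rationale-free prediction toward the
# rationale-guided one. Training would minimize this quantity.
loss = kl_divergence(p_with, p_without)
print(f"KL(with || without) = {loss:.4f}")
```

The loss is zero only when the two distributions coincide, which is the sense in which minimizing it would push the model to answer without a rationale as it does with one.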

TALON: Confidence-Aware Speculative Decoding with Adaptive Token Trees

Jan 12, 2026

Abstract:Speculative decoding (SD) has become a standard technique for accelerating LLM inference without sacrificing output quality. Recent advances in speculative decoding have shifted from sequential chain-based drafting to tree-structured generation, where the draft model constructs a tree of candidate tokens to explore multiple possible drafts in parallel. However, existing tree-based SD methods typically build a fixed-width, fixed-depth draft tree, which fails to adapt to the varying difficulty of tokens and contexts. As a result, the draft model cannot dynamically adjust the tree structure to early stop on difficult tokens and extend generation for simple ones. To address these challenges, we introduce TALON, a training-free, budget-driven adaptive tree expansion framework that can be plugged into existing tree-based methods. Unlike static methods, TALON constructs the draft tree iteratively until a fixed token budget is met, using a hybrid expansion strategy that adaptively allocates the node budget to each layer of the draft tree. This framework naturally shapes the draft tree into a "deep-and-narrow" form for deterministic contexts and a "shallow-and-wide" form for uncertain branches, effectively optimizing the trade-off between exploration width and generation depth under a given budget. Extensive experiments across 5 models and 6 datasets demonstrate that TALON consistently outperforms state-of-the-art EAGLE-3, achieving up to 5.16x end-to-end speedup over auto-regressive decoding.
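
To make the idea of budget-driven tree growth concrete, here is a minimal sketch. It uses a plain best-first rule (always expand the frontier node with the highest joint probability), which is a swapped-in simplification and not TALON's hybrid expansion strategy; `build_draft_tree`, `next_token_probs`, and the toy distributions are hypothetical names introduced for illustration.

```python
import heapq

def build_draft_tree(next_token_probs, budget, top_k=3):
    """Grow a draft tree until it holds `budget` nodes.

    next_token_probs(path) -> {token: prob} is a hypothetical stand-in
    for a draft model. Returns a list of (path, joint_probability).
    """
    tree = []
    # Max-heap via negated joint probability; the root is the empty path.
    frontier = [(-1.0, ())]
    while frontier and len(tree) < budget:
        neg_p, path = heapq.heappop(frontier)
        if path:  # the root itself is not a draft token
            tree.append((path, -neg_p))
        ranked = sorted(next_token_probs(path).items(), key=lambda kv: -kv[1])
        for tok, p in ranked[:top_k]:
            heapq.heappush(frontier, (neg_p * p, path + (tok,)))
    return tree

# Toy draft models: one confident, one uncertain (assumed distributions).
confident = lambda path: {"a": 0.90, "b": 0.05, "c": 0.05}
uncertain = lambda path: {"a": 0.34, "b": 0.33, "c": 0.33}

deep_tree = build_draft_tree(confident, budget=4)
wide_tree = build_draft_tree(uncertain, budget=4)

print(max(len(path) for path, _ in deep_tree))  # depth under a confident model
print(max(len(path) for path, _ in wide_tree))  # depth under an uncertain model
```

With the same budget of four nodes, the confident model yields a single chain of depth four, while the uncertain model spends three nodes on the first layer. This mirrors the "deep-and-narrow" versus "shallow-and-wide" shapes described in the abstract, though only under the simplified expansion rule assumed here.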

SafeWork-R1: Coevolving Safety and Intelligence under the AI-45° Law

Jul 24, 2025

Abstract:We introduce SafeWork-R1, a cutting-edge multimodal reasoning model that demonstrates the coevolution of capabilities and safety. It is developed by our proposed SafeLadder framework, which incorporates large-scale, progressive, safety-oriented reinforcement learning post-training, supported by a suite of multi-principled verifiers. Unlike previous alignment methods such as RLHF that simply learn human preferences, SafeLadder enables SafeWork-R1 to develop intrinsic safety reasoning and self-reflection abilities, giving rise to safety "aha" moments. Notably, SafeWork-R1 achieves an average improvement of 46.54% over its base model Qwen2.5-VL-72B on safety-related benchmarks without compromising general capabilities, and delivers state-of-the-art safety performance compared to leading proprietary models such as GPT-4.1 and Claude Opus 4. To further bolster its reliability, we implement two distinct inference-time intervention methods and a deliberative search mechanism, enforcing step-level verification. Finally, we further develop SafeWork-R1-InternVL3-78B, SafeWork-R1-DeepSeek-70B, and SafeWork-R1-Qwen2.5VL-7B. All resulting models demonstrate that safety and capability can co-evolve synergistically, highlighting the generalizability of our framework in building robust, reliable, and trustworthy general-purpose AI.

LogitSpec: Accelerating Retrieval-based Speculative Decoding via Next Next Token Speculation

Jul 02, 2025

Abstract:Speculative decoding (SD), where a small draft model is employed to propose draft tokens in advance and then the target model validates them in parallel, has emerged as a promising technique for LLM inference acceleration. Many efforts to improve SD eliminate the need for a draft model and generate draft tokens in a retrieval-based manner, which further alleviates the drafting overhead and significantly reduces the difficulty of deployment. However, retrieval-based SD relies on a matching paradigm to retrieve the most relevant reference as the draft tokens, and these methods often fail to find matched and accurate draft tokens. To address this challenge, we propose LogitSpec to effectively expand the retrieval range and find the most relevant reference as drafts. Our LogitSpec is motivated by the observation that the logit of the last token can not only predict the next token but also speculate the next next token. Specifically, LogitSpec generates draft tokens in two steps: (1) utilizing the last logit to speculate the next next token; (2) retrieving relevant references for both the next token and the next next token. LogitSpec is training-free and plug-and-play, and can be easily integrated into existing LLM inference frameworks. Extensive experiments on a wide range of text generation benchmarks demonstrate that LogitSpec can achieve up to 2.61x speedup and 3.28 mean accepted tokens per decoding step. Our code is available at https://github.com/smart-lty/LogitSpec.

Coarse-to-Fine Highlighting: Reducing Knowledge Hallucination in Large Language Models

Oct 19, 2024

Abstract:Generation of plausible but incorrect factual information, often termed hallucination, has attracted significant research interest. Retrieval-augmented language model (RALM) -- which enhances models with up-to-date knowledge -- emerges as a promising method to reduce hallucination. However, existing RALMs may instead exacerbate hallucination when retrieving lengthy contexts. To address this challenge, we propose COFT, a novel COarse-to-Fine highlighTing method to focus on different granularity-level key texts, thereby avoiding getting lost in lengthy contexts. Specifically, COFT consists of three components: recaller, scorer, and selector. First, recaller applies a knowledge graph to extract potential key entities in a given context. Second, scorer measures the importance of each entity by calculating its contextual weight. Finally, selector selects high contextual weight entities with a dynamic threshold algorithm and highlights the corresponding paragraphs, sentences, or words in a coarse-to-fine manner. Extensive experiments on the knowledge hallucination benchmark demonstrate the effectiveness of COFT, with gains of over 30% in F1 score. Moreover, COFT also exhibits remarkable versatility across various long-form tasks, such as reading comprehension and question answering.

Parallel Speculative Decoding with Adaptive Draft Length

Aug 13, 2024

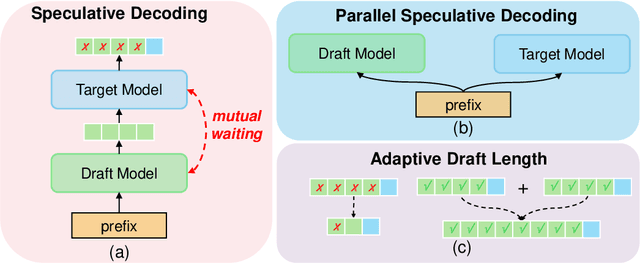

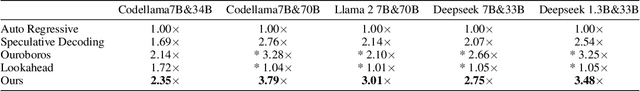

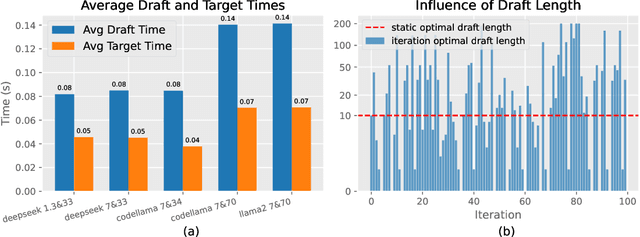

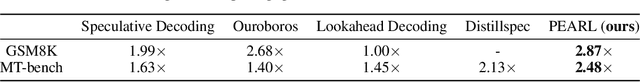

Abstract:Speculative decoding (SD), where an extra draft model is employed to provide multiple draft tokens first and then the original target model verifies these tokens in parallel, has shown great power for LLM inference acceleration. However, existing SD methods suffer from the mutual waiting problem, i.e., the target model gets stuck when the draft model is guessing tokens, and vice versa. This problem is directly incurred by the asynchronous execution of the draft model and the target model, and is exacerbated by the fixed draft length in speculative decoding. To address these challenges, we propose a conceptually simple, flexible, and general framework to boost speculative decoding, namely Parallel spEculative decoding with Adaptive dRaft Length (PEARL). Specifically, PEARL proposes pre-verify to verify the first draft token in advance during the drafting phase, and post-verify to generate more draft tokens during the verification phase. PEARL parallelizes the drafting and verification phases by applying these two strategies, and achieves adaptive draft length for different scenarios, which effectively alleviates the mutual waiting problem. Moreover, we theoretically demonstrate that the mean number of accepted tokens of PEARL exceeds that of existing draft-then-verify methods. Experiments on various text generation benchmarks demonstrate the effectiveness of PEARL, with speedups of up to 3.79x and 1.52x over auto-regressive decoding and vanilla speculative decoding, respectively.
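
For readers unfamiliar with the draft-then-verify baseline that PEARL improves on, the following is a minimal sketch of one vanilla step with greedy acceptance. It does not implement PEARL's pre-verify or post-verify; `speculative_step`, `draft_next`, `target_next`, and the toy models are hypothetical names for illustration, and a real system would verify all draft positions in a single batched forward pass of the target model.

```python
def speculative_step(prefix, draft_next, target_next, gamma):
    """One vanilla draft-then-verify step with a fixed draft length `gamma`.

    draft_next(ctx) and target_next(ctx) each return the greedy next token
    for a context (hypothetical stand-ins for the two models).
    """
    # Drafting phase: the target model is idle while the draft model guesses.
    drafts, ctx = [], list(prefix)
    for _ in range(gamma):
        tok = draft_next(ctx)
        drafts.append(tok)
        ctx.append(tok)

    # Verification phase: the draft model is idle while the target checks.
    accepted, ctx = [], list(prefix)
    for tok in drafts:
        expected = target_next(ctx)
        if tok != expected:
            accepted.append(expected)  # correct the first mismatch and stop
            return accepted
        accepted.append(tok)
        ctx.append(tok)
    accepted.append(target_next(ctx))  # bonus token when every draft matches
    return accepted

# Toy models over integer tokens (assumed for illustration only).
target = lambda ctx: len(ctx) % 5
good_draft = lambda ctx: len(ctx) % 5
weak_draft = lambda ctx: len(ctx) % 5 if len(ctx) < 3 else 0

print(speculative_step([0], good_draft, target, gamma=4))
print(speculative_step([0], weak_draft, target, gamma=4))
```

The two phases run strictly one after the other, so each model waits for the other, and any tokens drafted after the first mismatch are wasted. These are the costs that the abstract attributes to mutual waiting and a fixed draft length.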

Learning Rule-Induced Subgraph Representations for Inductive Relation Prediction

Aug 09, 2024

Abstract:Inductive relation prediction (IRP) -- where entities can be different during training and inference -- has shown great power for completing evolving knowledge graphs. Existing works mainly focus on using graph neural networks (GNNs) to learn the representation of the subgraph induced from the target link, which can be seen as an implicit rule-mining process to measure the plausibility of the target link. However, these methods cannot differentiate the target link and other links during message passing, hence the final subgraph representation will contain rule information irrelevant to the target link, which reduces the reasoning performance and severely hinders the applications for real-world scenarios. To tackle this problem, we propose a novel single-source edge-wise GNN model to learn the Rule-inducEd Subgraph represenTations (REST), which encodes relevant rules and eliminates irrelevant rules within the subgraph. Specifically, we propose a single-source initialization approach to initialize edge features only for the target link, which guarantees the relevance of the mined rules to the target link. Then we propose several RNN-based functions for edge-wise message passing to model the sequential property of mined rules. REST is a simple and effective approach with theoretical support to learn the rule-induced subgraph representation. Moreover, REST does not need node labeling, which significantly accelerates the subgraph preprocessing time by up to 11.66x. Experiments on inductive relation prediction benchmarks demonstrate the effectiveness of our REST. Our code is available at https://github.com/smart-lty/REST.

Learning Complete Topology-Aware Correlations Between Relations for Inductive Link Prediction

Sep 20, 2023

Abstract:Inductive link prediction -- where entities during training and inference stages can be different -- has shown great potential for completing evolving knowledge graphs in an entity-independent manner. Many popular methods mainly focus on modeling graph-level features, while the edge-level interactions -- especially the semantic correlations between relations -- have been less explored. However, we notice a desirable property of semantic correlations between relations is that they are inherently edge-level and entity-independent. This implies the great potential of the semantic correlations for the entity-independent inductive link prediction task. Inspired by this observation, we propose a novel subgraph-based method, namely TACO, to model Topology-Aware COrrelations between relations that are highly correlated to their topological structures within subgraphs. Specifically, we prove that semantic correlations between any two relations can be categorized into seven topological patterns, and then propose Relational Correlation Network (RCN) to learn the importance of each pattern. To further exploit the potential of RCN, we propose Complete Common Neighbor induced subgraph that can effectively preserve complete topological patterns within the subgraph. Extensive experiments demonstrate that TACO effectively unifies the graph-level information and edge-level interactions to jointly perform reasoning, leading to a superior performance over existing state-of-the-art methods for the inductive link prediction task.
