Qianlei Wang

Fourier Boundary Features Network with Wider Catchers for Glass Segmentation

May 15, 2024

Abstract: Glass blurs the boundary between the real world and its reflection. Its transmittance and reflectance confuse machine vision tasks that depend on semantics. Clearing the boundary created by glass, and avoiding over-captured features that act as false positives in deep structures, therefore matters for constraining the segmentation of reflective surfaces and transmissive glass. We propose the Fourier Boundary Features Network with Wider Catchers (FBWC), which may be the first attempt to use sufficiently wide, horizontal shallow branches without vertical deepening to guide fine-grained segmentation boundaries through primary glass semantic information. Specifically, we design the Wider Coarse-Catchers (WCC) to anchor large-area segmentation and to reduce excessive extraction from a structural perspective. We embed fine-grained features with Cross Transpose Attention (CTA), which is introduced to avoid incomplete areas within the boundary caused by reflection noise. To excavate glass features and balance high-level and low-level context, a learnable Fourier Convolution Controller (FCC) is proposed to regulate information integration robustly. The method has been validated on three public glass segmentation datasets. Experimental results show that it yields better segmentation performance than state-of-the-art (SOTA) methods for glass image segmentation.

AVM-SLAM: Semantic Visual SLAM with Multi-Sensor Fusion in a Bird's Eye View for Automated Valet Parking

Sep 15, 2023

Abstract: Automated Valet Parking (AVP) requires precise localization in challenging garage conditions, including poor lighting, sparse textures, repetitive structures, dynamic scenes, and the absence of Global Positioning System (GPS) signals, which often pose problems for conventional localization methods. To address these adversities, we present AVM-SLAM, a semantic visual SLAM framework with multi-sensor fusion in a Bird's Eye View (BEV). Our framework integrates four fisheye cameras, four wheel encoders, and an Inertial Measurement Unit (IMU). The fisheye cameras form an Around View Monitor (AVM) subsystem, generating BEV images. Convolutional Neural Networks (CNNs) extract semantic features from these images, aiding in mapping and localization tasks. These semantic features provide long-term stability and perspective invariance, effectively mitigating environmental challenges. Additionally, data fusion from wheel encoders and IMU enhances system robustness by improving motion estimation and reducing drift. To validate AVM-SLAM's efficacy and robustness, we provide a large-scale, high-resolution underground garage dataset, available at https://github.com/yale-cv/avm-slam. This dataset enables researchers to further explore and assess AVM-SLAM in similar environments.
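The abstract does not say how the wheel-encoder and IMU data are fused. As a rough illustration only, and not the AVM-SLAM implementation, the sketch below shows one common form of planar dead reckoning: forward speed is taken as the mean of the wheel speeds, and heading change comes from the gyroscope yaw rate. The function name `dead_reckon`, its parameters, and the midpoint integration scheme are all assumptions made for this example.

```python
import math

def dead_reckon(pose, wheel_speeds, yaw_rate, dt):
    """Advance a planar pose (x, y, heading) by one time step.

    wheel_speeds: linear wheel speeds in m/s (hypothetical encoder output)
    yaw_rate:     angular rate in rad/s (hypothetical IMU gyroscope output)
    dt:           step length in seconds
    """
    x, y, theta = pose
    # Assumption: vehicle speed is approximated by the mean wheel speed.
    v = sum(wheel_speeds) / len(wheel_speeds)
    # Use the heading at the middle of the step to reduce integration error.
    theta_mid = theta + 0.5 * yaw_rate * dt
    x += v * dt * math.cos(theta_mid)
    y += v * dt * math.sin(theta_mid)
    theta += yaw_rate * dt
    return (x, y, theta)

# Usage: drive at 1 m/s while turning at 90 degrees per second for one second.
pose = (0.0, 0.0, 0.0)
for _ in range(1000):
    pose = dead_reckon(pose, [1.0, 1.0, 1.0, 1.0], math.pi / 2, 0.001)
print(pose)
```

Dead reckoning of this kind accumulates drift over time, which is why a system would typically correct it with map-based observations such as the semantic features described in the abstract.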

Real-time Streaming Perception System for Autonomous Driving

Jul 30, 2021

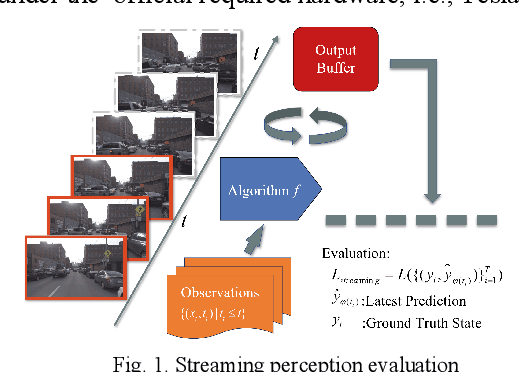

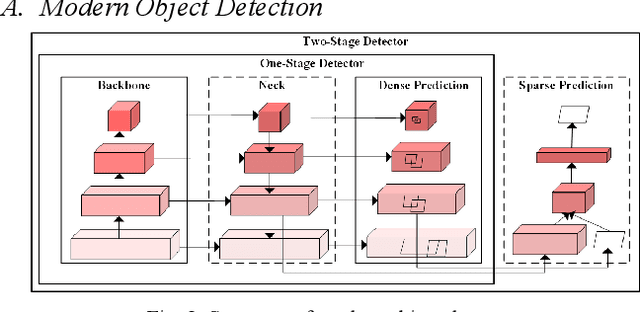

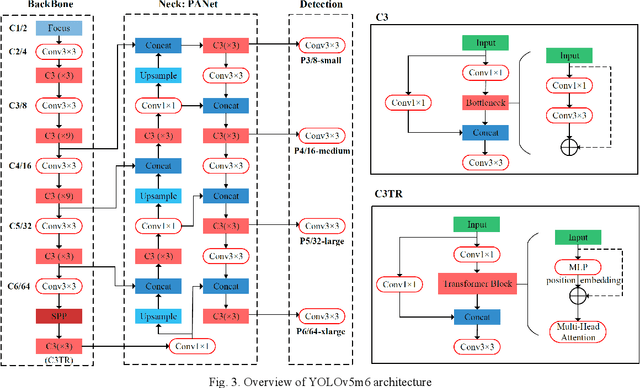

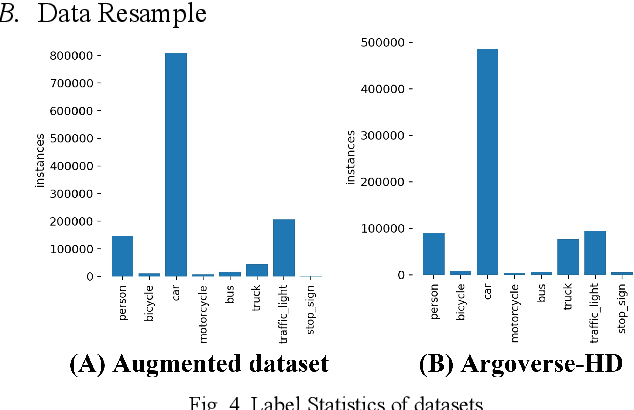

Abstract: Deep learning is now applied to many aspects of autonomous driving with promising results. Object detection in particular is key to improving an autonomous agent's ability to perceive its environment so that it can (re)act. However, previous vision-based object detectors cannot achieve satisfactory performance in real-time driving scenarios. To remedy this, we present a real-time streaming perception system, which is also the 2nd Place solution of the Streaming Perception Challenge (Workshop on Autonomous Driving at CVPR 2021) for the detection-only track. Unlike traditional object detection challenges, which focus mainly on absolute performance, the streaming perception task requires a balance of accuracy and latency, which is crucial for real-time autonomous driving. We adopt YOLOv5 as our basic framework and use data augmentation, Bag-of-Freebies, and Transformer to improve streaming object detection performance with negligible extra inference cost. On the Argoverse-HD test set, our method achieves 33.2 streaming AP (34.6 streaming AP verified by the organizer) under the required hardware. This significantly surpasses the fixed baseline of 13.6 (host team), demonstrating its potential for application.
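To make the accuracy/latency trade-off concrete, the sketch below illustrates the pairing idea behind streaming evaluation: each ground-truth frame is scored against the most recent prediction that had already finished when that frame arrived, so a slow detector is judged on stale outputs. This is a simplified illustration, not the challenge's evaluation toolkit. The function name `match_streaming` and the millisecond timestamps are hypothetical.

```python
from bisect import bisect_right

def match_streaming(frame_times, done_times):
    """For each ground-truth frame time, return the index of the latest
    prediction finished at or before that time, or None if none is ready.

    frame_times: timestamps of ground-truth frames
    done_times:  completion timestamps of predictions, sorted ascending
    """
    matches = []
    for t in frame_times:
        # Number of predictions completed at or before time t.
        k = bisect_right(done_times, t)
        matches.append(k - 1 if k > 0 else None)
    return matches

# Hypothetical timings in milliseconds: frames at roughly 30 FPS,
# and a detector that finishes one output every 50 ms.
frame_times = [0, 33, 67, 100, 133, 167]
done_times = [50, 100, 150]
print(match_streaming(frame_times, done_times))
# → [None, None, 0, 1, 1, 2]
```

In this toy run the first two frames have no prediction available, and later frames are paired with outputs computed from earlier inputs. A faster detector shrinks that lag even if its per-frame accuracy is lower.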
