Real-time Streaming Perception System for Autonomous Driving

Paper and Code

Jul 30, 2021

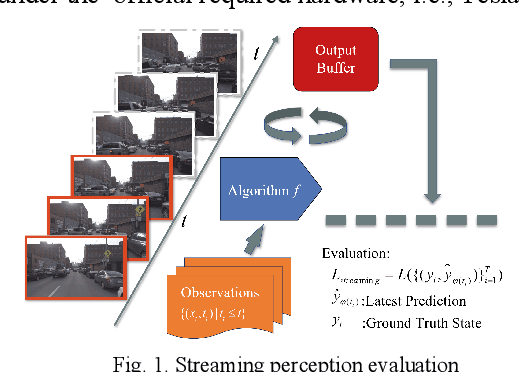

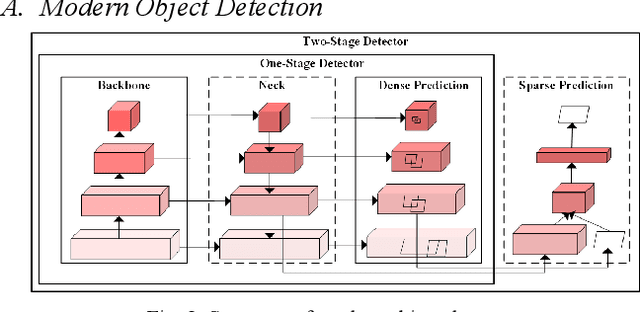

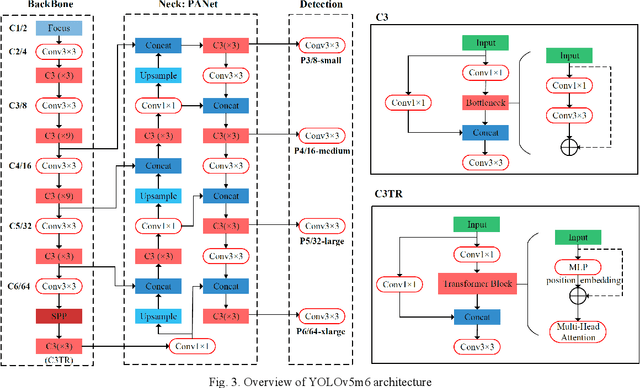

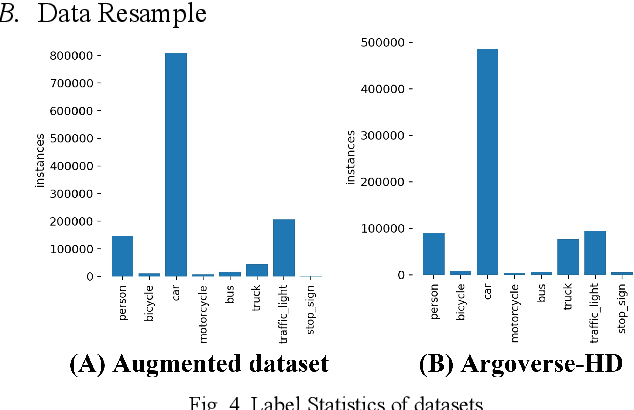

Deep learning is now applied to nearly every aspect of autonomous driving, with promising results. Object detection in particular is key to improving an autonomous agent's ability to perceive its environment so that it can (re)act. However, previous vision-based object detectors do not achieve satisfactory performance in real-time driving scenarios. To remedy this, we present a real-time streaming perception system, which is also the 2nd Place solution of the Streaming Perception Challenge (Workshop on Autonomous Driving at CVPR 2021) for the detection-only track. Unlike traditional object detection challenges, which focus mainly on absolute performance, the streaming perception task requires balancing accuracy and latency, which is crucial for real-time autonomous driving. We adopt YOLOv5 as our basic framework and use data augmentation, Bag-of-Freebies, and Transformer to improve streaming object detection performance with negligible extra inference cost. On the Argoverse-HD test set, our method achieves 33.2 streaming AP (34.6 streaming AP verified by the organizer) under the required hardware. This significantly surpasses the fixed baseline of 13.6 (host team), demonstrating its potential for practical application.
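To illustrate why latency matters alongside accuracy in a streaming setting, the following is a minimal sketch, not the challenge's actual evaluation code. It assumes a single-threaded detector that always picks up the newest available frame when idle, and that each ground-truth frame is scored against the most recent detector output finished by that frame's timestamp. The function names, the millisecond units, and the timing values are hypothetical.

```python
from bisect import bisect_right


def simulate_stream(num_frames, period_ms, latency_ms):
    """Simulate a detector that processes the newest frame whenever it is idle.

    Returns (finish_times, processed): the time each output became available
    and the index of the frame it was computed from.
    """
    finish_times, processed = [], []
    t, last = 0, -1
    while True:
        # Newest frame that has arrived by time t.
        frame = min(t // period_ms, num_frames - 1)
        if frame == last:
            # Detector is faster than the camera: wait for the next frame.
            t = (frame + 1) * period_ms
            continue
        t += latency_ms  # run inference on this frame
        finish_times.append(t)
        processed.append(frame)
        last = frame
        if frame == num_frames - 1:
            break
    return finish_times, processed


def pair_outputs(num_frames, period_ms, latency_ms):
    """Pair each ground-truth frame with the source frame of the latest finished output."""
    finish_times, processed = simulate_stream(num_frames, period_ms, latency_ms)
    pairs = []
    for i in range(num_frames):
        query_time = i * period_ms
        idx = bisect_right(finish_times, query_time) - 1
        pairs.append((i, processed[idx] if idx >= 0 else None))
    return pairs


# Hypothetical timings: frames arrive every 10 ms.
fast = pair_outputs(6, period_ms=10, latency_ms=5)
slow = pair_outputs(6, period_ms=10, latency_ms=15)
```

Under these assumptions, the faster detector is always scored with an output that is one frame old, while the slower one skips frames and is scored with increasingly stale outputs. That staleness is how extra inference cost translates into lost accuracy in this kind of evaluation.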
