Pullarao Maddu

FisheyeMultiNet: Real-time Multi-task Learning Architecture for Surround-view Automated Parking System

Dec 23, 2019

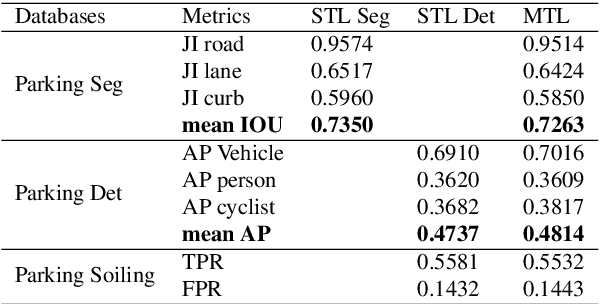

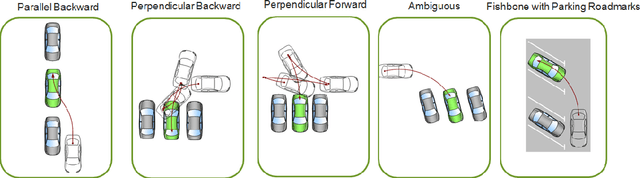

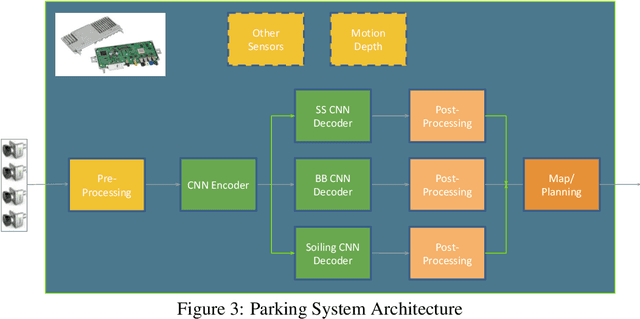

Abstract: Automated parking is a low-speed manoeuvring scenario that is unstructured and complex, requiring full 360° near-field sensing around the vehicle. In this paper, we discuss the design and implementation of an automated parking system from the perspective of camera-based deep learning algorithms. We provide a holistic overview of an industrial system covering the embedded system, use cases and the deep learning architecture. We demonstrate a real-time multi-task deep learning network called FisheyeMultiNet, which detects all the necessary objects for parking on a low-power embedded system. FisheyeMultiNet runs at 15 fps for 4 cameras and has three tasks, namely object detection, semantic segmentation and soiling detection. To encourage further research, we release a partial dataset of 5,000 images containing semantic segmentation and bounding box detection ground truth via the WoodScape project \cite{yogamani2019woodscape}.
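To put the stated throughput in perspective, the following is a rough back-of-the-envelope sketch of the per-frame time budget implied by 15 fps on 4 cameras. It assumes, purely for illustration, that a single processing unit handles the frames from all cameras one after another; the abstract does not say how the work is scheduled, and the function name `per_frame_budget_ms` is hypothetical.

```python
def per_frame_budget_ms(fps_per_camera: float, num_cameras: int) -> float:
    """Return the time available per frame in milliseconds.

    Assumption (not from the abstract): frames from all cameras are
    processed sequentially on one shared processing unit.
    """
    total_frames_per_second = fps_per_camera * num_cameras
    return 1000.0 / total_frames_per_second


# 15 fps on each of 4 cameras means 60 frames per second in total.
budget = per_frame_budget_ms(fps_per_camera=15, num_cameras=4)
print(f"{budget:.1f} ms per frame")  # prints "16.7 ms per frame"
```

Under this assumption, all three tasks (object detection, semantic segmentation and soiling detection) would have to complete in roughly 16.7 ms per frame, which suggests why sharing computation across tasks matters on a low-power embedded system.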

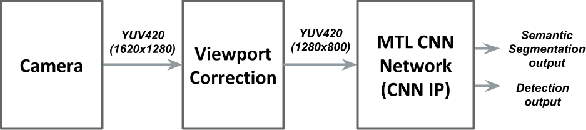

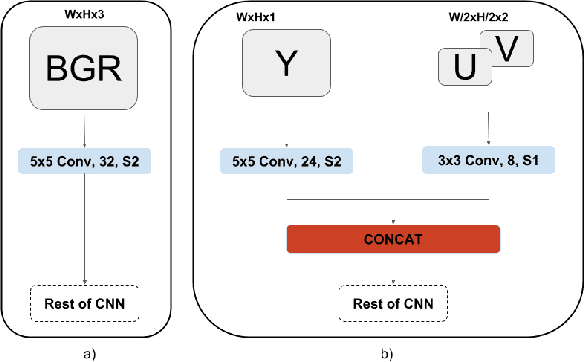

YUVMultiNet: Real-time YUV multi-task CNN for autonomous driving

Apr 11, 2019

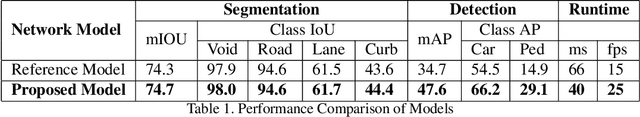

Abstract: In this paper, we propose a multi-task convolutional neural network (CNN) architecture optimized for a low-power automotive-grade SoC. We introduce a network based on a unified architecture where the encoder is shared between the two tasks, namely detection and segmentation. The proposed network runs at 25 FPS for 1280x800 resolution. We briefly discuss the methods used to optimize the network architecture, such as using the native YUV image directly, optimizing layers and feature maps, and applying quantization. We also focus on memory bandwidth in our design, as convolutions are data intensive and most SoCs are bandwidth bottlenecked. We then demonstrate the efficiency of our proposed network on a dedicated CNN accelerator, presenting the key performance indicators (KPIs) for the detection and segmentation tasks obtained from hardware execution, along with the corresponding run-time.
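As an illustration of why feeding the native YUV image can help with memory bandwidth, the sketch below compares the raw input bandwidth at 1280x800 and 25 FPS for two pixel layouts. The abstract does not state which YUV format is used; the 4:2:0 subsampling (1.5 bytes per pixel) and the 8-bit RGB baseline (3 bytes per pixel) are assumptions made here for the arithmetic, and the names `BYTES_PER_PIXEL` and `input_bandwidth_mb_s` are hypothetical.

```python
# Average bytes per pixel for two common 8-bit layouts.
# Assumption: the camera delivers YUV 4:2:0; the abstract only says "native YUV".
BYTES_PER_PIXEL = {
    "rgb888": 3.0,  # one byte each for R, G, B
    "yuv420": 1.5,  # full-resolution Y plus U and V subsampled 2x2
}


def input_bandwidth_mb_s(width: int, height: int, fps: float, fmt: str) -> float:
    """Return the raw input image bandwidth in megabytes per second (1 MB = 1e6 bytes)."""
    bytes_per_frame = width * height * BYTES_PER_PIXEL[fmt]
    return bytes_per_frame * fps / 1e6


for fmt in ("rgb888", "yuv420"):
    mb_s = input_bandwidth_mb_s(1280, 800, 25, fmt)
    print(f"{fmt}: {mb_s:.1f} MB/s")
# rgb888: 76.8 MB/s
# yuv420: 38.4 MB/s
```

Under these assumptions the input read alone is halved, and a conversion step from YUV to RGB, with its own memory traffic, is avoided. This covers only the input image; feature-map traffic inside the network is not modelled here.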
