Przemysław Rokita

ExpertSim: Fast Particle Detector Simulation Using Mixture-of-Generative-Experts

Aug 28, 2025

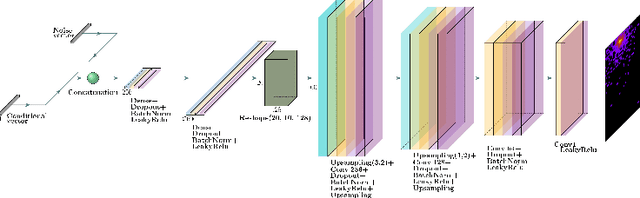

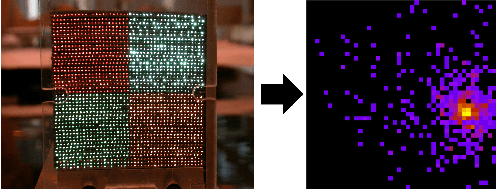

Abstract: Simulating detector responses is a crucial part of understanding the inner workings of particle collisions in the Large Hadron Collider at CERN. Such simulations are currently performed with statistical Monte Carlo methods, which are computationally expensive and put a significant strain on CERN's computational grid. Therefore, recent proposals advocate for generative machine learning methods to enable more efficient simulations. However, the distribution of the data varies significantly across the simulations, which is hard to capture with out-of-the-box methods. In this study, we present ExpertSim, a deep learning simulation approach tailored for the Zero Degree Calorimeter in the ALICE experiment. Our method utilizes a Mixture-of-Generative-Experts architecture, where each expert specializes in simulating a different subset of the data. This allows for a more precise and efficient generation process, as each expert focuses on a specific aspect of the calorimeter response. ExpertSim not only improves accuracy but also provides a significant speedup compared to traditional Monte Carlo methods, offering a promising solution for high-efficiency detector simulations in particle physics experiments at CERN. We make the code available at https://github.com/patrick-bedkowski/expertsim-mix-of-generative-experts.
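As a rough illustration of the idea that each expert handles a different subset of the data, the sketch below routes a conditioning value to one of several stand-in generators. The abstract does not describe how ExpertSim routes samples or what the experts look like, so everything here is an assumption made for illustration: the fixed `thresholds`, the scalar `energy` input, and the Gaussian samplers produced by `make_expert` are hypothetical and are not taken from the paper or its repository.

```python
import random


def make_expert(mean, spread):
    """Build a stand-in 'expert': a sampler for one scalar response value.

    A real generative expert would be a neural network; a Gaussian sampler
    is used here only to keep the sketch self-contained.
    """
    def expert(rng):
        return rng.gauss(mean, spread)
    return expert


def route(energy, thresholds):
    """Return the index of the first bin whose upper bound exceeds energy.

    Values at or above the last threshold go to the final expert.
    """
    for index, upper in enumerate(thresholds):
        if energy < upper:
            return index
    return len(thresholds)


# Hypothetical setup: three experts covering low, medium and high responses.
experts = [make_expert(1.0, 0.1), make_expert(10.0, 1.0), make_expert(100.0, 5.0)]
thresholds = [50.0, 500.0]  # assumed bin edges, not from the paper


def simulate(energy, rng):
    """Pick one expert for this input (hard routing) and sample from it."""
    return experts[route(energy, thresholds)](rng)


rng = random.Random(0)
sample = simulate(120.0, rng)  # handled by the middle expert
```

Hard routing means only one expert runs per input, so the generation cost stays close to that of a single model regardless of how many experts exist.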

Deep Generative Models for Proton Zero Degree Calorimeter Simulations in ALICE, CERN

Jun 05, 2024

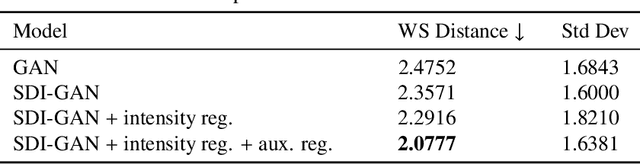

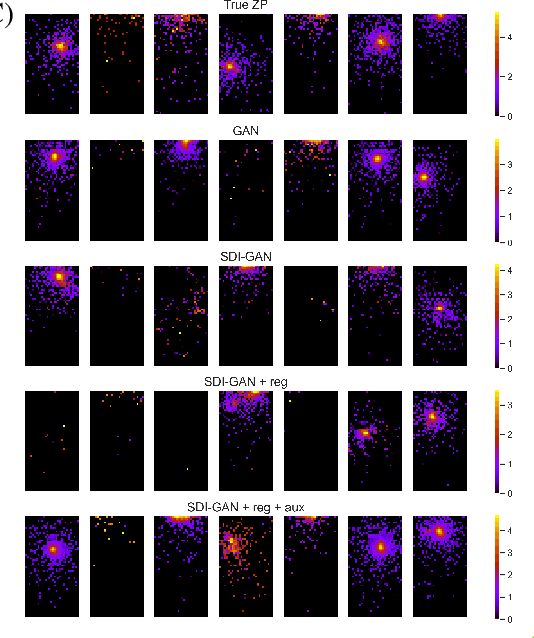

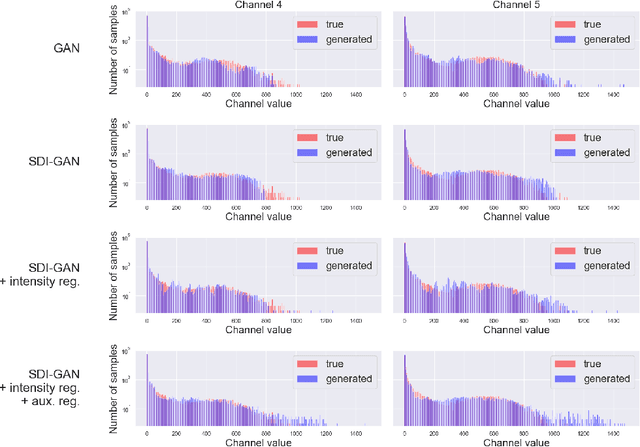

Abstract: Simulating detector responses is a crucial part of understanding the inner workings of particle collisions in the Large Hadron Collider at CERN. The current reliance on statistical Monte Carlo simulations strains CERN's computational grid, underscoring the urgency for more efficient alternatives. Addressing these challenges, recent proposals advocate for generative machine learning methods. In this study, we present an innovative deep learning simulation approach tailored for the proton Zero Degree Calorimeter in the ALICE experiment. Leveraging a Generative Adversarial Network model with Selective Diversity Increase (SDI) loss, we directly simulate calorimeter responses. To enhance its capabilities in modeling a broad range of calorimeter response intensities, we expand the SDI-GAN architecture with additional regularization. Moreover, to improve the spatial fidelity of the generated data, we introduce an auxiliary regressor network. Our method offers a significant speedup compared to traditional Monte Carlo-based approaches.

Generative Diffusion Models for Fast Simulations of Particle Collisions at CERN

Jun 05, 2024

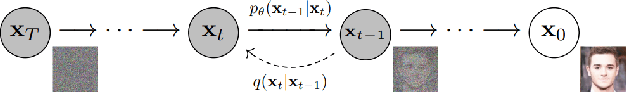

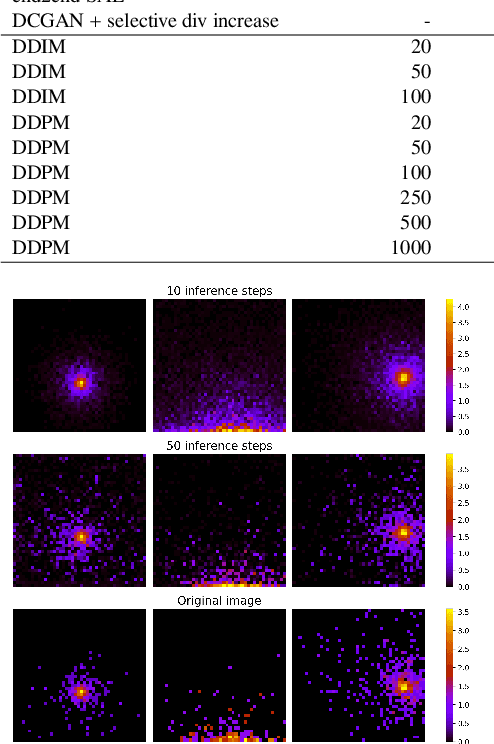

Abstract: In High Energy Physics, simulations play a crucial role in unraveling the complexities of particle collision experiments within CERN's Large Hadron Collider. Machine learning simulation methods have garnered attention as promising alternatives to traditional approaches. While existing methods mainly employ Variational Autoencoders (VAEs) or Generative Adversarial Networks (GANs), recent advancements highlight the efficacy of diffusion models as state-of-the-art generative machine learning methods. We present the first simulation of the Zero Degree Calorimeter (ZDC) at the ALICE experiment based on diffusion models, achieving the highest fidelity compared to existing baselines. We perform an analysis of trade-offs between generation times and simulation quality. The results indicate significant potential of the latent diffusion model due to its rapid generation time.

Particle physics DL-simulation with control over generated data properties

May 22, 2024

Abstract: The development of collision simulations at the Large Hadron Collider at CERN has sparked research into innovative methods that reduce costs and shorten simulation time, going beyond conventional approaches based on Monte Carlo methods. Deep learning generative methods, including VAEs, GANs and diffusion models, have been used for this purpose. Although they are much faster and simpler than standard approaches, they do not always maintain high fidelity of the simulated data. This work aims to mitigate this issue by introducing a mechanism of control over the generated data properties, providing an alternative to currently employed algorithms. To achieve this, we extend the recently introduced CorrVAE, which enables user-defined parameter manipulation of the generated output. We adapt the model to the problem of particle physics simulation. The proposed solution achieved promising results, demonstrating control over the parameters of the generated output and constituting an alternative for simulating the ZDC calorimeter in the ALICE experiment at CERN.

Machine Learning methods for simulating particle response in the Zero Degree Calorimeter at the ALICE experiment, CERN

Jun 23, 2023

Abstract: Currently, over half of the computing power at CERN GRID is used to run High Energy Physics simulations. The recent updates at the Large Hadron Collider (LHC) create the need for developing more efficient simulation methods. In particular, there is a demand for a fast simulation of the neutron Zero Degree Calorimeter, where existing Monte Carlo-based methods impose a significant computational burden. We propose an alternative approach to the problem that leverages machine learning. Our solution utilises neural network classifiers and generative models to directly simulate the response of the calorimeter. In particular, we examine the performance of variational autoencoders and generative adversarial networks, expanding the GAN architecture with an additional regularisation network and a simple yet effective postprocessing step. Our approach increases the simulation speed by two orders of magnitude while maintaining the high fidelity of the simulation.

Towards More Realistic Membership Inference Attacks on Large Diffusion Models

Jun 22, 2023

Abstract: Generative diffusion models, including Stable Diffusion and Midjourney, can generate visually appealing, diverse, and high-resolution images for various applications. These models are trained on billions of internet-sourced images, raising significant concerns about the potential unauthorized use of copyright-protected images. In this paper, we examine whether it is possible to determine if a specific image was used in the training set, a problem known in the cybersecurity community as a membership inference attack. Our focus is on Stable Diffusion, and we address the challenge of designing a fair evaluation framework to answer this membership question. We propose a methodology to establish a fair evaluation setup and apply it to Stable Diffusion, enabling potential extensions to other generative models. Utilizing this evaluation setup, we execute membership attacks (both known and newly introduced). Our research reveals that previously proposed evaluation setups do not provide a full understanding of the effectiveness of membership inference attacks. We conclude that the membership inference attack remains a significant challenge for large diffusion models (often deployed as black-box systems), indicating that related privacy and copyright issues will persist in the foreseeable future.

Selectively increasing the diversity of GAN-generated samples

Jul 07, 2022

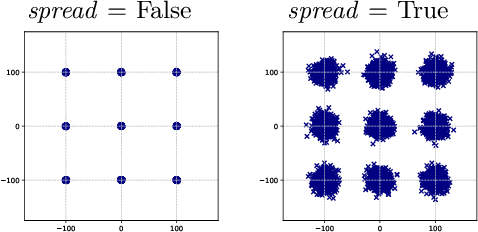

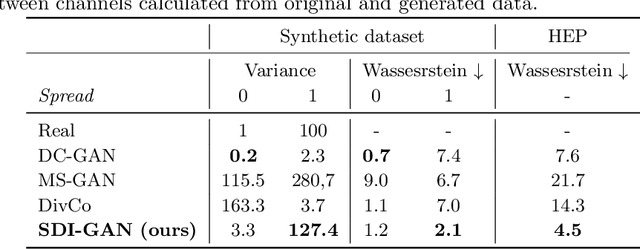

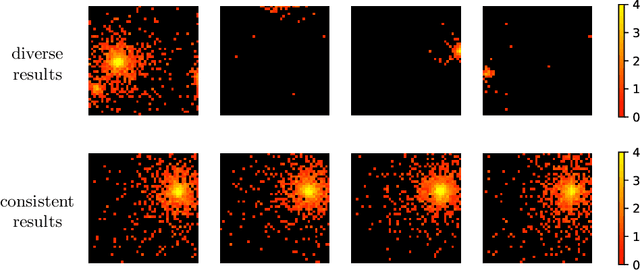

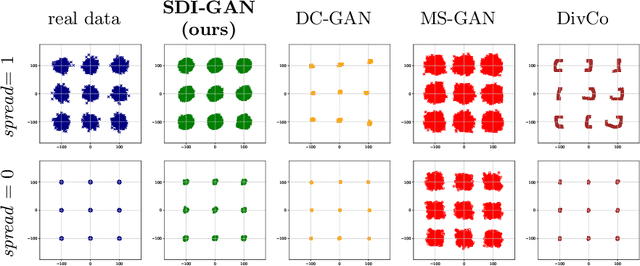

Abstract: Generative Adversarial Networks (GANs) are powerful models able to synthesize data samples closely resembling the distribution of real data, yet the diversity of those generated samples is limited due to the so-called mode collapse phenomenon observed in GANs. Conditional GANs are especially prone to mode collapse, as they tend to ignore the input noise vector and focus on the conditional information. Recent methods proposed to mitigate this limitation increase the diversity of generated samples, yet they reduce the performance of the models when similarity of samples is required. To address this shortcoming, we propose a novel method to selectively increase the diversity of GAN-generated samples. By adding a simple yet effective regularization to the training loss function, we encourage the generator to discover new data modes for inputs related to diverse outputs while generating consistent samples for the remaining ones. More precisely, we maximise the ratio of distances between generated images and input latent vectors, scaling the effect according to the diversity of samples for a given conditional input. We show the superiority of our method in a synthetic benchmark as well as a real-life scenario of simulating data from the Zero Degree Calorimeter of the ALICE experiment at the LHC, CERN.
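The regularization described in the abstract can be sketched as a penalty term for one pair of samples generated from the same conditional input. This is a minimal illustration under stated assumptions, not the paper's exact loss: the mean absolute distance, the `eps` stabiliser, and the convention that `diversity_weight` lies in [0, 1] are all choices made here, and the function names are hypothetical.

```python
def mean_abs_distance(a, b):
    """Mean absolute difference between two equal-length flat vectors."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)


def diversity_penalty(img1, img2, z1, z2, diversity_weight, eps=1e-8):
    """Penalty for one pair of samples sharing a conditional input.

    The ratio grows when different latent vectors lead to different images.
    Returning its negative means that minimising the total loss maximises
    the ratio. The weight scales the effect: near 0 for conditions whose
    real samples are consistent, near 1 for conditions with diverse samples.
    """
    ratio = mean_abs_distance(img1, img2) / (mean_abs_distance(z1, z2) + eps)
    return -diversity_weight * ratio


# Two flattened 'images' generated from two latent vectors.
img_a, img_b = [0.0, 0.0, 0.0, 0.0], [1.0, 1.0, 1.0, 1.0]
z_a, z_b = [0.0, 0.0], [2.0, 2.0]

diverse = diversity_penalty(img_a, img_b, z_a, z_b, diversity_weight=0.5)
consistent = diversity_penalty(img_a, img_b, z_a, z_b, diversity_weight=0.0)
```

With a weight of zero the term vanishes, so the generator is not pushed towards diversity for conditions where similar outputs are required.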

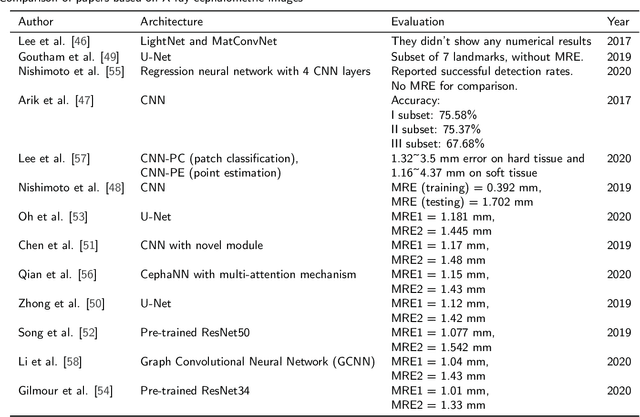

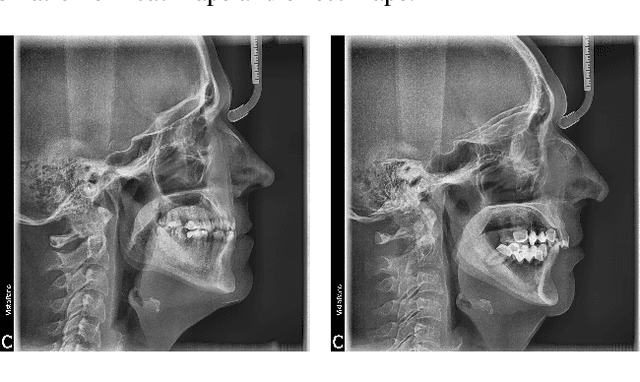

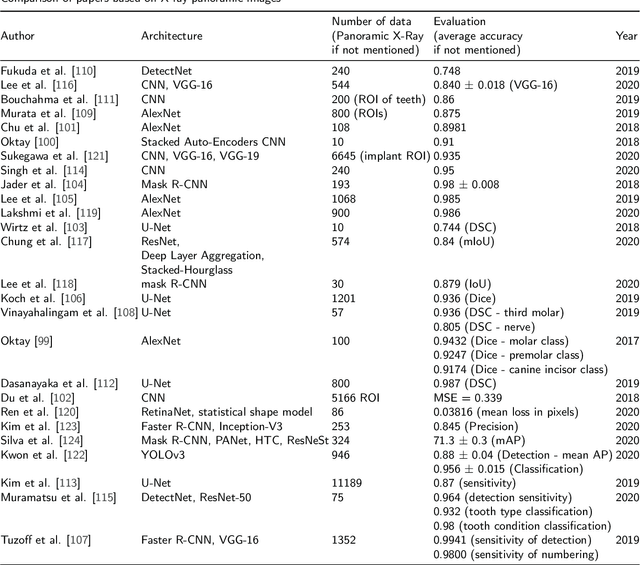

Convolutional Neural Networks in Orthodontics: a review

Apr 18, 2021

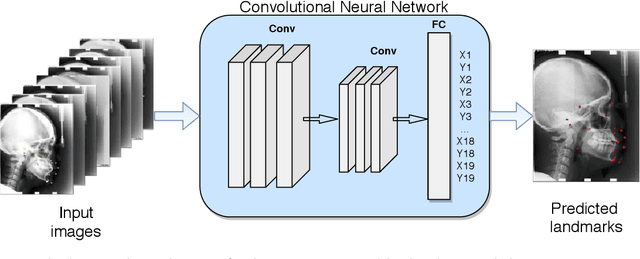

Abstract: Convolutional neural networks (CNNs) are used in many areas of computer vision, such as object tracking and recognition, security, military, and biomedical image analysis. This review presents the application of convolutional neural networks in one of the fields of dentistry: orthodontics. Advances in medical imaging technologies and methods allow CNNs to be used in orthodontics to shorten the planning time of orthodontic treatment, including an automatic search of landmarks on cephalometric X-ray images, tooth segmentation on Cone-Beam Computed Tomography (CBCT) images or digital models, and classification of defects on panoramic X-ray images. In this work, we describe the current methods, the architectures of deep convolutional neural networks used, and their implementations, together with a comparison of the results achieved by them. The promising results and visualizations of the described studies show that the use of methods based on convolutional neural networks allows for the improvement of computer-based orthodontic treatment planning, both by reducing the examination time and, in many cases, by performing the analysis much more accurately than an orthodontist does manually.

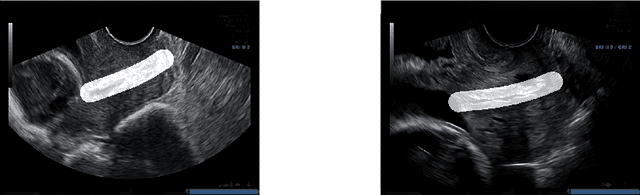

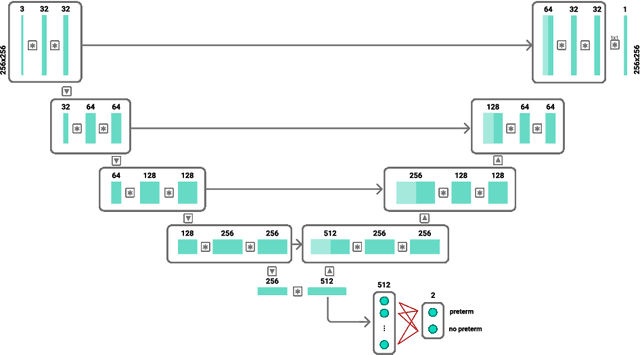

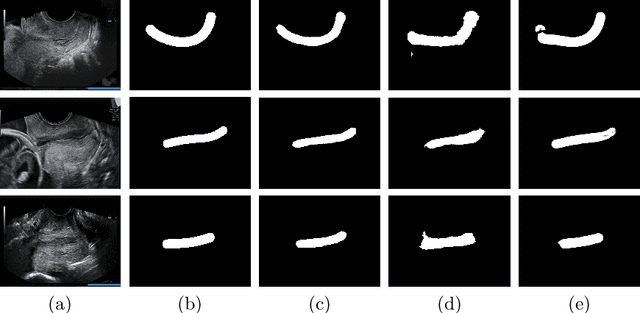

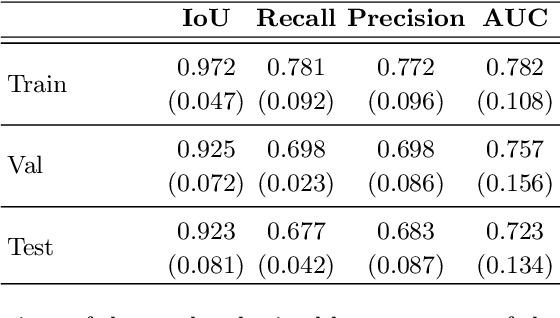

Spontaneous preterm birth prediction using convolutional neural networks

Aug 21, 2020

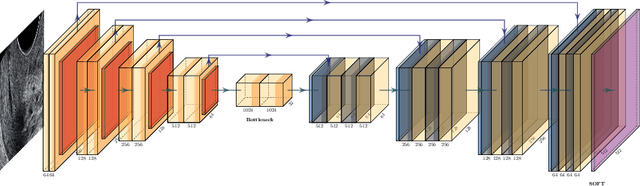

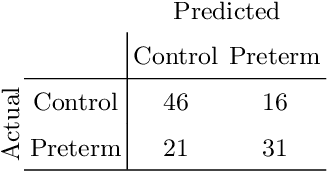

Abstract: An estimated 15 million babies are born too early every year. Approximately 1 million children die each year due to complications of preterm birth (PTB). Many survivors face a lifetime of disability, including learning disabilities and visual and hearing problems. Although manual analysis of ultrasound images (US) is still prevalent, it is prone to errors due to its subjective component and complex variations in the shape and position of organs across patients. In this work, we introduce a conceptually simple convolutional neural network (CNN) trained to segment prenatal ultrasound images and to perform a classification task for the purpose of preterm birth detection. Our method efficiently segments different types of cervixes in transvaginal ultrasound images while simultaneously predicting a preterm birth based on extracted image features without human oversight. We employed three popular network models for the cervix segmentation task: U-Net, Fully Convolutional Network, and Deeplabv3. Based on the obtained results and model efficiency, we decided to extend U-Net by adding a parallel branch for the classification task. The proposed model was trained and evaluated on a dataset consisting of 354 2D transvaginal ultrasound images and achieved a segmentation accuracy with a mean Jaccard coefficient index of 0.923 $\pm$ 0.081 and a classification sensitivity of 0.677 $\pm$ 0.042 with a 3.49% false positive rate. Our method obtained better results in the prediction of preterm birth based on transvaginal ultrasound images compared to state-of-the-art methods.
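For readers unfamiliar with the segmentation metric quoted above, the Jaccard index of two binary masks is the size of their intersection divided by the size of their union. The sketch below computes it for small hand-made masks; the function name and the choice to score two empty masks as 1.0 are assumptions made here, not details from the paper.

```python
def jaccard_index(pred, target):
    """Jaccard index (intersection over union) of two binary masks.

    Masks are equally sized 2D lists of 0/1 values.
    """
    same_rows = len(pred) == len(target)
    if not same_rows or any(len(p) != len(t) for p, t in zip(pred, target)):
        raise ValueError("masks must have the same shape")
    intersection = 0
    union = 0
    for row_p, row_t in zip(pred, target):
        for p, t in zip(row_p, row_t):
            intersection += 1 if (p and t) else 0
            union += 1 if (p or t) else 0
    # Two empty masks agree perfectly; score them as 1.0 by convention.
    return 1.0 if union == 0 else intersection / union


predicted = [
    [0, 1, 1],
    [0, 1, 0],
]
reference = [
    [0, 1, 0],
    [0, 1, 1],
]
score = jaccard_index(predicted, reference)  # 2 shared pixels / 4 in union = 0.5
```

A reported mean such as 0.923 would be this score averaged over the evaluated images.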

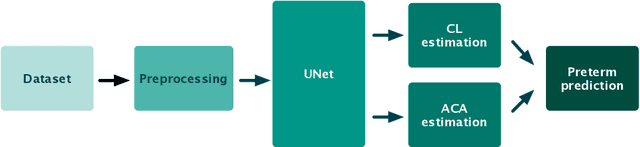

Estimation of preterm birth markers with U-Net segmentation network

Aug 24, 2019

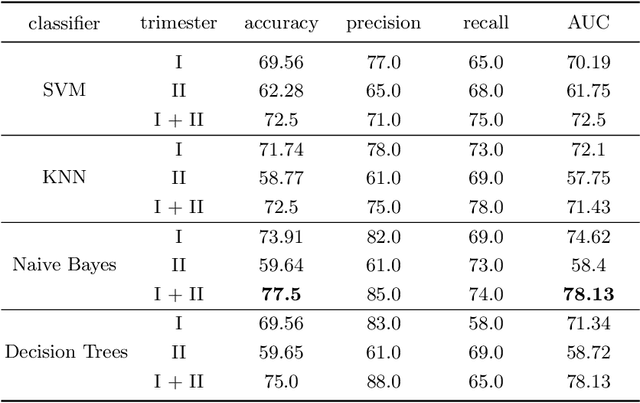

Abstract: Preterm birth is the most common cause of neonatal death. Current diagnostic methods that assess the risk of preterm birth involve the collection of maternal characteristics and transvaginal ultrasound imaging conducted in the first and second trimester of pregnancy. Analysis of the ultrasound data is based on visual inspection of images by a gynaecologist, sometimes supported by hand-designed image features such as cervical length. Due to the complexity of this process and its subjective component, approximately 30% of spontaneous preterm deliveries are not correctly predicted. Moreover, 10% of the predicted preterm deliveries are false positives. In this paper, we address the problem of predicting spontaneous preterm delivery using machine learning. To achieve this goal, we propose to first use a deep neural network architecture for segmenting prenatal ultrasound images and then automatically extract two biophysical ultrasound markers, cervical length (CL) and anterior cervical angle (ACA), from the resulting images. Our method makes it possible to estimate ultrasound markers without human oversight. Furthermore, we show that CL and ACA markers, when combined, allow us to decrease the false-negative ratio from 30% to 18%. Finally, contrary to current diagnostic methods that rely only on the gynaecologist's expertise, our method introduces objectively obtained results.
