Priyabrata Saha

Online Relational Inference for Evolving Multi-agent Interacting Systems

Nov 03, 2024

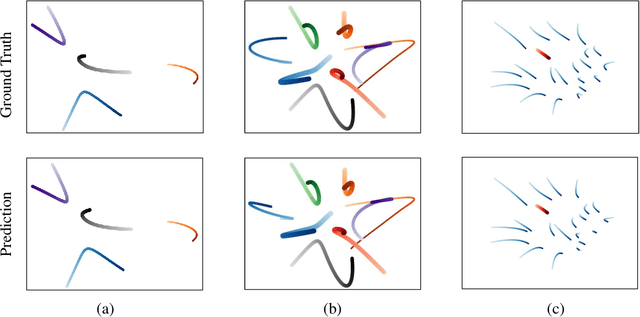

Abstract: We introduce Online Relational Inference (ORI), a framework designed to efficiently identify hidden interaction graphs in evolving multi-agent interacting systems using streaming data. Unlike traditional offline methods that rely on a fixed training set, ORI employs online backpropagation, updating the model with each new data point so that it can adapt to changing environments in real time. A key innovation is the use of the adjacency matrix as a trainable parameter, optimized through a new adaptive learning rate technique called AdaRelation, which adjusts the learning rate based on the historical sensitivity of the decoder to changes in the interaction graph. Additionally, a data augmentation method named Trajectory Mirror (TM) is introduced to improve generalization by exposing the model to varied trajectory patterns. Experimental results on both synthetic datasets and real-world data (CMU MoCap for human motion) demonstrate that ORI significantly improves the accuracy and adaptability of relational inference in dynamic settings compared to existing methods. The approach is model-agnostic, enabling integration with various neural relational inference (NRI) architectures, and offers a robust solution for real-time applications in complex, evolving systems.

Bridging Autoencoders and Dynamic Mode Decomposition for Reduced-order Modeling and Control of PDEs

Sep 09, 2024

Abstract: Modeling and controlling complex spatiotemporal dynamical systems driven by partial differential equations (PDEs) often necessitate dimensionality reduction techniques to construct lower-order models for computational efficiency. This paper explores a deep autoencoding learning method for reduced-order modeling and control of dynamical systems governed by spatiotemporal PDEs. We first show analytically that an optimization objective for learning a linear autoencoding reduced-order model can be formulated to yield a solution closely resembling the result of the dynamic mode decomposition with control algorithm. We then extend this linear autoencoding architecture to a deep autoencoding framework, enabling the development of a nonlinear reduced-order model. Furthermore, we leverage the learned reduced-order model to design controllers using stability-constrained deep neural networks. Numerical experiments on a reaction-diffusion system validate the efficacy of our approach in both modeling and control.
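For readers unfamiliar with dynamic mode decomposition with control, the following is a minimal sketch of its basic least-squares form, not the paper's autoencoder method. It assumes a discrete-time linear model x_{k+1} ≈ A x_k + B u_k and fits A and B from snapshot matrices using a pseudoinverse. The function name `dmdc_fit` and the small synthetic system are hypothetical and chosen only for illustration.

```python
import numpy as np

def dmdc_fit(X, X_next, U):
    """Least-squares fit of x_{k+1} ~ A x_k + B u_k.

    X, X_next: (n, m) state snapshots; U: (p, m) control inputs.
    Returns the estimated (A, B).
    """
    n = X.shape[0]
    Omega = np.vstack([X, U])            # stacked states and inputs, (n + p, m)
    G = X_next @ np.linalg.pinv(Omega)   # G = [A B], shape (n, n + p)
    return G[:, :n], G[:, n:]

# Hypothetical 2-state, 1-input linear system used to generate snapshots.
rng = np.random.default_rng(0)
A_true = np.array([[0.9, 0.1], [0.0, 0.8]])
B_true = np.array([[0.0], [1.0]])

m = 50                                   # number of snapshot pairs
U = rng.standard_normal((1, m))          # random control inputs
X = np.zeros((2, m + 1))
X[:, 0] = [1.0, -1.0]
for k in range(m):
    X[:, k + 1] = A_true @ X[:, k] + B_true @ U[:, k]

A_hat, B_hat = dmdc_fit(X[:, :-1], X[:, 1:], U)
```

In practice the pseudoinverse is usually computed through a truncated SVD to obtain a reduced-order model; this sketch omits the truncation for brevity.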

RADNet: A Deep Neural Network Model for Robust Perception in Moving Autonomous Systems

Apr 30, 2022

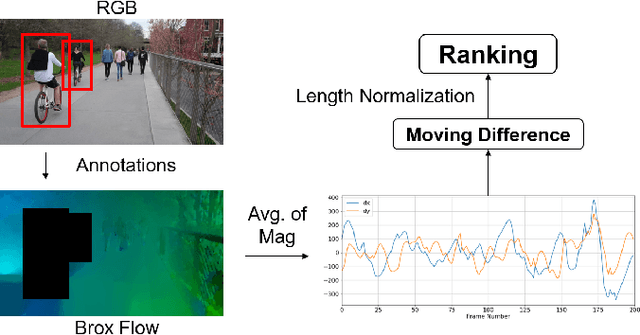

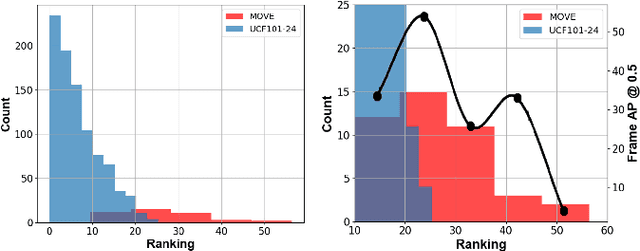

Abstract: Interactive autonomous applications require the perception engine to be robust to artifacts in unconstrained videos. In this paper, we examine the effect of camera motion on the task of action detection. We develop a novel method to rank videos by their degree of global camera motion, and show that action detection accuracy decreases for videos ranked high in camera motion. We propose an action detection pipeline that is robust to the camera motion effect and verify it empirically. Specifically, we align actor features across frames and couple global scene features with local actor-specific features. We perform feature alignment using a novel formulation of the Spatio-temporal Sampling Network (STSN) with multi-scale offset prediction and refinement using a pyramid structure. We also propose a novel input-dependent weighted averaging strategy for fusing local and global features. We show the applicability of our network on our dataset of moving camera videos with high camera motion (MOVE dataset), with a 4.1% increase in frame mAP and a 17% increase in video mAP.

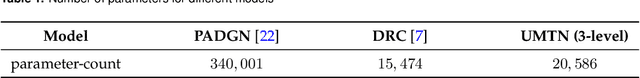

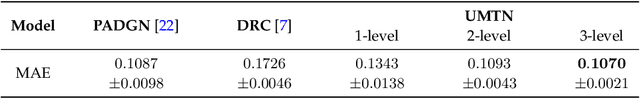

Unraveled Multilevel Transformation Networks for Predicting Sparsely-Observed Spatiotemporal Dynamics

Mar 16, 2022

Abstract: In this paper, we address the problem of predicting complex, nonlinear spatiotemporal dynamics when available data is recorded at irregularly-spaced sparse spatial locations. Most existing deep learning models for spatiotemporal dynamics are either designed for data on a regular grid or struggle to uncover the spatial relations from sparse and irregularly-spaced data sites. We propose a deep learning model that learns to predict unknown spatiotemporal dynamics using data from sparsely-distributed data sites. We base our approach on the Radial Basis Function (RBF) collocation method, which is often used for meshfree solution of partial differential equations (PDEs). The RBF framework allows us to unravel the observed spatiotemporal function and learn the spatial interactions among data sites in the RBF space. The learned spatial features are then used to compose multilevel transformations of the raw observations and predict their evolution in future time steps. We demonstrate the advantage of our approach using both synthetic and real-world climate data.
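As background on the RBF framework mentioned above, here is a minimal sketch of plain Gaussian RBF interpolation on scattered 2D sites. It is not the paper's network; it only illustrates how a function observed at irregular locations can be represented by weights on radial basis functions centered at those locations. The shape parameter `eps`, the random sites, and the test function are all assumptions made for this example.

```python
import numpy as np

def gaussian_rbf_matrix(points, centers, eps):
    """Matrix of exp(-(eps * r)^2) for every point/center pair."""
    d2 = ((points[:, None, :] - centers[None, :, :]) ** 2).sum(axis=-1)
    return np.exp(-(eps ** 2) * d2)

# Hypothetical sparse, irregular observation sites in the unit square.
rng = np.random.default_rng(1)
sites = rng.uniform(0.0, 1.0, size=(30, 2))
values = np.sin(2 * np.pi * sites[:, 0]) * np.cos(2 * np.pi * sites[:, 1])

eps = 10.0                                    # assumed shape parameter
Phi = gaussian_rbf_matrix(sites, sites, eps)  # collocation matrix at the sites
weights = np.linalg.solve(Phi, values)        # RBF coefficients

def interpolate(query):
    """Evaluate the RBF interpolant at query points of shape (q, 2)."""
    return gaussian_rbf_matrix(query, sites, eps) @ weights

recon = interpolate(sites)                    # matches the data at the sites
center_value = interpolate(np.array([[0.5, 0.5]]))
```

The choice of `eps` trades accuracy against conditioning of the collocation matrix: smaller values give smoother interpolants but a harder linear solve.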

A Deep Learning-based Collocation Method for Modeling Unknown PDEs from Sparse Observation

Nov 30, 2020

Abstract: Deep learning-based modeling of dynamical systems driven by partial differential equations (PDEs) has become quite popular in recent years. However, most existing deep learning-based methods assume a strong physics prior, depend on specific initial and boundary conditions, or require data on a dense regular grid, making them unsuitable for modeling unknown PDEs from sparsely-observed data. This paper presents a deep learning-based collocation method for modeling dynamical systems driven by unknown PDEs when data sites are sparsely distributed. The proposed method is independent of spatial dimension, geometrically flexible, and learns from sparsely-available data, and the learned model does not depend on any specific initial or boundary conditions. We demonstrate our method on forecasting tasks for the two-dimensional wave equation and the Burgers-Fisher equation in multiple geometries with different boundary conditions.

Neural Identification for Control

Oct 20, 2020

Abstract: We present a new method for learning a control law that stabilizes an unknown nonlinear dynamical system at an equilibrium point. We formulate a system identification task in a self-supervised learning setting that jointly learns a controller and a corresponding stable closed-loop dynamics hypothesis. The input-output behavior of the unknown dynamical system under random control inputs is used as the supervising signal to train the neural network-based system model and the controller. The method relies on Lyapunov stability theory to generate a stable closed-loop dynamics hypothesis and the corresponding control law. We demonstrate our method on various nonlinear control problems such as n-link pendulum balancing, pendulum-on-cart balancing, and wheeled vehicle path following.

PhICNet: Physics-Incorporated Convolutional Recurrent Neural Networks for Modeling Dynamical Systems

Apr 14, 2020

Abstract: Dynamical systems involving partial differential equations (PDEs) and ordinary differential equations (ODEs) arise in many fields of science and engineering. In this paper, we present a physics-incorporated deep learning framework to model and predict the spatiotemporal evolution of dynamical systems governed by partially-known inhomogeneous PDEs with unobservable source dynamics. We formulate our model, PhICNet, as a convolutional recurrent neural network that is end-to-end trainable for spatiotemporal evolution prediction of dynamical systems. Experimental results show the long-term prediction capability of our model.

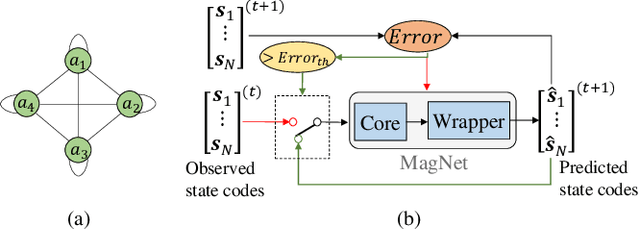

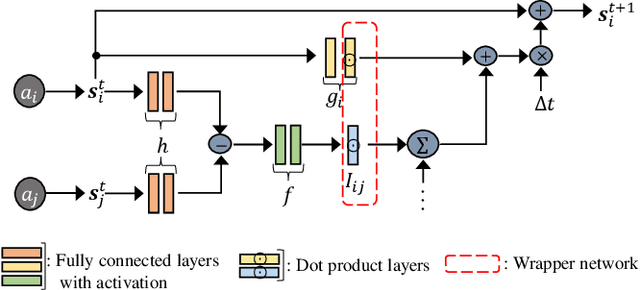

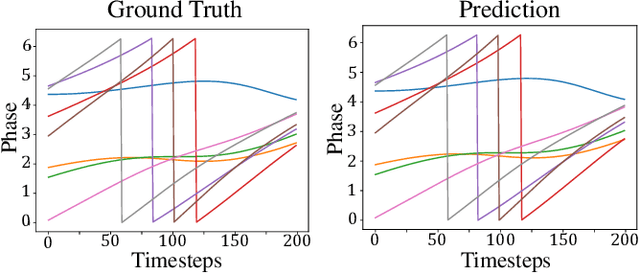

MagNet: Discovering Multi-agent Interaction Dynamics using Neural Network

Mar 03, 2020

Abstract: We present MagNet, a neural network-based multi-agent interaction model that discovers the governing dynamics and predicts the evolution of a complex multi-agent system from observations. We formulate a multi-agent system as a coupled nonlinear network with a generic ordinary differential equation (ODE) based state evolution, and develop a neural network-based realization of its time-discretized model. MagNet is trained to discover the core dynamics of a multi-agent system from observations, and is tuned online to learn agent-specific parameters of the dynamics to ensure accurate prediction even when physical or relational attributes of agents, or the number of agents, change. We evaluate MagNet on a point-mass system in two-dimensional space, Kuramoto phase synchronization dynamics, and predator-swarm interaction dynamics, demonstrating orders of magnitude improvement in prediction accuracy over traditional deep learning models.
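To make the Kuramoto benchmark mentioned above concrete, the sketch below simulates the standard all-to-all Kuramoto model with a forward-Euler time discretization. It is not MagNet; it only shows the kind of coupled ODE system being modeled. The coupling strength `K`, step size `dt`, initial phases, and identical natural frequencies are assumptions chosen so that the oscillators synchronize.

```python
import numpy as np

def kuramoto_step(theta, omega, K, dt):
    """One forward-Euler step of d(theta_i)/dt = omega_i + (K/N) * sum_j sin(theta_j - theta_i)."""
    diff = theta[None, :] - theta[:, None]          # diff[i, j] = theta_j - theta_i
    coupling = (K / theta.size) * np.sin(diff).sum(axis=1)
    return theta + dt * (omega + coupling)

def order_parameter(theta):
    """Magnitude of the mean phase vector; 1 means full synchronization."""
    return np.abs(np.exp(1j * theta).mean())

n = 10
theta = np.linspace(0.0, 2.0, n)   # initial phases spread over 2 radians
omega = np.zeros(n)                # identical natural frequencies (assumed)

r_start = order_parameter(theta)
for _ in range(2000):
    theta = kuramoto_step(theta, omega, K=2.0, dt=0.01)
r_end = order_parameter(theta)     # approaches 1 as the phases align
```

With heterogeneous natural frequencies the system synchronizes only partially, and only above a critical coupling strength, which makes it a more demanding prediction target than this idealized case.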

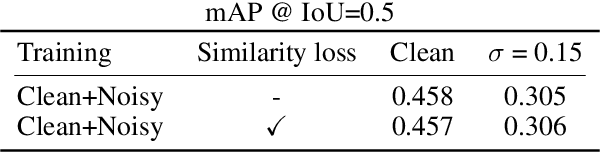

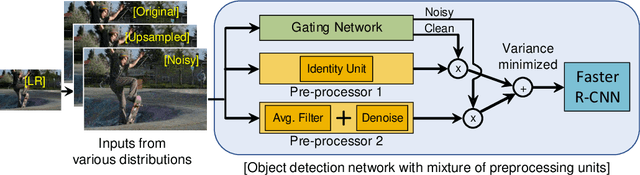

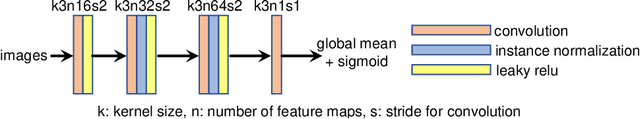

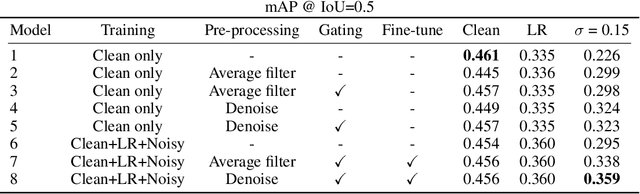

Mixture of Pre-processing Experts Model for Noise Robust Deep Learning on Resource Constrained Platforms

Apr 29, 2019

Abstract: Deep learning on an edge device requires energy-efficient operation because of ever-diminishing power budgets. Data intentionally acquired at low quality to extend battery life, together with natural noise from low-cost sensors, degrades the quality of the target output, which hinders adoption of deep learning on edge devices. To overcome these problems, we propose a simple yet efficient mixture of pre-processing experts (MoPE) model to handle various image distortions, including low-resolution and noisy images. We also propose to use an adversarially trained autoencoder as a pre-processing expert for noisy images. We evaluate our proposed method on various machine learning tasks, including object detection on the MS-COCO 2014 dataset, multiple object tracking on the MOT-Challenge dataset, and human activity classification on the UCF 101 dataset. Experimental results show that the proposed method achieves better detection, tracking, and activity classification accuracies under noise without sacrificing accuracy on clean images. MoPE adds overheads of 0.67% in memory and 0.17% in computation relative to the baseline object detection network.
