Biswadeep Chakraborty

FLAMES: A Hybrid Spiking-State Space Model for Adaptive Memory Retention in Event-Based Learning

Apr 02, 2025

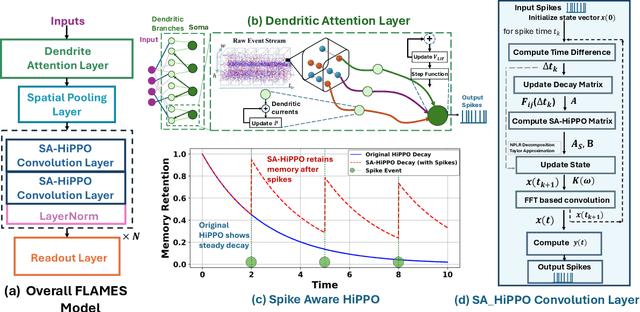

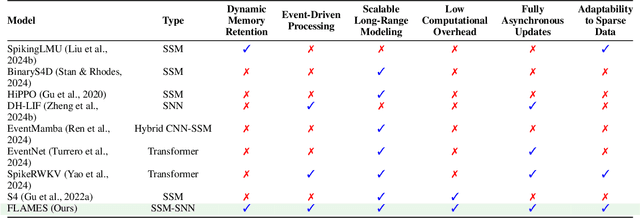

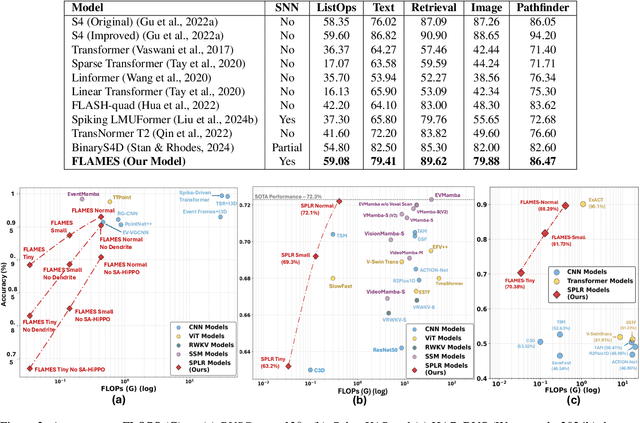

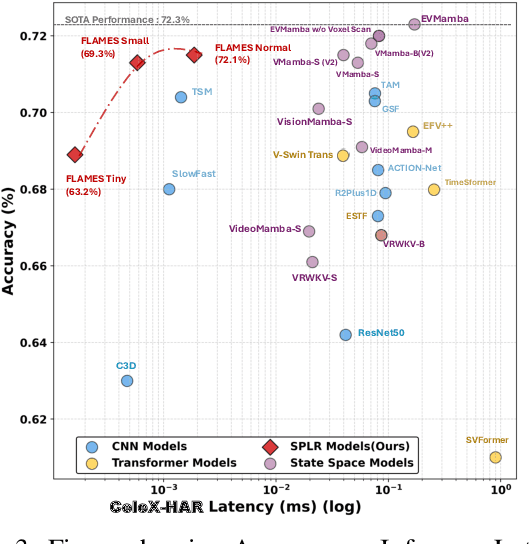

Abstract: We propose FLAMES (Fast Long-range Adaptive Memory for Event-based Systems), a novel hybrid framework integrating structured state-space dynamics with event-driven computation. At its core, the Spike-Aware HiPPO (SA-HiPPO) mechanism dynamically adjusts memory retention based on inter-spike intervals, preserving both short- and long-range dependencies. To maintain computational efficiency, we introduce a normal-plus-low-rank (NPLR) decomposition, reducing complexity from $\mathcal{O}(N^2)$ to $\mathcal{O}(Nr)$. FLAMES achieves state-of-the-art results on the Long Range Arena benchmark and event datasets like HAR-DVS and Celex-HAR. By bridging neuromorphic computing and structured sequence modeling, FLAMES enables scalable long-range reasoning in event-driven systems.
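
The complexity reduction mentioned in the abstract can be illustrated with a minimal sketch. It assumes the normal part has already been diagonalized, so the state matrix takes the form `diag(d) + P @ Q.T` with rank `r`; the names `nplr_matvec`, `d`, `P`, and `Q` are hypothetical and are not taken from the paper. The sketch only shows why a matrix-vector product then costs $\mathcal{O}(Nr)$ rather than $\mathcal{O}(N^2)$; it is not the FLAMES implementation.

```python
import numpy as np

def nplr_matvec(d, P, Q, x):
    """Compute (diag(d) + P @ Q.T) @ x without forming the N x N matrix."""
    # Diagonal part costs O(N); low-rank part costs O(N r) via two thin products.
    return d * x + P @ (Q.T @ x)

rng = np.random.default_rng(0)
N, r = 64, 2                      # illustrative sizes (assumed)
d = rng.standard_normal(N)        # diagonal of the (diagonalized) normal part
P = rng.standard_normal((N, r))   # low-rank factors
Q = rng.standard_normal((N, r))
x = rng.standard_normal(N)

dense = np.diag(d) + P @ Q.T      # O(N^2) reference, built only for checking
fast = nplr_matvec(d, P, Q, x)
matches = np.allclose(dense @ x, fast)
print(matches)
```

The dense matrix is built here only to check the result; in practice only `d`, `P`, and `Q` would be stored.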

Dynamic Graph Structure Estimation for Learning Multivariate Point Process using Spiking Neural Networks

Apr 01, 2025

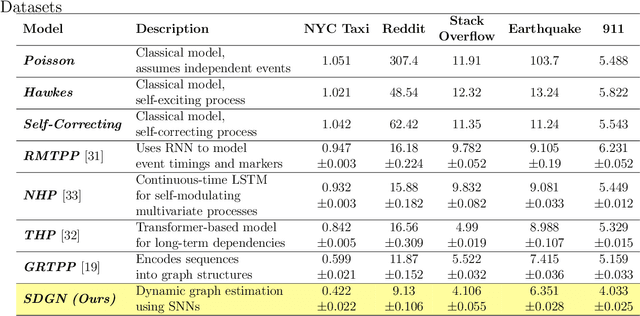

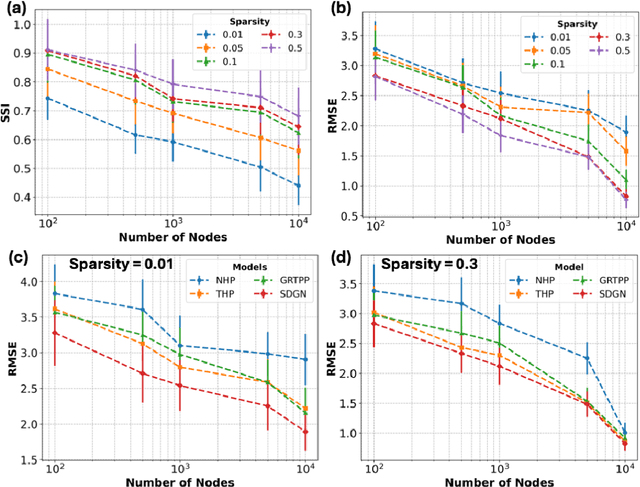

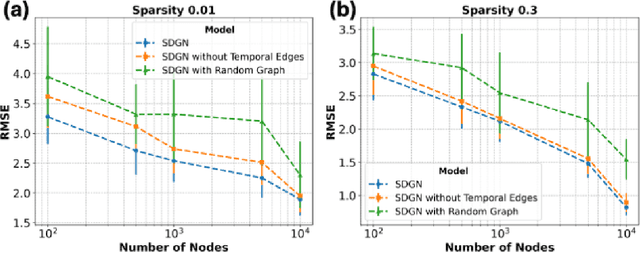

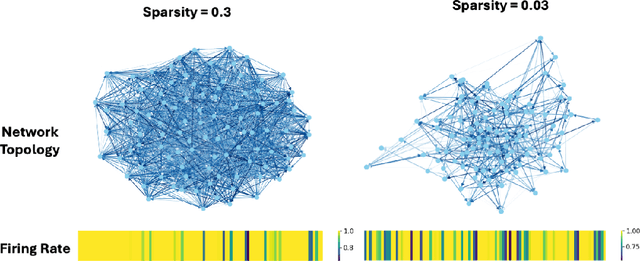

Abstract: Modeling and predicting temporal point processes (TPPs) is critical in domains such as neuroscience, epidemiology, finance, and social sciences. We introduce the Spiking Dynamic Graph Network (SDGN), a novel framework that leverages the temporal processing capabilities of spiking neural networks (SNNs) and spike-timing-dependent plasticity (STDP) to dynamically estimate underlying spatio-temporal functional graphs. Unlike existing methods that rely on predefined or static graph structures, SDGN adapts to any dataset by learning dynamic spatio-temporal dependencies directly from the event data, enhancing generalizability and robustness. While SDGN offers significant improvements over prior methods, we acknowledge its limitations in handling dense graphs and certain non-Gaussian dependencies, providing opportunities for future refinement. Our evaluations, conducted on both synthetic and real-world datasets including NYC Taxi, 911, Reddit, and Stack Overflow, demonstrate that SDGN achieves superior predictive accuracy while maintaining computational efficiency. Furthermore, we include ablation studies to highlight the contributions of its core components.

A Dynamical Systems-Inspired Pruning Strategy for Addressing Oversmoothing in Graph Neural Networks

Dec 10, 2024

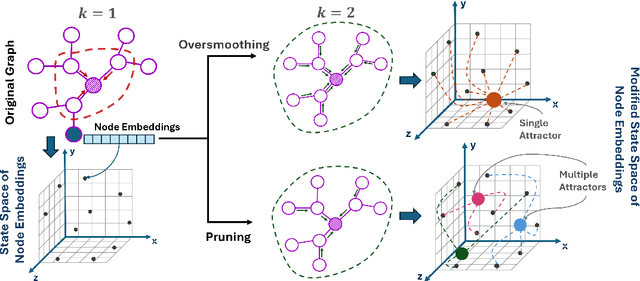

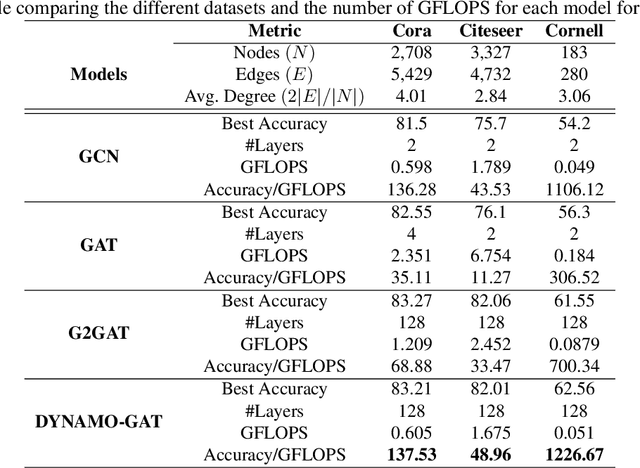

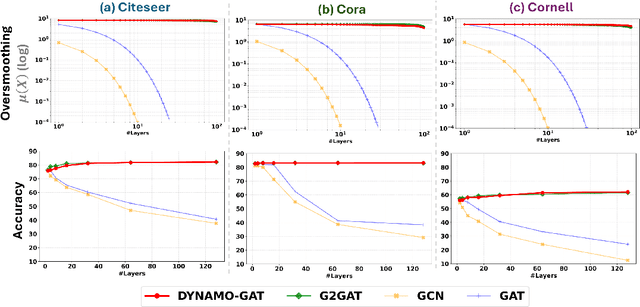

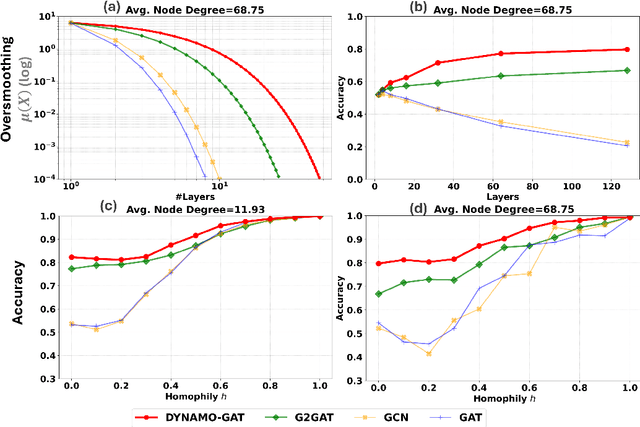

Abstract: Oversmoothing in Graph Neural Networks (GNNs) poses a significant challenge as network depth increases, leading to homogenized node representations and a loss of expressiveness. In this work, we approach the oversmoothing problem from a dynamical systems perspective, providing a deeper understanding of the stability and convergence behavior of GNNs. Leveraging insights from dynamical systems theory, we identify the root causes of oversmoothing and propose DYNAMO-GAT. This approach utilizes noise-driven covariance analysis and Anti-Hebbian principles to selectively prune redundant attention weights, dynamically adjusting the network's behavior to maintain node feature diversity and stability. Our theoretical analysis reveals how DYNAMO-GAT disrupts the convergence to oversmoothed states, while experimental results on benchmark datasets demonstrate its superior performance and efficiency compared to traditional and state-of-the-art methods. DYNAMO-GAT not only advances the theoretical understanding of oversmoothing through the lens of dynamical systems but also provides a practical and effective solution for improving the stability and expressiveness of deep GNNs.
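
The oversmoothing effect described in the abstract can be reproduced in a toy setting. The sketch below is an assumption-laden illustration, not DYNAMO-GAT: it uses a hypothetical 6-node ring graph with self-loops and applies plain neighbor averaging (no learned weights, attention, or nonlinearity) repeatedly, then measures how far node features still differ from one another.

```python
import numpy as np

n = 6                                   # hypothetical ring graph with 6 nodes
A = np.zeros((n, n))
for i in range(n):
    A[i, i] = 1.0                       # self-loop
    A[i, (i + 1) % n] = 1.0             # right neighbor
    A[i, (i - 1) % n] = 1.0             # left neighbor
P = A / A.sum(axis=1, keepdims=True)    # row-normalized propagation matrix

rng = np.random.default_rng(0)
H = rng.standard_normal((n, 3))         # initial node features (3 per node)
spread_before = H.std(axis=0).max()     # variation of features across nodes

for _ in range(50):                     # 50 rounds of neighbor averaging
    H = P @ H

spread_after = H.std(axis=0).max()
print(spread_before, spread_after)
```

After enough averaging rounds every node carries nearly the same feature vector, which is the homogenization that the pruning strategy in the abstract is meant to counteract.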

Online Relational Inference for Evolving Multi-agent Interacting Systems

Nov 03, 2024

Abstract: We introduce a novel framework, Online Relational Inference (ORI), designed to efficiently identify hidden interaction graphs in evolving multi-agent interacting systems using streaming data. Unlike traditional offline methods that rely on a fixed training set, ORI employs online backpropagation, updating the model with each new data point, thereby allowing it to adapt to changing environments in real time. A key innovation is the use of an adjacency matrix as a trainable parameter, optimized through a new adaptive learning rate technique called AdaRelation, which adjusts based on the historical sensitivity of the decoder to changes in the interaction graph. Additionally, a data augmentation method named Trajectory Mirror (TM) is introduced to improve generalization by exposing the model to varied trajectory patterns. Experimental results on both synthetic datasets and real-world data (CMU MoCap for human motion) demonstrate that ORI significantly improves the accuracy and adaptability of relational inference in dynamic settings compared to existing methods. This approach is model-agnostic, enabling seamless integration with various neural relational inference (NRI) architectures, and offers a robust solution for real-time applications in complex, evolving systems.
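
The idea of treating the interaction matrix as a trainable parameter updated from streaming data can be sketched in a toy linear setting. Everything here is an assumption made for illustration: the dynamics `x_next = A_true @ x`, the fixed normalized step size `step`, and the variable names. It does not implement AdaRelation, Trajectory Mirror, or any NRI decoder.

```python
import numpy as np

rng = np.random.default_rng(0)
n_agents = 4
A_true = rng.standard_normal((n_agents, n_agents))  # hidden interaction matrix
A_hat = np.zeros((n_agents, n_agents))              # trainable estimate
step = 0.5                                          # hypothetical fixed step size

for _ in range(2000):
    x = rng.standard_normal(n_agents)   # one streamed sample of agent states
    x_next = A_true @ x                 # observed next states (noise-free toy)
    err = x_next - A_hat @ x            # prediction error of the current estimate
    # Normalized gradient step on 0.5 * ||err||^2 with respect to A_hat.
    A_hat += step * np.outer(err, x) / (x @ x)

max_abs_error = np.abs(A_hat - A_true).max()
print(max_abs_error)
```

Each sample is used once and then discarded, which mirrors the online setting in the abstract; an adaptive rule such as AdaRelation would replace the fixed `step`.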

RoboKoop: Efficient Control Conditioned Representations from Visual Input in Robotics using Koopman Operator

Sep 04, 2024

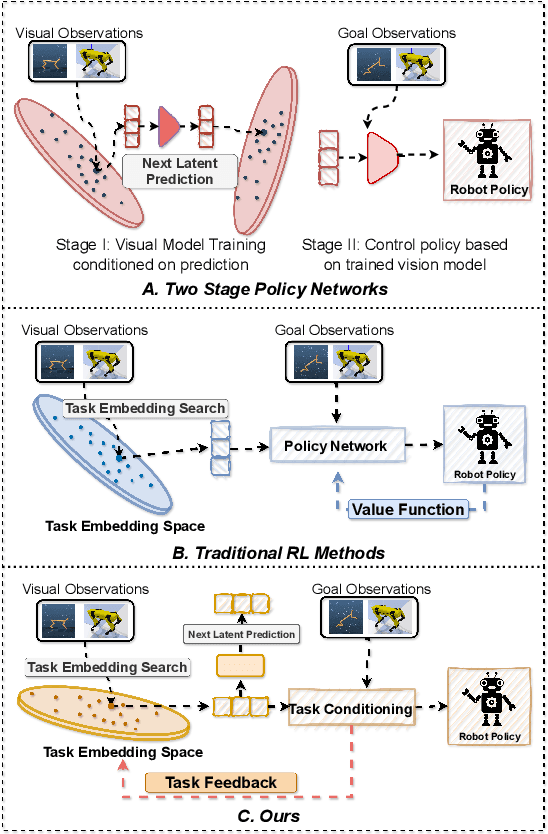

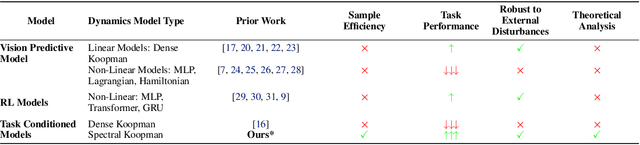

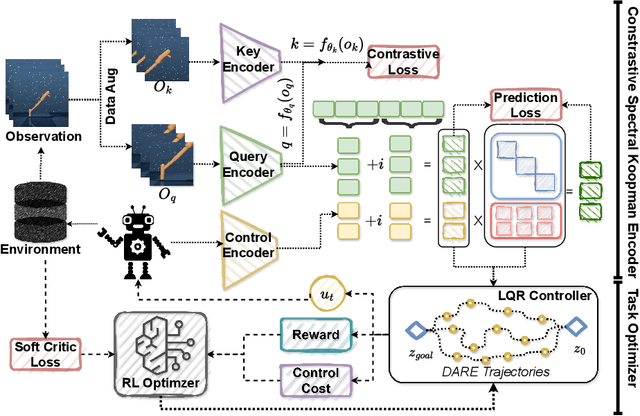

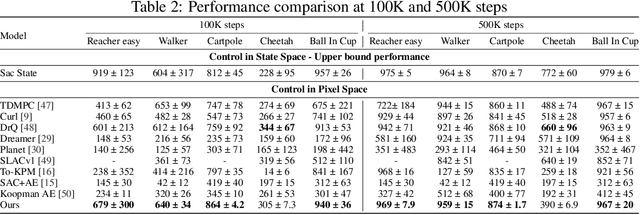

Abstract: Performing complex control tasks from high-dimensional observations is a core ability of autonomous agents; it requires robust task control policies and visual representations adapted to the task. Most existing policies need a lot of training samples and treat this problem through the lens of two-stage learning, with a controller learned on top of pre-trained vision models. We approach this problem through the lens of Koopman theory and learn visual representations from robotic agents conditioned on specific downstream tasks in the context of learning stabilizing control for the agent. We introduce a Contrastive Spectral Koopman Embedding network that allows us to learn efficient linearized visual representations from the agent's visual data in a high-dimensional latent space and utilizes reinforcement learning to perform off-policy control on top of the extracted representations with a linear controller. Our method enhances stability and control in gradient dynamics over time, significantly outperforming existing approaches by improving efficiency and accuracy in learning task policies over extended horizons.

Exploiting Heterogeneity in Timescales for Sparse Recurrent Spiking Neural Networks for Energy-Efficient Edge Computing

Jul 08, 2024

Abstract: Spiking Neural Networks (SNNs) represent the forefront of neuromorphic computing, promising energy-efficient and biologically plausible models for complex tasks. This paper weaves together three groundbreaking studies that revolutionize SNN performance through the introduction of heterogeneity in neuron and synapse dynamics. We explore the transformative impact of Heterogeneous Recurrent Spiking Neural Networks (HRSNNs), supported by rigorous analytical frameworks and novel pruning methods like Lyapunov Noise Pruning (LNP). Our findings reveal how heterogeneity not only enhances classification performance but also reduces spiking activity, leading to more efficient and robust networks. By bridging theoretical insights with practical applications, this comprehensive summary highlights the potential of SNNs to outperform traditional neural networks while maintaining lower computational costs. Join us on a journey through the cutting-edge advancements that pave the way for the future of intelligent, energy-efficient neural computing.

Towards Robust Real-Time Hardware-based Mobile Malware Detection using Multiple Instance Learning Formulation

Apr 19, 2024

Abstract: This study introduces RT-HMD, a Hardware-based Malware Detector (HMD) for mobile devices, that refines malware representation in segmented time-series through a Multiple Instance Learning (MIL) approach. We address the mislabeling issue in real-time HMDs, where benign segments in malware time-series incorrectly inherit malware labels, leading to increased false positives. Utilizing the proposed Malicious Discriminative Score within the MIL framework, RT-HMD effectively identifies localized malware behaviors, thereby improving the predictive accuracy. Empirical analysis, using a hardware telemetry dataset collected from a mobile platform across 723 benign and 1033 malware samples, shows a 5% precision boost while maintaining recall, outperforming baselines affected by mislabeled benign segments.
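
The mislabeling issue described in the abstract can be made concrete with a small sketch. It uses generic max-pooling MIL over hypothetical per-segment scores; the function names, the scores, and the `threshold` value are assumptions for illustration and do not reproduce the paper's Malicious Discriminative Score.

```python
def bag_score(segment_scores):
    """Max-pooling MIL: a trace is as suspicious as its most suspicious segment."""
    return max(segment_scores)

def labels_naive(segment_scores, bag_is_malware):
    """Naive labeling: every segment inherits the label of the whole trace."""
    return [bag_is_malware] * len(segment_scores)

def labels_mil(segment_scores, bag_is_malware, threshold=0.5):
    """MIL-style labeling: only high-scoring segments of a malware trace count as malicious."""
    return [bag_is_malware and s >= threshold for s in segment_scores]

# Hypothetical scores for four segments of one malware time-series.
scores = [0.1, 0.2, 0.9, 0.15]
naive = labels_naive(scores, True)
mil = labels_mil(scores, True)
print(naive)
print(mil)
print(bag_score(scores))
```

Under naive labeling the three low-scoring segments are all treated as malware, which is the source of false positives the abstract describes; the MIL-style labeling marks only the third segment.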

Learning Locally Interacting Discrete Dynamical Systems: Towards Data-Efficient and Scalable Prediction

Apr 09, 2024

Abstract: Locally interacting dynamical systems, such as epidemic spread, rumor propagation through crowds, and forest fires, exhibit complex global dynamics originating from local, relatively simple, and often stochastic interactions between dynamic elements. Their temporal evolution is often driven by transitions between a finite number of discrete states. Despite significant advancements in predictive modeling through deep learning, such interactions among many elements have rarely been explored as a specific domain for predictive modeling. We present Attentive Recurrent Neural Cellular Automata (AR-NCA) to effectively discover unknown local state transition rules by associating the temporal information between neighboring cells in a permutation-invariant manner. AR-NCA exhibits superior generalizability across various system configurations (i.e., spatial distribution of states), data efficiency and robustness in extremely data-limited scenarios even in the presence of stochastic interactions, and scalability through spatial dimension-independent prediction.

Topological Representations of Heterogeneous Learning Dynamics of Recurrent Spiking Neural Networks

Mar 19, 2024

Abstract: Spiking Neural Networks (SNNs) have become an essential paradigm in neuroscience and artificial intelligence, providing brain-inspired computation. Recent advances in the literature have studied the network representations of deep neural networks. However, there has been little work that studies representations learned by SNNs, especially using unsupervised local learning methods like spike-timing dependent plasticity (STDP). Recent work by Barannikov et al. (2021) has introduced a novel method to compare topological mappings of learned representations called Representation Topology Divergence (RTD). Though useful, this method is engineered particularly for feedforward deep neural networks and cannot be used for recurrent networks like Recurrent SNNs (RSNNs). This paper introduces a novel methodology to use RTD to measure the difference between distributed representations of RSNN models with different learning methods. We propose a novel reformulation of RSNNs using feedforward autoencoder networks with skip connections to help us compute the RTD for recurrent networks. Thus, we investigate the learning capabilities of RSNNs trained using STDP and the role of heterogeneity in the synaptic dynamics in learning such representations. We demonstrate that heterogeneous STDP in RSNNs yields representations distinct from those of their homogeneous and surrogate gradient-based supervised learning counterparts. Our results provide insights into the potential of heterogeneous SNN models, aiding the development of more efficient and biologically plausible hybrid artificial intelligence systems.

Sparse Spiking Neural Network: Exploiting Heterogeneity in Timescales for Pruning Recurrent SNN

Mar 06, 2024

Abstract: Recurrent Spiking Neural Networks (RSNNs) have emerged as a computationally efficient and brain-inspired learning model. The design of sparse RSNNs with fewer neurons and synapses helps reduce the computational complexity of RSNNs. Traditionally, sparse SNNs are obtained by first training a dense and complex SNN for a target task and then pruning neurons with low activity (activity-based pruning) while maintaining task performance. In contrast, this paper presents a task-agnostic methodology for designing sparse RSNNs by pruning a large randomly initialized model. We introduce a novel Lyapunov Noise Pruning (LNP) algorithm that uses graph sparsification methods and utilizes Lyapunov exponents to design a stable sparse RSNN from a randomly initialized RSNN. We show that the LNP can leverage diversity in neuronal timescales to design a sparse Heterogeneous RSNN (HRSNN). Further, we show that the same sparse HRSNN model can be trained for different tasks, such as image classification and temporal prediction. We experimentally show that, in spite of being task-agnostic, LNP increases computational efficiency (fewer neurons and synapses) and prediction performance of RSNNs compared to traditional activity-based pruning of trained dense models.

* Published as a conference paper at ICLR 2024
