A Dynamical Systems-Inspired Pruning Strategy for Addressing Oversmoothing in Graph Neural Networks

Paper and Code

Dec 10, 2024

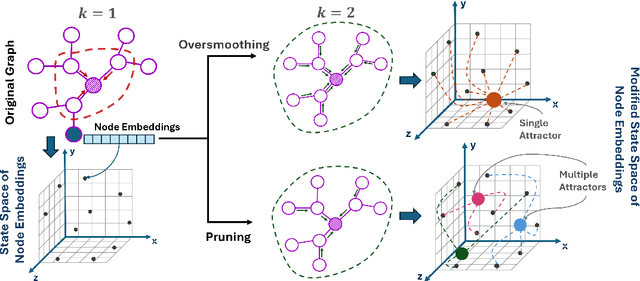

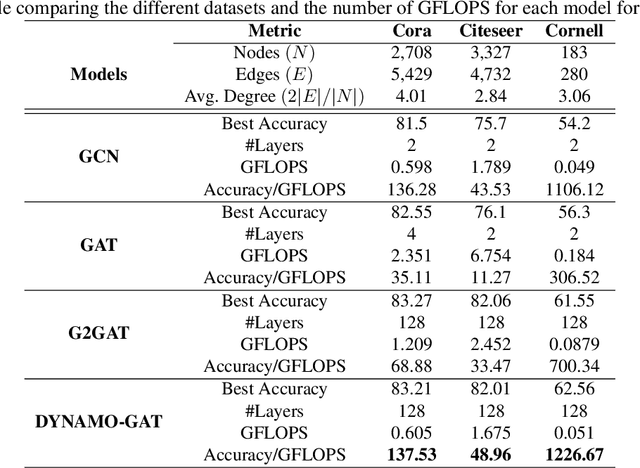

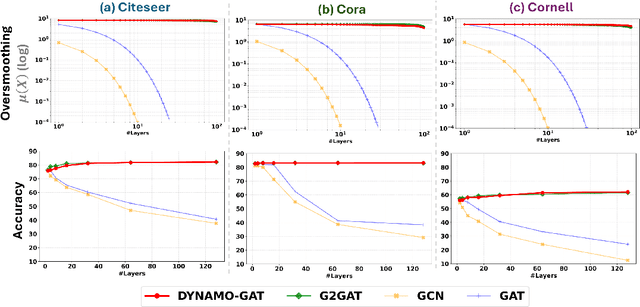

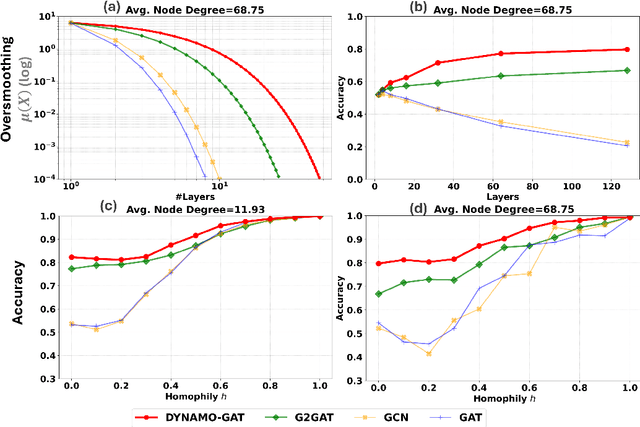

Oversmoothing in Graph Neural Networks (GNNs) poses a significant challenge as network depth increases, leading to homogenized node representations and a loss of expressiveness. In this work, we approach the oversmoothing problem from a dynamical systems perspective, providing a deeper understanding of the stability and convergence behavior of GNNs. Leveraging insights from dynamical systems theory, we identify the root causes of oversmoothing and propose ***DYNAMO-GAT***. This approach utilizes noise-driven covariance analysis and Anti-Hebbian principles to selectively prune redundant attention weights, dynamically adjusting the network's behavior to maintain node feature diversity and stability. Our theoretical analysis reveals how DYNAMO-GAT disrupts the convergence to oversmoothed states, while experimental results on benchmark datasets demonstrate its superior performance and efficiency compared to traditional and state-of-the-art methods. DYNAMO-GAT not only advances the theoretical understanding of oversmoothing through the lens of dynamical systems but also provides a practical and effective solution for improving the stability and expressiveness of deep GNNs.
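To make the oversmoothing phenomenon concrete, the following minimal sketch treats repeated neighborhood averaging as a discrete dynamical system and tracks how far apart node features remain after each layer. It is not DYNAMO-GAT and does not reproduce the paper's pruning rule. Plain mean aggregation with self-loops stands in for a GNN layer (no learned weights, no attention, no nonlinearity), and the 6-node ring graph, the random features, and the helper names `mean_aggregate` and `feature_spread` are all assumptions made for illustration.

```python
import random


def mean_aggregate(features, neighbors):
    """Apply one round of mean aggregation over each node and its neighbors."""
    updated = []
    for i, nbrs in enumerate(neighbors):
        group = [i] + nbrs  # include a self-loop
        dim = len(features[i])
        updated.append(
            [sum(features[j][d] for j in group) / len(group) for d in range(dim)]
        )
    return updated


def feature_spread(features):
    """Largest per-dimension gap between any two nodes (0 means identical rows)."""
    dim = len(features[0])
    return max(
        max(f[d] for f in features) - min(f[d] for f in features)
        for d in range(dim)
    )


# Hypothetical toy graph: a ring of 6 nodes, each linked to its two neighbors.
num_nodes = 6
neighbors = [[(i - 1) % num_nodes, (i + 1) % num_nodes] for i in range(num_nodes)]

# Random 3-dimensional node features, seeded for repeatability.
rng = random.Random(0)
features = [[rng.uniform(-1.0, 1.0) for _ in range(3)] for _ in range(num_nodes)]

# Stack 50 "layers" and record the spread after each one.
spreads = [feature_spread(features)]
for _ in range(50):
    features = mean_aggregate(features, neighbors)
    spreads.append(feature_spread(features))

for depth in (0, 1, 5, 10, 50):
    print(f"depth {depth:2d}: spread = {spreads[depth]:.3e}")
```

In this toy setting the spread shrinks toward zero as depth grows, which is the homogenized fixed point the abstract describes. A pruning strategy of the kind proposed would aim to alter the aggregation structure so that the iteration no longer collapses to that state.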
