Pietro Michelucci

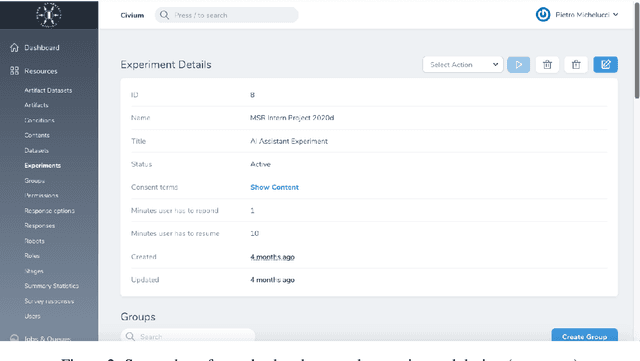

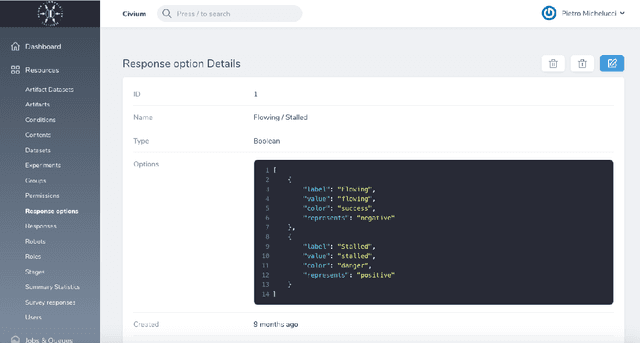

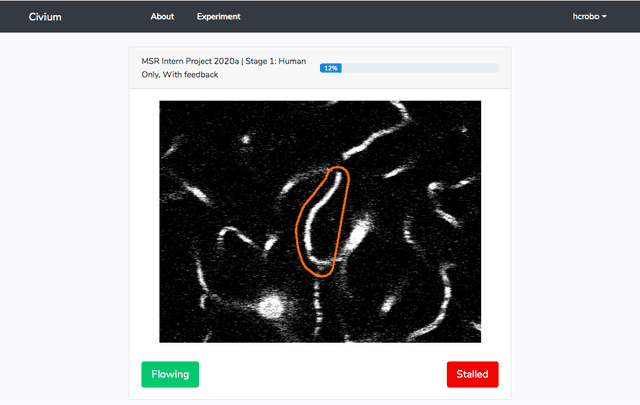

Advancing Human-AI Complementarity: The Impact of User Expertise and Algorithmic Tuning on Joint Decision Making

Aug 16, 2022

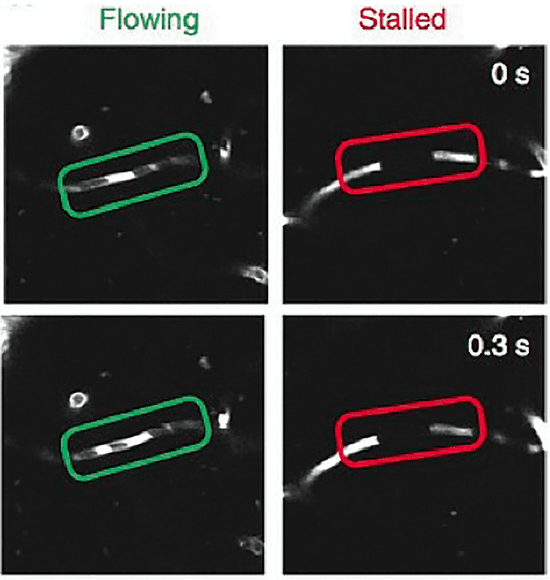

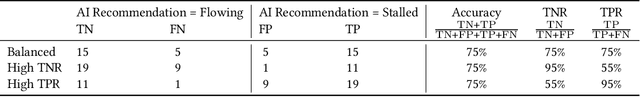

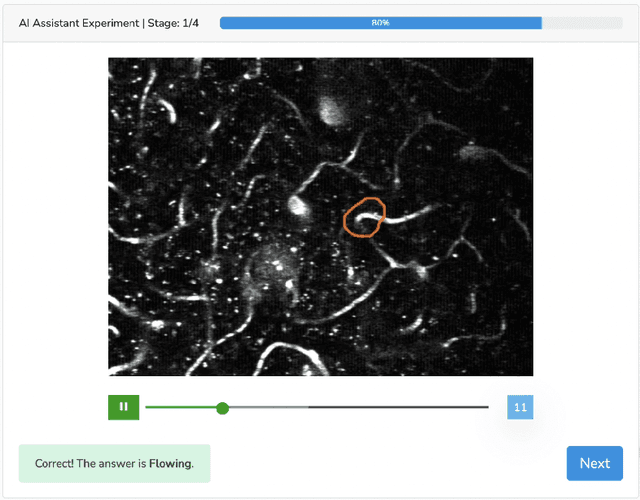

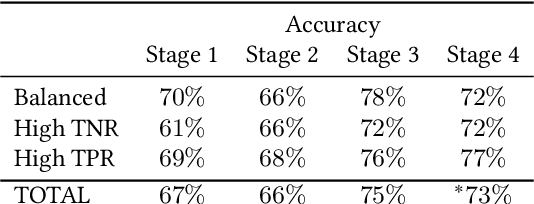

Abstract: Human-AI collaboration for decision-making strives to achieve team performance that exceeds the performance of humans or AI alone. However, many factors can impact the success of Human-AI teams, including a user's domain expertise, mental models of an AI system, trust in recommendations, and more. This work examines users' interaction with three simulated algorithmic models, all with similar accuracy but different tuning on their true positive and true negative rates. Our study examined user performance in a non-trivial blood vessel labeling task where participants indicated whether a given blood vessel was flowing or stalled. Our results show that while recommendations from an AI-Assistant can aid user decision making, factors such as users' baseline performance relative to the AI and complementary tuning of AI error types significantly impact overall team performance. Novice users improved, but not to the accuracy level of the AI. Highly proficient users were generally able to discern when they should follow the AI recommendation and typically maintained or improved their performance. Mid-performers, who had a similar level of accuracy to the AI, were most variable in terms of whether the AI recommendations helped or hurt their performance. In addition, we found that users' perception of the AI's performance relative to their own also had a significant impact on whether their accuracy improved when given AI recommendations. This work provides insights on the complexity of factors related to Human-AI collaboration and provides recommendations on how to develop human-centered AI algorithms to complement users in decision-making tasks.
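As a rough illustration of how models can share a similar accuracy while being tuned differently on true positive and true negative rates, the minimal sketch below computes overall accuracy from the two rates and the share of positive cases. The three tunings, the 50% prevalence, and the choice of "stalled" as the positive class are hypothetical values chosen for illustration; they are not taken from the study.

```python
def accuracy(tpr: float, tnr: float, prevalence: float) -> float:
    """Overall accuracy from true positive rate, true negative rate,
    and the fraction of cases that are actually positive."""
    return tpr * prevalence + tnr * (1.0 - prevalence)


# Assumption: half of the vessels are "stalled" (treated here as the positive class).
PREVALENCE = 0.5

# Hypothetical tunings as (true positive rate, true negative rate) pairs.
MODELS = {
    "balanced": (0.80, 0.80),
    "high_tpr": (0.90, 0.70),  # misses fewer stalls, raises more false alarms
    "high_tnr": (0.70, 0.90),  # raises fewer false alarms, misses more stalls
}

if __name__ == "__main__":
    for name, (tpr, tnr) in MODELS.items():
        acc = accuracy(tpr, tnr, PREVALENCE)
        print(f"{name}: TPR={tpr:.2f} TNR={tnr:.2f} accuracy={acc:.2f}")
```

Under these assumed numbers all three models reach the same accuracy, yet they make different kinds of errors, which is the sense in which a model's error profile could complement or duplicate a user's own.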

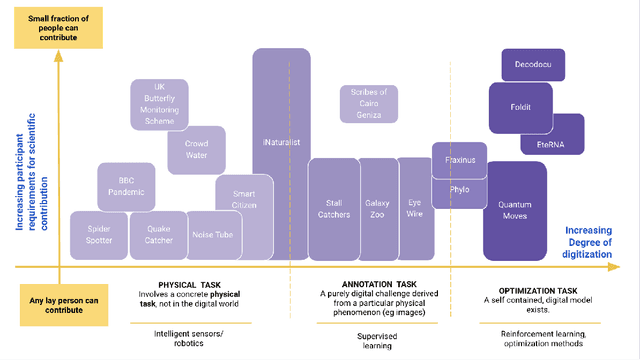

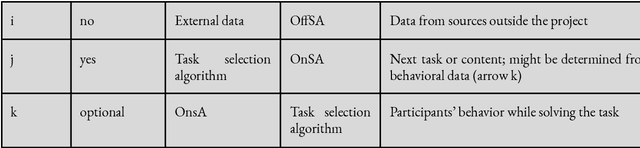

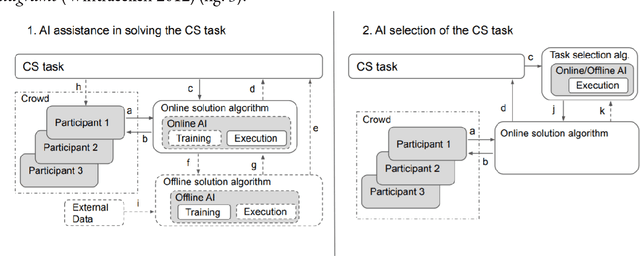

Revisiting Citizen Science Through the Lens of Hybrid Intelligence

Apr 30, 2021

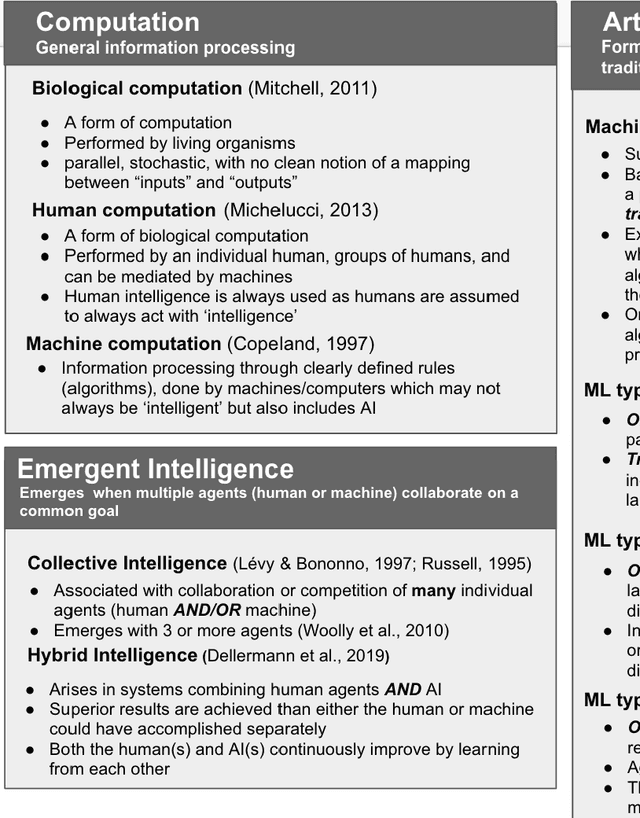

Abstract: Artificial Intelligence (AI) can augment and sometimes even replace human cognition. Inspired by efforts to value human agency alongside productivity, we discuss the benefits of solving Citizen Science (CS) tasks with Hybrid Intelligence (HI), a synergetic mixture of human and artificial intelligence. Currently there is no clear framework or methodology on how to create such an effective mixture. Due to the unique participant-centered set of values and the abundance of tasks drawing upon both human common sense and complex 21st century skills, we believe that the field of CS offers an invaluable testbed for the development of HI and human-centered AI of the 21st century, while benefiting CS as well. In order to investigate this potential, we first relate CS to adjacent computational disciplines. Then, we demonstrate that CS projects can be grouped according to their potential for HI-enhancement by examining two key dimensions: the level of digitization and the amount of knowledge or experience required for participation. Finally, we propose a framework for types of human-AI interaction in CS based on established criteria of HI. This "HI lens" provides the CS community with an overview of several ways to utilize the combination of AI and human intelligence in their projects. It also allows the AI community to gain ideas on how developing AI in CS projects can further their own field.

Imagine All the People: Citizen Science, Artificial Intelligence, and Computational Research

Apr 05, 2021

Abstract: Machine learning, artificial intelligence, and deep learning have advanced significantly over the past decade. Nonetheless, humans possess unique abilities such as creativity, intuition, context and abstraction, analytic problem solving, and detecting unusual events. To successfully tackle pressing scientific and societal challenges, we need the complementary capabilities of both humans and machines. The Federal Government could accelerate its priorities on multiple fronts through judicious integration of citizen science and crowdsourcing with artificial intelligence (AI), Internet of Things (IoT), and cloud strategies.

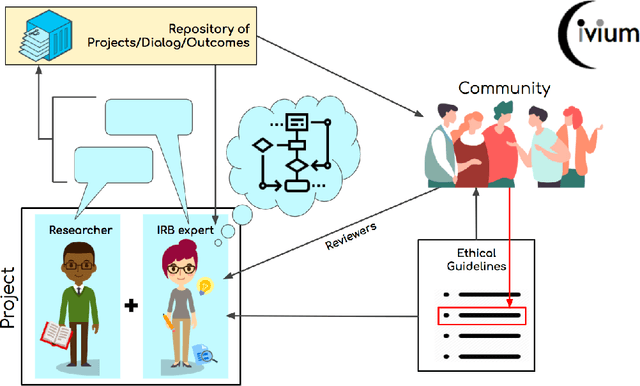

Human computation requires and enables a new approach to ethical review

Nov 21, 2020

Abstract: With humans increasingly serving as computational elements in distributed information processing systems and in consideration of the profit-driven motives and potential inequities that might accompany the emerging thinking economy[1], we recognize the need for establishing a set of related ethics to ensure the fair treatment and wellbeing of online cognitive laborers and the conscientious use of the capabilities to which they contribute. Toward this end, we first describe human-in-the-loop computing in the context of the new concerns it raises that are not addressed by traditional ethical research standards. We then describe shortcomings in the traditional approach to ethical review and introduce a dynamic approach for sustaining an ethical framework that can continue to evolve within the rapidly shifting context of disruptive new technologies.

A U.S. Research Roadmap for Human Computation

May 26, 2015

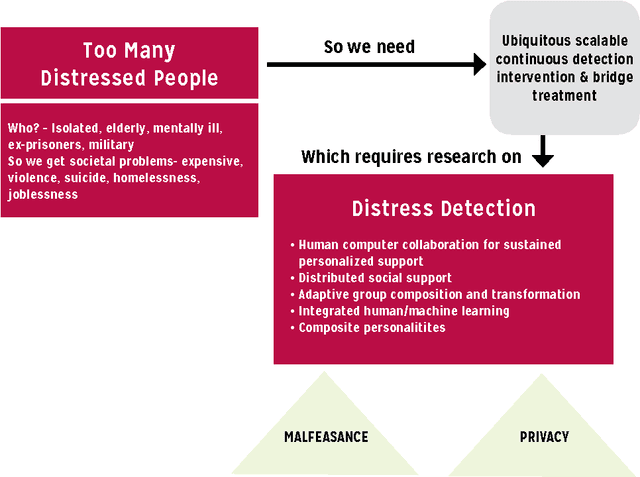

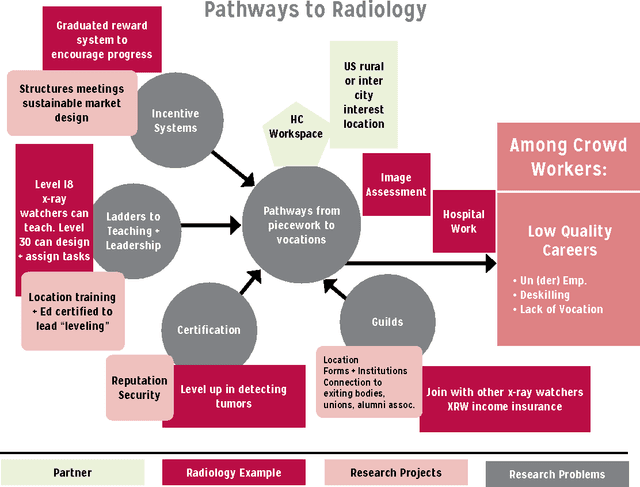

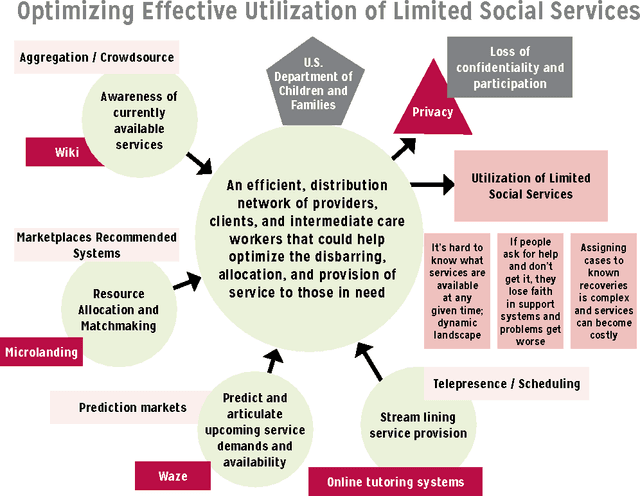

Abstract: The Web has made it possible to harness human cognition en masse to achieve new capabilities. Some of these successes are well known; for example, Wikipedia has become the go-to place for basic information on all things; Duolingo engages millions of people in real-life translation of text, while simultaneously teaching them to speak foreign languages; and fold.it has enabled public-driven scientific discoveries by recasting complex biomedical challenges into popular online puzzle games. These and other early successes hint at the tremendous potential for future crowd-powered capabilities for the benefit of health, education, science, and society. In the process, a new field called Human Computation has emerged to better understand, replicate, and improve upon these successes through scientific research. Human Computation refers to the science that underlies online crowd-powered systems and was the topic of a recent visioning activity in which a representative cross-section of researchers, industry practitioners, visionaries, funding agency representatives, and policy makers came together to understand what makes crowd-powered systems successful. Teams of experts considered past, present, and future human computation systems to explore which kinds of crowd-powered systems have the greatest potential for societal impact and which kinds of research will best enable the efficient development of new crowd-powered systems to achieve this impact. This report summarizes the products and findings of those activities as well as the unconventional process and activities employed by the workshop, which were informed by human computation research.
