Divya Ramesh

Advancing Human-AI Complementarity: The Impact of User Expertise and Algorithmic Tuning on Joint Decision Making

Aug 16, 2022

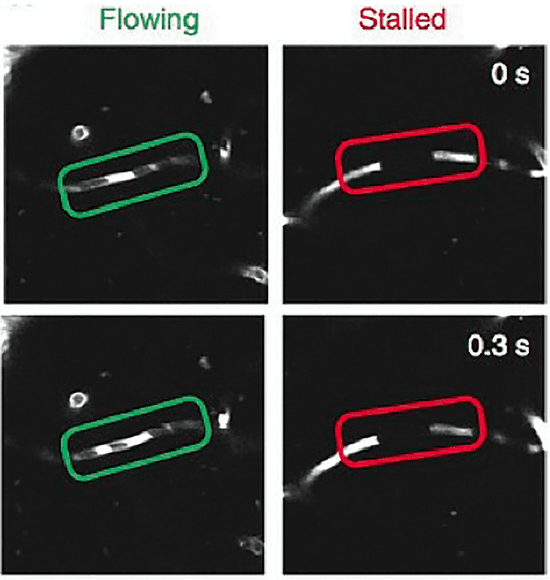

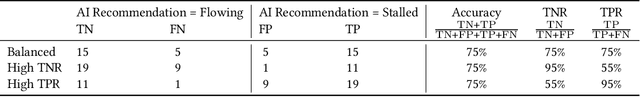

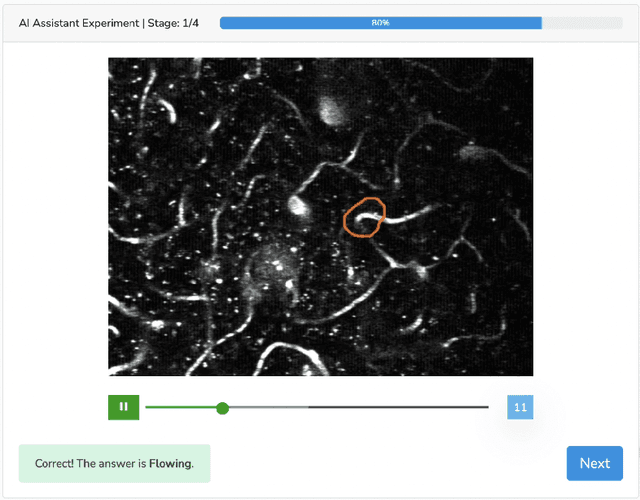

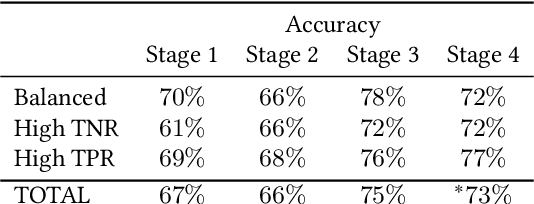

Abstract: Human-AI collaboration for decision-making strives to achieve team performance that exceeds the performance of humans or AI alone. However, many factors can affect the success of Human-AI teams, including a user's domain expertise, mental models of an AI system, trust in recommendations, and more. This work examines users' interaction with three simulated algorithmic models, all with similar accuracy but different tuning on their true positive and true negative rates. Our study examined user performance in a non-trivial blood vessel labeling task where participants indicated whether a given blood vessel was flowing or stalled. Our results show that while recommendations from an AI-Assistant can aid user decision making, factors such as users' baseline performance relative to the AI and complementary tuning of AI error types significantly impact overall team performance. Novice users improved, but not to the accuracy level of the AI. Highly proficient users were generally able to discern when they should follow the AI recommendation and typically maintained or improved their performance. Mid-performers, who had a similar level of accuracy to the AI, were most variable in terms of whether the AI recommendations helped or hurt their performance. In addition, we found that users' perception of the AI's performance relative to their own also had a significant impact on whether their accuracy improved when given AI recommendations. This work provides insights on the complexity of factors related to Human-AI collaboration and provides recommendations on how to develop human-centered AI algorithms to complement users in decision-making tasks.
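As an illustration of how models can share a similar accuracy while being tuned toward different error types, the sketch below computes overall accuracy as a prevalence-weighted combination of the true positive rate (TPR) and true negative rate (TNR). The three tunings, the model names, and the prevalence values are hypothetical and chosen only for illustration; they are not the values used in the study.

```python
def accuracy(tpr, tnr, prevalence):
    """Overall accuracy as a prevalence-weighted mix of TPR and TNR."""
    return prevalence * tpr + (1.0 - prevalence) * tnr


# Hypothetical (TPR, TNR) tunings; NOT the settings reported in the paper.
MODELS = {
    "high_tpr": (0.90, 0.70),  # misses few positives, raises more false alarms
    "balanced": (0.80, 0.80),  # errors split evenly between the two classes
    "high_tnr": (0.70, 0.90),  # raises few false alarms, misses more positives
}


def summarize(prevalence=0.5):
    """Return the accuracy of each hypothetical model at a given prevalence."""
    return {
        name: round(accuracy(tpr, tnr, prevalence), 3)
        for name, (tpr, tnr) in MODELS.items()
    }


if __name__ == "__main__":
    print(summarize(0.5))  # balanced classes
    print(summarize(0.2))  # positives are rare
```

Under these assumed numbers, the three models have the same accuracy when the classes are balanced, yet they make different kinds of mistakes, and their accuracies diverge once the positive class becomes rare.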

How Platform-User Power Relations Shape Algorithmic Accountability: A Case Study of Instant Loan Platforms and Financially Stressed Users in India

May 11, 2022

Abstract: Accountability, a requisite for responsible AI, can be facilitated through transparency mechanisms such as audits and explainability. However, prior work suggests that the success of these mechanisms may be limited to Global North contexts; understanding the limitations of current interventions in varied socio-political conditions is crucial to help policymakers facilitate wider accountability. To do so, we examined the mediation of accountability in the existing interactions between vulnerable users and a 'high-risk' AI system in a Global South setting. We report on a qualitative study with 29 financially-stressed users of instant loan platforms in India. We found that users experienced intense feelings of indebtedness for the 'boon' of instant loans, and perceived huge obligations towards loan platforms. Users fulfilled obligations by accepting harsh terms and conditions, over-sharing sensitive data, and paying high fees to unknown and unverified lenders. Users demonstrated a dependence on loan platforms by persisting with such behaviors despite the risk of harms such as abuse, recurring debts, discrimination, privacy harms, and self-harm. Instead of being enraged with loan platforms, users assumed responsibility for their negative experiences, thus releasing the high-powered loan platforms from accountability obligations. We argue that accountability is shaped by platform-user power relations, and urge policymakers to be cautious about adopting a purely technical approach to fostering algorithmic accountability. Instead, we call for situated interventions that enhance agency of users, enable meaningful transparency, reconfigure designer-user relations, and prompt a critical reflection in practitioners towards wider accountability. We conclude with implications for responsibly deploying AI in FinTech applications in India and beyond.

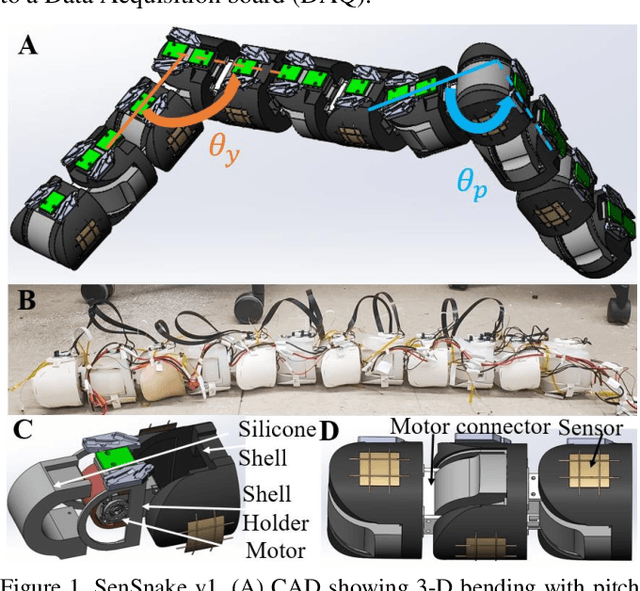

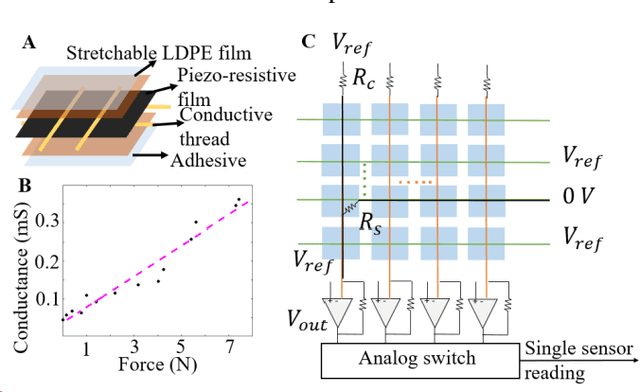

SenSnake: A snake robot with contact force sensing for studying locomotion in complex 3-D terrain

Dec 15, 2021

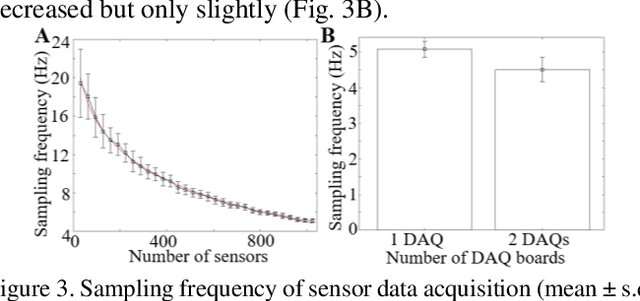

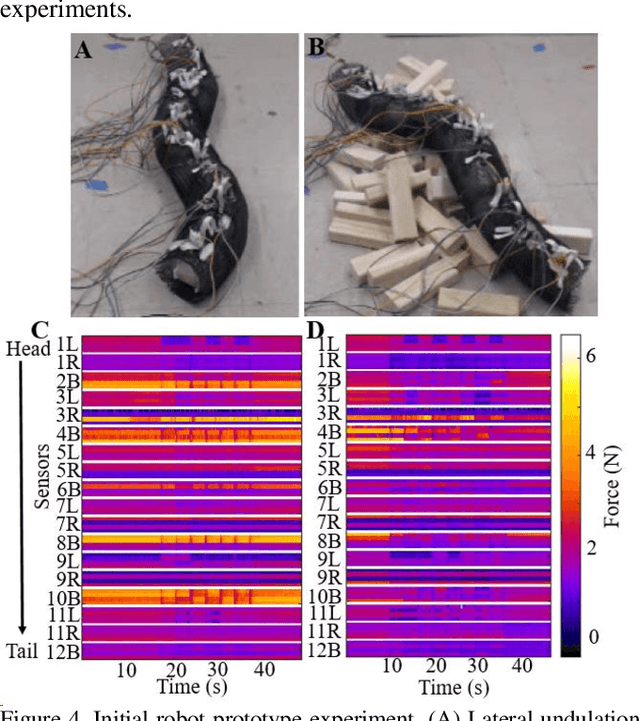

Abstract: Despite advances in a diversity of environments, snake robots are still far behind snakes in traversing complex 3-D terrain with large obstacles. This is due to a lack of understanding of how to control 3-D body bending to push against terrain features to generate and control propulsion. Biological studies suggested that generalist snakes use contact force sensing to adjust body bending in real time to do so. However, studying this sensory-modulated force control in snakes is challenging, due to a lack of basic knowledge of how their force sensing organs work. Here, we take a robophysics approach to make progress, starting by developing a snake robot capable of 3-D body bending with contact force sensing to enable systematic locomotion experiments and force measurements. Through two development and testing iterations, we created a 12-segment robot with 36 piezo-resistive sheet sensors distributed on all segments with compliant shells with a sampling frequency of 30 Hz. The robot measured contact forces while traversing a large obstacle using vertical bending with high repeatability, achieving the goal of providing a platform for systematic experiments. Finally, we explored model-based calibration considering the viscoelastic behavior of the piezo-resistive sensor, which will be useful for future studies.
