Nithya Sambasivan

How Platform-User Power Relations Shape Algorithmic Accountability: A Case Study of Instant Loan Platforms and Financially Stressed Users in India

May 11, 2022

Abstract: Accountability, a requisite for responsible AI, can be facilitated through transparency mechanisms such as audits and explainability. However, prior work suggests that the success of these mechanisms may be limited to Global North contexts; understanding the limitations of current interventions in varied socio-political conditions is crucial to help policymakers facilitate wider accountability. To do so, we examined the mediation of accountability in the existing interactions between vulnerable users and a 'high-risk' AI system in a Global South setting. We report on a qualitative study with 29 financially-stressed users of instant loan platforms in India. We found that users experienced intense feelings of indebtedness for the 'boon' of instant loans, and perceived huge obligations towards loan platforms. Users fulfilled obligations by accepting harsh terms and conditions, over-sharing sensitive data, and paying high fees to unknown and unverified lenders. Users demonstrated a dependence on loan platforms by persisting with such behaviors despite risks of harms such as abuse, recurring debts, discrimination, privacy harms, and self-harm to them. Instead of being enraged with loan platforms, users assumed responsibility for their negative experiences, thus releasing the high-powered loan platforms from accountability obligations. We argue that accountability is shaped by platform-user power relations, and urge caution to policymakers in adopting a purely technical approach to fostering algorithmic accountability. Instead, we call for situated interventions that enhance agency of users, enable meaningful transparency, reconfigure designer-user relations, and prompt a critical reflection in practitioners towards wider accountability. We conclude with implications for responsibly deploying AI in FinTech applications in India and beyond.

Re-imagining Algorithmic Fairness in India and Beyond

Jan 27, 2021

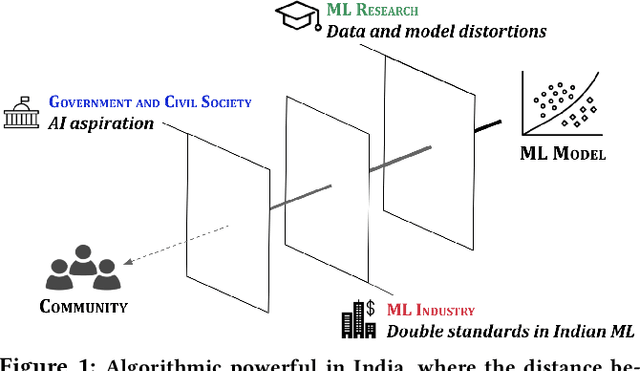

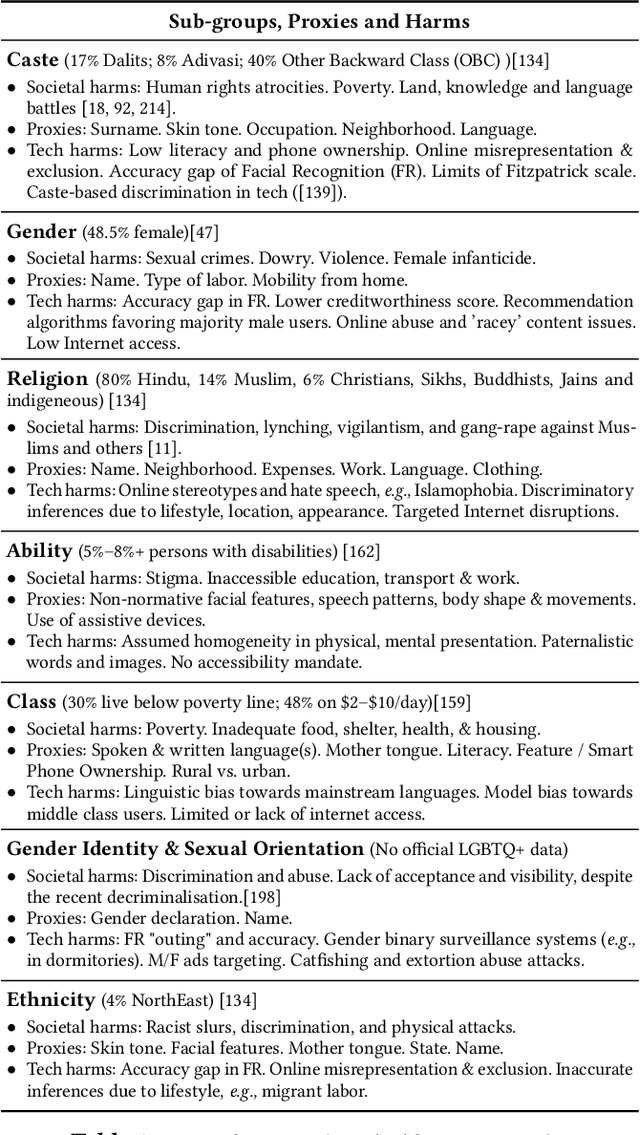

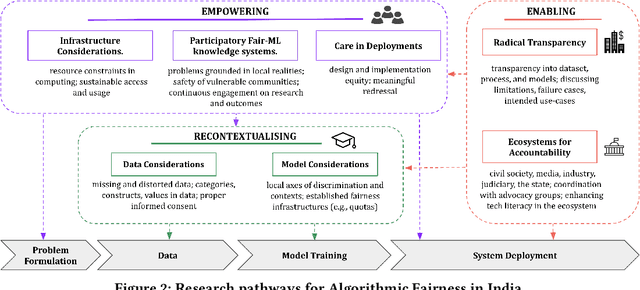

Abstract: Conventional algorithmic fairness is West-centric, as seen in its sub-groups, values, and methods. In this paper, we de-center algorithmic fairness and analyse AI power in India. Based on 36 qualitative interviews and a discourse analysis of algorithmic deployments in India, we find that several assumptions of algorithmic fairness are challenged. We find that in India, data is not always reliable due to socio-economic factors, ML makers appear to follow double standards, and AI evokes unquestioning aspiration. We contend that localising model fairness alone can be window dressing in India, where the distance between models and oppressed communities is large. Instead, we re-imagine algorithmic fairness in India and provide a roadmap to re-contextualise data and models, empower oppressed communities, and enable Fair-ML ecosystems.

Non-portability of Algorithmic Fairness in India

Dec 08, 2020

Abstract: Conventional algorithmic fairness is Western in its sub-groups, values, and optimizations. In this paper, we ask how portable the assumptions of this largely Western take on algorithmic fairness are to a different geo-cultural context such as India. Based on 36 expert interviews with Indian scholars, and an analysis of emerging algorithmic deployments in India, we identify three clusters of challenges that engulf the large distance between machine learning models and oppressed communities in India. We argue that a mere translation of technical fairness work to Indian subgroups may serve only as a window dressing, and instead, call for a collective re-imagining of Fair-ML, by re-contextualising data and models, empowering oppressed communities, and more importantly, enabling ecosystems.
