Libuše Hannah Vepřek

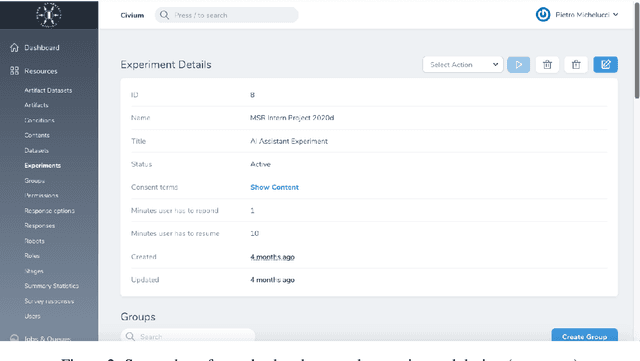

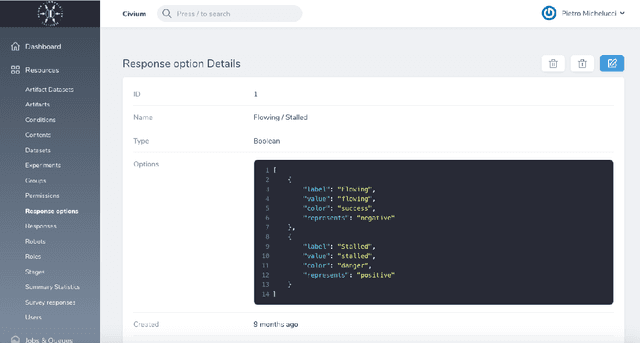

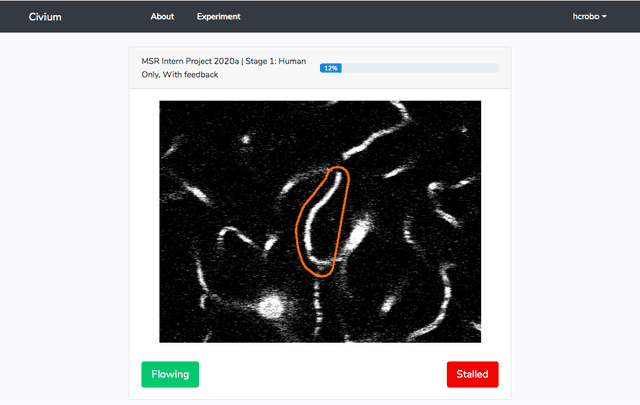

Advancing Human-AI Complementarity: The Impact of User Expertise and Algorithmic Tuning on Joint Decision Making

Aug 16, 2022

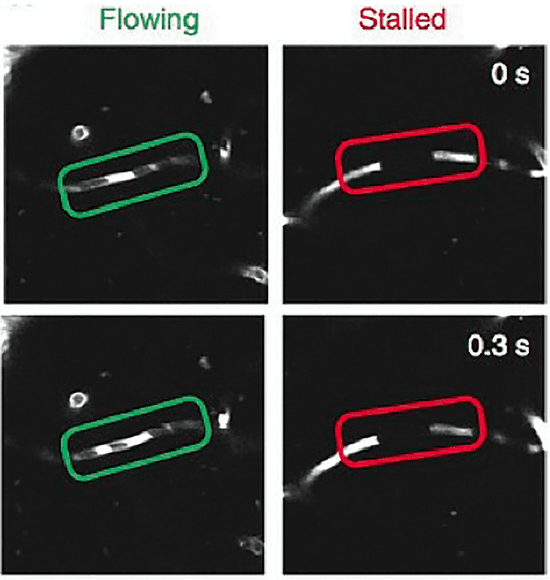

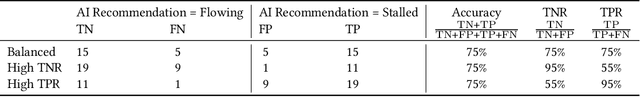

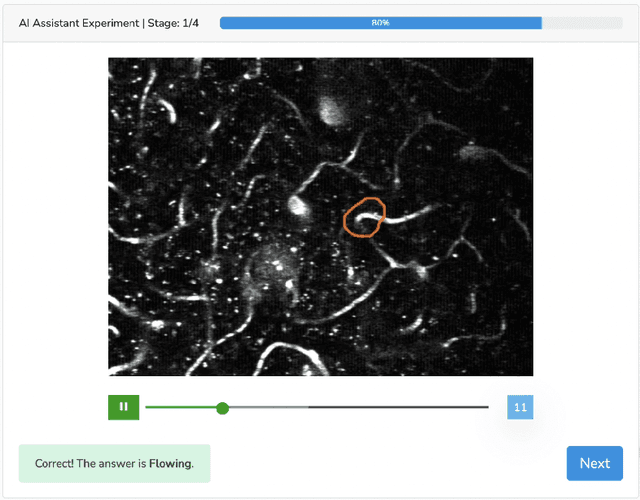

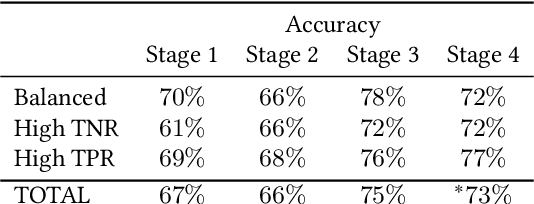

Abstract: Human-AI collaboration for decision-making strives to achieve team performance that exceeds the performance of humans or AI alone. However, many factors can affect the success of Human-AI teams, including a user's domain expertise, mental models of an AI system, trust in recommendations, and more. This work examines users' interaction with three simulated algorithmic models, all with similar accuracy but different tuning on their true positive and true negative rates. Our study examined user performance in a non-trivial blood vessel labeling task where participants indicated whether a given blood vessel was flowing or stalled. Our results show that while recommendations from an AI-Assistant can aid user decision making, factors such as users' baseline performance relative to the AI and complementary tuning of AI error types significantly impact overall team performance. Novice users improved, but not to the accuracy level of the AI. Highly proficient users were generally able to discern when they should follow the AI recommendation and typically maintained or improved their performance. Mid-performers, who had a similar level of accuracy to the AI, were most variable in terms of whether the AI recommendations helped or hurt their performance. In addition, we found that users' perception of the AI's performance relative to their own also had a significant impact on whether their accuracy improved when given AI recommendations. This work provides insights on the complexity of factors related to Human-AI collaboration and provides recommendations on how to develop human-centered AI algorithms to complement users in decision-making tasks.
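To make the phrase "similar accuracy but different tuning on their true positive and true negative rates" concrete, the following minimal sketch compares three hypothetical models. All counts, the model names, and the choice of "stalled" as the positive class are illustrative assumptions; they are not taken from the study.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ConfusionCounts:
    """Counts for a binary labeling task (hypothetical, for illustration only)."""
    tp: int  # positives correctly flagged
    fn: int  # positives missed
    tn: int  # negatives correctly passed
    fp: int  # negatives wrongly flagged

    @property
    def accuracy(self) -> float:
        total = self.tp + self.fn + self.tn + self.fp
        return (self.tp + self.tn) / total

    @property
    def true_positive_rate(self) -> float:
        return self.tp / (self.tp + self.fn)

    @property
    def true_negative_rate(self) -> float:
        return self.tn / (self.tn + self.fp)


# Assumption: 100 "stalled" (positive) and 100 "flowing" (negative) vessels.
# The numbers below are invented so that all three models share one accuracy.
MODELS = {
    "balanced": ConfusionCounts(tp=80, fn=20, tn=80, fp=20),
    "high_tpr": ConfusionCounts(tp=95, fn=5, tn=65, fp=35),
    "high_tnr": ConfusionCounts(tp=65, fn=35, tn=95, fp=5),
}


def summarize(models: dict) -> dict:
    """Map each model name to (accuracy, TPR, TNR)."""
    return {
        name: (c.accuracy, c.true_positive_rate, c.true_negative_rate)
        for name, c in models.items()
    }


if __name__ == "__main__":
    for name, (acc, tpr, tnr) in summarize(MODELS).items():
        print(f"{name:9s} accuracy={acc:.2f} TPR={tpr:.2f} TNR={tnr:.2f}")
```

In this sketch all three models are correct on 160 of 200 cases, yet they make different kinds of errors: one rarely misses a positive case, another rarely raises a false alarm. Which error profile best complements a given user is the kind of question the abstract describes.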

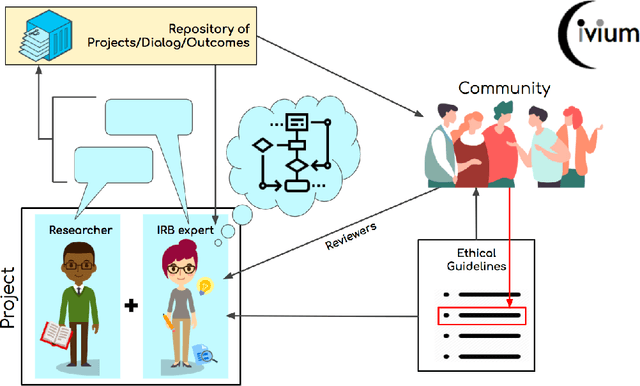

Human computation requires and enables a new approach to ethical review

Nov 21, 2020

Abstract: With humans increasingly serving as computational elements in distributed information processing systems, and in consideration of the profit-driven motives and potential inequities that might accompany the emerging thinking economy[1], we recognize the need for establishing a set of related ethics to ensure the fair treatment and wellbeing of online cognitive laborers and the conscientious use of the capabilities to which they contribute. Toward this end, we first describe human-in-the-loop computing in the context of the new concerns it raises that are not addressed by traditional ethical research standards. We then describe shortcomings in the traditional approach to ethical review and introduce a dynamic approach for sustaining an ethical framework that can continue to evolve within the rapidly shifting context of disruptive new technologies.
