Philippe-Henri Gosselin

S2F2: Self-Supervised High Fidelity Face Reconstruction from Monocular Image

Apr 05, 2022

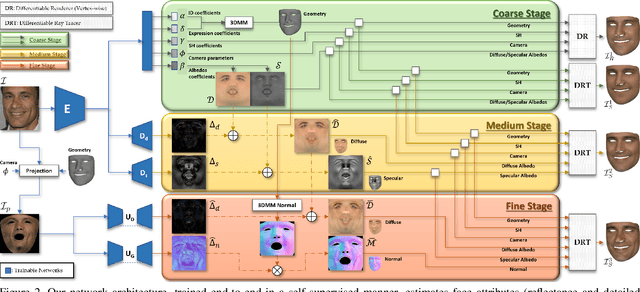

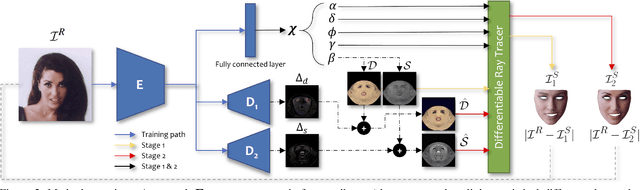

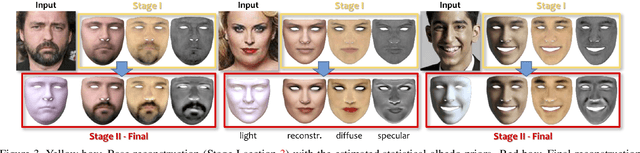

Abstract: We present a novel face reconstruction method capable of reconstructing detailed face geometry and spatially varying face reflectance from a single monocular image. We build our work upon recent advances in DNN-based auto-encoders with differentiable ray tracing image formation, trained in a self-supervised manner. While providing the advantages of learning-based approaches and real-time reconstruction, these earlier methods lacked fidelity. In this work, we achieve, for the first time, high fidelity face reconstruction using self-supervised learning only. Our novel coarse-to-fine deep architecture allows us to solve the challenging problem of decoupling face reflectance from geometry using a single image, at high computational speed. Compared to state-of-the-art methods, our method achieves more visually appealing reconstruction.

Towards High Fidelity Monocular Face Reconstruction with Rich Reflectance using Self-supervised Learning and Ray Tracing

Mar 29, 2021

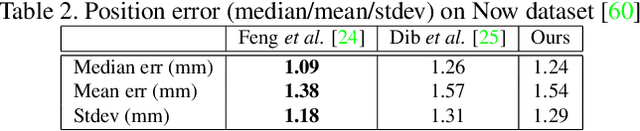

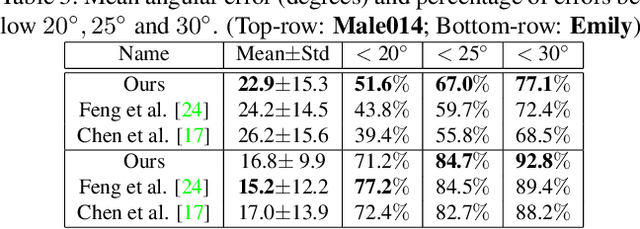

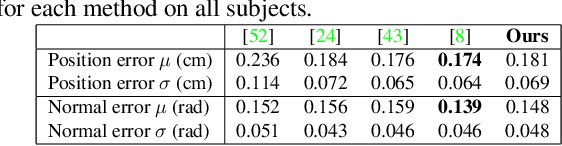

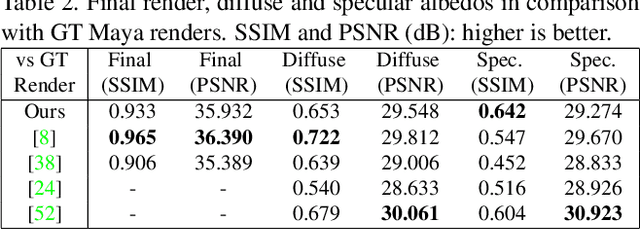

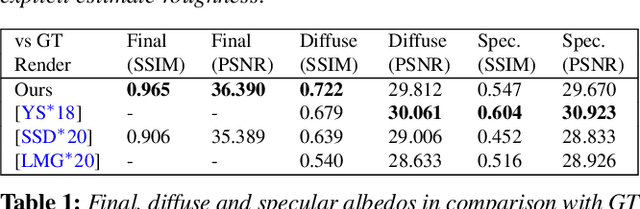

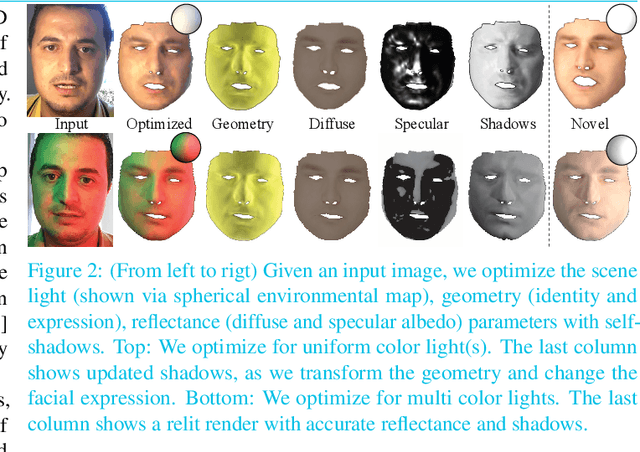

Abstract: Robust face reconstruction from a monocular image in general lighting conditions is challenging. Methods combining deep neural network encoders with differentiable rendering have opened up the path for very fast monocular reconstruction of geometry, lighting and reflectance. They can also be trained in a self-supervised manner for increased robustness and better generalization. However, their differentiable rasterization-based image formation models, as well as the underlying scene parameterization, limit them to Lambertian face reflectance and to poor shape details. More recently, ray tracing was introduced for monocular face reconstruction within a classic optimization-based framework, enabling state-of-the-art results. However, optimization-based approaches are inherently slow and lack robustness. In this paper, we build our work on the aforementioned approaches and propose a new method that greatly improves reconstruction quality and robustness in general scenes. We achieve this by combining a CNN encoder with a differentiable ray tracer, which enables us to base the reconstruction on much more advanced personalized diffuse and specular albedos, a more sophisticated illumination model and a plausible representation of self-shadows. This enables a big leap forward in the reconstruction quality of shape, appearance and lighting, even in scenes with difficult illumination. With consistent face attributes reconstruction, our method leads to practical applications such as relighting and self-shadow removal. Compared to state-of-the-art methods, our results show improved accuracy and validity of the approach.

Practical Face Reconstruction via Differentiable Ray Tracing

Jan 13, 2021

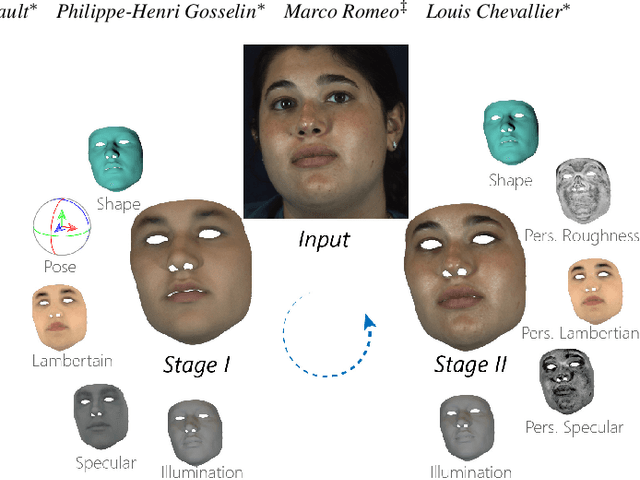

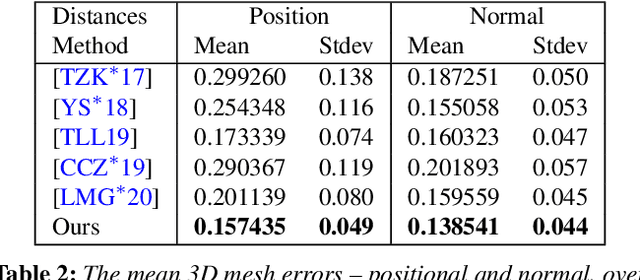

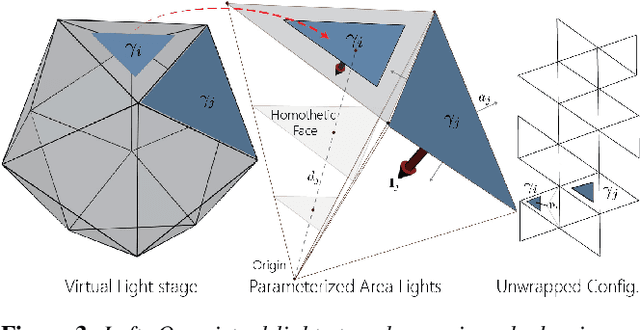

Abstract: We present a novel face reconstruction approach based on differentiable ray tracing, where scene attributes - 3D geometry, reflectance (diffuse, specular and roughness), pose, camera parameters, and scene illumination - are estimated from unconstrained monocular images. The proposed method models scene illumination via a novel, parameterized virtual light stage, which, in conjunction with differentiable ray tracing, introduces a coarse-to-fine optimization formulation for face reconstruction. Our method not only handles unconstrained illumination and self-shadow conditions, but also estimates diffuse and specular albedos. To estimate the face attributes consistently and with practical semantics, a two-stage optimization strategy systematically uses a subset of parametric attributes, where subsequent attribute estimations factor in those previously estimated. For example, self-shadows estimated during the first stage are later prevented from being baked into the personalized diffuse and specular albedos in the second stage. We show the efficacy of our approach in several real-world scenarios, where face attributes can be estimated even under extreme illumination conditions. Ablation studies, analyses and comparisons against several recent state-of-the-art methods show improved accuracy and versatility of our approach. With consistent face attributes reconstruction, our method leads to several style (illumination, albedo, self-shadow) editing and transfer applications, as discussed in the paper.

Face Reflectance and Geometry Modeling via Differentiable Ray Tracing

Oct 03, 2019

Abstract: We present a novel strategy to automatically reconstruct 3D faces from monocular images with explicitly disentangled facial geometry (pose, identity and expression), reflectance (diffuse and specular albedo), and self-shadows. The scene lights are modeled as a virtual light stage with pre-oriented area lights used in conjunction with differentiable Monte-Carlo ray tracing to optimize the scene and face parameters. With correctly disentangled self-shadows and specular reflection parameters, we not only obtain robust facial geometry reconstruction, but also gain explicit control over these parameters, with several practical applications. For example, we can change facial expressions with accurate resultant self-shadows, or relight the scene and obtain accurate specular reflection, among several other parameter combinations.

Data Dependent Kernel Approximation using Pseudo Random Fourier Features

Nov 27, 2017

Abstract: Kernel methods are a powerful and flexible approach to solving many problems in machine learning. Due to the pairwise evaluations in kernel methods, the complexity of kernel computation grows as the data size increases; thus the applicability of kernel methods is limited for large-scale datasets. Random Fourier Features (RFF) have been proposed to scale kernel methods to large datasets by approximating the kernel function with randomized Fourier features. While this method has proved very popular, it still has shortcomings that limit its effective use. As RFF samples the randomized features from a distribution independent of the training data, it requires a sufficiently large number of feature expansions to perform similarly to kernelized classifiers, and this number is proportional to the number of samples in the dataset. Thus, reducing the number of feature dimensions is necessary to scale effectively to large datasets. In this paper, we propose a data-dependent kernel approximation method, coined Pseudo Random Fourier Features (PRFF), that reduces the number of feature dimensions and also improves prediction performance. The proposed approach is evaluated on classification and regression problems and compared with RFF, orthogonal random features and the Nyström approach.
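
To make the RFF baseline mentioned in this abstract concrete, here is a minimal sketch of standard, data-independent Random Fourier Features for a Gaussian (RBF) kernel. It is not the paper's PRFF method; the function names (`rbf_kernel`, `rff_features`), the kernel form `exp(-gamma * ||x - y||^2)`, and all parameter values are assumptions chosen for illustration.

```python
import numpy as np


def rbf_kernel(X, Y, gamma):
    """Exact RBF kernel matrix: k(x, y) = exp(-gamma * ||x - y||^2)."""
    sq_dists = (
        np.sum(X**2, axis=1)[:, None]
        + np.sum(Y**2, axis=1)[None, :]
        - 2.0 * X @ Y.T
    )
    # Clip tiny negative values caused by floating-point round-off.
    return np.exp(-gamma * np.maximum(sq_dists, 0.0))


def rff_features(X, n_features, gamma, rng):
    """Map X to random Fourier features z(x) so that z(x) . z(y) ~ k(x, y).

    Frequencies are drawn from N(0, 2 * gamma * I), the spectral density of
    the RBF kernel above, independently of the data (the data-independent
    sampling that the abstract identifies as a limitation).
    """
    d = X.shape[1]
    W = rng.normal(scale=np.sqrt(2.0 * gamma), size=(d, n_features))
    b = rng.uniform(0.0, 2.0 * np.pi, size=n_features)
    return np.sqrt(2.0 / n_features) * np.cos(X @ W + b)


# Illustrative data and parameters (hypothetical values).
rng = np.random.default_rng(0)
X = rng.normal(size=(50, 5))
gamma = 0.1

Z = rff_features(X, n_features=4000, gamma=gamma, rng=rng)
K_exact = rbf_kernel(X, X, gamma)
K_approx = Z @ Z.T  # Linear kernel in feature space approximates the RBF kernel.
max_err = np.max(np.abs(K_exact - K_approx))
print(f"max abs error with 4000 features: {max_err:.3f}")
```

The approximation error shrinks roughly as the inverse square root of the number of features, which is why a large feature count is needed and why reducing it, as the abstract proposes, is attractive.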

A comparison of dense region detectors for image search and fine-grained classification

Apr 17, 2015

Abstract: We consider a pipeline for image classification or search based on coding approaches like Bag of Words or Fisher vectors. In this context, the most common approach is to extract the image patches regularly in a dense manner on several scales. This paper proposes and evaluates alternative choices to extract patches densely. Beyond simple strategies derived from regular interest region detectors, we propose approaches based on super-pixels, edges, and a bank of Zernike filters used as detectors. The different approaches are evaluated on recent image retrieval and fine-grained classification benchmarks. Our results show that the regular dense detector is outperformed by other methods in most situations, leading us to improve the state of the art in comparable setups on standard retrieval and fine-grained benchmarks. As a byproduct of our study, we show that existing methods for blob and super-pixel extraction achieve high accuracy if the patches are extracted along the edges and not around the detected regions.
