Penghao Liang

Automating the Training and Deployment of Models in MLOps by Integrating Systems with Machine Learning

May 16, 2024

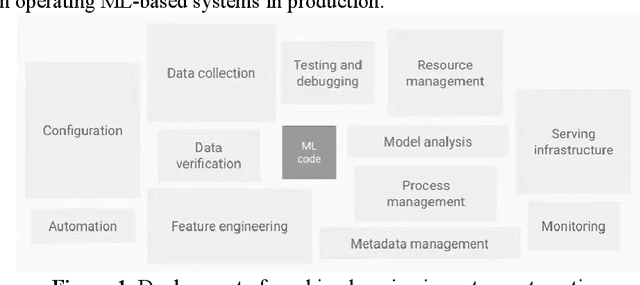

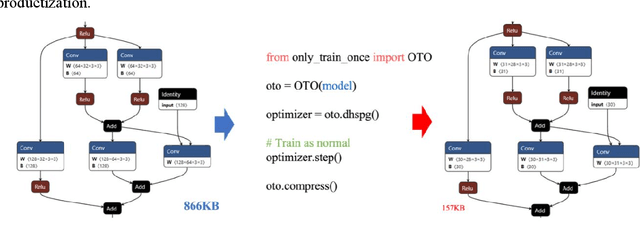

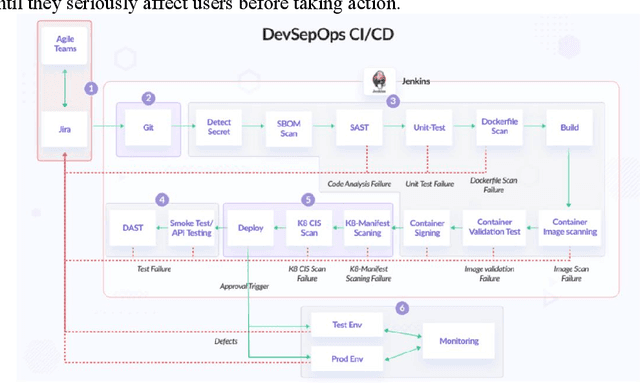

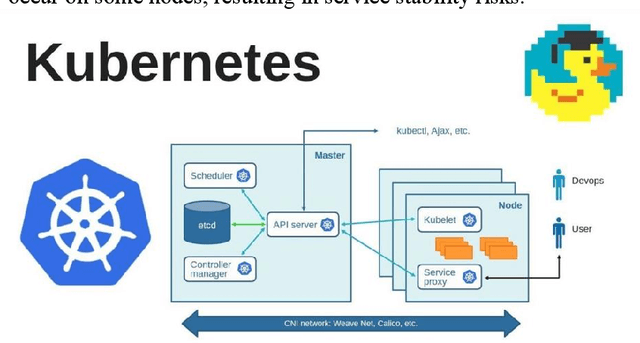

Abstract: This article introduces the importance of machine learning in real-world applications and explores the rise of MLOps (Machine Learning Operations) and its role in solving challenges such as model deployment and performance monitoring. By reviewing the evolution of MLOps and its relationship to traditional software development methods, the paper proposes ways to integrate systems with machine learning to solve the problems faced by existing MLOps practice and improve productivity. The paper focuses on the importance of automated model training and on methods for ensuring the transparency and repeatability of the training process through version control systems. In addition, it discusses the challenges of integrating machine learning components into traditional CI/CD pipelines and proposes solutions such as versioned environments and containerization. Finally, the paper emphasizes the importance of continuous monitoring and feedback loops after model deployment to maintain model performance and reliability. Using case studies and best practices from Netflix, the article presents key strategies and lessons learned for the successful implementation of MLOps, providing a valuable reference for other organizations building and optimizing their own MLOps practices.

Automated Lane Change Behavior Prediction and Environmental Perception Based on SLAM Technology

Apr 06, 2024

Abstract: Besides the environmental perception sensors in an autonomous driving system, such as cameras and radars, which perceive the vehicle's external environment, there is another perception component that works quietly in the background: the positioning module. This paper explores the application of SLAM (Simultaneous Localization and Mapping) technology to automatic lane change behavior prediction and environment perception for autonomous vehicles. It discusses the limitations of traditional positioning methods, introduces SLAM technology, and compares LiDAR SLAM with visual SLAM. Real-world examples from companies such as Tesla, Waymo, and Mobileye showcase the integration of AI-driven technologies, sensor fusion, and SLAM in autonomous driving systems. The paper then examines the specifics of SLAM algorithms, sensor technologies, and the importance of automatic lane changes for driving safety and efficiency. It highlights Tesla's recent update to its Autopilot system, which incorporates automatic lane change functionality using SLAM technology. The paper concludes by emphasizing the crucial role of SLAM in enabling accurate environment perception, positioning, and decision-making for autonomous vehicles, ultimately enhancing safety and the driving experience.

Maximizing User Experience with LLMOps-Driven Personalized Recommendation Systems

Apr 01, 2024

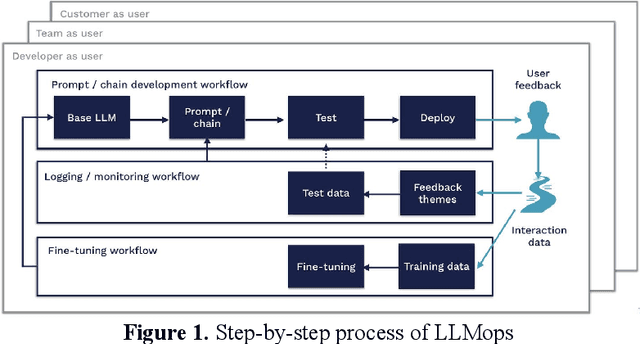

Abstract: The integration of LLMOps into personalized recommendation systems marks a significant advancement in managing LLM-driven applications. This innovation presents both opportunities and challenges for enterprises, requiring specialized teams to navigate the complexity of engineering technology while prioritizing data security and model interpretability. By leveraging LLMOps, enterprises can enhance the efficiency and reliability of large-scale machine learning models, driving personalized recommendations aligned with user preferences. Despite ethical considerations, LLMOps is poised for widespread adoption, promising more efficient and secure machine learning services that elevate user experience and shape the future of personalized recommendation systems.

Research on the Application of Deep Learning-based BERT Model in Sentiment Analysis

Mar 13, 2024

Abstract: This paper explores the application of deep learning techniques, with a focus on BERT models, in sentiment analysis. It begins by introducing the fundamental concept of sentiment analysis and how deep learning methods are used in this domain. It then describes the architecture and characteristics of BERT models, and explains their effectiveness and optimization strategies in sentiment analysis, supported by experimental validation. The experimental findings indicate that BERT models perform robustly in sentiment analysis tasks, with notable improvements after fine-tuning. The paper concludes by summarizing the potential applications of BERT models in sentiment analysis and suggesting directions for future research and practical implementation.

Emerging Synergies Between Large Language Models and Machine Learning in Ecommerce Recommendations

Mar 12, 2024

Abstract: With the boom of e-commerce and web applications, recommender systems have become an important part of our daily lives, providing personalized recommendations based on users' preferences. Although deep neural networks (DNNs) have made significant progress in improving recommendation systems by modeling the interaction between users and items and incorporating their textual information, these DNN-based approaches still have limitations: they have difficulty understanding users' interests and capturing textual information effectively, they cannot generalize to different seen and unseen recommendation scenarios, and they cannot reason about their predictions. At the same time, the emergence of large language models (LLMs), represented by ChatGPT and GPT-4, has revolutionized the fields of natural language processing (NLP) and artificial intelligence (AI) thanks to their superior capabilities in the basic tasks of language understanding and generation, and their impressive generalization and reasoning capabilities. As a result, recent research has sought to harness the power of LLMs to improve recommendation systems. Given the rapid development of this research direction, there is an urgent need for a systematic review of existing LLM-driven recommendation systems from which researchers and practitioners in related fields can gain insight. More specifically, we first introduce a representative approach to learning user and item representations using an LLM as a feature encoder. We then review the latest advances in LLM techniques for enhancing collaborative-filtering recommendation systems under three paradigms: pre-training, fine-tuning, and prompting. Finally, we provide a comprehensive discussion of future directions in this emerging field.

LoRA-SP: Streamlined Partial Parameter Adaptation for Resource-Efficient Fine-Tuning of Large Language Models

Feb 28, 2024

Abstract: To address the computational and memory demands of fine-tuning Large Language Models (LLMs), we propose LoRA-SP (Streamlined Partial Parameter Adaptation), a novel approach that applies randomized half-selective parameter freezing within the Low-Rank Adaptation (LoRA) framework. This method efficiently balances retention of pre-trained knowledge with adaptability for task-specific optimization. Through a randomized mechanism, LoRA-SP determines which parameters to update and which to freeze, significantly reducing computational and memory requirements without compromising model performance. We evaluated LoRA-SP across several benchmark NLP tasks, demonstrating its ability to achieve competitive performance with substantially lower resource consumption than traditional full-parameter fine-tuning and other parameter-efficient techniques. LoRA-SP's innovative approach not only facilitates the deployment of advanced NLP models in resource-limited settings but also opens new research avenues into effective and efficient model adaptation strategies.
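The randomized half-selective freezing described in the LoRA-SP abstract can be illustrated with a minimal, standard-library Python sketch. This is not the authors' implementation. The abstract does not say at what granularity parameters are frozen, so freezing individual entries of a LoRA factor matrix is an assumption here, and the helper names `make_half_mask` and `masked_sgd_step`, the matrix sizes, and the constant placeholder gradient are all hypothetical.

```python
import random


def make_half_mask(rows, cols, rng):
    """Build a rows x cols mask of 0/1 values.

    1 marks a trainable entry, 0 a frozen one. Half of the entries
    (rounded down) are trainable. Element-wise freezing is an
    assumption; the abstract only says "half-selective".
    """
    n = rows * cols
    flat = [1] * (n // 2) + [0] * (n - n // 2)
    rng.shuffle(flat)
    return [flat[i * cols:(i + 1) * cols] for i in range(rows)]


def masked_sgd_step(param, grad, mask, lr):
    """Plain SGD update applied only where mask == 1.

    Frozen entries receive a zero update and keep their value.
    """
    return [
        [p - lr * g * m for p, g, m in zip(p_row, g_row, m_row)]
        for p_row, g_row, m_row in zip(param, grad, mask)
    ]


rng = random.Random(0)
rank, d_in = 2, 4  # hypothetical sizes for one LoRA factor matrix A

A = [[rng.gauss(0.0, 0.01) for _ in range(d_in)] for _ in range(rank)]
mask_A = make_half_mask(rank, d_in, rng)
grad_A = [[1.0] * d_in for _ in range(rank)]  # placeholder gradient

A_new = masked_sgd_step(A, grad_A, mask_A, lr=0.1)

trainable = sum(sum(row) for row in mask_A)
changed = sum(
    a != b for row_a, row_b in zip(A, A_new) for a, b in zip(row_a, row_b)
)
```

In this sketch only the masked-in half of `A` moves during the update. A real implementation would also skip storing gradients and optimizer state for the frozen entries, which is where the memory savings claimed in the abstract would come from.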
