Paul Johnson

MTrainS: Improving DLRM training efficiency using heterogeneous memories

Apr 19, 2023

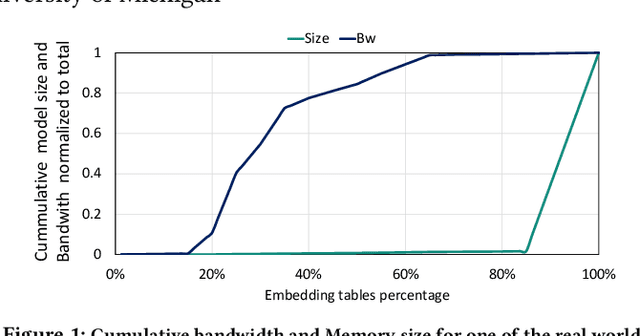

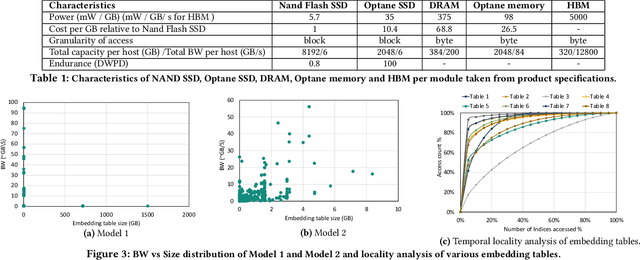

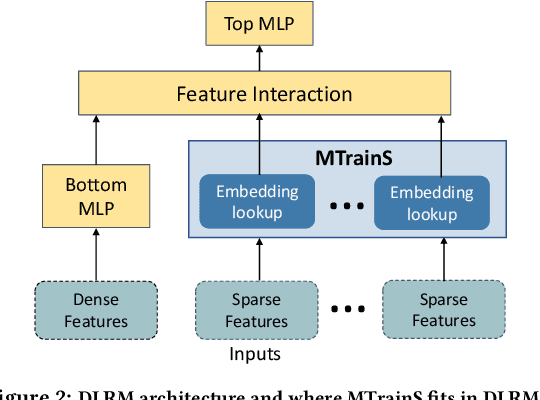

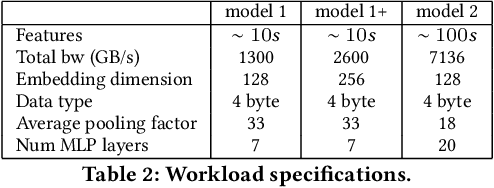

Abstract: Recommendation models are very large, requiring terabytes (TB) of memory during training. In pursuit of better quality, model size and complexity grow over time, which requires additional training data to avoid overfitting. This model growth demands a large number of resources in data centers. Hence, training efficiency is becoming considerably more important to keep data center power demand manageable. In Deep Learning Recommendation Models (DLRM), sparse features capturing categorical inputs through embedding tables are the major contributors to model size and require high memory bandwidth. In this paper, we study the bandwidth requirement and locality of embedding tables in real-world deployed models. We observe that the bandwidth requirement is not uniform across different tables and that embedding tables show high temporal locality. We then design MTrainS, which hierarchically leverages heterogeneous memory, including byte- and block-addressable Storage Class Memory, for DLRM. MTrainS allows for higher memory capacity per node and increases training efficiency by lowering the need to scale out to multiple hosts in memory-capacity-bound use cases. By optimizing the platform memory hierarchy, we reduce the number of nodes for training by 4-8X, saving power and cost of training while meeting our target training performance.
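The temporal-locality observation in this abstract can be illustrated with a small simulation. The sketch below is not the MTrainS implementation: it assumes a plain LRU policy and a made-up access trace, and the function name `lru_hit_rate` and its parameters are hypothetical. It estimates what fraction of embedding-row lookups a small fast tier would serve if it cached the most recently used rows.

```python
from collections import OrderedDict


def lru_hit_rate(accesses, cache_rows):
    """Return the fraction of accesses served by an LRU cache of `cache_rows` rows.

    `accesses` is a sequence of embedding-row IDs in lookup order.
    This is an illustrative model only (assumption: LRU replacement).
    """
    cache = OrderedDict()  # keys are row IDs, ordered from least to most recent
    hits = 0
    for row in accesses:
        if row in cache:
            hits += 1
            cache.move_to_end(row)  # mark the row as most recently used
        else:
            cache[row] = True
            if len(cache) > cache_rows:
                cache.popitem(last=False)  # evict the least recently used row
    return hits / len(accesses) if accesses else 0.0


# Hypothetical toy trace: row 1 is "hot" and is looked up repeatedly.
trace = [1, 2, 1, 3, 1, 2, 4, 1]
small_tier = lru_hit_rate(trace, cache_rows=2)
large_tier = lru_hit_rate(trace, cache_rows=4)
```

Running the same function over a real lookup trace with several cache sizes gives a hit-rate curve; a curve that rises quickly at small sizes is one sign of the high temporal locality the abstract describes.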

Unsupervised classification of acoustic emissions from catalogs and fault time-to-failure prediction

Dec 12, 2019

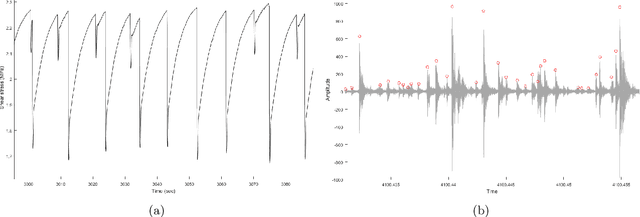

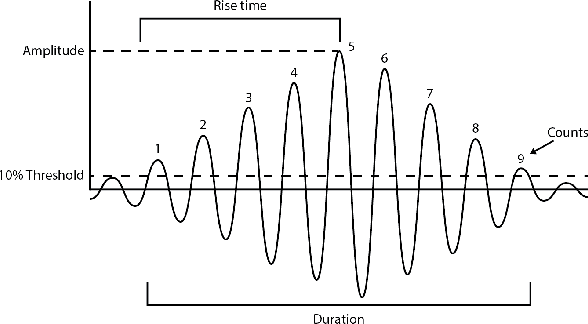

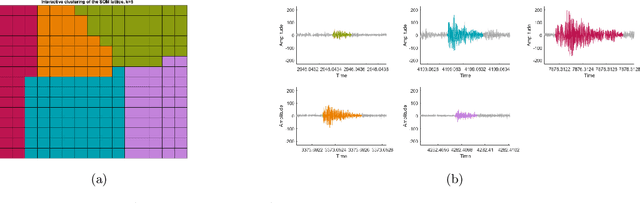

Abstract: When a rock is subjected to stress, it deforms by creep mechanisms that include the formation of, and slip on, small-scale internal cracks. Intragranular cracks and slip along grain contacts release energy as elastic waves called acoustic emissions (AEs). Early research into AEs envisioned that these signals could be used in the future to predict rock falls, mine collapse, or even earthquakes. Today, nondestructive testing, a field of engineering, involves monitoring the spatio-temporal evolution of AEs with the goal of predicting time-to-failure for manufacturing tools and infrastructure. The monitoring process involves clustering AEs by damage mechanism (e.g., matrix cracking, delamination) to track changes within the material. In this study, we aim to adapt aspects of this process to the task of generalized earthquake prediction. Our data are generated in a laboratory setting using a biaxial shearing device and a granular fault gouge that mimics the conditions around tectonic faults. In particular, we analyze the temporal evolution of AEs generated throughout several hundred laboratory earthquake cycles. We use a Conscience Self-Organizing Map (CSOM) to perform topologically ordered vector quantization based on waveform properties. The resulting map is used to interactively cluster AEs according to damage mechanism. Finally, we use an event-based LSTM network to test the predictive power of each cluster. By tracking cumulative waveform features over the seismic cycle, the network is able to forecast the time-to-failure of the fault.

Cascaded Region-based Densely Connected Network for Event Detection: A Seismic Application

Nov 29, 2017

Abstract: Automatic event detection from time series signals has wide applications, such as abnormal event detection in video surveillance and event detection in geophysical data. Traditional detection methods detect events primarily by using similarity and correlation in data. Those methods can be inefficient and yield low accuracy. In recent years, because of significantly increased computational power, machine learning techniques have revolutionized many science and engineering domains. In this study, we apply a deep-learning-based method to the detection of events from time series seismic signals. However, directly adapting ideas from 2D object detection to our problem faces two challenges. The first is that the duration of earthquake events varies significantly; the other is that the generated proposals are temporally correlated. To address these challenges, we propose a novel cascaded region-based convolutional neural network to capture earthquake events of different sizes, while incorporating contextual information to enrich the features of each individual proposal. To achieve better generalization performance, we use densely connected blocks as the backbone of our network. Because some positive events are not correctly annotated, we further formulate the detection problem as a learning-from-noise problem. To verify the performance of our detection methods, we apply them to seismic data generated by a bi-axial "earthquake machine" located at Rock Mechanics Laboratory, and we acquire labels with the help of experts. Through our numerical tests, we show that our novel detection techniques yield high accuracy. Therefore, our novel deep-learning-based detection methods can potentially be powerful tools for locating events from time series data in various applications.

A Naive Bayes machine learning approach to risk prediction using censored, time-to-event data

Apr 08, 2014

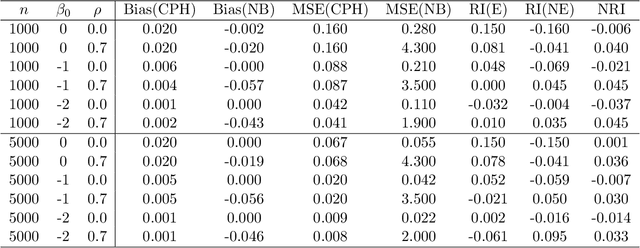

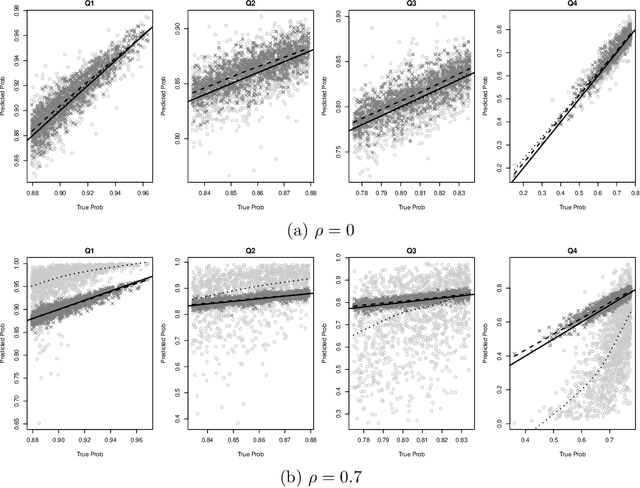

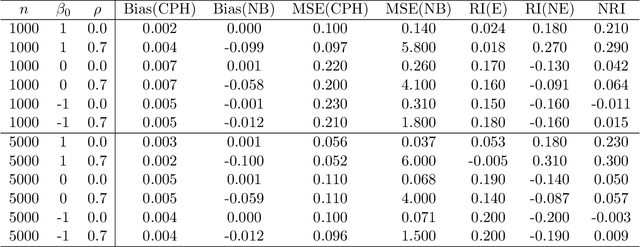

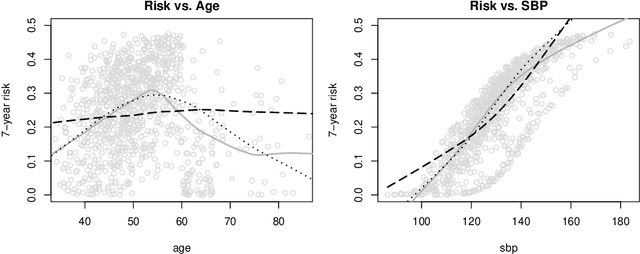

Abstract: Predicting an individual's risk of experiencing a future clinical outcome is a statistical task with important consequences for both practicing clinicians and public health experts. Modern observational databases such as electronic health records (EHRs) provide an alternative to the longitudinal cohort studies traditionally used to construct risk models, bringing with them both opportunities and challenges. Large sample sizes and detailed covariate histories enable the use of sophisticated machine learning techniques to uncover complex associations and interactions, but observational databases are often "messy," with high levels of missing data and incomplete patient follow-up. In this paper, we propose an adaptation of the well-known Naive Bayes (NB) machine learning approach for classification to time-to-event outcomes subject to censoring. We compare the predictive performance of our method to the Cox proportional hazards model, which is commonly used for risk prediction in healthcare populations, and illustrate its application to prediction of cardiovascular risk using an EHR dataset from a large Midwest integrated healthcare system.
