Paul Fisher

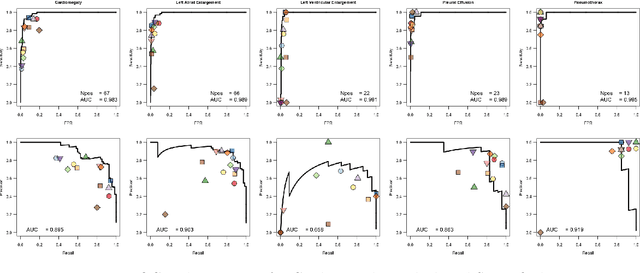

Who Goes First? Influences of Human-AI Workflow on Decision Making in Clinical Imaging

May 19, 2022

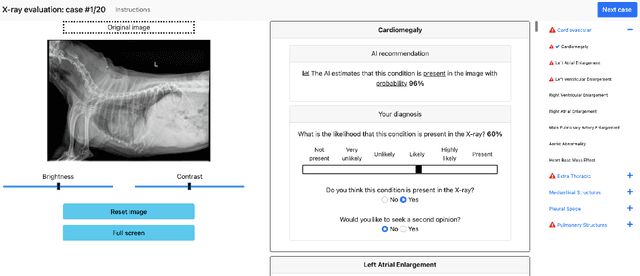

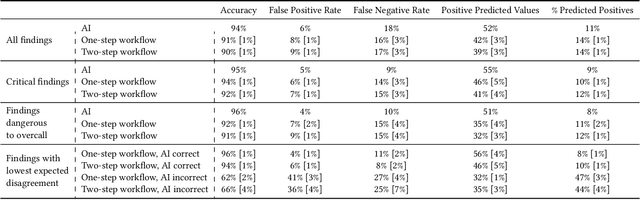

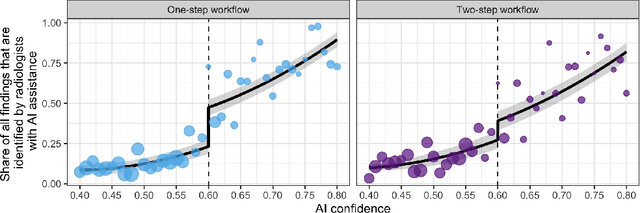

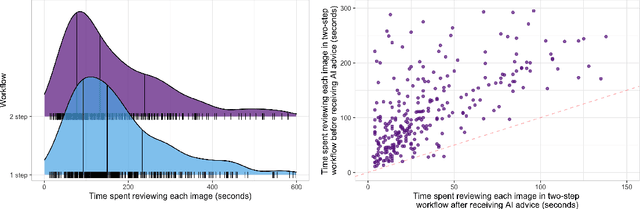

Abstract: Details of the designs and mechanisms in support of human-AI collaboration must be considered in the real-world fielding of AI technologies. A critical aspect of interaction design for AI-assisted human decision making is the set of policies governing the display and sequencing of AI inferences within larger decision-making workflows. We have a poor understanding of the influences of making AI inferences available before versus after human review of a diagnostic task at hand. We explore the effects of providing AI assistance at the start of a diagnostic session in radiology versus after the radiologist has made a provisional decision. We conducted a user study where 19 veterinary radiologists identified radiographic findings present in patients' X-ray images, with the aid of an AI tool. We employed two workflow configurations to analyze (i) anchoring effects, (ii) human-AI team diagnostic performance and agreement, (iii) time spent and confidence in decision making, and (iv) perceived usefulness of the AI. We found that participants who are asked to register provisional responses in advance of reviewing AI inferences are less likely to agree with the AI regardless of whether the advice is accurate and, in instances of disagreement with the AI, are less likely to seek the second opinion of a colleague. These participants also reported the AI advice to be less useful. Surprisingly, requiring provisional decisions on cases in advance of the display of AI inferences did not lengthen the time participants spent on the task. The study provides generalizable and actionable insights for the deployment of clinical AI tools in human-in-the-loop systems and introduces a methodology for studying alternative designs for human-AI collaboration. We make our experimental platform available as open source to facilitate future research on the influence of alternate designs on human-AI workflows.

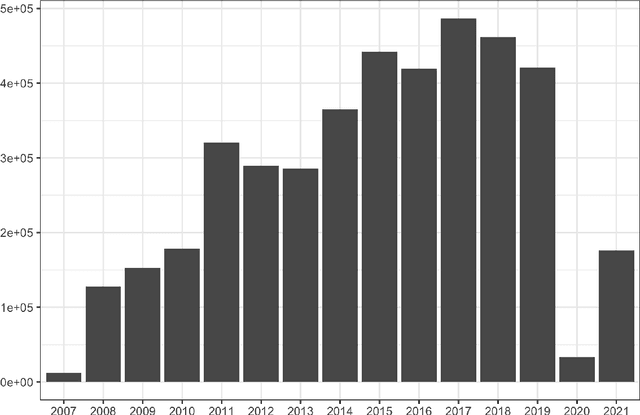

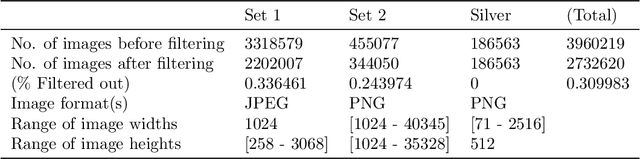

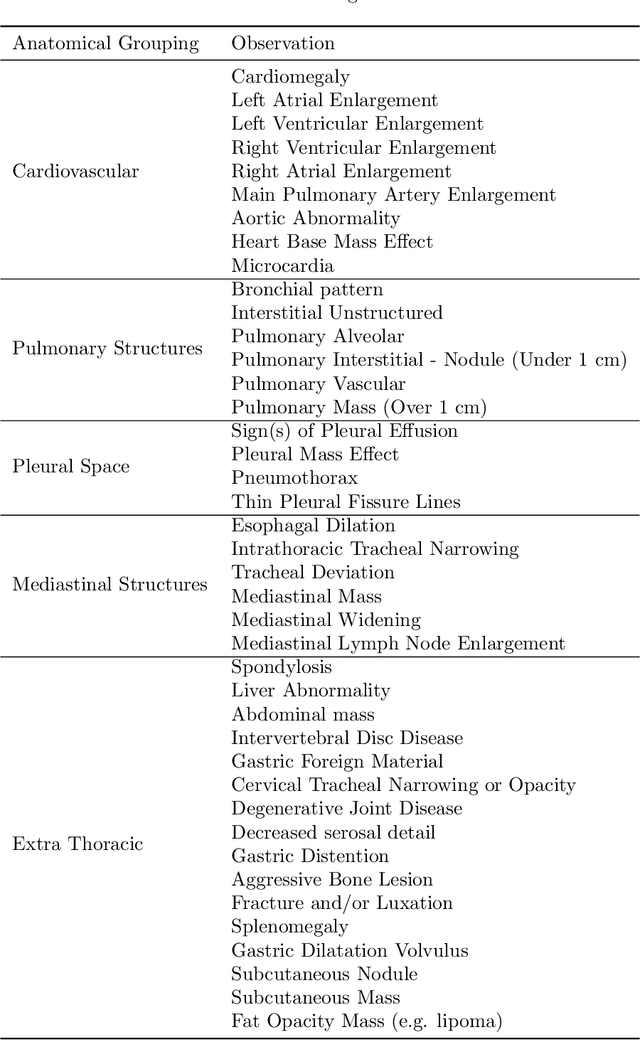

RapidRead: Global Deployment of State-of-the-art Radiology AI for a Large Veterinary Teleradiology Practice

Nov 09, 2021

Abstract: This work describes the development and real-world deployment of a deep learning-based AI system for evaluating canine and feline radiographs across a broad range of findings and abnormalities. We describe a new semi-supervised learning approach that combines NLP-derived labels with self-supervised training leveraging more than 2.5 million X-ray images. Finally, we describe the clinical deployment of the model, including system architecture, real-time performance evaluation, and data drift detection.

Image Compression with Iterated Function Systems, Finite Automata and Zerotrees: Grand Unification

Mar 15, 2000

Abstract: Fractal image compression, Culik's image compression and zerotree prediction coding of wavelet image decomposition coefficients succeed only because typical images being compressed possess a significant degree of self-similarity. Beyond this common concept, these methods turn out to be even more tightly related, to the point of algorithmic reducibility of one technique to another. The goal of the present paper is to demonstrate these relations. The paper offers a plain-term interpretation of Culik's image compression, in regular image processing terms, without resorting to finite state machines and similar lofty language. The interpretation is shown to be algorithmically related to an IFS fractal image compression method: an IFS can be exactly transformed into Culik's image code. Using this transformation, we will prove that in a self-similar (part of an) image any zero wavelet coefficient is the root of a zerotree, or its branch. The paper discusses the zerotree coding of (wavelet/projection) coefficients as a common predictor/corrector, applied vertically through different layers of a multiresolutional decomposition, rather than within the same view. This interpretation leads to an insight into the evolution of image compression techniques: from a causal single-layer prediction, to non-causal same-view predictions (wavelet decomposition among others) and to a causal cross-layer prediction (zerotrees, Culik's method).
