Andre Dourson

VET-DINO: Learning Anatomical Understanding Through Multi-View Distillation in Veterinary Imaging

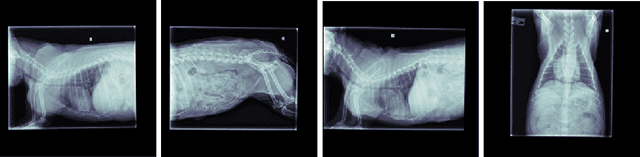

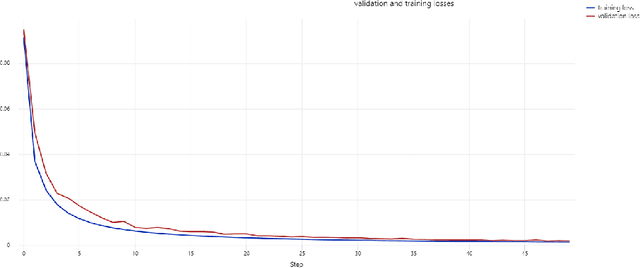

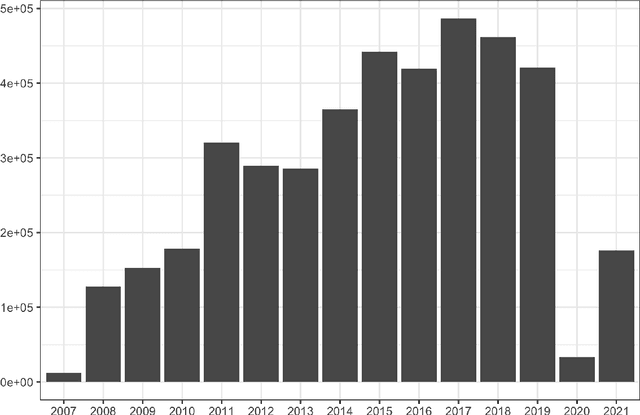

May 21, 2025

Abstract: Self-supervised learning has emerged as a powerful paradigm for training deep neural networks, particularly in medical imaging where labeled data is scarce. While current approaches typically rely on synthetic augmentations of single images, we propose VET-DINO, a framework that leverages a unique characteristic of medical imaging: the availability of multiple standardized views from the same study. Using a series of clinical veterinary radiographs from the same patient study, we enable models to learn view-invariant anatomical structures and develop an implied 3D understanding from 2D projections. We demonstrate our approach on a dataset of 5 million veterinary radiographs from 668,000 canine studies. Through extensive experimentation, including view synthesis and downstream task performance, we show that learning from real multi-view pairs leads to superior anatomical understanding compared to purely synthetic augmentations. VET-DINO achieves state-of-the-art performance on various veterinary imaging tasks. Our work establishes a new paradigm for self-supervised learning in medical imaging that leverages domain-specific properties rather than merely adapting natural image techniques.
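The abstract does not spell out the training objective, so the following is only a rough sketch of how a DINO-style self-distillation loss might be applied when the "views" are real radiographs from one study rather than synthetic crops. It assumes the standard DINO formulation (a centered, sharpened teacher distribution and a cross-entropy against the student on a different view). The function names, the temperatures, and the toy logits are illustrative assumptions, not values taken from the paper.

```python
import math

def log_softmax(logits, temperature):
    """Numerically stable log-softmax of logits / temperature."""
    scaled = [x / temperature for x in logits]
    m = max(scaled)
    log_total = m + math.log(sum(math.exp(x - m) for x in scaled))
    return [x - log_total for x in scaled]

def cross_view_loss(student_logits, teacher_logits, center,
                    student_temp=0.1, teacher_temp=0.04):
    """Mean cross-entropy H(teacher(view a), student(view b)) over all a != b.

    Each list entry holds the head output for one radiograph of the same study.
    The temperatures are assumed defaults, not values from the paper.
    """
    total, pairs = 0.0, 0
    for a, t in enumerate(teacher_logits):
        # The teacher output is centered, then sharpened with a low temperature.
        centered = [x - c for x, c in zip(t, center)]
        p_teacher = [math.exp(lp) for lp in log_softmax(centered, teacher_temp)]
        for b, s in enumerate(student_logits):
            if a == b:
                continue  # only pair different views of the same study
            log_p_student = log_softmax(s, student_temp)
            total -= sum(pt * lps for pt, lps in zip(p_teacher, log_p_student))
            pairs += 1
    return total / pairs

# Toy example: two views of one study (say, lateral and ventrodorsal), 3 prototypes.
teacher = [[2.0, 0.0, 0.0], [2.0, 0.0, 0.0]]
center = [0.0, 0.0, 0.0]
loss_agree = cross_view_loss([[2.0, 0.0, 0.0], [2.0, 0.0, 0.0]], teacher, center)
loss_disagree = cross_view_loss([[0.0, 2.0, 0.0], [0.0, 2.0, 0.0]], teacher, center)
```

In this toy setup the loss is small when the student assigns both views to the same prototype as the teacher and large when it does not, which is the pressure toward view-invariant features that the abstract describes.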

PulseNet: Deep Learning ECG-signal classification using random augmentation policy and continuous wavelet transform for canines

May 17, 2023

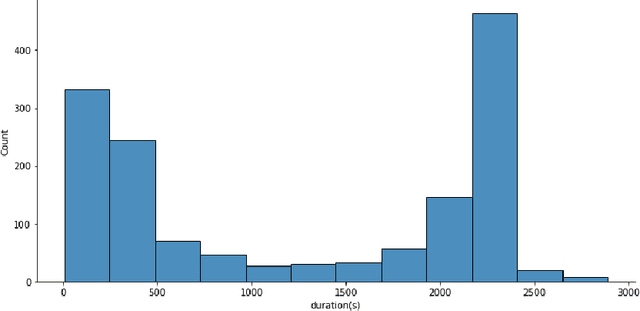

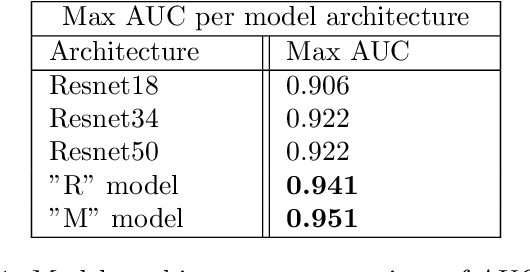

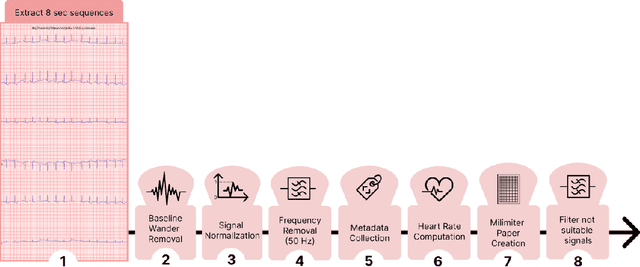

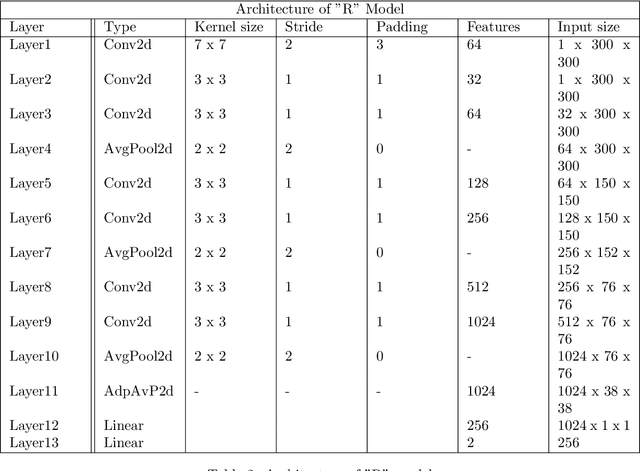

Abstract: Evaluating canine electrocardiograms (ECGs) requires skilled veterinarians, but the current availability of veterinary cardiologists for ECG interpretation and diagnostic support is limited. Developing tools for automated assessment of ECG sequences can improve veterinary care by providing clinicians with real-time results and decision support tools. We implement a deep convolutional neural network (CNN) approach for classifying canine electrocardiogram sequences as either normal or abnormal. ECG records are converted into 8-second Lead II sequences and classified as either normal (no evidence of cardiac abnormalities) or abnormal (presence of one or more cardiac abnormalities). For training, ECG sequences are randomly augmented using RandomAugmentECG, a new augmentation library implemented specifically for this project. Each chunk is then converted using a continuous wavelet transform into a 2D scalogram. The 2D scalograms are then classified as either normal or abnormal by a binary CNN classifier. Experimental results are validated against three boarded veterinary cardiologists, achieving an AUC-ROC score of 0.9506 on the test dataset and matching human-level performance. Additionally, we describe model deployment to Microsoft Azure using an MLOps approach. To our knowledge, this work is one of the first attempts to implement a deep learning model to automatically classify ECG sequences for canines. Implementing automated ECG classification will enhance veterinary care through improved diagnostic performance and increased clinic efficiency.
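As a minimal illustration of the "chunk to scalogram" step, the sketch below computes a continuous wavelet transform magnitude for one 8-second sequence using only the Python standard library. The Morlet wavelet, the 100 Hz sampling rate, the chosen frequencies, and the synthetic sine standing in for a Lead II trace are all assumptions for illustration; the abstract does not state which wavelet or sampling rate PulseNet uses.

```python
import cmath
import math

def morlet(t, w0=6.0):
    """Complex Morlet wavelet (small admissibility correction term omitted)."""
    return math.pi ** -0.25 * cmath.exp(1j * w0 * t) * math.exp(-0.5 * t * t)

def scale_for_frequency(freq_hz, w0=6.0):
    """Approximate Morlet scale (in seconds) whose response peaks near freq_hz."""
    return w0 / (2.0 * math.pi * freq_hz)

def cwt_scalogram(signal, scales, fs, w0=6.0):
    """Return |CWT| as rows (one per scale) by columns (one per sample)."""
    n = len(signal)
    dt = 1.0 / fs
    rows = []
    for s in scales:
        # Truncate the wavelet at +/- 4 scale widths, where its envelope is negligible.
        half = int(math.ceil(4.0 * s / dt))
        kernel = [morlet(k * dt / s, w0).conjugate() / math.sqrt(s)
                  for k in range(-half, half + 1)]
        row = []
        for i in range(n):
            acc = 0j
            for j, kv in enumerate(kernel):
                idx = i + j - half
                if 0 <= idx < n:  # samples outside the chunk are treated as zero
                    acc += signal[idx] * kv
            row.append(abs(acc) * dt)
        rows.append(row)
    return rows

fs = 100.0                      # assumed sampling rate in Hz
n_samples = int(fs * 8.0)       # one 8-second chunk, as in the abstract
signal = [math.sin(2.0 * math.pi * 5.0 * i / fs) for i in range(n_samples)]
freqs = [2.0, 5.0, 20.0]        # illustrative analysis frequencies in Hz
scalogram = cwt_scalogram(signal, [scale_for_frequency(f) for f in freqs], fs)
```

The resulting 2D array (scales by time) is the kind of image-like input a CNN classifier can consume. In practice a vectorized library routine would replace the nested loops, which are written out here only to make the transform explicit.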

StudyFormer : Attention-Based and Dynamic Multi View Classifier for X-ray images

Feb 23, 2023

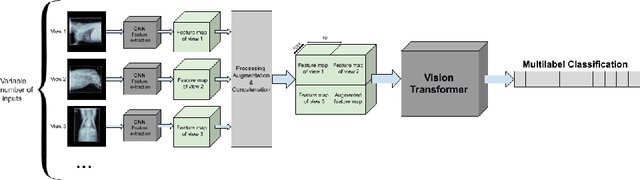

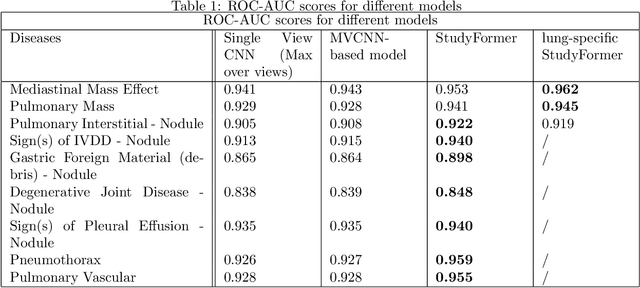

Abstract: Chest X-ray images are commonly used in medical diagnosis, and AI models have been developed to assist with the interpretation of these images. However, many of these models rely on information from a single view of the X-ray, while multiple views may be available. In this work, we propose a novel approach for combining information from multiple views to improve the performance of X-ray image classification. Our approach is based on the use of a convolutional neural network to extract feature maps from each view, followed by an attention mechanism implemented using a Vision Transformer. The resulting model is able to perform multi-label classification on 41 labels and outperforms both single-view models and traditional multi-view classification architectures. We demonstrate the effectiveness of our approach through experiments on a dataset of 363,000 X-ray images.
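The idea of attending across a variable number of views can be sketched with a single self-attention step over per-view feature vectors, followed by pooling and one sigmoid per label. This is a heavily simplified stand-in, not the StudyFormer architecture: it assumes each view has already been reduced to one feature vector, uses identity query/key/value projections instead of learned ones, and all function names and numbers are hypothetical.

```python
import math

def softmax(xs):
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(tokens):
    """Single-head scaled dot-product self-attention with identity projections."""
    d = len(tokens[0])
    out = []
    for q in tokens:
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in tokens]
        weights = softmax(scores)
        out.append([sum(w * v[i] for w, v in zip(weights, tokens)) for i in range(d)])
    return out

def fuse_views(view_features):
    """Attend across however many views exist, then mean-pool to one study vector."""
    attended = self_attention(view_features)
    n = len(attended)
    return [sum(tok[i] for tok in attended) / n for i in range(len(attended[0]))]

def multilabel_probs(study_vec, weights, biases):
    """Independent sigmoid per label, so several findings can be positive at once."""
    probs = []
    for row, b in zip(weights, biases):
        z = sum(w * x for w, x in zip(row, study_vec)) + b
        probs.append(1.0 / (1.0 + math.exp(-z)))
    return probs

# Toy study with two views; feature vectors and classifier weights are made up.
frontal = [1.0, 0.0, 2.0]
lateral = [0.5, 1.5, -1.0]
study_vec = fuse_views([frontal, lateral])
probs = multilabel_probs(study_vec, [[0.2, -0.1, 0.4], [-0.3, 0.5, 0.1]], [0.0, 0.1])
```

Because no positional information is added, the fused vector does not depend on the order of the views, and the same code handles studies with one, two, or more images.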

MONAI: An open-source framework for deep learning in healthcare

Nov 04, 2022

Abstract: Artificial Intelligence (AI) is having a tremendous impact across most areas of science. Applications of AI in healthcare have the potential to improve our ability to detect, diagnose, prognose, and intervene on human disease. For AI models to be used clinically, they need to be made safe, reproducible and robust, and the underlying software framework must be aware of the particularities (e.g. geometry, physiology, physics) of medical data being processed. This work introduces MONAI, a freely available, community-supported, and consortium-led PyTorch-based framework for deep learning in healthcare. MONAI extends PyTorch to support medical data, with a particular focus on imaging, and provides purpose-specific AI model architectures, transformations and utilities that streamline the development and deployment of medical AI models. MONAI follows best practices for software development, providing an easy-to-use, robust, well-documented, and well-tested software framework. MONAI preserves the simple, additive, and compositional approach of its underlying PyTorch libraries. MONAI is being used by and receiving contributions from research, clinical and industrial teams from around the world, who are pursuing applications spanning nearly every aspect of healthcare.

RapidRead: Global Deployment of State-of-the-art Radiology AI for a Large Veterinary Teleradiology Practice

Nov 09, 2021

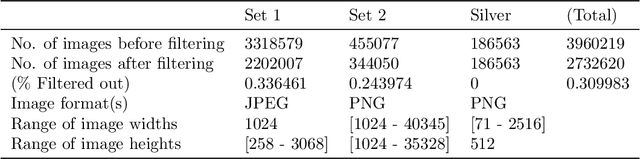

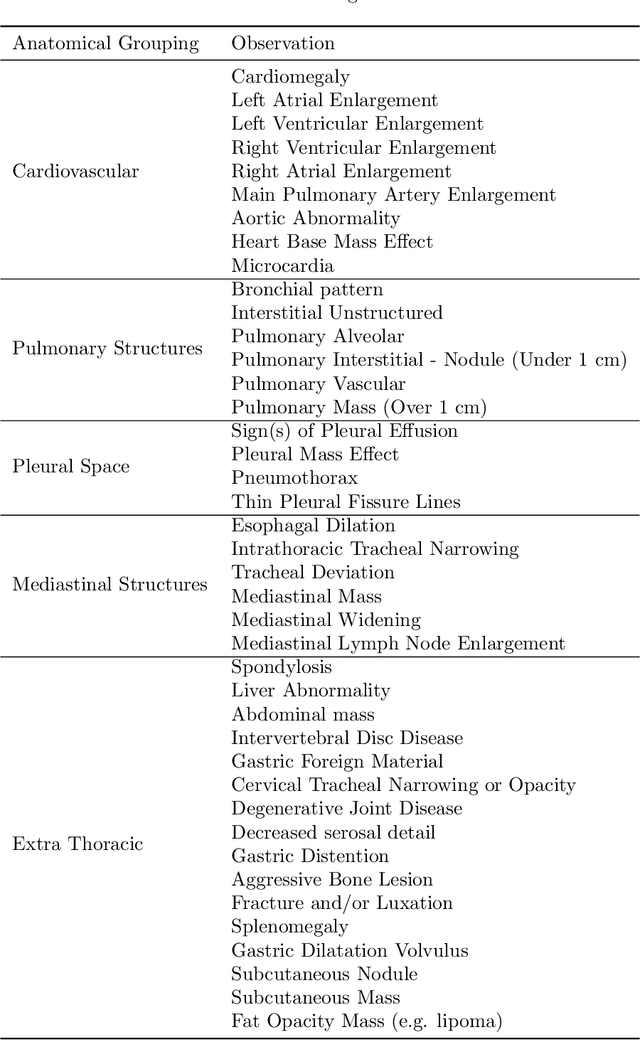

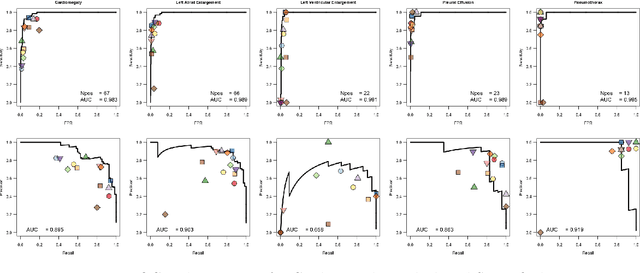

Abstract: This work describes the development and real-world deployment of a deep learning-based AI system for evaluating canine and feline radiographs across a broad range of findings and abnormalities. We describe a new semi-supervised learning approach that combines NLP-derived labels with self-supervised training leveraging more than 2.5 million X-ray images. Finally, we describe the clinical deployment of the model, including system architecture, real-time performance evaluation and data drift detection.
