Paul D. Yoo

Empirical and Experimental Perspectives on Big Data in Recommendation Systems: A Comprehensive Survey

Feb 01, 2024

Abstract: This survey paper provides a comprehensive analysis of big data algorithms in recommendation systems, addressing the lack of depth and precision in existing literature. It proposes a two-pronged approach: a thorough analysis of current algorithms and a novel, hierarchical taxonomy for precise categorization. The taxonomy is based on a tri-level hierarchy, starting with the methodology category and narrowing down to specific techniques. Such a framework allows for a structured and comprehensive classification of algorithms, assisting researchers in understanding the interrelationships among diverse algorithms and techniques. Covering a wide range of algorithms, this taxonomy first categorizes algorithms into four main analysis types: User and Item Similarity-Based Methods, Hybrid and Combined Approaches, Deep Learning and Algorithmic Methods, and Mathematical Modeling Methods, with further subdivisions into sub-categories and techniques. The paper incorporates both empirical and experimental evaluations to differentiate between the techniques. The empirical evaluation ranks the techniques based on four criteria. The experimental assessments rank the algorithms that belong to the same category, sub-category, technique, and sub-technique. Also, the paper illuminates the future prospects of big data techniques in recommendation systems, underscoring potential advancements and opportunities for further research in this field.

Text Classification: A Review, Empirical, and Experimental Evaluation

Jan 11, 2024

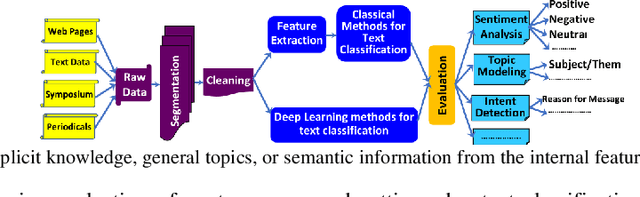

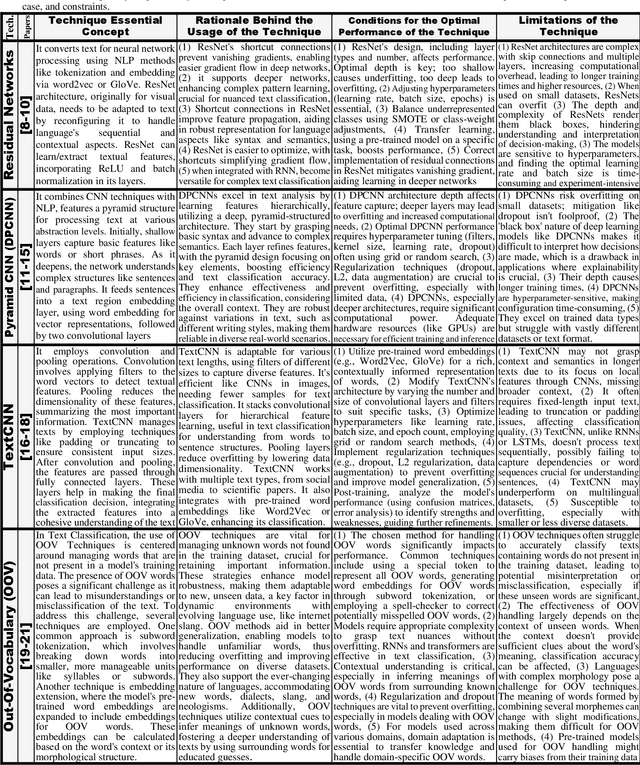

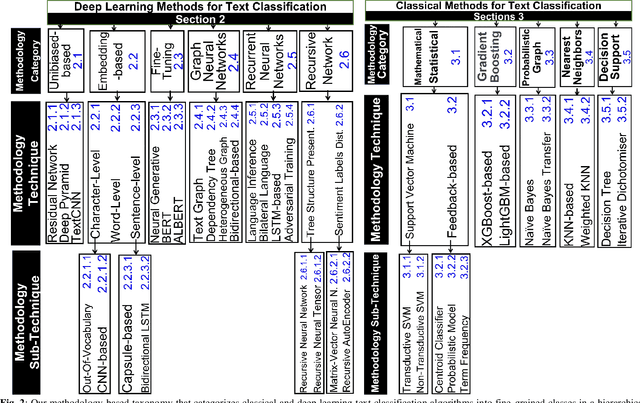

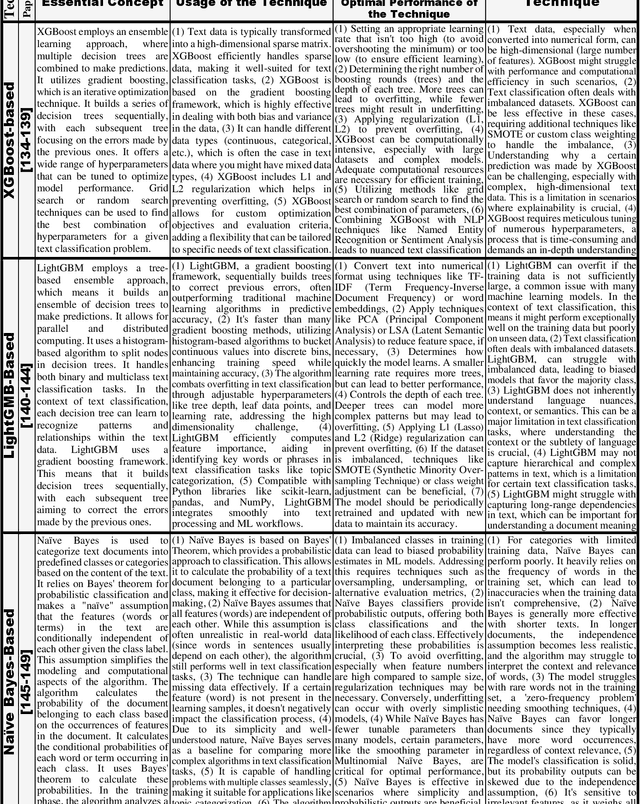

Abstract: The explosive and widespread growth of data necessitates the use of text classification to extract crucial information from vast amounts of data. Consequently, there has been a surge of research in both classical and deep learning text classification methods. Despite the numerous methods proposed in the literature, there is still a pressing need for a comprehensive and up-to-date survey. Existing survey papers categorize algorithms for text classification into broad classes, which can lead to the misclassification of unrelated algorithms and incorrect assessments of their qualities and behaviors using the same metrics. To address these limitations, our paper introduces a novel methodological taxonomy that classifies algorithms hierarchically into fine-grained classes and specific techniques. The taxonomy includes methodology categories, methodology techniques, and methodology sub-techniques. Our study is the first survey to utilize this methodological taxonomy for classifying algorithms for text classification. Furthermore, our study also conducts empirical evaluation and experimental comparisons and rankings of different algorithms that employ the same specific sub-technique, different sub-techniques within the same technique, different techniques within the same category, and categories.

Neural Bounding

Oct 10, 2023

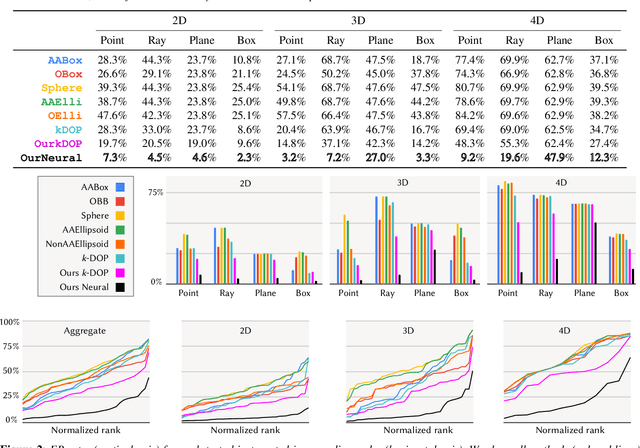

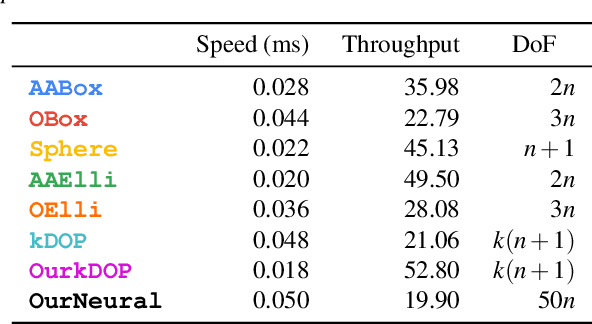

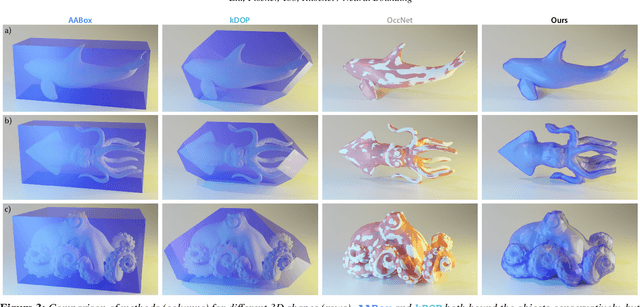

Abstract: Bounding volumes are an established concept in computer graphics and vision tasks but have seen little change since their early inception. In this work, we study the use of neural networks as bounding volumes. Our key observation is that bounding, which so far has primarily been considered a problem of computational geometry, can be redefined as a problem of learning to classify space into free or occupied. This learning-based approach is particularly advantageous in high-dimensional spaces, such as animated scenes with complex queries, where neural networks are known to excel. However, unlocking neural bounding requires a twist: allowing, but also limiting, false positives, while ensuring that the number of false negatives is strictly zero. We enable such tight and conservative results using a dynamically-weighted asymmetric loss function. Our results show that our neural bounding produces up to an order of magnitude fewer false positives than traditional methods.
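
The idea of an asymmetric, dynamically weighted loss can be illustrated with a minimal Python sketch. This is not the paper's loss function: the weighted binary cross-entropy form, the linear weight schedule, and the names `asymmetric_bce` and `fn_weight_schedule` are all assumptions made for illustration. Label 1 is assumed to mean "occupied", so a false negative is an occupied point predicted as free.

```python
import numpy as np


def asymmetric_bce(pred, target, fn_weight, fp_weight=1.0, eps=1e-7):
    """Binary cross-entropy with separate weights for the two error types.

    pred   : predicted probability that a point is occupied (assumed convention)
    target : 1.0 for occupied, 0.0 for free
    """
    pred = np.clip(np.asarray(pred, dtype=float), eps, 1.0 - eps)
    target = np.asarray(target, dtype=float)
    # Penalises occupied points predicted as free (false negatives).
    fn_term = -fn_weight * target * np.log(pred)
    # Penalises free points predicted as occupied (false positives).
    fp_term = -fp_weight * (1.0 - target) * np.log(1.0 - pred)
    return float(np.mean(fn_term + fp_term))


def fn_weight_schedule(step, total_steps, start=1.0, end=100.0):
    """Hypothetical linear schedule that raises the false-negative weight."""
    t = min(max(step / total_steps, 0.0), 1.0)
    return start + t * (end - start)


# One missed occupied point costs far more than one spurious occupied point.
loss_fn = asymmetric_bce([0.1], [1.0], fn_weight=10.0)
loss_fp = asymmetric_bce([0.9], [0.0], fn_weight=10.0)
```

Raising the false-negative weight over training pushes the classifier toward conservative predictions, while the false-positive term keeps the bound from growing arbitrarily loose. A loss of this kind only discourages false negatives; a strict zero count would still have to be verified separately.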

Deep Learning-Based Arrhythmia Detection Using RR-Interval Framed Electrocardiograms

Dec 01, 2020

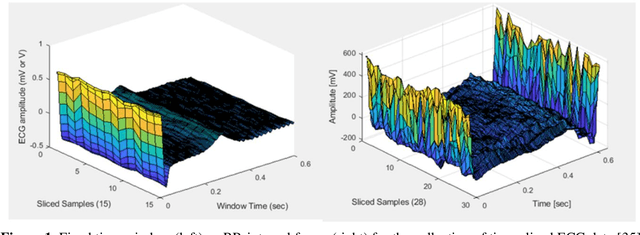

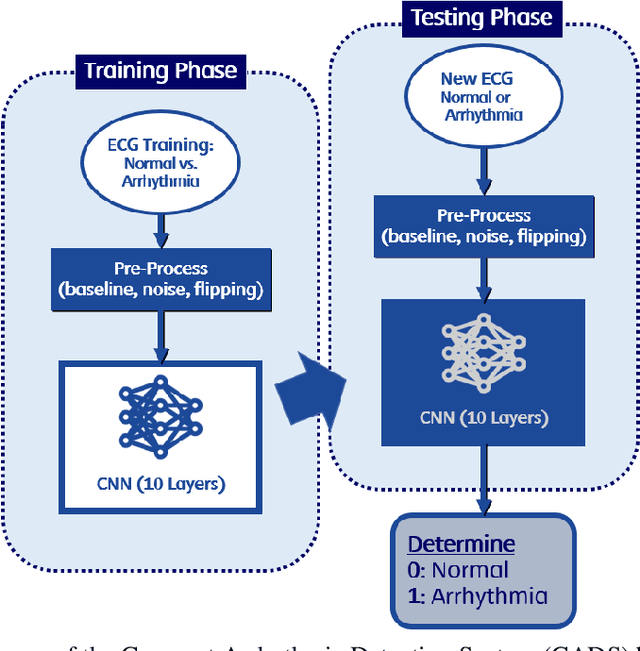

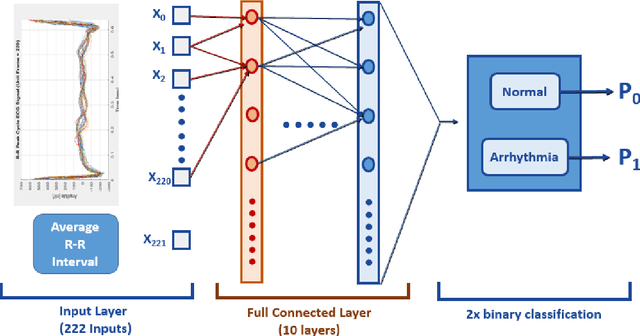

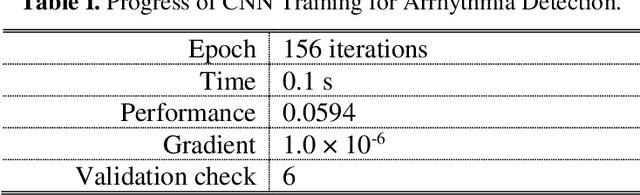

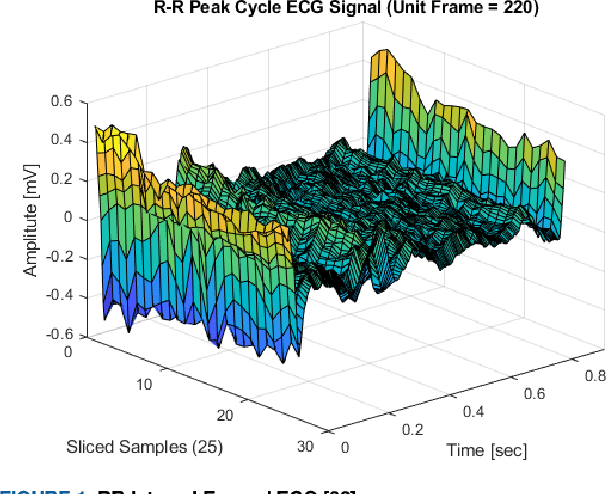

Abstract: Deep learning applied to electrocardiogram (ECG) data can be used to achieve personal authentication in biometric security applications, but it has not been widely used to diagnose cardiovascular disorders. We developed a deep learning model for the detection of arrhythmia in which time-sliced ECG data representing the distance between successive R-peaks are used as the input for a convolutional neural network (CNN). The main objective is to develop a compact deep learning-based detection system that uses a minimal dataset yet still delivers dependable arrhythmia detection accuracy. This compact system can be implemented in wearable devices or real-time monitoring equipment because no feature extraction step is required for complex ECG waveforms; only the R-peak data are needed. In two consecutive test runs, the Compact Arrhythmia Detection System (CADS) matched the performance of conventional systems for the detection of arrhythmia. All features of the CADS are fully implemented and publicly available in MATLAB.
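
One possible reading of RR-interval framing is sketched below in Python rather than the authors' MATLAB. It assumes the R-peak sample indices are already known, cuts the signal between successive R-peaks, and resamples each cut to a fixed length so the frames can be stacked as CNN input. The function name `rr_frames`, the default `frame_len`, and the linear resampling are illustrative assumptions, not details taken from the paper.

```python
import numpy as np


def rr_frames(ecg, r_peaks, frame_len=64):
    """Slice an ECG trace into one fixed-length frame per RR interval.

    ecg       : 1-D array of ECG samples
    r_peaks   : increasing sample indices of detected R-peaks (assumed given)
    frame_len : number of samples per output frame (hypothetical choice)
    """
    ecg = np.asarray(ecg, dtype=float)
    new_x = np.linspace(0.0, 1.0, num=frame_len)
    frames = []
    for start, end in zip(r_peaks[:-1], r_peaks[1:]):
        segment = ecg[start:end]
        if len(segment) < 2:
            continue  # too short to interpolate
        old_x = np.linspace(0.0, 1.0, num=len(segment))
        # Linear resampling so every RR interval yields the same frame size.
        frames.append(np.interp(new_x, old_x, segment))
    if not frames:
        return np.empty((0, frame_len))
    return np.stack(frames)


# Synthetic stand-in signal; real input would be a recorded ECG trace.
signal = np.sin(np.linspace(0.0, 20.0, 1000))
frames = rr_frames(signal, [100, 350, 620, 900])
```

Four R-peaks give three RR intervals, so `frames` has one row per interval and `frame_len` columns, regardless of how long each interval was in the original recording.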

An Enhanced Machine Learning-based Biometric Authentication System Using RR-Interval Framed Electrocardiograms

Aug 07, 2019

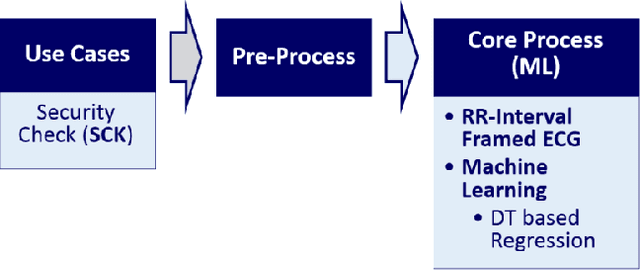

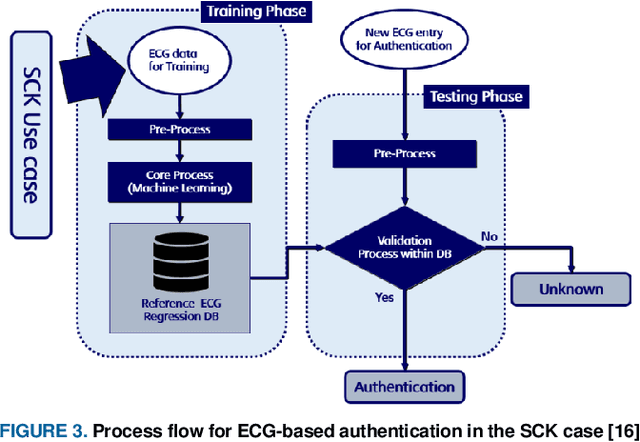

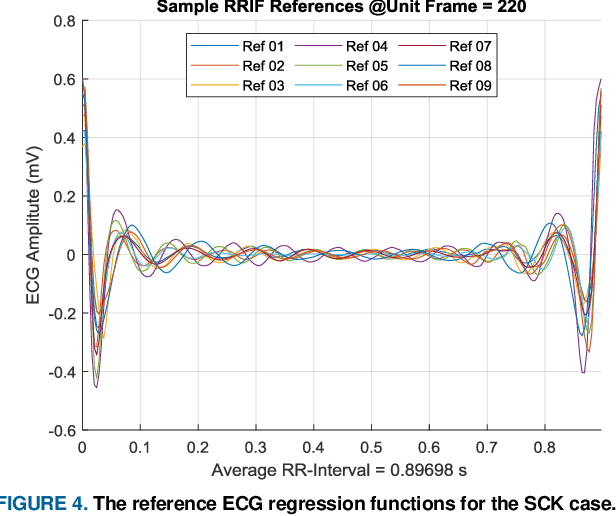

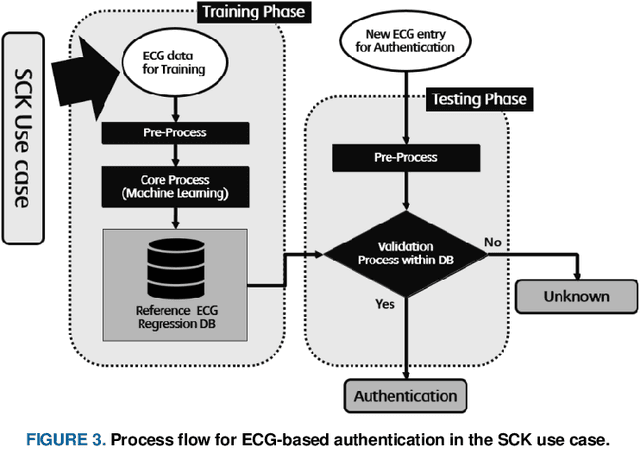

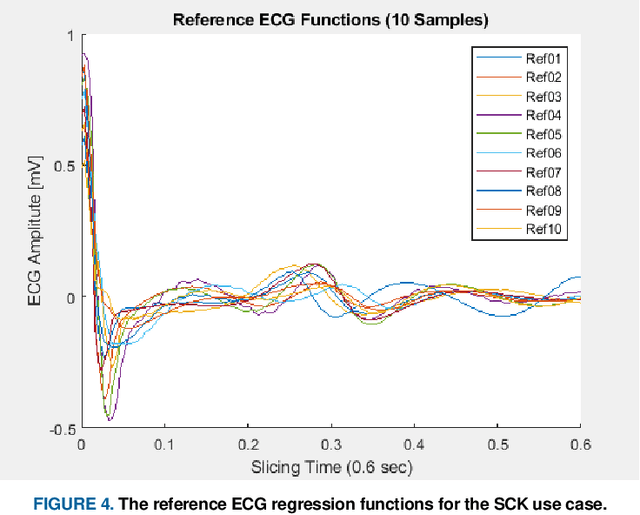

Abstract: This paper addresses biometric-data-enabled security systems based on machine learning for digital health. The disadvantages of traditional authentication systems include the risks of forgetfulness, loss, and theft. Biometric authentication is therefore rapidly replacing traditional authentication methods and is becoming an everyday part of life. The electrocardiogram (ECG) was recently introduced as a biometric authentication system suitable for security checks. The proposed authentication system helps investigators studying ECG-based biometric authentication techniques to reshape input data by slicing based on the RR-interval, and defines the Overall Performance (OP), which is the combined performance metric of multiple authentication measures. We evaluated the performance of the proposed system using a confusion matrix and achieved up to 95% accuracy through compact data analysis. We also used the Amang ECG (amgecg) toolbox in MATLAB to investigate the upper-range control limit (UCL) based on the mean square error, which directly affects three authentication performance metrics: the accuracy, the number of accepted samples, and the OP. Using this approach, we found that the OP can be optimized by using a UCL of 0.0028, which indicates 61 accepted samples out of 70 and ensures that the proposed authentication system achieves an accuracy of 95%.
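
The role of the UCL can be sketched as a simple threshold on the mean square error between each sample and a reference template. The Python below is an illustration under that assumption, not the amgecg toolbox; `mse_to_template`, `accept_by_ucl`, and `accuracy` are hypothetical helpers, the toy data are made up, and the paper's OP metric is not reproduced because its formula is not given here.

```python
import numpy as np


def mse_to_template(samples, template):
    """Mean square error of each sample (row) against a reference template."""
    samples = np.asarray(samples, dtype=float)
    template = np.asarray(template, dtype=float)
    return np.mean((samples - template) ** 2, axis=1)


def accept_by_ucl(samples, template, ucl):
    """Accept a sample when its MSE does not exceed the control limit."""
    return mse_to_template(samples, template) <= ucl


def accuracy(accepted, genuine):
    """Accuracy from the confusion matrix: (TP + TN) / all samples."""
    accepted = np.asarray(accepted, dtype=bool)
    genuine = np.asarray(genuine, dtype=bool)
    return float(np.mean(accepted == genuine))


# Toy data: MSE values are 0.0, 0.01 and 1.0 against an all-zero template.
template = np.zeros(4)
samples = np.array([[0.0] * 4, [0.1] * 4, [1.0] * 4])
accepted = accept_by_ucl(samples, template, ucl=0.0028)
acc = accuracy(accepted, genuine=[True, True, False])
```

A tighter UCL rejects more samples, which trades the number of accepted samples against accuracy. For scale, the reported 61 accepted samples out of 70 corresponds to an acceptance rate of about 87%.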

An Enhanced Electrocardiogram Biometric Authentication System Using Machine Learning

Jun 30, 2019

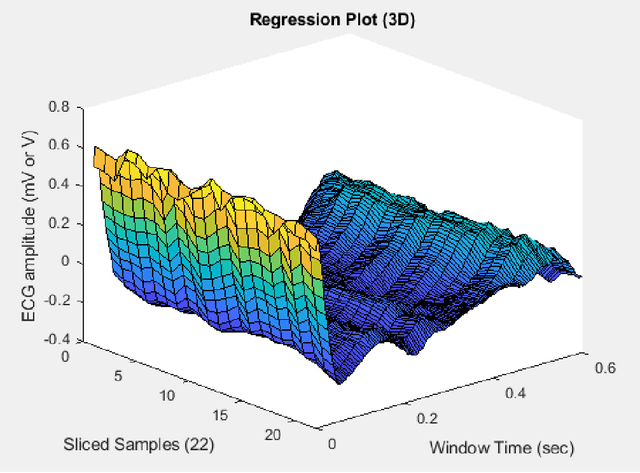

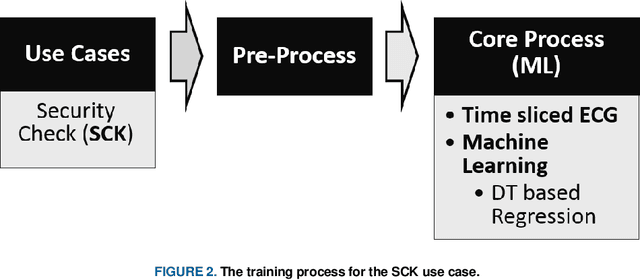

Abstract: Traditional authentication systems use alphanumeric or graphical passwords, or token-based techniques that require "something you know and something you have". The disadvantages of these systems include the risks of forgetfulness, loss, and theft. To address these shortcomings, biometric authentication is rapidly replacing traditional authentication methods and is becoming an everyday part of life. The electrocardiogram (ECG) is one of the most recent traits considered for biometric purposes, and three typical use cases have been described: security checks, hospitals, and wearable devices. Here we describe an ECG-based authentication system suitable for security checks and hospital environments. The proposed authentication system will help investigators studying ECG-based biometric authentication techniques to define dataset boundaries and to acquire high-quality training data. We evaluated the performance of the proposed system using a confusion matrix and also by applying the Amang ECG (amgecg) toolbox in MATLAB to investigate two parameters that directly affect the accuracy of authentication: the ECG slicing time (sliding window) and sampling time. Using this approach, we found that accuracy was optimized by using a sliding window of 0.4 s and a sampling time of 37 s.
