Parker Seegmiller

Medical Triage as Pairwise Ranking: A Benchmark for Urgency in Patient Portal Messages

Jan 19, 2026

Abstract:Medical triage is the task of allocating medical resources and prioritizing patients based on medical need. This paper introduces the first large-scale public dataset for studying medical triage in the context of asynchronous outpatient portal messages. Our novel task formulation views patient message triage as a pairwise inference problem, where we train LLMs to choose "which message is more medically urgent" in a head-to-head tournament-style re-sort of a physician's inbox. Our novel benchmark PMR-Bench contains 1,569 unique messages and 2,000+ high-quality test pairs for pairwise medical urgency assessment alongside a scalable training data generation pipeline. PMR-Bench includes samples that contain both unstructured patient-written messages and real electronic health record (EHR) data, emulating a real-world medical triage scenario. We develop a novel automated data annotation strategy to provide LLMs with in-domain guidance on this task. The resulting data is used to train two model classes, UrgentReward and UrgentSFT, which leverage Bradley-Terry and next-token prediction objectives, respectively, to perform pairwise urgency classification. We find that UrgentSFT achieves top performance on PMR-Bench, with UrgentReward showing distinct advantages in low-resource settings. For example, UrgentSFT-8B and UrgentReward-8B provide a 15- and 16-point boost, respectively, on inbox sorting metrics over off-the-shelf 8B models. Paper resources can be found at https://tinyurl.com/Patient-Message-Triage
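
As a rough illustration of the Bradley-Terry objective named in the abstract above, the sketch below scores a pair of messages and sorts an inbox by a scalar urgency score. It is not the authors' implementation: the function names (`bt_probability`, `bt_loss`, `sort_inbox`) and the example scores are hypothetical, and the scores stand in for whatever a trained reward model would output.

```python
import math


def bt_probability(score_a: float, score_b: float) -> float:
    """Bradley-Terry probability that message A is more urgent than message B,
    given scalar urgency scores (assumed to come from some reward model)."""
    return 1.0 / (1.0 + math.exp(-(score_a - score_b)))


def bt_loss(score_preferred: float, score_other: float) -> float:
    """Negative log-likelihood of the labeled preference for a single pair."""
    return -math.log(bt_probability(score_preferred, score_other))


def sort_inbox(scores: dict[str, float]) -> list[str]:
    """Order message ids from most to least urgent by their scalar score."""
    return sorted(scores, key=scores.get, reverse=True)


# Hypothetical scores for three messages, for illustration only.
example_scores = {"msg_a": 0.1, "msg_b": 2.0, "msg_c": -1.0}
ranked = sort_inbox(example_scores)
```

A scalar-score model of this kind needs only one forward pass per message to sort an inbox, whereas a model that answers pairwise questions directly has to be queried once per comparison in the tournament.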

How Much Would a Clinician Edit This Draft? Evaluating LLM Alignment for Patient Message Response Drafting

Jan 16, 2026

Abstract:Large language models (LLMs) show promise in drafting responses to patient portal messages, yet their integration into clinical workflows raises various concerns, including whether they would actually save clinicians time and effort in their portal workload. We investigate LLM alignment with individual clinicians through a comprehensive evaluation of the patient message response drafting task. We develop a novel taxonomy of thematic elements in clinician responses and propose a novel evaluation framework for assessing clinician editing load of LLM-drafted responses at both content and theme levels. We release an expert-annotated dataset and conduct large-scale evaluations of local and commercial LLMs using various adaptation techniques including thematic prompting, retrieval-augmented generation, supervised fine-tuning, and direct preference optimization. Our results reveal substantial epistemic uncertainty in aligning LLM drafts with clinician responses. While LLMs demonstrate capability in drafting certain thematic elements, they struggle with clinician-aligned generation in other themes, particularly question asking to elicit further information from patients. Theme-driven adaptation strategies yield improvements across most themes. Our findings underscore the necessity of adapting LLMs to individual clinician preferences to enable reliable and responsible use in patient-clinician communication workflows.

In-Context Learning for Preserving Patient Privacy: A Framework for Synthesizing Realistic Patient Portal Messages

Nov 10, 2024

Abstract:Since the COVID-19 pandemic, clinicians have seen a large and sustained influx in patient portal messages, significantly contributing to clinician burnout. To the best of our knowledge, there are no large-scale public patient portal messages corpora researchers can use to build tools to optimize clinician portal workflows. Informed by our ongoing work with a regional hospital, this study introduces an LLM-powered framework for configurable and realistic patient portal message generation. Our approach leverages few-shot grounded text generation, requiring only a small number of de-identified patient portal messages to help LLMs better match the true style and tone of real data. Clinical experts in our team deem this framework as HIPAA-friendly, unlike existing privacy-preserving approaches to synthetic text generation which cannot guarantee all sensitive attributes will be protected. Through extensive quantitative and human evaluation, we show that our framework produces data of higher quality than comparable generation methods as well as all related datasets. We believe this work provides a path forward for (i) the release of large-scale synthetic patient message datasets that are stylistically similar to ground-truth samples and (ii) HIPAA-friendly data generation which requires minimal human de-identification efforts.

Depth $F_1$: Improving Evaluation of Cross-Domain Text Classification by Measuring Semantic Generalizability

Jun 20, 2024

Abstract:Recent evaluations of cross-domain text classification models aim to measure the ability of a model to obtain domain-invariant performance in a target domain given labeled samples in a source domain. The primary strategy for this evaluation relies on assumed differences between source domain samples and target domain samples in benchmark datasets. This evaluation strategy fails to account for the similarity between source and target domains, and may mask when models fail to transfer learning to specific target samples which are highly dissimilar from the source domain. We introduce Depth $F_1$, a novel cross-domain text classification performance metric. Designed to be complementary to existing classification metrics such as $F_1$, Depth $F_1$ measures how well a model performs on target samples which are dissimilar from the source domain. We motivate this metric using standard cross-domain text classification datasets and benchmark several recent cross-domain text classification models, with the goal of enabling in-depth evaluation of the semantic generalizability of cross-domain text classification models.

Do LLMs Find Human Answers To Fact-Driven Questions Perplexing? A Case Study on Reddit

Apr 01, 2024

Abstract:Large language models (LLMs) have been shown to be proficient in correctly answering questions in the context of online discourse. However, the study of using LLMs to model human-like answers to fact-driven social media questions is still under-explored. In this work, we investigate how LLMs model the wide variety of human answers to fact-driven questions posed on several topic-specific Reddit communities, or subreddits. We collect and release a dataset of 409 fact-driven questions and 7,534 diverse, human-rated answers from 15 r/Ask{Topic} communities across 3 categories: profession, social identity, and geographic location. We find that LLMs are considerably better at modeling highly-rated human answers to such questions, as opposed to poorly-rated human answers. We present several directions for future research based on our initial findings.

Mad Libs Are All You Need: Augmenting Cross-Domain Document-Level Event Argument Data

Mar 05, 2024

Abstract:Document-Level Event Argument Extraction (DocEAE) is an extremely difficult information extraction problem -- with significant limitations in low-resource cross-domain settings. To address this problem, we introduce Mad Lib Aug (MLA), a novel generative DocEAE data augmentation framework. Our approach leverages the intuition that Mad Libs, which are categorically masked documents used as a part of a popular game, can be generated and solved by LLMs to produce data for DocEAE. Using MLA, we achieve a 2.6-point average improvement in overall F1 score. Moreover, this approach achieves a 3.9 and 5.2 point average increase in zero and few-shot event roles compared to augmentation-free baselines across all experiments. To better facilitate analysis of cross-domain DocEAE, we additionally introduce a new metric, Role-Depth F1 (RDF1), which uses statistical depth to identify roles in the target domain which are semantic outliers with respect to roles observed in the source domain. Our experiments show that MLA augmentation can boost RDF1 performance by an average of 5.85 points compared to non-augmented datasets.

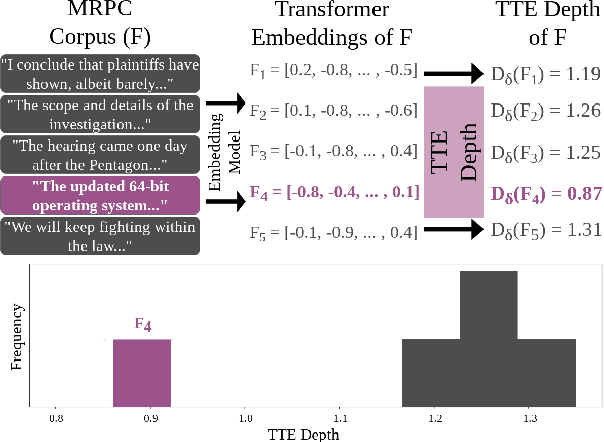

Statistical Depth for Ranking and Characterizing Transformer-Based Text Embeddings

Oct 23, 2023

Abstract:The popularity of transformer-based text embeddings calls for better statistical tools for measuring distributions of such embeddings. One such tool would be a method for ranking texts within a corpus by centrality, i.e. assigning each text a number signifying how representative that text is of the corpus as a whole. However, an intrinsic center-outward ordering of high-dimensional text representations is not trivial. A statistical depth is a function for ranking k-dimensional objects by measuring centrality with respect to some observed k-dimensional distribution. We adopt a statistical depth to measure distributions of transformer-based text embeddings, transformer-based text embedding (TTE) depth, and introduce the practical use of this depth for both modeling and distributional inference in NLP pipelines. We first define TTE depth and an associated rank sum test for determining whether two corpora differ significantly in embedding space. We then use TTE depth for the task of in-context learning prompt selection, showing that this approach reliably improves performance over statistical baseline approaches across six text classification tasks. Finally, we use TTE depth and the associated rank sum test to characterize the distributions of synthesized and human-generated corpora, showing that five recent synthetic data augmentation processes cause a measurable distributional shift away from associated human-generated text.
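
The center-outward ranking described in the abstract above can be sketched as follows. The abstract does not state the depth formula, so this example assumes a simple distance-based depth (2 minus the mean cosine distance to the corpus) purely for illustration; the function names and the toy two-dimensional "embeddings" are hypothetical, and real text embeddings would come from a transformer encoder.

```python
import math


def cosine_distance(u: list[float], v: list[float]) -> float:
    """1 minus cosine similarity; ranges from 0 (same direction) to 2 (opposite)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return 1.0 - dot / (norm_u * norm_v)


def depth(x: list[float], corpus: list[list[float]]) -> float:
    """Assumed depth: 2 minus the mean cosine distance from x to every corpus
    embedding. Higher depth means x is more central (more representative)."""
    mean_dist = sum(cosine_distance(x, y) for y in corpus) / len(corpus)
    return 2.0 - mean_dist


def rank_by_centrality(corpus: list[list[float]]) -> list[int]:
    """Indices of corpus items ordered from most central to most outlying."""
    return sorted(range(len(corpus)),
                  key=lambda i: depth(corpus[i], corpus),
                  reverse=True)


# Toy embeddings: two near-parallel vectors and one orthogonal outlier.
toy_corpus = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0]]
order = rank_by_centrality(toy_corpus)
```

Once every text has a depth score, two corpora can be compared by ranking their depths against a common reference and applying a rank sum test, which is the kind of distributional inference the abstract describes.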

Text Encoders Lack Knowledge: Leveraging Generative LLMs for Domain-Specific Semantic Textual Similarity

Sep 12, 2023

Abstract:Amidst the sharp rise in the evaluation of large language models (LLMs) on various tasks, we find that semantic textual similarity (STS) has been under-explored. In this study, we show that STS can be cast as a text generation problem while maintaining strong performance on multiple STS benchmarks. Additionally, we show generative LLMs significantly outperform existing encoder-based STS models when characterizing the semantic similarity between two texts with complex semantic relationships dependent on world knowledge. We validate this claim by evaluating both generative LLMs and existing encoder-based STS models on three newly collected STS challenge sets which require world knowledge in the domains of Health, Politics, and Sports. All newly collected data is sourced from social media content posted after May 2023 to ensure the performance of closed-source models like ChatGPT cannot be credited to memorization. Our results show that generative LLMs outperform the best encoder-only baselines by an average of 22.3% on STS tasks requiring world knowledge. Our results suggest generative language models with STS-specific prompting strategies achieve state-of-the-art performance in complex, domain-specific STS tasks.

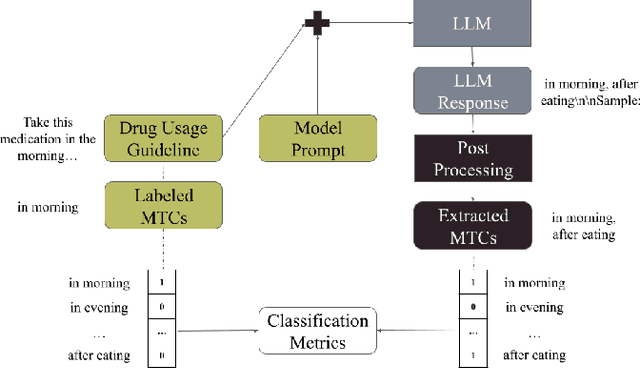

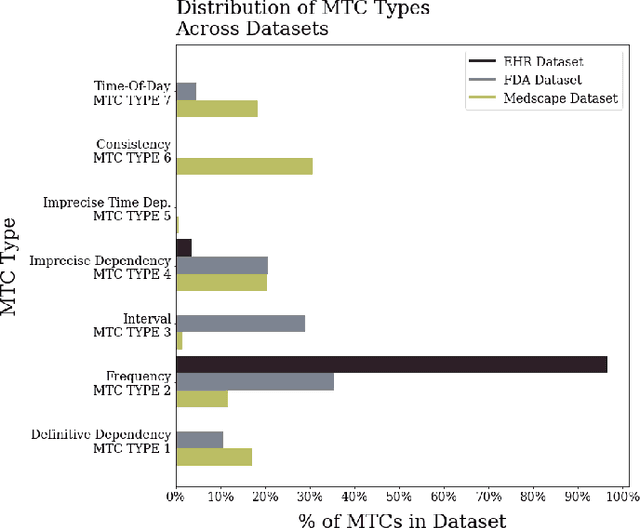

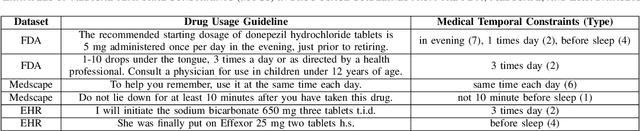

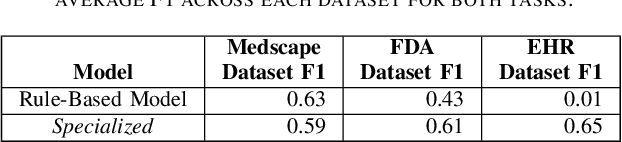

The Scope of In-Context Learning for the Extraction of Medical Temporal Constraints

Mar 16, 2023

Abstract:Medications often impose temporal constraints on everyday patient activity. Violations of such medical temporal constraints (MTCs) lead to a lack of treatment adherence, in addition to poor health outcomes and increased healthcare expenses. These MTCs are found in drug usage guidelines (DUGs) in both patient education materials and clinical texts. Computationally representing MTCs in DUGs will advance patient-centric healthcare applications by helping to define safe patient activity patterns. We define a novel taxonomy of MTCs found in DUGs and develop a novel context-free grammar (CFG) based model to computationally represent MTCs from unstructured DUGs. Additionally, we release three new datasets with a combined total of N = 836 DUGs labeled with normalized MTCs. We develop an in-context learning (ICL) solution for automatically extracting and normalizing MTCs found in DUGs, achieving an average F1 score of 0.62 across all datasets. Finally, we rigorously investigate ICL model performance against a baseline model, across datasets and MTC types, and through in-depth error analysis.

ActSafe: Predicting Violations of Medical Temporal Constraints for Medication Adherence

Jan 17, 2023

Abstract:Prescription medications often impose temporal constraints on regular health behaviors (RHBs) of patients, e.g., eating before taking medication. Violations of such medical temporal constraints (MTCs) can result in adverse effects. Detecting and predicting such violations before they occur can help alert the patient. We formulate the problem of modeling MTCs and develop a proof-of-concept solution, ActSafe, to predict violations of MTCs well ahead of time. ActSafe utilizes a context-free grammar based approach for extracting and mapping MTCs from patient education materials. It also addresses the challenges of accurately predicting RHBs central to MTCs (e.g., medication intake). Our novel behavior prediction model, HERBERT, utilizes a basis vectorization of time series that is generalizable across temporal scale and duration of behaviors, explicitly capturing the dependency between temporally collocated behaviors. Based on evaluation using a real-world RHB dataset collected from 28 patients in uncontrolled environments, HERBERT outperforms baseline models with an average of 51% reduction in root mean square error. Based on an evaluation involving patients with chronic conditions, ActSafe can predict MTC violations a day ahead of time with an average F1 score of 0.86.
