Panagiotis Tsakalides

Distribution Agnostic Symbolic Representations for Time Series Dimensionality Reduction and Online Anomaly Detection

May 20, 2021

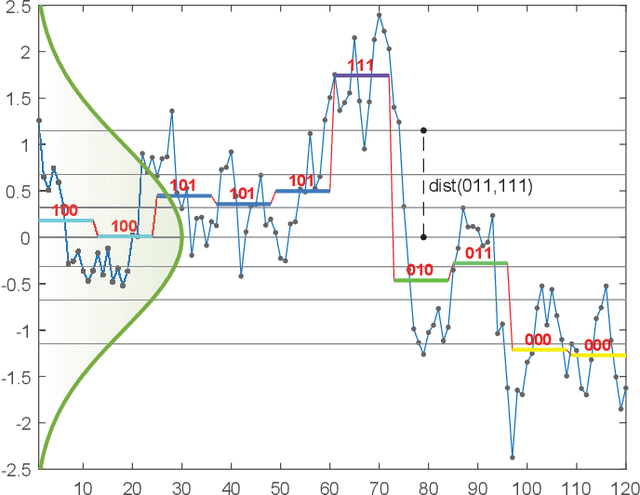

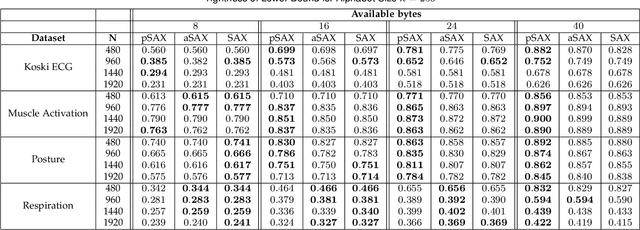

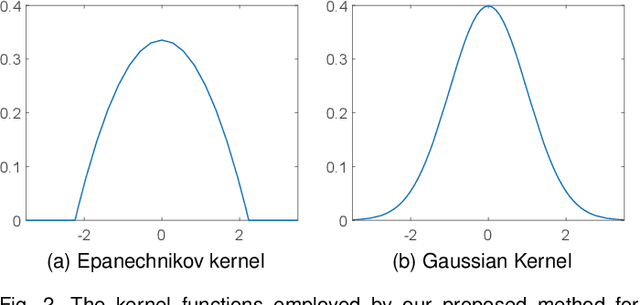

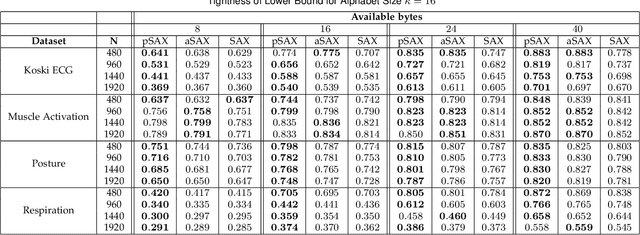

Abstract: Due to the importance of lower-bounding distances and the attractiveness of symbolic representations, the family of symbolic aggregate approximations (SAX) has been used extensively for encoding time series data. However, typical SAX-based methods rely on two restrictive assumptions: Gaussian-distributed data and equiprobable symbols. This paper proposes two novel data-driven SAX-based symbolic representations, distinguished by their discretization steps. The first representation, oriented toward general data compaction and indexing scenarios, combines kernel density estimation and Lloyd-Max quantization to minimize the information loss and mean squared error in the discretization step. The second method, oriented toward high-level mining tasks, employs the Mean-Shift clustering method and is shown to enhance anomaly detection in the lower-dimensional space. In addition, we verify on a theoretical basis a previously observed phenomenon of the intrinsic process, which results in a lower-than-expected variance of the intermediate piecewise aggregate approximation. This phenomenon causes additional information loss but can be avoided with a simple modification. The proposed representations possess all the attractive properties of the conventional SAX method. Furthermore, experimental evaluation on real-world datasets demonstrates their superiority over the traditional SAX and an alternative data-driven SAX variant.
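The discretization idea behind the first representation can be illustrated with a small pure-Python sketch. It is not the authors' implementation: the function names (`znorm`, `paa`, `kde_on_grid`, `lloyd_max`, `symbolize`), the Gaussian kernel with Silverman's rule-of-thumb bandwidth, the uniform evaluation grid, and the skewed synthetic series are all illustrative assumptions. The sketch contrasts classic SAX breakpoints (equiprobable under N(0, 1)) with Lloyd-Max breakpoints fitted to a kernel density estimate of the PAA coefficients, and it reports the standard deviation of the raw PAA output, which falls below 1 for a z-normalized input.

```python
import math
import random
from statistics import NormalDist, mean, pstdev


def znorm(x):
    """Z-normalize a series to zero mean and unit (population) std."""
    mu, sd = mean(x), pstdev(x)
    return [(v - mu) / sd for v in x]


def paa(x, w):
    """Piecewise aggregate approximation: the mean of each of w equal frames.
    Assumes len(x) is divisible by w, for simplicity."""
    n = len(x) // w
    return [mean(x[i * n:(i + 1) * n]) for i in range(w)]


def gaussian_breakpoints(a):
    """Classic SAX breakpoints: a equiprobable regions under N(0, 1)."""
    nd = NormalDist()
    return [nd.inv_cdf(k / a) for k in range(1, a)]


def kde_on_grid(samples, grid, bandwidth):
    """Gaussian kernel density estimate evaluated at every grid point."""
    c = 1.0 / (len(samples) * bandwidth * math.sqrt(2 * math.pi))
    return [c * sum(math.exp(-0.5 * ((g - s) / bandwidth) ** 2) for s in samples)
            for g in grid]


def lloyd_max(grid, density, a, iters=200, tol=1e-9):
    """Lloyd-Max quantizer for a density sampled on a uniform grid.
    Alternates the two optimality conditions: each threshold is the midpoint
    of adjacent levels, and each level is the conditional mean of its cell."""
    lo, hi = grid[0], grid[-1]
    levels = [lo + (k + 0.5) * (hi - lo) / a for k in range(a)]
    for _ in range(iters):
        thresholds = [(levels[k] + levels[k + 1]) / 2 for k in range(a - 1)]
        edges = [-math.inf] + thresholds + [math.inf]
        new_levels = []
        for k in range(a):
            cell = [(g, p) for g, p in zip(grid, density) if edges[k] <= g < edges[k + 1]]
            mass = sum(p for _, p in cell)
            # Keep the old level if a cell happens to contain no probability mass.
            new_levels.append(sum(g * p for g, p in cell) / mass if mass > 0 else levels[k])
        shift = max(abs(u - v) for u, v in zip(levels, new_levels))
        levels = new_levels
        if shift < tol:
            break
    thresholds = [(levels[k] + levels[k + 1]) / 2 for k in range(a - 1)]
    return thresholds, levels


def symbolize(values, breakpoints):
    """Map each value to a letter: 'a' + number of breakpoints it exceeds."""
    return "".join(chr(ord("a") + sum(v > b for b in breakpoints)) for v in values)


# Usage on a hypothetical, clearly non-Gaussian (exponential) series.
random.seed(0)
series = znorm([random.expovariate(1.0) for _ in range(512)])
raw = paa(series, 64)
raw_std = pstdev(raw)  # below 1: averaging within frames shrinks the variance
print("std of raw PAA coefficients:", round(raw_std, 3))

# Assumed compensation (not necessarily the paper's): re-normalize the PAA output.
coeffs = znorm(raw)
lo, hi = min(coeffs) - 1.0, max(coeffs) + 1.0
grid = [lo + i * (hi - lo) / 400 for i in range(401)]
bandwidth = 1.06 * pstdev(coeffs) * len(coeffs) ** -0.2  # Silverman's rule of thumb
bps, _ = lloyd_max(grid, kde_on_grid(coeffs, grid, bandwidth), a=4)

print("SAX (Gaussian):", symbolize(coeffs, gaussian_breakpoints(4)))
print("KDE + Lloyd-Max:", symbolize(coeffs, bps))
```

By the law of total variance, the frame means of a unit-variance series can never have a variance above 1, which is why the raw PAA coefficients no longer match the N(0, 1) assumption behind the classic breakpoints. Re-normalizing them, as done here, is only one plausible compensation; the abstract does not specify the paper's modification.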

Artificial neural networks in action for an automated cell-type classification of biological neural networks

Nov 22, 2019

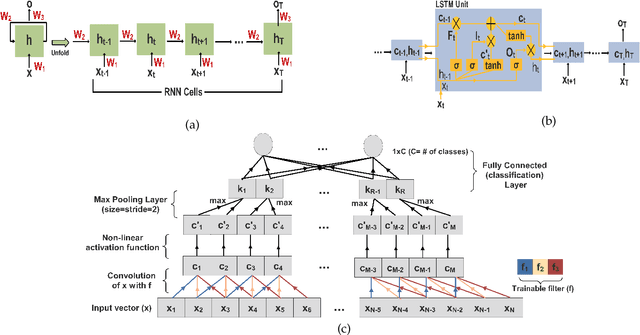

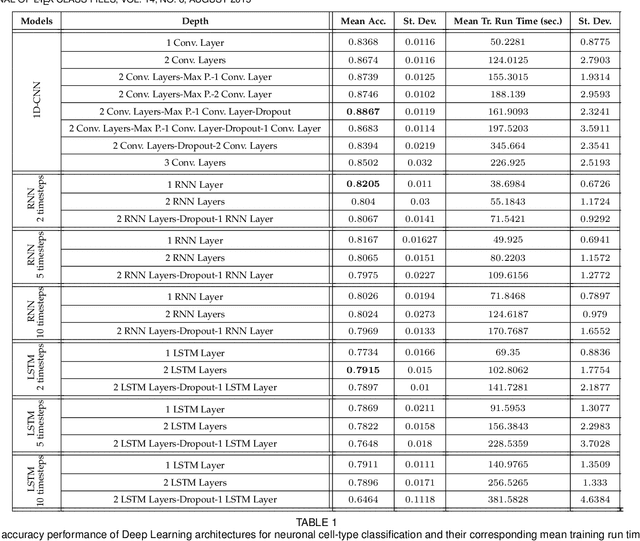

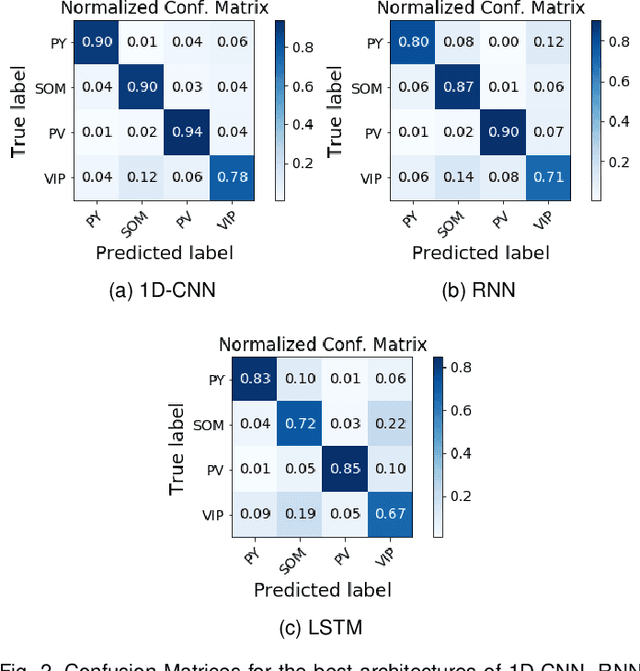

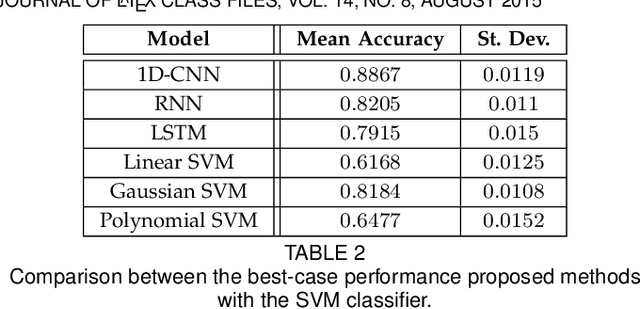

Abstract: In this work, we address the problem of neuronal cell-type classification, employing a real-world dataset of raw neuronal activity measurements obtained with calcium imaging techniques. Although neuronal cell-type classification is a crucial step in understanding the function of neuronal circuits, and a systematic classification of neurons is therefore much needed, it remains a challenge. In recent years, several approaches have been employed for reliable neuronal cell-type recognition, such as immunohistochemical (IHC) analysis and feature extraction algorithms based on several characteristics of neuronal cells. These methods, however, demand considerable human intervention and observation and are time-consuming; moreover, for feature extraction algorithms, it is not obvious which features best define a neuronal cell class. In this work, we examine three different deep learning models aimed at automated neuronal cell-type classification and compare their performance. Experimental analysis demonstrates the efficacy of each of the proposed schemes.

Adversarial dictionary learning for a robust analysis and modelling of spontaneous neuronal activity

Nov 05, 2019

Abstract: The field of neuroscience is experiencing rapid growth in the complexity and quantity of recorded neural activity, giving us unprecedented access to its dynamics in different brain areas. One of the major goals of neuroscience is to find interpretable descriptions of what the brain represents and computes by explaining complex phenomena in simple terms. Considering this task from the perspective of dimensionality reduction provides an entry point into principled mathematical techniques that allow us to discover these representations directly from experimental data, a key step toward developing rich yet comprehensible models of brain function. In this work, we employ two real-world binary datasets describing the spontaneous neuronal activity of two laboratory mice over time, and we aim to obtain an efficient low-dimensional representation of them. We develop an innovative, noise-robust dictionary learning algorithm for identifying patterns with synchronous activity, and we extend it to identify patterns within larger time windows. The classification accuracy achieved by an SVM classifier in discriminating between clean activation patterns and those corrupted by adversarial noise highlights the efficacy of the proposed scheme, and the visualization of the dictionary's distribution demonstrates the multifarious information that we obtain from it.

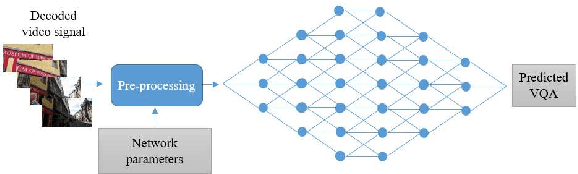

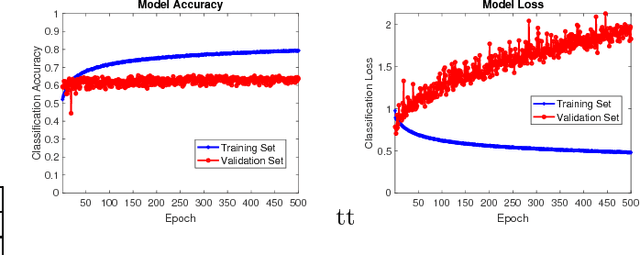

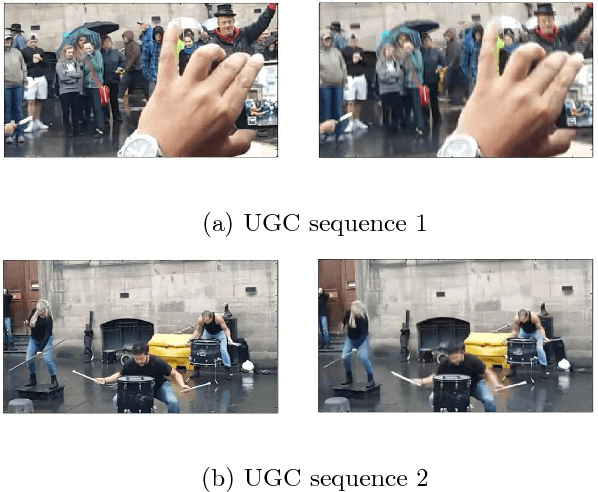

Convolutional Neural Networks for Video Quality Assessment

Sep 26, 2018

Abstract: Video Quality Assessment (VQA) is a very challenging task due to its highly subjective nature. Moreover, many factors influence VQA. Compression of video content, while necessary for minimising transmission and storage requirements, introduces distortions that can have detrimental effects on the perceived quality. Especially with modern video coding standards, it is extremely difficult to model the effects of compression due to the unpredictability of encoding on different content types. Transmission also introduces delays and other distortion types that affect the perceived quality. Therefore, it would be highly beneficial to accurately predict the perceived quality of video to be distributed over modern content distribution platforms, so that specific actions could be undertaken to maximise the Quality of Experience (QoE) of the users. Traditional VQA techniques based on feature extraction and modelling may not be sufficiently accurate. In this paper, a novel Deep Learning (DL) framework based on end-to-end feature learning is introduced for effectively predicting the perceived quality of video delivered through content delivery mechanisms. The proposed framework is based on Convolutional Neural Networks and takes into account compression distortion as well as transmission delays. Training and evaluation of the proposed framework are performed on a user-annotated VQA dataset specifically created for this work. The experiments show that the proposed methods can achieve high accuracy in quality estimation, showcasing the potential of using DL in complex VQA scenarios.
