Pamodya Peiris

Vision-based Xylem Wetness Classification in Stem Water Potential Determination

Sep 24, 2024

Abstract: Water is often overused in irrigation, making its efficient management crucial. Precision agriculture emphasizes tools like stem water potential (SWP) analysis for better determination of plant water status. However, such tools often require labor-intensive in-situ sampling. Automation and machine learning can streamline this process and enhance outcomes. This work focused on automating stem detection and xylem wetness classification using the Scholander Pressure Chamber, a widely used but demanding method for SWP measurement. The aim was to refine stem detection and develop computer-vision-based methods to better classify water emergence at the xylem. To this end, we collected and manually annotated video data, applying vision- and learning-based methods for detection and classification. Additionally, we explored data augmentation and fine-tuned parameters to identify the most effective models. The best-performing models for stem detection and xylem wetness classification were evaluated end-to-end over 20 SWP measurements. Learning-based stem detection via YOLOv8n combined with ResNet50-based classification achieved a Top-1 accuracy of 80.98%, making it the best-performing approach for xylem wetness classification.
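
For readers unfamiliar with the metric, Top-1 accuracy is the fraction of samples whose highest-scoring predicted class matches the annotated label. The sketch below is illustrative only and is not taken from the paper: the function name `top1_accuracy`, the two-class layout, and the score values are assumptions made for the example.

```python
def top1_accuracy(scores, labels):
    """Fraction of samples whose highest-scoring class equals the true label.

    scores: list of per-class score lists, one list per sample.
    labels: list of integer class indices, one per sample.
    """
    if len(scores) != len(labels) or not labels:
        raise ValueError("scores and labels must be non-empty and equal in length")
    correct = 0
    for row, label in zip(scores, labels):
        # Index of the largest score is the Top-1 prediction.
        predicted = max(range(len(row)), key=row.__getitem__)
        if predicted == label:
            correct += 1
    return correct / len(labels)


# Hypothetical scores for three frames over two assumed classes (index 0, index 1).
example_scores = [[0.9, 0.1], [0.3, 0.7], [0.6, 0.4]]
example_labels = [0, 1, 1]
accuracy = top1_accuracy(example_scores, example_labels)
```

Here the first two frames are classified correctly and the third is not, so two of three predictions match.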

On-the-Go Tree Detection and Geometric Traits Estimation with Ground Mobile Robots in Fruit Tree Groves

Apr 03, 2024

Abstract: By-tree information gathering is an essential task in precision agriculture achieved by ground mobile sensors, but it can be time- and labor-intensive. In this paper we present an algorithmic framework to perform real-time and on-the-go detection of trees and key geometric characteristics (namely, width and height) with wheeled mobile robots in the field. Our method is based on the fusion of 2D domain-specific data (normalized difference vegetation index [NDVI] acquired via a red-green-near-infrared [RGN] camera) and 3D LiDAR point clouds, via a customized tree landmark association and parameter estimation algorithm. The proposed system features a multi-modal, entropy-based landmark correspondence approach, integrated into an underlying Kalman filter system to recognize the surrounding trees and jointly estimate their spatial and vegetation-based characteristics. Realistic simulated tests are used to evaluate the behavior of the proposed algorithm in a variety of settings. Physical experiments in agricultural fields help validate our method's efficacy in acquiring accurate by-tree information on-the-go and in real time by employing only onboard computational and sensing resources.
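
As background on the 2D input mentioned above, NDVI is conventionally computed per pixel as (NIR - Red) / (NIR + Red). The sketch below shows that standard formula on plain nested lists. It is not the paper's pipeline: the function name `ndvi`, the list-of-rows image layout, and the reflectance values are assumptions for illustration.

```python
def ndvi(nir, red):
    """Per-pixel NDVI = (NIR - Red) / (NIR + Red).

    nir, red: equally sized 2D lists of non-negative reflectance values.
    Pixels where NIR + Red is zero are assigned 0.0 to avoid division by zero
    (an assumption of this sketch, not a universal convention).
    """
    result = []
    for nir_row, red_row in zip(nir, red):
        row = []
        for n, r in zip(nir_row, red_row):
            total = n + r
            row.append((n - r) / total if total != 0 else 0.0)
        result.append(row)
    return result


# Hypothetical 1x3 image: dense vegetation, bare soil, and an empty pixel.
nir_band = [[0.8, 0.3, 0.0]]
red_band = [[0.2, 0.3, 0.0]]
index_map = ndvi(nir_band, red_band)
```

Values fall in [-1, 1], with higher values generally indicating denser green vegetation, which is what makes the index useful for separating tree canopy from background.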

BabyNet: A Lightweight Network for Infant Reaching Action Recognition in Unconstrained Environments to Support Future Pediatric Rehabilitation Applications

Aug 09, 2022

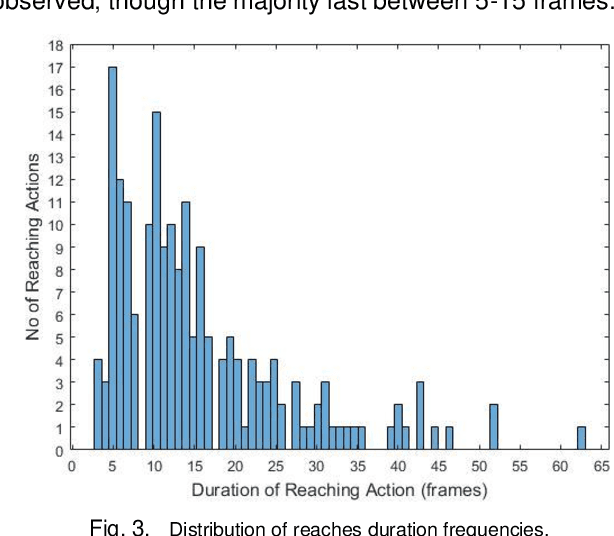

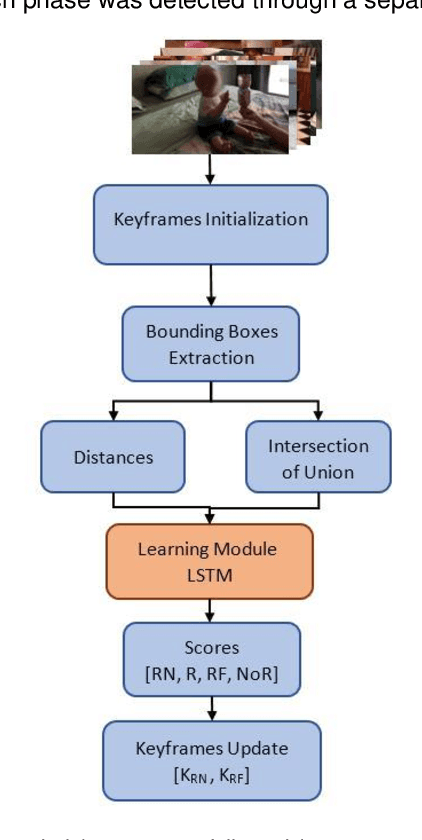

Abstract: Action recognition is an important component for improving the autonomy of physical rehabilitation devices, such as wearable robotic exoskeletons. Existing human action recognition algorithms focus on adult applications rather than pediatric ones. In this paper, we introduce BabyNet, a light-weight (in terms of trainable parameters) network structure to recognize infant reaching action from off-body stationary cameras. We develop an annotated dataset that includes diverse reaches performed while in a sitting posture by different infants in unconstrained environments (e.g., in home settings). Our approach uses the spatial and temporal connection of annotated bounding boxes to interpret onset and offset of reaching, and to detect a complete reaching action. We evaluate the efficiency of our proposed approach and compare its performance against other learning-based network structures in terms of their capability to capture temporal inter-dependencies and their accuracy in detecting reaching onset and offset. Results indicate that BabyNet can attain solid performance in terms of (average) testing accuracy that exceeds that of other larger networks, and can hence serve as a light-weight data-driven framework for video-based infant reaching action recognition.
