Pablo Zinemanas

Leveraging Pre-Trained Autoencoders for Interpretable Prototype Learning of Music Audio

Feb 14, 2024

Abstract:We present PECMAE, an interpretable model for music audio classification based on prototype learning. Our model is based on a previous method, APNet, which jointly learns an autoencoder and a prototypical network. Instead, we propose to decouple both training processes. This enables us to leverage existing self-supervised autoencoders pre-trained on much larger data (EnCodecMAE), providing representations with better generalization. APNet allows prototypes' reconstruction to waveforms for interpretability relying on the nearest training data samples. In contrast, we explore using a diffusion decoder that allows reconstruction without such dependency. We evaluate our method on datasets for music instrument classification (Medley-Solos-DB) and genre recognition (GTZAN and a larger in-house dataset), the latter being a more challenging task not addressed with prototypical networks before. We find that the prototype-based models preserve most of the performance achieved with the autoencoder embeddings, while the sonification of prototypes benefits understanding the behavior of the classifier.

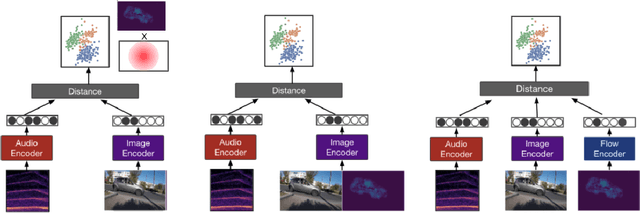

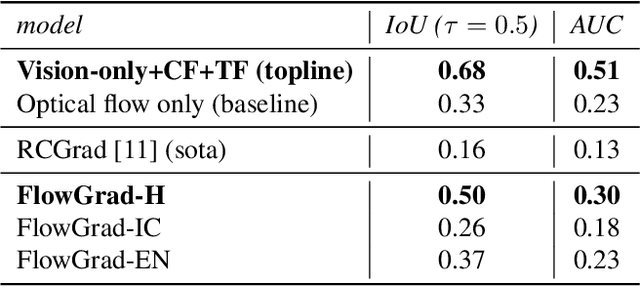

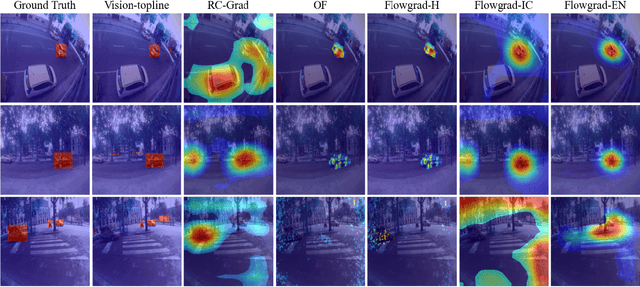

FlowGrad: Using Motion for Visual Sound Source Localization

Nov 15, 2022

Abstract:Most recent work in visual sound source localization relies on semantic audio-visual representations learned in a self-supervised manner, and by design excludes temporal information present in videos. While it proves to be effective for widely used benchmark datasets, the method falls short for challenging scenarios like urban traffic. This work introduces temporal context into the state-of-the-art methods for sound source localization in urban scenes using optical flow as a means to encode motion information. An analysis of the strengths and weaknesses of our methods helps us better understand the problem of visual sound source localization and sheds light on open challenges for audio-visual scene understanding.

Soundata: A Python library for reproducible use of audio datasets

Oct 04, 2021Abstract:Soundata is a Python library for loading and working with audio datasets in a standardized way, removing the need for writing custom loaders in every project, and improving reproducibility by providing tools to validate data against a canonical version. It speeds up research pipelines by allowing users to quickly download a dataset, load it into memory in a standardized and reproducible way, validate that the dataset is complete and correct, and more. Soundata is based and inspired on mirdata and design to complement mirdata by working with environmental sound, bioacoustic and speech datasets, among others. Soundata was created to be easy to use, easy to contribute to, and to increase reproducibility and standardize usage of sound datasets in a flexible way.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge