Péter Kovács

Transformer Encoder and Multi-features Time2Vec for Financial Prediction

Apr 18, 2025

Abstract:Financial prediction is a complex and challenging task in time series analysis and signal processing, requiring models that capture both short-term fluctuations and long-term temporal dependencies. Transformers, built on the attention mechanism, have achieved remarkable success, mostly in natural language processing, and have also influenced the time series community. Their ability to capture both short- and long-range dependencies helps in understanding the financial market and recognizing price patterns, which has led to successful applications of Transformers in stock prediction. However, previous research has predominantly focused on individual features and single predictions, which limits the model's ability to understand broader market trends. In reality, within sectors such as finance and technology, companies belonging to the same industry often exhibit correlated stock price movements. In this paper, we develop a novel neural network architecture by integrating Time2Vec with the Encoder of the Transformer model. Based on the study of different markets, we propose a novel correlation feature selection method. Through comprehensive fine-tuning of multiple hyperparameters, we conduct a comparative analysis of our results against benchmark models. We conclude that our method outperforms other state-of-the-art encoding methods such as positional encoding, and that selecting correlation features enhances the accuracy of predicting multiple stock prices.
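The Time2Vec encoding named above can be illustrated with a short NumPy sketch. It uses the commonly cited Time2Vec form, one linear component omega_0 * tau + phi_0 followed by periodic components sin(omega_i * tau + phi_i); here the frequencies and phases are passed in rather than learned. The function `select_correlated` is a hypothetical stand-in for the paper's correlation feature selection: it simply keeps stocks whose Pearson correlation with a target stock exceeds a threshold, since the abstract does not state the exact criterion.

```python
import numpy as np

def time2vec(tau, omega, phi):
    """Time2Vec embedding of time indices.

    tau:   shape (T,) time steps
    omega: shape (k,) frequencies (learned in a real model, fixed here)
    phi:   shape (k,) phase shifts (learned in a real model, fixed here)
    Returns shape (T, k): column 0 is linear, columns 1..k-1 are periodic.
    """
    z = np.outer(tau, omega) + phi   # affine terms omega_i * tau + phi_i
    out = np.sin(z)                  # periodic components
    out[:, 0] = z[:, 0]              # the first component stays linear
    return out

def select_correlated(prices, target, threshold=0.8):
    """Hypothetical correlation filter: indices of the columns of `prices`
    whose Pearson correlation with column `target` is at least `threshold`."""
    corr = np.corrcoef(prices, rowvar=False)[target]
    return [j for j in range(prices.shape[1]) if corr[j] >= threshold]

if __name__ == "__main__":
    rng = np.random.default_rng(0)
    emb = time2vec(np.arange(5, dtype=float), rng.normal(size=4), rng.normal(size=4))
    print(emb.shape)  # (5, 4)
```

In a Transformer encoder, such an embedding would typically be concatenated with (or added to) the selected price features at each time step in place of positional encoding.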

Hybrid Rendering for Multimodal Autonomous Driving: Merging Neural and Physics-Based Simulation

Mar 12, 2025

Abstract:Neural reconstruction models for autonomous driving simulation have made significant strides in recent years, with dynamic models becoming increasingly prevalent. However, these models are typically limited to handling in-domain objects closely following their original trajectories. We introduce a hybrid approach that combines the strengths of neural reconstruction with physics-based rendering. This method enables the virtual placement of traditional mesh-based dynamic agents at arbitrary locations, adjustments to environmental conditions, and rendering from novel camera viewpoints. Our approach significantly enhances novel view synthesis quality, especially for road surfaces and lane markings, while maintaining interactive frame rates through our novel training method, NeRF2GS. This technique leverages the superior generalization capabilities of NeRF-based methods and the real-time rendering speed of 3D Gaussian Splatting (3DGS). We achieve this by training a customized NeRF model on the original images with depth regularization derived from a noisy LiDAR point cloud, then using it as a teacher model for 3DGS training. This process ensures accurate depth, surface normals, and camera appearance modeling as supervision. With our block-based training parallelization, the method can handle large-scale reconstructions (greater than or equal to 100,000 square meters) and predict segmentation masks, surface normals, and depth maps. During simulation, it supports a rasterization-based rendering backend with depth-based composition and multiple camera models for real-time camera simulation, as well as a ray-traced backend for precise LiDAR simulation.
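Depth-based composition, as used by the rasterization backend, can be illustrated with a per-pixel depth test. The sketch below is a generic z-buffer composite of two RGB-D layers, for example a neural (3DGS) render of the scene and a mesh render of an inserted agent. The array layout, the use of `np.inf` for empty pixels and the function name are assumptions for illustration, not the paper's implementation.

```python
import numpy as np

def depth_composite(rgb_a, depth_a, rgb_b, depth_b):
    """Per-pixel z-test between two RGB-D renders.

    rgb_*:   (H, W, 3) color images
    depth_*: (H, W) depth maps, np.inf where a layer has no surface
    Returns the composited color and depth, keeping the nearer surface.
    """
    nearer_b = depth_b < depth_a                      # True where layer b occludes layer a
    rgb = np.where(nearer_b[..., None], rgb_b, rgb_a)  # broadcast the mask over channels
    depth = np.minimum(depth_a, depth_b)
    return rgb, depth
```

An agent placed behind a scene surface (larger depth) is hidden by it, and pixels the agent does not cover (infinite depth) keep the scene render.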

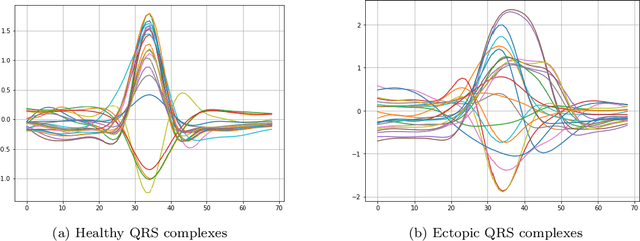

Rational Gaussian wavelets and corresponding model driven neural networks

Feb 03, 2025

Abstract:In this paper we consider the continuous wavelet transform using Gaussian wavelets multiplied by an appropriate rational term. The zeros and poles of this rational modifier act as free parameters and their choice highly influences the shape of the mother wavelet. This allows the proposed construction to approximate signals with complex morphology using only a few wavelet coefficients. We show that the proposed rational Gaussian wavelets are admissible and provide numerical approximations of the wavelet coefficients using variable projection operators. In addition, we show how the proposed variable projection based rational Gaussian wavelet transform can be used in neural networks to obtain a highly interpretable feature learning layer. We demonstrate the effectiveness of the proposed scheme through a biomedical application, namely, the detection of ventricular ectopic beats (VEBs) in real ECG measurements.
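A rational Gaussian wavelet of the kind described can be sketched numerically. The example assumes a modifier r(t) = prod(t - z_k) / prod((t - p_k)(t - conj(p_k))) with real zeros z_k and complex-conjugate pole pairs, so that r is real and finite on the real line, and computes a discretized continuous wavelet transform by direct summation. Placing one zero at 0 and poles at plus and minus i is an illustrative assumption; it gives an odd wavelet and therefore a zero mean, as admissibility requires. The paper's variable projection-based computation of the coefficients is not reproduced here.

```python
import numpy as np

def rational_gaussian(t, zeros, poles):
    """Gaussian exp(-t^2/2) multiplied by a rational modifier.

    zeros: real zeros of the numerator
    poles: complex poles off the real axis; each is paired with its conjugate
           so the modifier stays real and pole-free on the real axis.
    """
    t = np.asarray(t, dtype=float)
    num = np.ones_like(t)
    for z in zeros:
        num *= t - z
    den = np.ones_like(t)
    for p in poles:
        den *= (t - p.real) ** 2 + p.imag ** 2   # equals (t - p)(t - conj(p))
    return num / den * np.exp(-t ** 2 / 2)

def cwt(x, t, scales, shifts, zeros, poles):
    """Discretized CWT: W(a, b) = a^(-1/2) * sum_t x(t) psi((t - b) / a) * dt."""
    dt = t[1] - t[0]
    W = np.empty((len(scales), len(shifts)))
    for i, a in enumerate(scales):
        for j, b in enumerate(shifts):
            psi = rational_gaussian((t - b) / a, zeros, poles)
            W[i, j] = np.sum(x * psi) * dt / np.sqrt(a)
    return W
```

Moving the zeros and poles changes the mother wavelet's shape, which is what lets a few coefficients describe signals with complex morphology.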

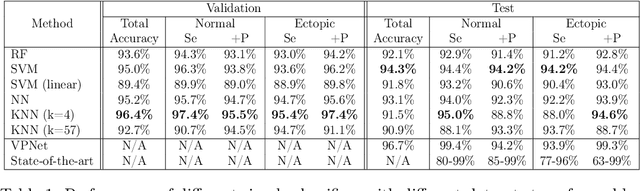

Learning ECG signal features without backpropagation

Jul 04, 2023

Abstract:Representation learning has become a crucial area of research in machine learning, as it aims to discover efficient ways of representing raw data with useful features to increase the effectiveness, scope and applicability of downstream tasks such as classification and prediction. In this paper, we propose a novel method to generate representations for time series-type data. This method relies on ideas from theoretical physics to construct a compact representation in a data-driven way, and it can capture both the underlying structure of the data and task-specific information while still remaining intuitive, interpretable and verifiable. This novel methodology aims to identify linear laws that can effectively capture a shared characteristic among samples belonging to a specific class. These laws are subsequently used to generate a classifier-agnostic representation in a forward manner, which makes them applicable in a generalized setting. We demonstrate the effectiveness of our approach on the task of ECG signal classification, achieving state-of-the-art performance.
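One way to make "linear laws" concrete, under assumptions the abstract does not state, is to embed each series with time delays and look for a unit vector w with X w close to 0 for the embedded matrix X. The eigenvector of X^T X with the smallest eigenvalue is the least-squares choice. The sketch below follows that idea; the embedding dimension, the residual-based feature and the helper names are hypothetical illustrations, not the paper's exact procedure.

```python
import numpy as np

def delay_embed(x, dim):
    """Overlapping windows: row t is [x[t], x[t+1], ..., x[t+dim-1]]."""
    x = np.asarray(x, dtype=float)
    return np.lib.stride_tricks.sliding_window_view(x, dim)

def fit_law(samples, dim):
    """Unit vector w minimizing the summed ||X_s w||^2 over one class's samples."""
    S = sum(delay_embed(s, dim).T @ delay_embed(s, dim) for s in samples)
    _, eigvecs = np.linalg.eigh(S)   # eigenvalues come back in ascending order
    return eigvecs[:, 0]

def law_feature(x, w, dim):
    """Mean squared violation of the law; small values mean x obeys it."""
    return float(np.mean((delay_embed(x, dim) @ w) ** 2))

def transform(x, laws, dim):
    """Hypothetical classifier-agnostic representation: one feature per class law."""
    return np.array([law_feature(x, w, dim) for w in laws])
```

For a pure sinusoid sin(a t), the recovered law is proportional to [1, -2 cos(a), 1], because x[t] - 2 cos(a) x[t+1] + x[t+2] = 0 holds exactly; series from another class violate it and get a larger feature value.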

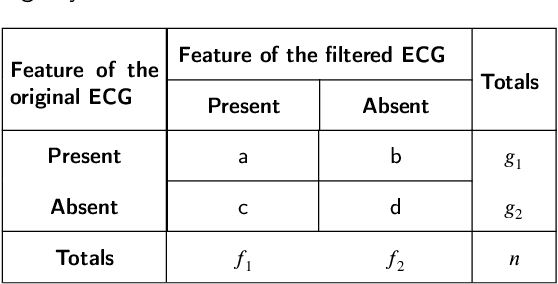

Diagnostic Quality Assessment for Low-Dimensional ECG Representations

Oct 01, 2022

Abstract:There have been several attempts to quantify the diagnostic distortion caused by algorithms that perform low-dimensional electrocardiogram (ECG) representation. However, there is no universally accepted quantitative measure that allows the diagnostic distortion arising from denoising, compression, and ECG beat representation algorithms to be determined. Hence, the main objective of this work was to develop a framework to enable biomedical engineers to efficiently and reliably assess diagnostic distortion resulting from ECG processing algorithms. We propose a semiautomatic framework for quantifying the diagnostic resemblance between original and denoised/reconstructed ECGs. Evaluation of the ECG must be done manually, but is kept simple and does not require medical training. In a case study, we quantified the agreement between raw and reconstructed (denoised) ECG recordings by means of kappa-based statistical tests. The proposed methodology takes into account that the observers may agree by chance alone. Consequently, for the case study, our statistical analysis reports the "true", beyond-chance agreement in contrast to other, less robust measures, such as simple percent agreement calculations. Our framework allows efficient assessment of clinically important diagnostic distortion, a potential side effect of ECG (pre-)processing algorithms. Accurate quantification of a possible diagnostic loss is critical to any subsequent ECG signal analysis, for instance, the detection of ischemic ST episodes in long-term ECG recordings.
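The beyond-chance agreement mentioned above is what kappa statistics measure. As an illustration, Cohen's kappa for two raters corrects the observed agreement p_o by the agreement p_e expected from each rater's label frequencies: kappa = (p_o - p_e) / (1 - p_e). The sketch below computes it for two label sequences, for example findings read from a raw and from a reconstructed ECG; the study's specific kappa-based tests and observer design are not reproduced.

```python
import math
from collections import Counter

def cohens_kappa(labels_a, labels_b):
    """Cohen's kappa for two raters labeling the same items."""
    if len(labels_a) != len(labels_b) or len(labels_a) == 0:
        raise ValueError("need two non-empty label sequences of equal length")
    n = len(labels_a)
    p_o = sum(a == b for a, b in zip(labels_a, labels_b)) / n   # observed agreement
    freq_a, freq_b = Counter(labels_a), Counter(labels_b)
    p_e = sum(freq_a[c] * freq_b[c] for c in freq_a) / n ** 2   # agreement expected by chance
    if p_e == 1.0:
        return math.nan  # both raters used one identical label throughout: kappa is undefined
    return (p_o - p_e) / (1 - p_e)
```

For labels [1, 1, 0, 0] and [1, 0, 0, 0], simple percent agreement is 75%, while kappa is 0.5, because half of the agreement is already expected by chance.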

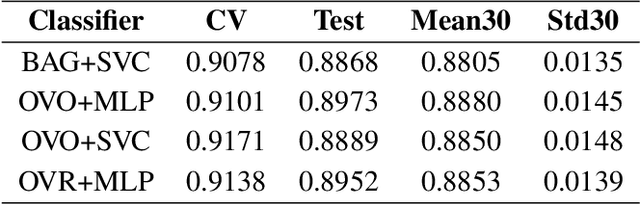

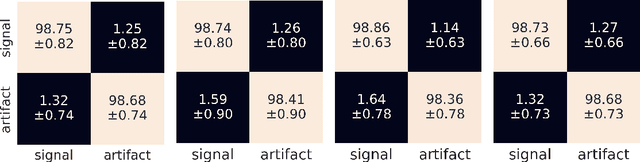

Machine learning aided noise filtration and signal classification for CREDO experiment

Oct 01, 2021

Abstract:The wealth of smartphone data collected by the Cosmic Ray Extremely Distributed Observatory (CREDO) greatly surpasses the capabilities of manual analysis. Therefore, efficient means of rejecting non-cosmic-ray noise and identifying signals attributable to extensive air showers are necessary. To address these problems, we discuss a Convolutional Neural Network-based method of artefact rejection and a complementary method of particle identification based on common statistical classifiers as well as their ensemble extensions. These approaches are based on supervised learning, so we need to provide a representative subset of the CREDO dataset for training and validation. To this end, over 2300 images were chosen and manually labeled by 5 judges. The images were split into spot, track, worm (collectively named signals) and artefact classes. Then, preprocessing consisting of luminance summation of RGB channels (grayscaling) and background removal by adaptive thresholding was performed. For artefact rejection, a binary CNN-based classifier was proposed that distinguishes between artefacts and signals. The classifier was fed with input data in the form of Daubechies wavelet-transformed images. For cosmic ray signal classification, well-known feature-based classifiers were considered. As feature descriptors, we used Zernike moments with an additional feature related to total image luminance. For the problem of artefact rejection, we obtained an accuracy of 99%. For the 4-class signal classification, the best-performing classifiers achieved a recognition rate of 88%.
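The two preprocessing steps, grayscaling by summing the RGB channels and background removal by adaptive thresholding, can be sketched in NumPy. The local-mean rule, the window radius and the offset are assumptions for illustration; the abstract does not specify which adaptive thresholding method was used.

```python
import numpy as np

def grayscale_sum(rgb):
    """Luminance summation: add the R, G and B channels of an (H, W, 3) image."""
    return rgb.astype(np.float64).sum(axis=2)

def local_mean(img, radius):
    """Mean over a (2*radius+1)^2 window around each pixel, via an integral image."""
    k = 2 * radius + 1
    padded = np.pad(img, radius, mode="edge")            # replicate borders
    ii = np.pad(padded.cumsum(axis=0).cumsum(axis=1), ((1, 0), (1, 0)))
    h, w = img.shape
    window_sum = ii[k:k + h, k:k + w] - ii[:h, k:k + w] - ii[k:k + h, :w] + ii[:h, :w]
    return window_sum / (k * k)

def remove_background(img, radius=7, offset=0.0):
    """Zero out pixels that are not brighter than their local mean plus offset."""
    mask = img > local_mean(img, radius) + offset
    return np.where(mask, img, 0.0)
```

Comparing each pixel with its own neighbourhood, rather than with one global threshold, keeps faint hits on sensors whose dark level varies across the frame.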

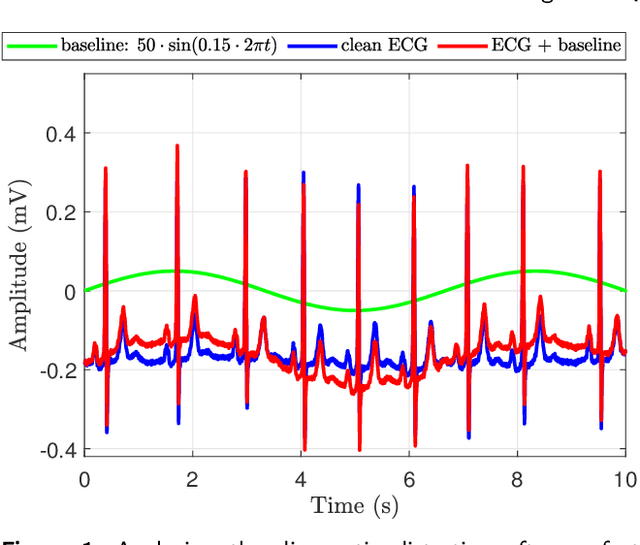

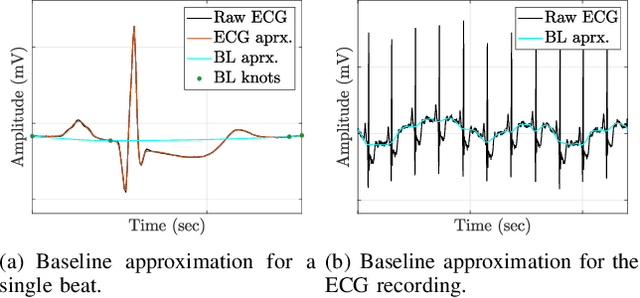

ECG Beat Representation and Delineation by means of Variable Projection

Sep 27, 2021

Abstract:The electrocardiogram (ECG) follows a characteristic shape, which has led to the development of several mathematical models for extracting clinically important information. Our main objective is to resolve limitations of previous approaches, namely, to simultaneously cope with various noise sources, perform exact beat segmentation, and retain diagnostically important morphological information. Methods: We therefore propose a model based on Hermite and sigmoid functions combined with piecewise polynomial interpolation for exact segmentation and low-dimensional representation of individual ECG beat segments. Hermite and sigmoidal functions enable reliable extraction of important ECG waveform information, while the piecewise polynomial interpolation captures noisy signal features such as baseline wander (BLW). To this end, we use variable projection, which allows the separation of linear and nonlinear morphological variations of the corresponding ECG waveforms. The resulting ECG model simultaneously performs BLW cancellation, beat segmentation, and low-dimensional waveform representation. Results: We demonstrate its BLW denoising and segmentation performance in two experiments, using synthetic and real data (PhysioNet QT database). Compared to state-of-the-art algorithms, the experiments showed less diagnostic distortion in the case of denoising and a more robust delineation of the P and T waves. Conclusion: This work suggests a novel concept for ECG beat representation, easily adaptable to other biomedical signals with similar shape characteristics, such as blood pressure and evoked potentials. Significance: Our method is able to capture linear and nonlinear wave shape changes. Therefore, it provides a novel methodology to understand the origin of morphological variations caused, for instance, by respiration, medication, and abnormalities.

* Code, experiments, and demonstrations are available at: https://codeocean.com/capsule/2038948/tree/v1
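The variable projection principle, separating linear from nonlinear parameters, can be sketched with Hermite functions alone. For fixed nonlinear parameters (here a dilation and a translation), the optimal linear coefficients follow from least squares, so only the nonlinear parameters need to be searched. The grid search is a simplification chosen for brevity (a gradient-based optimizer would normally be used), and the sigmoid and piecewise polynomial parts of the model described above are omitted; the function names are illustrative.

```python
import numpy as np

def hermite_functions(t, n):
    """First n orthonormal Hermite functions evaluated at t, shape (len(t), n)."""
    phi = np.zeros((len(t), n))
    phi[:, 0] = np.pi ** -0.25 * np.exp(-t ** 2 / 2)
    if n > 1:
        phi[:, 1] = np.sqrt(2.0) * t * phi[:, 0]
    for k in range(1, n - 1):   # three-term recurrence
        phi[:, k + 1] = np.sqrt(2.0 / (k + 1)) * t * phi[:, k] - np.sqrt(k / (k + 1)) * phi[:, k - 1]
    return phi

def vp_fit(y, t, n, dilations, translations):
    """Grid search over (dilation, translation); linear coefficients by least squares.

    Returns (residual, dilation, translation, coefficients) of the best fit.
    """
    best = None
    for lam in dilations:
        for tau in translations:
            Phi = hermite_functions((t - tau) / lam, n)
            c, *_ = np.linalg.lstsq(Phi, y, rcond=None)
            r = float(np.sum((y - Phi @ c) ** 2))   # projected residual ||(I - P) y||^2
            if best is None or r < best[0]:
                best = (r, lam, tau, c)
    return best
```

The returned coefficients form the low-dimensional representation of the beat segment, while the dilation and translation capture its nonlinear shape variation.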

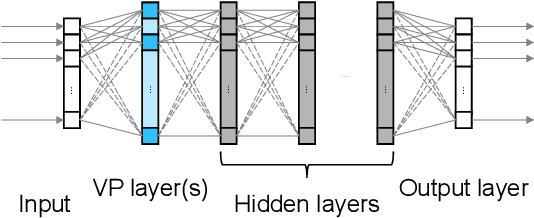

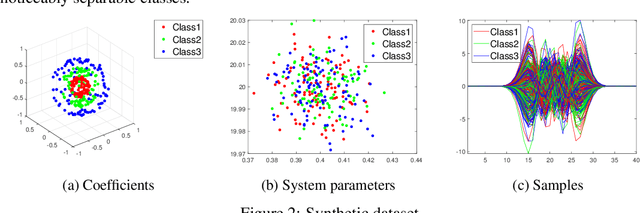

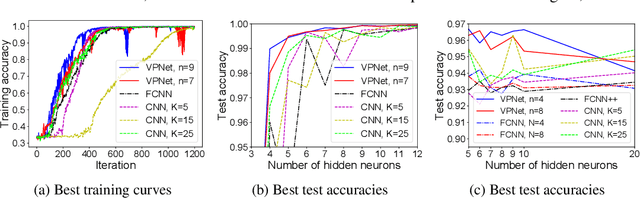

VPNet: Variable Projection Networks

Jun 28, 2020

Abstract:In this paper, we introduce VPNet, a novel model-driven neural network architecture based on variable projections (VP). The application of VP operators in neural networks implies learnable features, interpretable parameters, and compact network structures. This paper discusses the motivation and mathematical background of VPNet as well as the experiments. The concept was evaluated in the context of signal processing. We performed classification tasks on a synthetic dataset and on real electrocardiogram (ECG) signals. Compared to fully-connected and 1D convolutional networks, VPNet offers fast learning and good accuracy at a low computational cost in both training and inference. Given these promising results and advantages, we expect a broader impact in signal processing, including classification, regression, and even clustering problems.
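A VP layer can be read as a parameterized function system whose least-squares coefficients serve as features for the following layers. The forward pass below sketches that structure with a Hermite basis (dilation and translation as the nonlinear parameters) followed by a dense softmax layer. The class name, layer sizes and random weights are hypothetical, and training, which needs gradients with respect to the VP parameters, is not shown.

```python
import numpy as np

def hermite_functions(t, n):
    """First n orthonormal Hermite functions evaluated at t, shape (len(t), n)."""
    phi = np.zeros((len(t), n))
    phi[:, 0] = np.pi ** -0.25 * np.exp(-t ** 2 / 2)
    if n > 1:
        phi[:, 1] = np.sqrt(2.0) * t * phi[:, 0]
    for k in range(1, n - 1):
        phi[:, k + 1] = np.sqrt(2.0 / (k + 1)) * t * phi[:, k] - np.sqrt(k / (k + 1)) * phi[:, k - 1]
    return phi

class VPNetSketch:
    """Hypothetical forward pass: VP layer -> dense layer -> softmax."""

    def __init__(self, n_samples, n_coeffs, n_classes, seed=0):
        rng = np.random.default_rng(seed)
        self.t = np.linspace(-1.0, 1.0, n_samples)
        self.dilation, self.translation = 0.3, 0.0   # nonlinear (learnable) VP parameters
        self.n_coeffs = n_coeffs
        self.W = rng.normal(scale=0.1, size=(n_coeffs, n_classes))
        self.b = np.zeros(n_classes)

    def vp_layer(self, X):
        """Least-squares coefficients of each row of X in the parameterized basis."""
        Phi = hermite_functions((self.t - self.translation) / self.dilation, self.n_coeffs)
        return X @ np.linalg.pinv(Phi).T             # shape (batch, n_coeffs)

    def forward(self, X):
        logits = self.vp_layer(X) @ self.W + self.b
        logits -= logits.max(axis=1, keepdims=True)  # numerical stability
        p = np.exp(logits)
        return p / p.sum(axis=1, keepdims=True)
```

Because the VP layer only has two nonlinear parameters and a handful of coefficients, the network stays compact, and the learned dilation and translation remain directly interpretable.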
