Mario Huemer

Automotive Radar Online Channel Imbalance Estimation via NLMS

Jun 12, 2025

Abstract:Automotive radars are one of the essential enablers of advanced driver assistance systems (ADASs). Continuous monitoring of the functional safety and reliability of automotive radars is a crucial requirement to prevent accidents and increase road safety. One of the most critical aspects to monitor in this context is radar channel imbalances, as they are a key parameter regarding the reliability of the radar. These imbalances may originate from several parameter variations or hardware fatigue, e.g., a solder ball break (SBB), and may affect some radar processing steps, such as the angle of arrival estimation. In this work, a novel method for online estimation of automotive radar channel imbalances is proposed. The proposed method exploits a normalized least mean squares (NLMS) algorithm as a block in the processing chain of the radar to estimate the channel imbalances. The input of this block is the detected targets in the range-Doppler map of the radar on the road, without any prior knowledge of the angular parameters of the targets. This property, in combination with the low computational complexity of the NLMS, makes the proposed method suitable for online channel imbalance estimation in parallel to the normal operation of the radar. Furthermore, it features reduced dependency on specific targets of interest and faster update rates of the channel imbalance estimation compared to the majority of state-of-the-art methods. This improvement is achieved by allowing for multiple targets in the angular spectrum, whereas most other methods are restricted to a single target in the angular spectrum. The performance of the proposed method is validated using various simulation scenarios and is supported by measurement results.
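For readers unfamiliar with the NLMS building block named in the abstract, the following is a minimal sketch of the generic complex-valued NLMS update applied to a toy system identification problem. It is not the channel imbalance estimator proposed in the paper: the function names, the step size `mu`, the regularization `eps`, and the three-tap system `h` are all hypothetical choices made for illustration only.

```python
import random


def nlms_identify(x, d, num_taps, mu=0.5, eps=1e-8):
    """Generic complex NLMS: adapt w so that sum_k w[k] * x[n-k] tracks d[n]."""
    w = [0j] * num_taps
    for n in range(num_taps - 1, len(x)):
        # Regressor vector, most recent input sample first.
        u = [x[n - k] for k in range(num_taps)]
        y = sum(wk * uk for wk, uk in zip(w, u))   # filter output
        e = d[n] - y                               # a priori error
        norm = sum(abs(uk) ** 2 for uk in u) + eps  # input power (regularized)
        # Normalized gradient step: w <- w + mu * e * conj(u) / ||u||^2
        w = [wk + mu * e * uk.conjugate() / norm for wk, uk in zip(w, u)]
    return w


def demo(seed=0, n_samples=2000):
    """Identify a hypothetical 3-tap complex system from noise-free data."""
    rng = random.Random(seed)
    h = [0.8 + 0.1j, -0.3 + 0.2j, 0.05 - 0.1j]  # assumed unknown system
    x = [complex(rng.gauss(0, 1), rng.gauss(0, 1)) for _ in range(n_samples)]
    d = [sum(h[k] * x[n - k] for k in range(len(h)) if n - k >= 0)
         for n in range(n_samples)]
    return h, nlms_identify(x, d, len(h))
```

The normalization by the instantaneous input power is what makes the step size largely independent of the signal level, and each update costs only a few multiplications per tap, which is consistent with the low computational complexity the abstract refers to.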

Estimators and Performance Bounds for Short Periodic Pulses

May 27, 2025

Abstract:In many industrial applications, signals with short periodic pulses, caused by repeated steps in the manufacturing process, are present, and their fundamental frequency or period may be of interest. Fundamental frequency estimation is in many cases performed by describing the periodic signal as a multiharmonic signal and employing the corresponding maximum likelihood estimator. However, since signals with short periodic pulses contain a large number of noise-only samples, the multiharmonic signal model is not optimal to describe them. In this work, two models of short periodic pulses with known and unknown pulse shape are considered. For both models, the corresponding maximum likelihood estimators, Fisher information matrices, and approximate Cramér-Rao lower bounds are presented. Numerical results demonstrate that the proposed estimators outperform the maximum likelihood estimator based on the multiharmonic signal model for low signal-to-noise ratios.

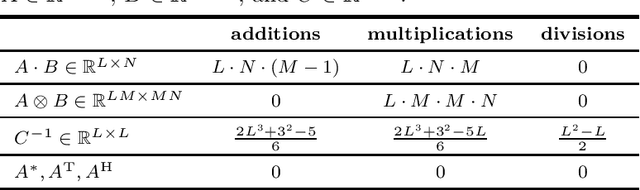

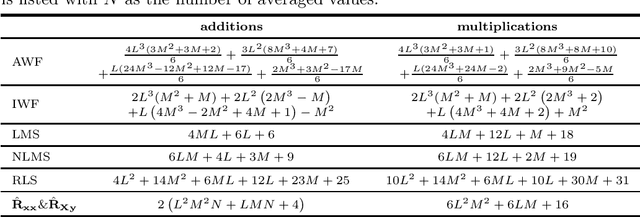

Optimum and Adaptive Complex-Valued Bilinear Filters

May 14, 2025

Abstract:The identification of nonlinear systems is a frequent task in digital signal processing. Such nonlinear systems may be grouped into many sub-classes, whereby numerous nonlinear real-world systems can be approximated as bilinear (BL) models. Therefore, various optimum and adaptive BL filters have been introduced in recent years. Further, in many applications such as communications and radar, complex-valued (CV) BL systems in combination with CV signals may occur. Hence, in this work, we investigate the extension of real-valued (RV) BL filters to CV BL filters. First, we derive CV BL filters by applying two or four RV BL filters, and compare them with respect to their computational complexity and performance. Second, we introduce novel fully CV BL filters, such as the CV BL Wiener filter (WF), the CV BL least squares (LS) filter, the CV BL least mean squares (LMS) filter, the CV BL normalized LMS (NLMS) filter, and the CV BL recursive least squares (RLS) filter. Finally, these filters are applied to identify multiple-input-single-output (MISO) systems and Hammerstein models.

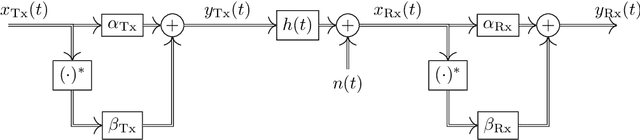

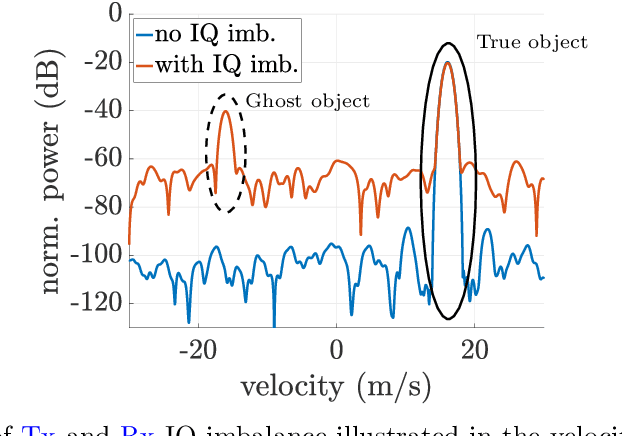

Tx and Rx IQ Imbalance Compensation for JCAS in 5G NR

Apr 14, 2025

Abstract:Besides traditional communications, joint communications and sensing (JCAS) is gaining increasing relevance as a key enabler for next-generation wireless systems. The ability to accurately transmit and receive data is the basis for high-speed communications and precise sensing, where a fundamental requirement is an accurate in-phase (I) and quadrature-phase (Q) modulation. For sensing, imperfections in IQ modulation lead to two critical issues in the range-Doppler map (RDM), namely an increased noise floor and the presence of ghost objects, degrading the accuracy and reliability of the information in the RDM. This paper presents a low-complexity estimation and compensation method to mitigate the IQ imbalance effects. This is achieved by utilizing, amongst others, the leakage signal, which is the direct signal from the transmitter to the receiver path and is typically the strongest signal component in the RDM. The parameters of the IQ imbalance suppression structure are estimated based on a mixed complex-/real-valued bilinear filter approach that considers IQ imbalance in the transmitter and the receiver of the JCAS-capable user equipment (UE). The UE uses a 5G New Radio (NR)-compliant orthogonal frequency-division multiplexing (OFDM) waveform with the system configuration assumed to be predefined from the communication side. To assess the effectiveness of the proposed approach, simulations are conducted, illustrating the performance in the suppression of IQ-imbalance-induced distortions in the RDM.
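The link between IQ imbalance and ghost objects can be illustrated with a widely used frequency-flat receiver model, in which the impaired signal is a weighted sum of the ideal signal and its complex conjugate. The sketch below is only an illustration of that textbook model, not the estimation or compensation structure of the paper; the gain and phase mismatch values, the tone parameters, and all function names are assumptions.

```python
import cmath
import math


def iq_imbalance(x, gain=1.05, phase_deg=3.0):
    """Apply y = k1 * x + k2 * conj(x) (one common frequency-flat convention)."""
    phi = math.radians(phase_deg)
    k1 = (1 + gain * cmath.exp(-1j * phi)) / 2
    k2 = (1 - gain * cmath.exp(1j * phi)) / 2
    return [k1 * s + k2 * s.conjugate() for s in x], k1, k2


def dft_bin(x, k):
    """Single normalized DFT coefficient of x at bin k."""
    n = len(x)
    return sum(s * cmath.exp(-2j * math.pi * k * i / n)
               for i, s in enumerate(x)) / n


N, K0 = 64, 5  # hypothetical block length and tone bin
tone = [cmath.exp(2j * math.pi * K0 * i / N) for i in range(N)]
y, k1, k2 = iq_imbalance(tone)

desired = abs(dft_bin(y, K0))      # component at the true frequency
image = abs(dft_bin(y, N - K0))    # mirrored component (the "ghost")
image_rejection_db = 20 * math.log10(desired / image)
```

In this model the conjugate term places a mirrored copy of every spectral component at the negative frequency. For a single tone the copy appears as an isolated image; for a wideband waveform the mirrored components overlap with the desired ones, which is one way to see why both ghost objects and a raised noise floor can occur.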

Relaxed Multi-Tx DDM Online Calibration

Jun 25, 2024

Abstract:In multiple-input and multiple-output (MIMO) radar systems based on Doppler-division multiplexing (DDM), phase shifters are employed in the transmit paths and require calibration strategies to maintain optimal performance throughout the radar system's life cycle. In this paper, we propose a novel family of DDM codes that enables online calibration of the phase shifters and scales realistically to any number of simultaneously activated transmit (Tx) channels during the calibration frames. To achieve this goal, we employ the previously developed odd-DDM (ODDM) sequences to design calibration DDM codes with reduced inter-Tx leakage. The proposed calibration sequence is applied to an automotive radar data set modulated with erroneous phase shifters.

OFDM-based Waveforms for Joint Sensing and Communications Robust to Frequency Selective IQ Imbalance

Nov 16, 2023

Abstract:Orthogonal frequency-division multiplexing (OFDM) is a promising waveform candidate for future joint sensing and communication systems. It is well known that the OFDM waveform is vulnerable to in-phase and quadrature-phase (IQ) imbalance, which increases the noise floor in a range-Doppler map (RDM). A state-of-the-art method for robustifying the OFDM waveform against IQ imbalance avoids an increased noise floor, but it generates additional ghost objects in the RDM [1]. A consequence of these additional ghost objects is a reduction of the maximum unambiguous range. In this work, a novel OFDM-based waveform robust to IQ imbalance is proposed, which neither increases the noise floor nor reduces the maximum unambiguous range. The latter is achieved by shifting the ghost objects in the RDM to different velocities such that their range variations observed over several consecutive RDMs do not correspond to the observed velocity. This allows tracking algorithms to identify them as ghost objects and eliminate them for the follow-up processing steps. Moreover, we propose complete communication systems for both the proposed waveform as well as for the state-of-the-art waveform, including methods for channel estimation, synchronization, and data estimation that are specifically designed to deal with frequency selective IQ imbalance which occurs in wideband systems. The effectiveness of these communication systems is demonstrated by means of bit error ratio (BER) simulations.

Bi-Linear Homogeneity Enforced Calibration for Pipelined ADCs

Sep 06, 2023

Abstract:Pipelined analog-to-digital converters (ADCs) are key enablers in many state-of-the-art signal processing systems with high sampling rates. In addition to high sampling rates, such systems often demand a high linearity. To meet these challenging linearity requirements, ADC calibration techniques were heavily investigated throughout the past decades. One limitation in ADC calibration is the need for a precisely known test signal. In our previous work, we proposed the homogeneity enforced calibration (HEC) approach, which circumvents this need by consecutively feeding a test signal and a scaled version of it into the ADC. The calibration itself is performed using only the corresponding output samples, such that the test signal can remain unknown. On the downside, the HEC approach requires the option to accurately scale the test signal, impeding an on-chip implementation. In this work, we provide a thorough analysis of the HEC approach, including the effects of an inaccurately scaled test signal. Furthermore, the bi-linear homogeneity enforced calibration (BL-HEC) approach is introduced and suggested to account for an inaccurate scaling and, therefore, to facilitate an on-chip implementation. In addition, a comprehensive stability and convergence analysis of the BL-HEC approach is carried out. Finally, we verify our concept with simulations.
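The homogeneity idea mentioned in the abstract can be illustrated with a toy example: a linear system maps a scaled input to an equally scaled output, so any violation of that property in the output samples reveals nonlinearity without knowledge of the input itself. The sketch below is a loose illustration of this principle under strong assumptions and is not the HEC or BL-HEC algorithm of the paper. The cubic converter model `adc`, the single-parameter corrector, the exactly known scaling factor `alpha`, and all names are hypothetical.

```python
import random


def adc(x, c3=0.01):
    """Hypothetical weakly nonlinear converter: x + c3 * x**3."""
    return x + c3 * x ** 3


def estimate_cubic_correction(y1, y2, alpha):
    """Least-squares theta such that g(y) = y + theta * y**3 is homogeneous.

    Minimizes sum_i (g(y2_i) - alpha * g(y1_i))**2, which is linear in theta.
    Only output samples are used; the test signal itself is never needed.
    """
    a = [v2 - alpha * v1 for v1, v2 in zip(y1, y2)]
    b = [v2 ** 3 - alpha * v1 ** 3 for v1, v2 in zip(y1, y2)]
    return -sum(ai * bi for ai, bi in zip(a, b)) / sum(bi * bi for bi in b)


def homogeneity_error(y1, y2, alpha, theta):
    """Sum of squared homogeneity violations after correction with theta."""
    def g(v):
        return v + theta * v ** 3
    return sum((g(v2) - alpha * g(v1)) ** 2 for v1, v2 in zip(y1, y2))


rng = random.Random(1)
alpha = 0.5                                   # assumed exactly known scaling
x = [rng.uniform(-1, 1) for _ in range(500)]  # test signal, unknown to the estimator
y1 = [adc(v) for v in x]                      # outputs for the test signal
y2 = [adc(alpha * v) for v in x]              # outputs for the scaled test signal
theta = estimate_cubic_correction(y1, y2, alpha)
```

In this toy setting the estimated `theta` comes out close to the negative of the cubic coefficient, so the corrector approximately inverts the nonlinearity. The sketch relies on `alpha` being known exactly, which mirrors the accurate-scaling requirement the abstract identifies as the limitation of HEC.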

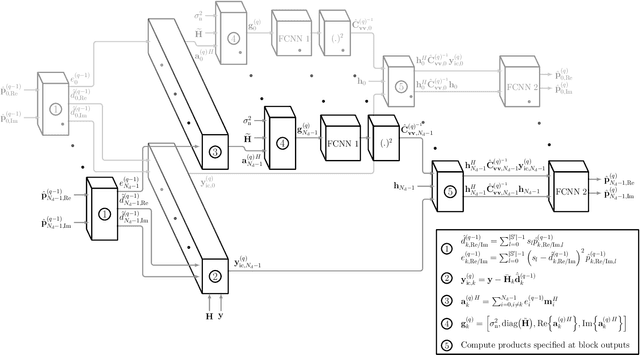

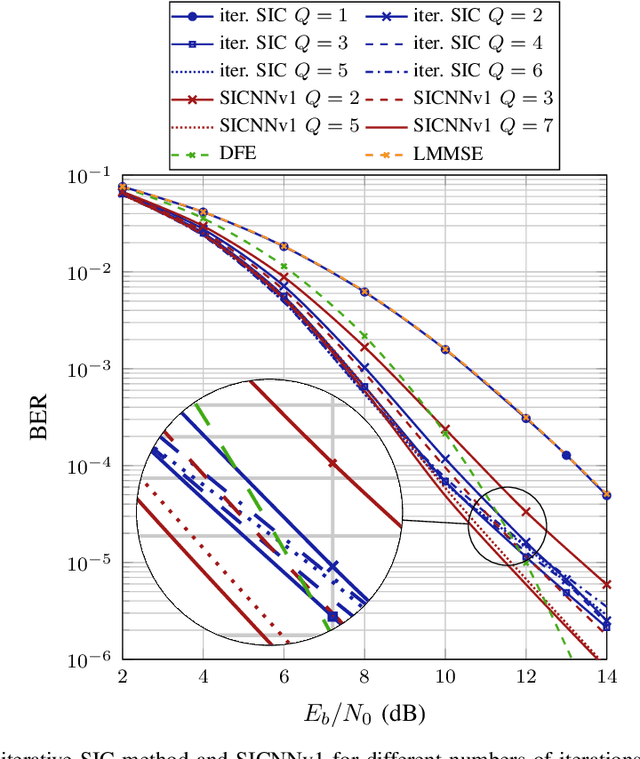

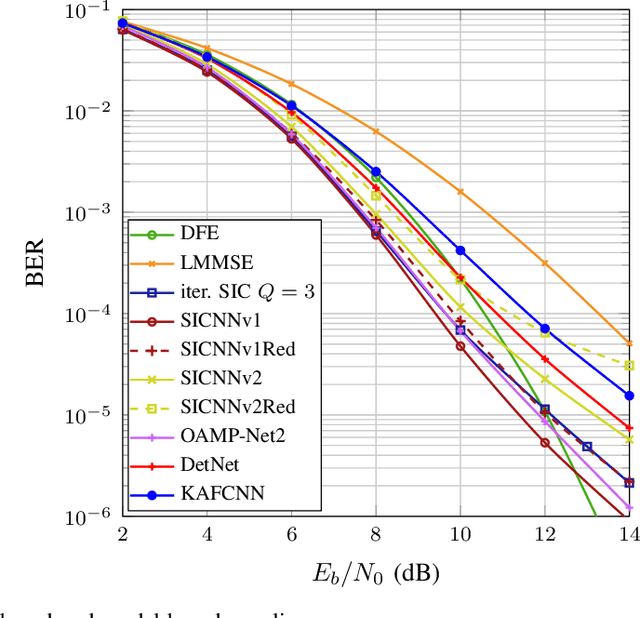

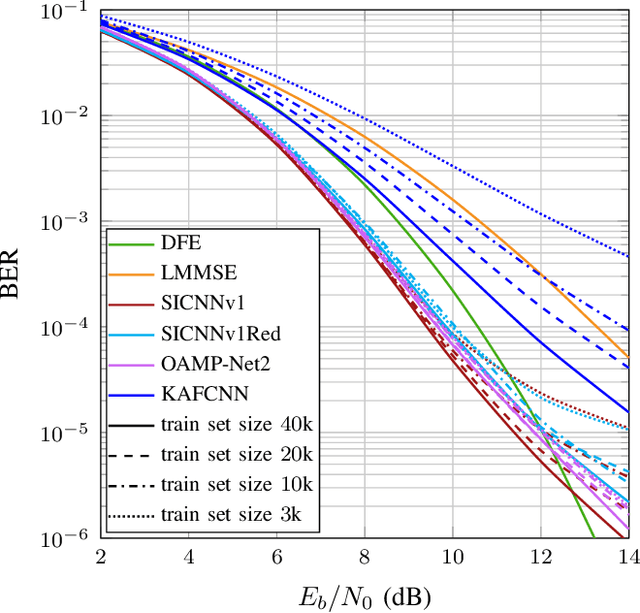

SICNN: Soft Interference Cancellation Inspired Neural Network Equalizers

Aug 24, 2023

Abstract:Equalization is an important task at the receiver side of a digital wireless communication system, which is traditionally conducted with model-based estimation methods. Among the numerous options for model-based equalization, iterative soft interference cancellation (SIC) is a well-performing approach since error propagation caused by hard decision data symbol estimation during the iterative estimation procedure is avoided. However, the model-based method suffers from high computational complexity and performance degradation due to required approximations. In this work, we propose a novel neural network (NN-)based equalization approach, referred to as SICNN, which is designed by deep unfolding of a model-based iterative SIC method, eliminating the main disadvantages of its model-based counterpart. We present different variants of SICNN. SICNNv1 is very similar to the model-based method, and is specifically tailored for single carrier frequency domain equalization systems, which is the communication system we regard in this work. The second variant, SICNNv2, is more universal, and is applicable as an equalizer in any communication system with a block-based data transmission scheme. We highlight the pros and cons of both variants. Moreover, for both SICNNv1 and SICNNv2 we present a version with a highly reduced number of learnable parameters. We compare the achieved bit error ratio performance of the proposed NN-based equalizers with state-of-the-art model-based and NN-based approaches, highlighting the superiority of SICNNv1 over all other methods. Also, we present a thorough complexity analysis of the proposed NN-based equalization approaches, and we investigate the influence of the training set size on the performance of NN-based equalizers.

Analysis and Compensation of Carrier Frequency Offset Impairments in Unique Word OFDM

Aug 10, 2023

Abstract:Unique Word-orthogonal frequency division multiplexing (UW-OFDM) is known to provide various performance benefits over conventional cyclic prefix (CP) based OFDM. Most importantly, UW-OFDM features excellent spectral sidelobe suppression properties and an outstanding bit error ratio (BER) performance. Carrier frequency offset (CFO) induced impairments pose a challenge for OFDM systems of any kind. In this work, we investigate the CFO effects on UW-OFDM and compare them to those on conventional multi-carrier and single-carrier systems. Different CFO compensation approaches with different computational complexity are considered throughout this work and assessed against each other. A mean squared error analysis carried out after data estimation reveals a significantly higher robustness of UW-OFDM over CP-OFDM against CFO effects. Additionally, the conducted BER simulations generally support this conclusion for various scenarios, ranging from uncoded to coded transmission in a frequency selective environment.

Complex-valued Adaptive System Identification via Low-Rank Tensor Decomposition

Jun 28, 2023

Abstract:Machine learning (ML) and tensor-based methods have been of significant interest for the scientific community for the last few decades. In a previous work, we presented a novel tensor-based system identification framework to ease the computational burden of tensor-only architectures while still being able to achieve exceptionally good performance. However, the derived approach can only process real-valued problems and is therefore not directly applicable to a wide range of signal processing and communications problems, which often deal with complex-valued systems. In this work, we therefore derive two new architectures to allow the processing of complex-valued signals, and show that these extensions are able to surpass the trivial, complex-valued extension of the original architecture in terms of performance, while only requiring a slight overhead in computational resources to allow for complex-valued operations.
